Cross-network communication: overlay and macvlan

Environment: three hosts

consul: 192.168.159.147

host1: 192.168.159.141

host2: 192.168.159.142

Pull the consul image

[root@localhost ~]# docker pull progrium/consul

Start the consul container

[root@localhost ~]# docker run -d --restart always -p 8400:8400 -p 8500:8500 -p 8600:53/udp -h node1 progrium/consul -server -bootstrap -ui-dir /ui
3e03c69f1382ea979cc9b32d6a4d2e46f229756064de911f70936a1dd3f1382c

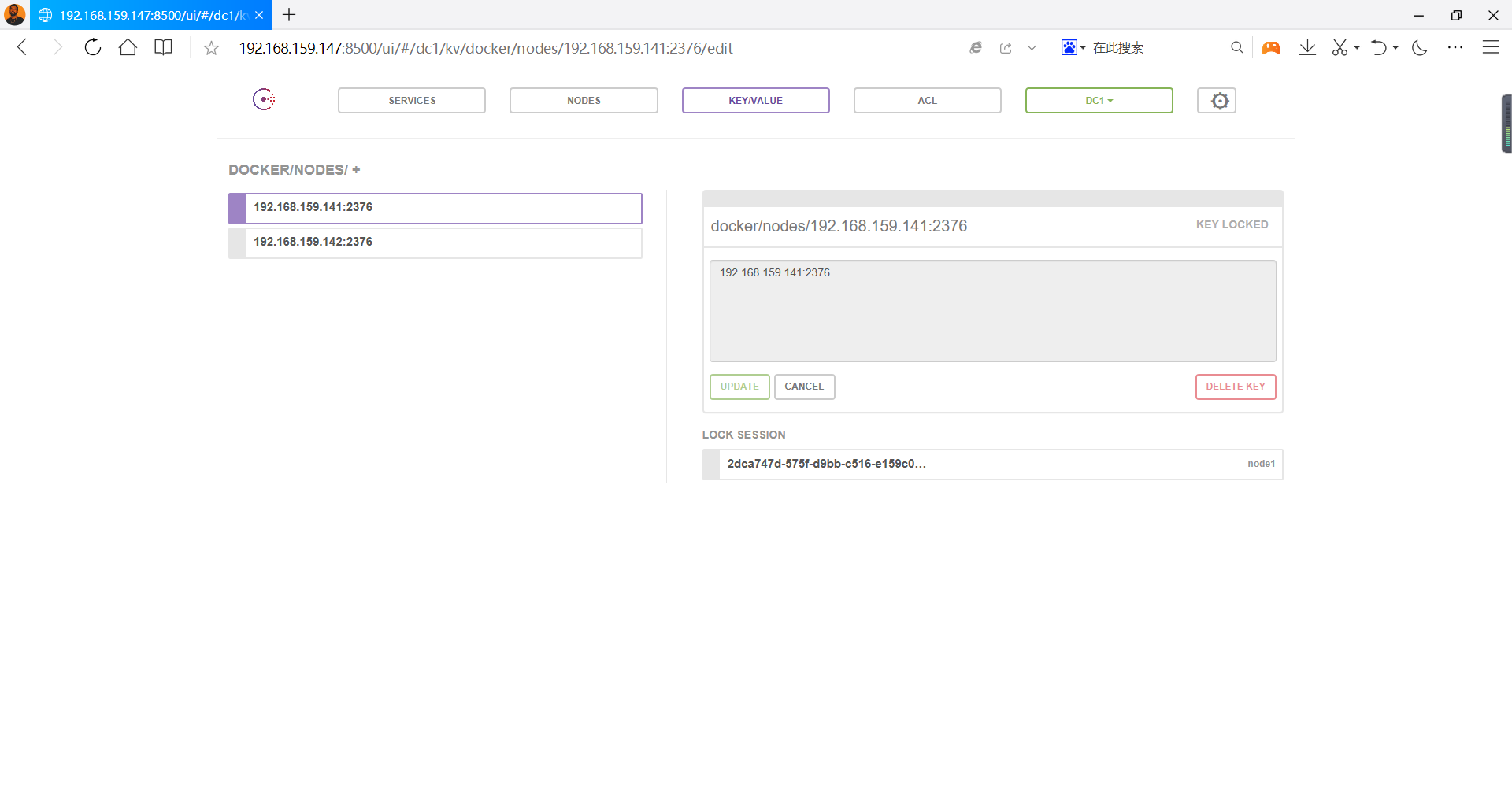

Once the container is up, open 192.168.159.147:8500 in a browser to confirm that consul is running normally.
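The same check can be scripted instead of done in a browser. The sketch below assumes curl is installed and uses Consul's HTTP status endpoint /v1/status/leader; the helper names consul_leader_url and check_consul are hypothetical and not part of the original walkthrough.

```shell
#!/bin/sh
# Consul address taken from the environment list above.
CONSUL_ADDR="${CONSUL_ADDR:-192.168.159.147:8500}"

# Build the URL of Consul's status endpoint, which reports the current leader.
consul_leader_url() {
    printf 'http://%s/v1/status/leader\n' "$1"
}

# Return 0 if Consul answers with a non-empty leader, non-zero otherwise.
# (check_consul is a hypothetical helper name.)
check_consul() {
    leader=$(curl -fsS --max-time 5 "$(consul_leader_url "$1")") || return 1
    # An elected leader is reported as "ip:port"; an empty JSON string means none.
    [ -n "$leader" ] && [ "$leader" != '""' ]
}

# Usage (not run automatically, since it needs network access):
#   check_consul "$CONSUL_ADDR" && echo "consul is up"
```

Nothing is contacted until check_consul is called, so the script can be sourced safely on any of the three hosts.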

Turn off the firewall on all three hosts.
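Disabling the firewall is the simplest choice for a lab. As a hedged alternative, the sketch below only prints the firewall-cmd commands that would open individual ports instead. It assumes the hosts run firewalld, which the original does not state. The port list is also an assumption: 8500/tcp and 2376/tcp come from the commands in this walkthrough, while 7946/tcp, 7946/udp and 4789/udp are the ports Docker's overlay driver is documented to use for node discovery and VXLAN traffic.

```shell
#!/bin/sh
# Ports assumed necessary for this setup (not listed in the original text).
PORTS="8500/tcp 2376/tcp 7946/tcp 7946/udp 4789/udp"

# Print, but do not execute, the firewalld commands that open each port.
print_firewall_cmds() {
    for p in $PORTS; do
        echo "firewall-cmd --permanent --add-port=$p"
    done
    echo "firewall-cmd --reload"
}

print_firewall_cmds
```

Review the printed commands and run them as root only if they match your environment.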

Point the Docker daemon at the consul server (this must be done on both hosts)

[root@localhost ~]# vim /usr/lib/systemd/system/docker.service
[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cluster-store=consul://192.168.159.147:8500 --cluster-advertise=ens33:2376

[root@localhost ~]# systemctl daemon-reload

[root@localhost ~]# systemctl restart docker
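Editing the packaged unit file works, but the change can be lost when the docker package is updated. A minimal sketch of an alternative is a systemd drop-in that overrides ExecStart with the same flags. The helper name write_dropin and the file name cluster-store.conf are hypothetical. Note also that --cluster-store and --cluster-advertise only exist in older Docker Engine releases (they were deprecated in 20.10 and removed in 23.0), so this applies to the same versions as the original steps.

```shell
#!/bin/sh
# Write a systemd drop-in that overrides dockerd's ExecStart.
# Arguments: drop-in directory, consul address (host:port), advertised interface.
# (write_dropin and cluster-store.conf are hypothetical names.)
write_dropin() {
    dropin_dir=$1
    consul=$2
    iface=$3
    mkdir -p "$dropin_dir"
    # The empty ExecStart= line clears the packaged value before setting a new one.
    cat > "$dropin_dir/cluster-store.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cluster-store=consul://$consul --cluster-advertise=$iface:2376
EOF
}

# Usage on each host, followed by a daemon-reload and a docker restart:
#   write_dropin /etc/systemd/system/docker.service.d 192.168.159.147:8500 ens33
```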

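Then go back to the consul web UI and check that both hosts have registered (in this kind of setup they typically appear under KEY/VALUE > docker > nodes).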

Create an overlay network on node 1 or node 2

[root@localhost ~]# docker network create -d overlay ov_net1
03e5eed7d838603bf807a55f958e68df5aef321b94dc3c670ba8edd00719711a
[root@localhost ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
3f8bd4b68439   bridge    bridge    local
207e47629b22   host      host      local
812bc9e86ba9   none      null      local
03e5eed7d838   ov_net1   overlay   global
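Because the network has global scope, it should also show up on the node where it was not created. A small sketch of how that could be checked from a script: the hypothetical helper has_global_overlay reads the table printed by docker network ls on standard input and succeeds only if the named network is listed with driver overlay and scope global.

```shell
#!/bin/sh
# Succeed if the `docker network ls` table on stdin lists network $1
# with DRIVER "overlay" and SCOPE "global". (Hypothetical helper name.)
has_global_overlay() {
    awk -v name="$1" '
        NR > 1 && $2 == name && $3 == "overlay" && $4 == "global" { found = 1 }
        END { exit !found }
    '
}

# Usage on the other node (not run automatically):
#   docker network ls | has_global_overlay ov_net1 && echo "ov_net1 is visible"
```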

On each of the two nodes, start a container attached to the new overlay network in order to verify it (test1 on the first node, test2 on the second)

[root@localhost ~]# docker pull busybox
Using default tag: latest
latest: Pulling from library/busybox
76df9210b28c: Pull complete
Digest: sha256:95cf004f559831017cdf4628aaf1bb30133677be8702a8c5f2994629f637a209
Status: Downloaded newer image for busybox:latest
docker.io/library/busybox:latest
[root@localhost ~]# docker run -dit --name test1 --network ov_net1 busybox
fc37bd52d378d19f9aee51080cebe7812497b213b86dc46d43015c0db99ca579

[root@localhost ~]# docker pull busybox
Using default tag: latest
latest: Pulling from library/busybox
76df9210b28c: Pull complete
Digest: sha256:95cf004f559831017cdf4628aaf1bb30133677be8702a8c5f2994629f637a209
Status: Downloaded newer image for busybox:latest
docker.io/library/busybox:latest
[root@localhost ~]# docker run -dit --name test2 --network ov_net1 busybox
4c9c3f84429e6937a3b3ec3e6ed4da3f11b4d7e0f277fc2cddea655ab900c735

Enable promiscuous mode on the NIC of both nodes

[root@localhost ~]# ifconfig ens33 promisc
[root@localhost ~]# docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
4f63c70326ae   bridge            bridge    local
e1a4327d8e4b   docker_gwbridge   bridge    local
04fedf57105e   host              host      local
a0e2932deecc   none              null      local
03e5eed7d838   ov_net1           overlay   global
[root@localhost ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:9c:47:5f brd ff:ff:ff:ff:ff:ff
    inet 192.168.159.142/24 brd 192.168.159.255 scope global dynamic ens33
       valid_lft 1761sec preferred_lft 1761sec
    inet6 fe80::f1c7:655f:b65e:3a20/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:95:9c:f9:89 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
8: docker_gwbridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:67:62:22:b1 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.1/16 brd 172.18.255.255 scope global docker_gwbridge
       valid_lft forever preferred_lft forever
    inet6 fe80::42:67ff:fe62:22b1/64 scope link
       valid_lft forever preferred_lft forever
10: veth1c8eef5@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP
    link/ether ae:f8:8b:90:4a:07 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::acf8:8bff:fe90:4a07/64 scope link
       valid_lft forever preferred_lft forever

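Verify

[root@localhost ~]# docker exec -it test1 ping test2
PING test2 (10.0.0.3): 56 data bytes

The container name test2 is resolved to an IP address (10.0.0.3), so name resolution works across the overlay network.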
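To make this check scriptable, the resolved address can be pulled out of the first line of ping's output. The sketch below assumes busybox-style output in the form shown above; resolved_ip is a hypothetical helper name.

```shell
#!/bin/sh
# Extract the address in parentheses from the first line of ping output,
# e.g. "PING test2 (10.0.0.3): 56 data bytes". (Hypothetical helper name.)
resolved_ip() {
    sed -n '1s/^PING [^ ]* (\([0-9.]*\)).*/\1/p'
}

# Usage on the node running test1 (not run automatically):
#   docker exec test1 ping -c 1 test2 | resolved_ip
```

An empty result means the name did not resolve or the output format differs from the one assumed here.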

macvlan

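macvlan is part of the Linux kernel. It creates sub-interfaces on top of a physical NIC, and the overall layout resembles a router-on-a-stick setup. In Docker, its main job is to let a single NIC carry multiple MAC addresses, which yields multiple interfaces, each with its own independent IP address.

Docker mainly uses it for communication across hosts and across networks. macvlan performs very well, and unlike other approaches it does not need a bridge network to be created: containers connect straight to the physical network through the Ethernet interface. In principle this is similar to bridging, with all traffic bridged onto the physical NIC.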
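Outside Docker, the same kernel feature can be seen with plain ip commands. The sketch below is illustrative only: the interface names macvlan0 and macvlan1 and the addresses are hypothetical, and by default the commands are printed rather than executed, since running them requires root and changes the host's network configuration.

```shell
#!/bin/sh
# Commands are printed, not executed, unless DRY_RUN=0 (running them needs root).
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Create one macvlan sub-interface on a parent NIC and give it its own IP.
# The kernel gives each sub-interface its own MAC address.
add_macvlan() {
    parent=$1
    name=$2
    cidr=$3
    run ip link add link "$parent" name "$name" type macvlan mode bridge
    run ip addr add "$cidr" dev "$name"
    run ip link set "$name" up
}

# Hypothetical names and addresses, for illustration only.
add_macvlan ens33 macvlan0 172.33.11.50/24
add_macvlan ens33 macvlan1 172.33.11.51/24
```

Two sub-interfaces on the same parent illustrate the point made above: one physical NIC, several MAC addresses, each with its own IP.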

Environment: two hosts

1: node1 192.168.159.141

2: node2 192.168.159.142

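Enable promiscuous mode on the NIC of node1 and node2 (for example with ifconfig ens33 promisc, as in the overlay section), then confirm that the PROMISC flag is set

[root@localhost ~]# ip link show ens33
2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
    link/ether 00:0c:29:1f:9a:c6 brd ff:ff:ff:ff:ff:ff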

Create the macvlan network on node1

[root@localhost ~]# docker network create -d macvlan --subnet 172.33.11.0/24 --gateway 172.33.11.1 -o parent=ens33 mac_net1
cf46b65bdfbcd6251755b4dfc7a4a597e3f6f412b15eae17517d3222b50c2200

Start a container with a static IP on node1, then ping 172.33.11.20 from inside it (this address must belong to a container attached to the same macvlan network on node2)

[root@localhost ~]# docker run -dit --name bbox1 --ip 172.33.11.10 --network mac_net1 busybox
b7e08ecb7ddb3f499910889d724216cab1dd793c17af0bdbc98779716c0459f2

[root@localhost ~]# docker exec -it bbox1 /bin/sh

/ # ping 172.33.11.20
PING 172.33.11.20 (172.33.11.20): 56 data bytes
64 bytes from 172.33.11.20: seq=0 ttl=64 time=1.118 ms
64 bytes from 172.33.11.20: seq=1 ttl=64 time=0.511 ms
64 bytes from 172.33.11.20: seq=2 ttl=64 time=0.521 ms
64 bytes from 172.33.11.20: seq=3 ttl=64 time=0.365 ms
64 bytes from 172.33.11.20: seq=4 ttl=64 time=1.305 ms
64 bytes from 172.33.11.20: seq=5 ttl=64 time=0.368 ms
64 bytes from 172.33.11.20: seq=6 ttl=64 time=0.756 ms
64 bytes from 172.33.11.20: seq=7 ttl=64 time=0.356 ms
64 bytes from 172.33.11.20: seq=8 ttl=64 time=0.302 ms
64 bytes from 172.33.11.20: seq=9 ttl=64 time=0.303 ms
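The transcript shows only the node1 side, yet the ping target 172.33.11.20 has to exist on node2. The following sketch prints the commands that would presumably be needed there, mirroring the node1 steps. The container name bbox2 is an assumption, as are the helper names. Before printing, the script checks that the requested static IP really lies inside the macvlan subnet, since an address outside it is rejected by Docker.

```shell
#!/bin/sh
# Values copied from the node1 commands above.
SUBNET=172.33.11.0/24
GATEWAY=172.33.11.1
PARENT=ens33

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside CIDR block $2.
in_subnet() {
    addr_int=$(ip_to_int "$1")
    net_int=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr_int & mask )) -eq $(( net_int & mask )) ]
}

# Print (not run) the commands for one node; refuse an IP outside SUBNET.
# (node_cmds is a hypothetical helper name.)
node_cmds() {
    c_name=$1
    c_ip=$2
    in_subnet "$c_ip" "$SUBNET" || { echo "error: $c_ip is outside $SUBNET" >&2; return 1; }
    echo "docker network create -d macvlan --subnet $SUBNET --gateway $GATEWAY -o parent=$PARENT mac_net1"
    echo "docker run -dit --name $c_name --ip $c_ip --network mac_net1 busybox"
}

# Assumed node2 container: name bbox2, address matching the ping target.
node_cmds bbox2 172.33.11.20
```

The macvlan network has local scope, so it has to be created separately on each node with the same subnet and parent interface.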