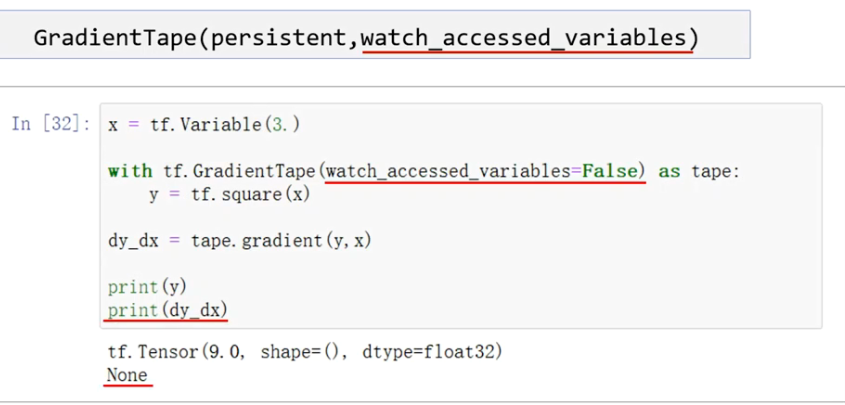

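`watch_accessed_variables` controls whether trainable variables accessed inside the tape's context are watched automatically. If it is `False`, they are not watched, so the gradient returned for them is `None`.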

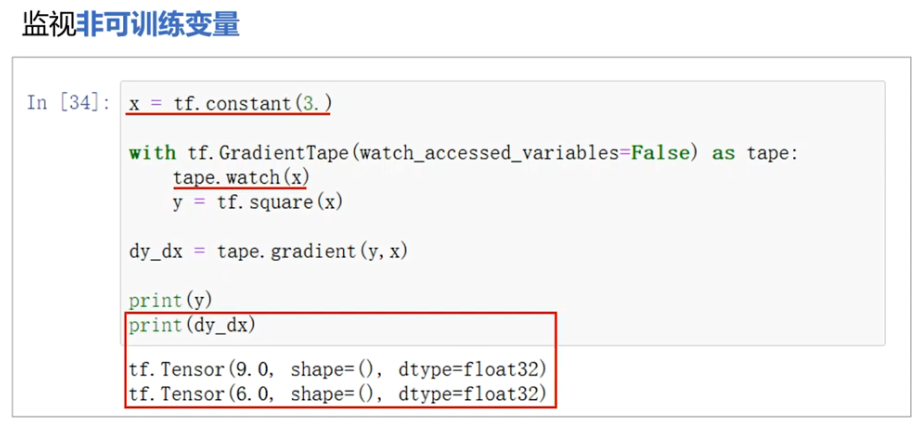
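Here is a minimal sketch of this behaviour, assuming TensorFlow 2.x with eager execution (the default). With `watch_accessed_variables=False`, reading a `tf.Variable` inside the tape does not register it, so `tape.gradient` returns `None`. With the default setting the same computation yields the expected derivative 9x² + 2 = 83 at x = 3.

```python
import tensorflow as tf

x = tf.Variable(3.0)

# Automatic watching disabled: reading x inside the context is not recorded
with tf.GradientTape(watch_accessed_variables=False) as tape:
    y = 3 * x ** 3 + 2 * x

grad_unwatched = tape.gradient(y, x)
print('gradient without watching:', grad_unwatched)  # None

# Default (watch_accessed_variables=True): trainable variables are watched
with tf.GradientTape() as tape:
    y = 3 * x ** 3 + 2 * x

grad_watched = tape.gradient(y, x)
print('gradient with default watching:', grad_watched.numpy())
```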

Adding a watch manually with `tape.watch()`:

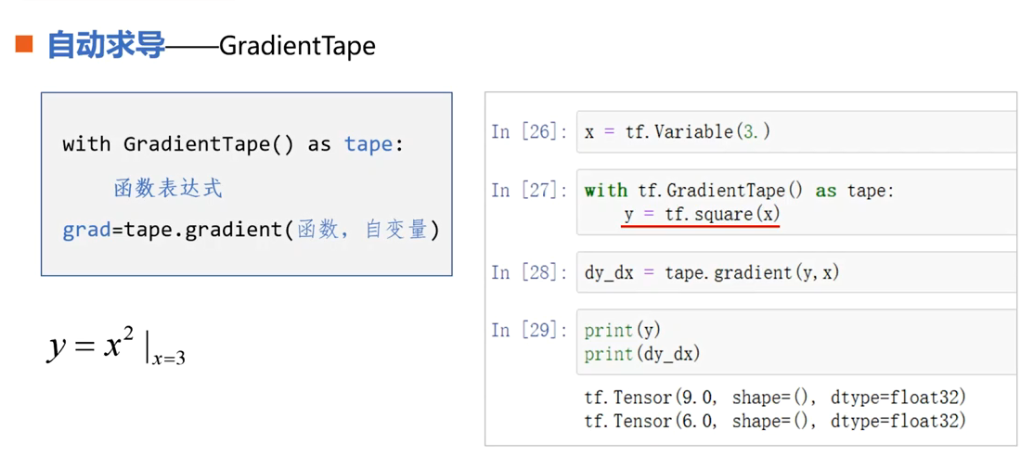

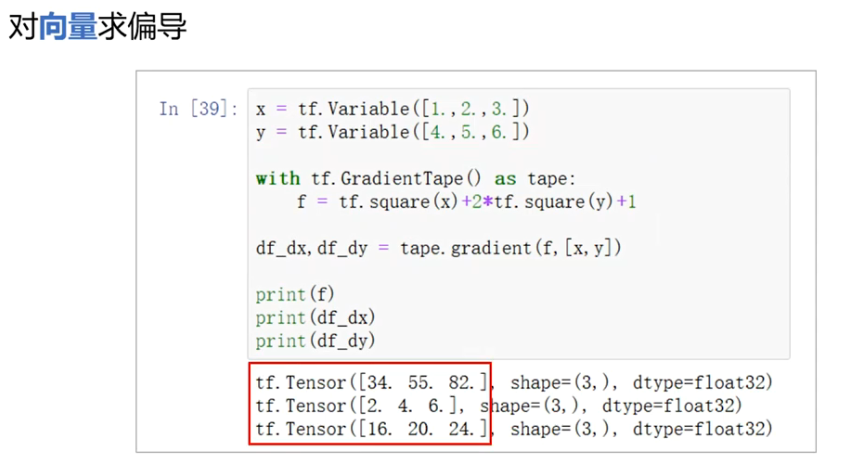
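Here is a minimal sketch, assuming TensorFlow 2.x. A `tf.constant` is never watched automatically, and neither is a variable on a tape created with `watch_accessed_variables=False`. Both must be registered with `tape.watch()` before the operations that use them. Otherwise the gradient is `None`.

```python
import tensorflow as tf

x = tf.constant(3.0)  # constants are not watched automatically

# Without tape.watch, nothing connects y to x on the tape
with tf.GradientTape() as tape:
    y_unwatched = x ** 4
grad_none = tape.gradient(y_unwatched, x)
print('constant, not watched:', grad_none)  # None

# Registering the constant manually makes the gradient available
with tf.GradientTape() as tape:
    tape.watch(x)
    y = x ** 4
dy_dx = tape.gradient(y, x)
print('constant, watched manually:', dy_dx.numpy())  # analytic value: 4 * 3**3 = 108

# A variable on a tape with automatic watching turned off also needs tape.watch
v = tf.Variable(3.0)
with tf.GradientTape(watch_accessed_variables=False) as tape:
    tape.watch(v)
    w = v ** 4
dw_dv = tape.gradient(w, v)
print('variable, watched manually:', dw_dv.numpy())
```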

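```python
import tensorflow as tf

# Signature: tf.GradientTape(persistent=False, watch_accessed_variables=True)

print('############### First derivative of a single-variable function ###############')
x = tf.Variable(3.)
# x = tf.constant(3.)  # a constant is not watched automatically; it needs tape.watch(x)
with tf.GradientTape(persistent=True, watch_accessed_variables=True) as tape:
    # persistent=True lets the tape be reused for several gradient() calls
    # instead of being released after the first one
    # tape.watch(x)  # manually add a watch
    y = 3 * pow(x, 3) + 2 * x
    z = pow(x, 4)
dy_dx = tape.gradient(y, x)
dz_dx = tape.gradient(z, x)
print('y:', y)
print('derivative of y with respect to x:', dy_dx)
print('z:', z)
print('derivative of z with respect to x:', dz_dx)
print()
del tape  # free the resources held by the persistent tape

print('############### Second derivative of a single-variable function ###############')
x = tf.Variable(10.)
with tf.GradientTape() as tape1:
    with tf.GradientTape() as tape2:
        y = pow(x, 2)
    # computed inside tape1's context so that tape1 records it
    y2 = tape2.gradient(y, x)
y3 = tape1.gradient(y2, x)
print('second derivative of x**2 at x=10:', y3)
print()

print('############### Partial derivatives of a multivariable function ###############')
x = tf.Variable(4.)
y = tf.Variable(2.)
with tf.GradientTape(persistent=True) as tape:
    z = pow(x, 2) + x * y
# dz_dx = tape.gradient(z, x)
# dz_dy = tape.gradient(z, y)
dz_dx, dz_dy = tape.gradient(z, [x, y])
result = tape.gradient(z, [x, y])  # second call on the same tape: requires persistent=True
print('z:', z)
print('partial derivative of z with respect to x:', dz_dx)
print('partial derivative of z with respect to y:', dz_dy)
print('result:', result)
print()
del tape

print('############### Derivative with respect to a vector ###############')
x = tf.Variable([[1., 2., 3.]])
with tf.GradientTape() as tape:
    y = 3 * pow(x, 2)
dy_dx = tape.gradient(y, x)
print('vector derivative dy_dx:', dy_dx)
```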
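The printed results can be checked against hand-derived values:

* d/dx(3x³ + 2x) = 9x² + 2 = 83 at x = 3.
* d/dx(x⁴) = 4x³ = 108 at x = 3.
* d²/dx²(x²) = 2.
* For z = x² + xy at (4, 2), ∂z/∂x = 2x + y = 10 and ∂z/∂y = x = 4.
* For y = 3x² applied elementwise, dy/dx = 6x = [[6, 12, 18]].

The sketch below assumes TensorFlow 2.x and NumPy and recomputes each case. It also shows that for a non-scalar target, `tape.gradient` returns the gradient of the sum of the target's elements, which is why the vector case comes out elementwise. The variable names are illustrative only.

```python
import numpy as np
import tensorflow as tf

# First derivatives of single-variable functions at x = 3
x = tf.Variable(3.0)
with tf.GradientTape(persistent=True) as tape:
    y = 3 * x ** 3 + 2 * x
    z = x ** 4
dy_dx = tape.gradient(y, x)
dz_dx = tape.gradient(z, x)
del tape  # release the persistent tape

# Second derivative of x**2 at x = 10 via nested tapes
x2 = tf.Variable(10.0)
with tf.GradientTape() as outer:
    with tf.GradientTape() as inner:
        f = x2 ** 2
    df = inner.gradient(f, x2)  # computed inside `outer`, so it is recorded
d2f = outer.gradient(df, x2)

# Partial derivatives of g = a**2 + a*b at (a, b) = (4, 2)
a = tf.Variable(4.0)
b = tf.Variable(2.0)
with tf.GradientTape() as tape:
    g = a ** 2 + a * b
dg_da, dg_db = tape.gradient(g, [a, b])

# Non-scalar target: the gradient of h equals the gradient of sum(h)
v = tf.Variable([[1.0, 2.0, 3.0]])
with tf.GradientTape(persistent=True) as tape:
    h = 3 * v ** 2
    h_sum = tf.reduce_sum(h)
dh_dv = tape.gradient(h, v)
dhsum_dv = tape.gradient(h_sum, v)
del tape

print('dy/dx:', float(dy_dx), 'dz/dx:', float(dz_dx))
print('second derivative:', float(d2f))
print('partials:', float(dg_da), float(dg_db))
print('vector gradient:', dh_dv.numpy())
print('same as gradient of the sum:', np.allclose(dh_dv.numpy(), dhsum_dv.numpy()))
```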