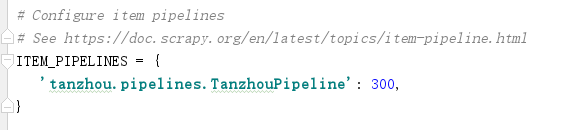

1. First open settings.py and enable `ITEM_PIPELINES` (just uncomment it).
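For reference, the uncommented setting usually looks like the sketch below. The module path `tanzhou.pipelines.TanzhouPipeline` is an assumption inferred from the pipeline class name used later in this post, so substitute your own project's package name. The number sets the pipeline's order: lower values run first.

```python
# settings.py
ITEM_PIPELINES = {
    # 'tanzhou' is an assumed project package name; adjust it to your project
    'tanzhou.pipelines.TanzhouPipeline': 300,
}
```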

2. Spider code

```python
# -*- coding: utf-8 -*-
import scrapy
import json


class TzcSpider(scrapy.Spider):
    # Spider name; must be unique within the project
    name = 'tzc'
    # Start URL
    start_urls = ['https://hr.tencent.com/position.php?keywords=python&tid=0&lid=2268']

    # Called with the response of every URL that has been fetched
    def parse(self, response):
        tr = response.xpath(
            '//table[@class="tablelist"]/tr[@class = "even"]|//table[@class="tablelist"]/tr[@class = "odd"]')
        if tr:
            for i in tr:
                data = {
                    "jobName": i.xpath('./td[1]/a/text()').extract_first(),
                    "jobType": i.xpath('./td[2]/text()').extract_first(),
                    "Num": i.xpath('./td[3]/text()').extract_first(),
                    "Place": i.xpath('./td[4]/text()').extract_first(),
                    "Time": i.xpath('./td[5]/text()').extract_first()
                }
                # Optionally turn the data into a JSON string for storage
                # data = json.dumps(data, ensure_ascii=False)
                yield data
            # Find the "next page" link
            url_next = response.xpath('//a[@id = "next"]/@href').extract_first()
            # Skip the request if no link was found; otherwise the URL would end in "None"
            if url_next:
                # The extracted href is a relative path, so prepend the domain
                url_next = 'https://hr.tencent.com/{}'.format(url_next)
                # Yield the next page's request; Scrapy calls parse() on it again
                yield scrapy.Request(url_next)
```
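The next-page step joins the extracted `href` onto the site's domain by string formatting. Below is a small sketch of the same step using the standard library's `urllib.parse.urljoin`, which also copes with hrefs that start with `/` or are already absolute. The helper name `next_page_url`, the sample hrefs, and the treatment of `javascript:` placeholders are all assumptions made for illustration.

```python
from urllib.parse import urljoin

BASE_URL = 'https://hr.tencent.com/'


def next_page_url(href):
    """Return an absolute URL for a next-page href, or None if there is none."""
    # Assumption: a missing href or a "javascript:" placeholder means no next page
    if not href or href.startswith('javascript:'):
        return None
    return urljoin(BASE_URL, href)


# Hypothetical hrefs, only to show the behaviour
print(next_page_url('position.php?keywords=python&start=10'))
print(next_page_url('/position.php?keywords=python&start=10'))
print(next_page_url(None))
```

Inside a spider, `response.urljoin(href)` does the same job, using the URL of the current response as the base.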

3. pipelines.py code

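```python
import json


class TanzhouPipeline(object):
    def process_item(self, item, spider):
        # Serialize the item to JSON, keeping non-ASCII characters readable
        line = json.dumps(item, ensure_ascii=False)
        self.f.write(line)
        # One JSON object per line
        self.f.write('\n')
        # Return the item itself so any later pipeline still receives it
        return item

    # Runs once when the spider starts
    def open_spider(self, spider):
        # Open the output file as UTF-8, since ensure_ascii=False writes Chinese text as-is
        self.f = open('info3.json', 'w', encoding='utf-8')

    # Runs once when the spider closes
    def close_spider(self, spider):
        # Close the file
        self.f.close()
```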
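To see the order in which Scrapy drives these three hooks, the sketch below calls them by hand, without running a crawl. The class `JsonLinesPipeline`, its `path` parameter, and the sample items are hypothetical stand-ins. The sketch writes one JSON object per line so the file can be read back line by line.

```python
import json
import os
import tempfile


class JsonLinesPipeline:
    """Hypothetical stand-in with the same three hooks Scrapy calls."""

    def __init__(self, path):
        self.path = path

    def open_spider(self, spider):
        # Called once, before any item arrives
        self.f = open(self.path, 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # Called once per item yielded by the spider
        self.f.write(json.dumps(item, ensure_ascii=False) + '\n')
        return item

    def close_spider(self, spider):
        # Called once, after the last item
        self.f.close()


# Made-up sample items, written to a temporary directory
path = os.path.join(tempfile.mkdtemp(), 'info3.json')
items = [
    {'jobName': 'Python工程师', 'Place': '深圳'},
    {'jobName': 'Backend Developer', 'Place': 'Shenzhen'},
]

pipeline = JsonLinesPipeline(path)
pipeline.open_spider(spider=None)
for item in items:
    pipeline.process_item(item, spider=None)
pipeline.close_spider(spider=None)

# Read the file back: each line parses as one JSON object
with open(path, encoding='utf-8') as f:
    loaded = [json.loads(line) for line in f]
print(loaded == items)
```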

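4. Addendum 2: to keep items from being malformed, you can constrain them with items.py. This also requires changing the item code in the spider, and changing the pipeline code so that the item is first converted with dict(item) and then serialized to JSON.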

In pipelines.py, convert the item to a dict with dict() first, then serialize it to JSON:

```python
item = json.dumps(dict(item), ensure_ascii=False)
```
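The `dict()` call is needed because `json.dumps` only knows how to serialize real dicts, not arbitrary mapping-like objects. The sketch below shows this with `FakeItem`, a hypothetical stand-in built on `collections.abc.MutableMapping` so that it runs without Scrapy. It is not the real `scrapy.Item` class, only an object that behaves like a mapping without being a `dict`.

```python
import json
from collections.abc import MutableMapping


class FakeItem(MutableMapping):
    """Hypothetical stand-in for an Item: mapping-like, but not a dict."""

    def __init__(self, **fields):
        self._values = dict(fields)

    def __getitem__(self, key):
        return self._values[key]

    def __setitem__(self, key, value):
        self._values[key] = value

    def __delitem__(self, key):
        del self._values[key]

    def __iter__(self):
        return iter(self._values)

    def __len__(self):
        return len(self._values)


item = FakeItem(jobName='Python工程师', Num='2')

# Serializing the mapping-like object directly fails with TypeError
try:
    json.dumps(item, ensure_ascii=False)
    direct_ok = True
except TypeError:
    direct_ok = False

# Converting to a plain dict first works
as_json = json.dumps(dict(item), ensure_ascii=False)
print(direct_ok)
print(as_json)
```

Because `dict()` on a plain dict simply makes a copy, a pipeline that always calls `dict(item)` can accept both plain dicts and Item objects.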

items.py code:

```python
import scrapy


class TanzhouItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    jobName = scrapy.Field()
    jobType = scrapy.Field()
    Num = scrapy.Field()
    Place = scrapy.Field()
    Time = scrapy.Field()
```

Spider code:

```python
import scrapy
import json
from ..items import TanzhouItem


class TzcSpider(scrapy.Spider):
    # Spider name; must be unique within the project
    name = 'tzc'
    # Start URL
    start_urls = ['https://hr.tencent.com/position.php?keywords=python&tid=0&lid=2268']

    # Called with the response of every URL that has been fetched
    def parse(self, response):
        tr = response.xpath(
            '//table[@class="tablelist"]/tr[@class = "even"]|//table[@class="tablelist"]/tr[@class = "odd"]')
        if tr:
            for i in tr:
                # First approach: a plain dict (the second approach follows below)
                data = {
                    "jobName": i.xpath('./td[1]/a/text()').extract_first(),
                    "jobType": i.xpath('./td[2]/text()').extract_first(),
                    "Num": i.xpath('./td[3]/text()').extract_first(),
                    "Place": i.xpath('./td[4]/text()').extract_first(),
                    "Time": i.xpath('./td[5]/text()').extract_first()
                }
                # Second approach: an Item constrained by items.py
                # data = TanzhouItem()
                # data["jobName"] = i.xpath('./td[1]/a/text()').extract_first()
                # data["jobType"] = i.xpath('./td[2]/text()').extract_first()
                # data["Num"] = i.xpath('./td[3]/text()').extract_first()
                # data["Place"] = i.xpath('./td[4]/text()').extract_first()
                # data["Time"] = i.xpath('./td[5]/text()').extract_first()

                # Optionally turn the data into a JSON string for storage
                # data = json.dumps(data, ensure_ascii=False)
                yield data
            # Find the "next page" link
            url_next = response.xpath('//a[@id = "next"]/@href').extract_first()
            # Skip the request if no link was found; otherwise the URL would end in "None"
            if url_next:
                # The extracted href is a relative path, so prepend the domain
                url_next = 'https://hr.tencent.com/{}'.format(url_next)
                # Yield the next page's request; Scrapy calls parse() on it again
                yield scrapy.Request(url_next)
```