I want to get some sleep, so I'll keep this brief.

It's just a record anyway.

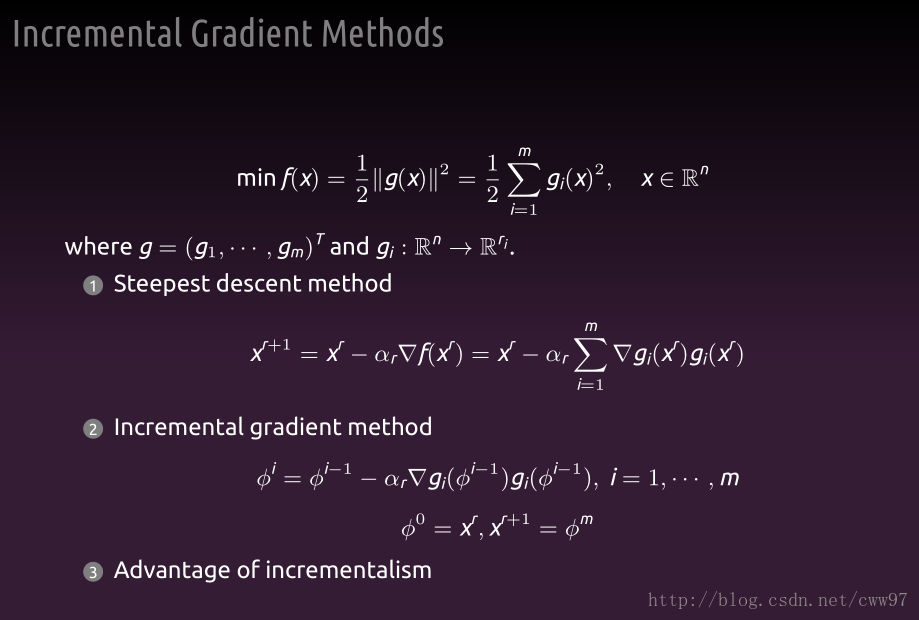

Gradient descent
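
For reference, here is the update that the `gradient` function in the script below implements. It is written as minimizing the least-squares objective, which is inferred from the code rather than stated in the post. $a_i^{\top}$ denotes the $i$-th row of $A$:

$$
f(x) = \tfrac12\lVert Ax-b\rVert_2^2,\qquad
\nabla f(x) = A^{\top}(Ax-b) = \sum_{i=1}^{N} a_i\,(a_i^{\top}x - b_i),
$$

$$
x_{k+1} = x_k - \alpha\,A^{\top}(Ax_k-b),\qquad \alpha = 5\times10^{-4}.
$$

On this quadratic the iteration converges for $0 < \alpha < 2/L$, where $L = \lVert A\rVert_2^2 = \lambda_{\max}(A^{\top}A)$.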

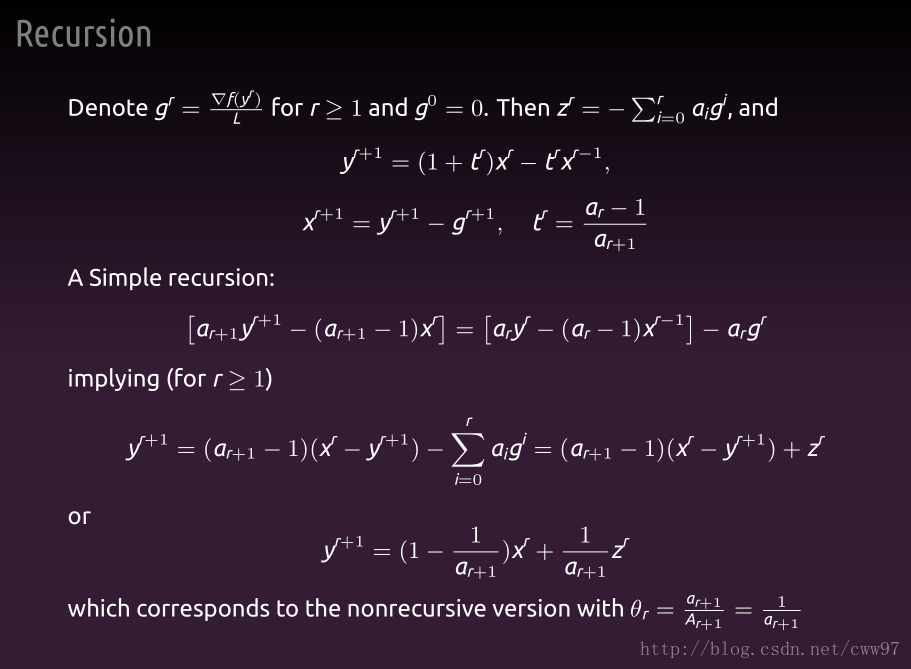

Fast gradient descent
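
The `fast_gradient` function in the script appears to use the momentum sequence of Nesterov's accelerated gradient as it appears in FISTA, without a proximal step. Written out, with $a_0 = 1$ and $x_{-1} = x_0 = 0$:

$$
a_{k+1} = \frac{1+\sqrt{1+4a_k^2}}{2},\qquad
y_{k+1} = x_k + \frac{a_k-1}{a_{k+1}}\,(x_k - x_{k-1}),
$$

$$
x_{k+1} = y_{k+1} - \beta\,A^{\top}(Ay_{k+1}-b),\qquad \beta = 3\times10^{-4}.
$$

With a step $\beta \le 1/L$, the standard guarantee is $f(x_k) - f^\star = O(1/k^2)$. Plain gradient descent only guarantees $O(1/k)$.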

    """
    Numerical Computation & Optimization
    homework1: gradient
    cww97
    Name: Chen WeiWen
    stuID: 10152510217
    2017/12/14
    from cww970329@qq.com
    to num_com_opt@163.com
    """

    import numpy as np

    N = 1000


    def gradient(A, b):
        """Gradient descent on f(x) = 0.5 * ||Ax - b||^2."""
        x = np.zeros(N)
        # alternative stopping rule: while np.linalg.norm(A @ x - b) > 1e-2:
        for cnt in range(3000):
            gx = A @ x - b
            # A.T @ gx equals sum(A[i] * gx[i] for i in range(N)), the gradient of f.
            # The hand-tuned step 5e-4 must stay below 2 / ||A||_2^2 to converge.
            x -= 5e-4 * (A.T @ gx)
        return x


    def fast_gradient(A, b):
        """Accelerated (Nesterov/FISTA-style) gradient descent on the same objective."""
        x = np.zeros(N)
        x_old, a = x.copy(), 1.0
        for cnt in range(1000):
            a_new = 0.5 + 0.5 * np.sqrt(1 + 4 * a * a)
            t = (a - 1) / a_new
            y_new = (1 + t) * x - t * x_old      # momentum extrapolation
            gy = A @ y_new - b
            # hand-tuned step; the usual guarantee assumes a step <= 1 / ||A||_2^2
            x_new = y_new - 3e-4 * (A.T @ gy)    # gradient step taken from y_new
            a, x_old, x = a_new, x, x_new
            # print progress so the wait feels less boring
            print(cnt, np.linalg.norm(A @ x - b))
        return x


    if __name__ == '__main__':
        A = np.random.normal(size=(N, N))
        x = np.ones(N)
        b = A @ x
        print('A =\n', A, '\n\nx =', x, '\n\nb =', b)
        print('Gradient descent:')
        print(gradient(A, b))
        print('Fast gradient descent:')
        print(fast_gradient(A, b))
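
The script hand-tunes its step sizes (5e-4 and 3e-4). A common alternative, sketched below under stated assumptions, is to set the step to $1/L$ with $L = \lVert A\rVert_2^2$, computed via `np.linalg.norm(A, 2)` (the spectral norm), and to stop once the residual falls below a tolerance. The names `lipschitz_constant`, `gradient_descent`, and `fast_gradient_descent` and the demo matrix are hypothetical choices for illustration. The demo uses a small, well-conditioned matrix instead of the post's 1000×1000 Gaussian one, so it finishes quickly.

```python
import numpy as np


def lipschitz_constant(A):
    """L = ||A||_2^2, the largest eigenvalue of A^T A (Lipschitz constant of the gradient)."""
    return np.linalg.norm(A, 2) ** 2


def gradient_descent(A, b, iters=1000, tol=1e-8):
    """Hypothetical helper: gradient descent on f(x) = 0.5 * ||Ax - b||^2 with step 1/L."""
    step = 1.0 / lipschitz_constant(A)
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        r = A @ x - b                      # residual Ax - b
        if np.linalg.norm(r) < tol:        # stop once the residual is small
            break
        x = x - step * (A.T @ r)           # x <- x - (1/L) * A^T (Ax - b)
    return x


def fast_gradient_descent(A, b, iters=1000, tol=1e-8):
    """Hypothetical helper: FISTA-style accelerated gradient with step 1/L."""
    step = 1.0 / lipschitz_constant(A)
    x = x_old = np.zeros(A.shape[1])
    a = 1.0
    for _ in range(iters):
        if np.linalg.norm(A @ x - b) < tol:
            break
        a_new = 0.5 + 0.5 * np.sqrt(1.0 + 4.0 * a * a)
        y = x + ((a - 1.0) / a_new) * (x - x_old)     # momentum extrapolation
        x_old, x = x, y - step * (A.T @ (A @ y - b))  # gradient step taken from y
        a = a_new
    return x


# Demo on a small, well-conditioned system (assumed test data, not the post's).
rng = np.random.default_rng(0)
n = 50
A = np.eye(n) + 0.1 * rng.standard_normal((n, n)) / np.sqrt(n)
x_true = np.ones(n)
b = A @ x_true

x_gd = gradient_descent(A, b)
x_fast = fast_gradient_descent(A, b)
print("GD residual: ", np.linalg.norm(A @ x_gd - b))
print("FGD residual:", np.linalg.norm(A @ x_fast - b))
```

`np.linalg.norm(A, 2)` computes singular values, which costs O(N³). For large matrices, a few power-iteration steps on $A^{\top}A$ give a cheaper estimate of $L$.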