1. Maven dependencies

<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>3.1.4</version>
    <exclusions>
        <exclusion>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>3.1.4</version>
    <exclusions>
        <exclusion>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>3.1.4</version>
    <exclusions>
        <exclusion>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<!-- The Guava version should match the one in Hadoop's share/hadoop/hdfs/lib directory -->
<dependency>
    <groupId>com.google.guava</groupId>
    <artifactId>guava</artifactId>
    <version>27.0-jre</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-auth</artifactId>
    <version>3.1.4</version>
</dependency>
<dependency>
    <groupId>jdk.tools</groupId>
    <artifactId>jdk.tools</artifactId>
    <version>1.8</version>
    <scope>system</scope>
    <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
</dependency>
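A Guava version mismatch tends to show up only at runtime (for example as a NoSuchMethodError), so it can help to confirm which jar actually supplies Guava on the classpath. The following is a minimal sketch that uses only the JDK. The class name ClasspathCheck and the helper locate are hypothetical names introduced for illustration, and com.google.common.base.Preconditions is used here simply as a representative Guava class.

```java
import java.security.CodeSource;

public class ClasspathCheck {

    // Returns the location (usually a jar path) a class is loaded from,
    // or a short message when the location cannot be determined.
    static String locate(String className) {
        try {
            // Load without initializing the class; we only need its origin
            Class<?> cls = Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            CodeSource source = cls.getProtectionDomain().getCodeSource();
            if (source == null || source.getLocation() == null) {
                // JDK core classes have no code source
                return "(no code source, e.g. a JDK class)";
            }
            return source.getLocation().toString();
        } catch (ClassNotFoundException e) {
            return "(not on classpath)";
        }
    }

    public static void main(String[] args) {
        // Representative Guava class; the printed jar name should contain the expected version
        System.out.println(locate("com.google.common.base.Preconditions"));
    }
}
```

If the printed jar name does not contain 27.0-jre, another dependency is probably pulling in a different Guava version transitively and may need its own exclusion.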

2. Configuring Hadoop on Windows 10

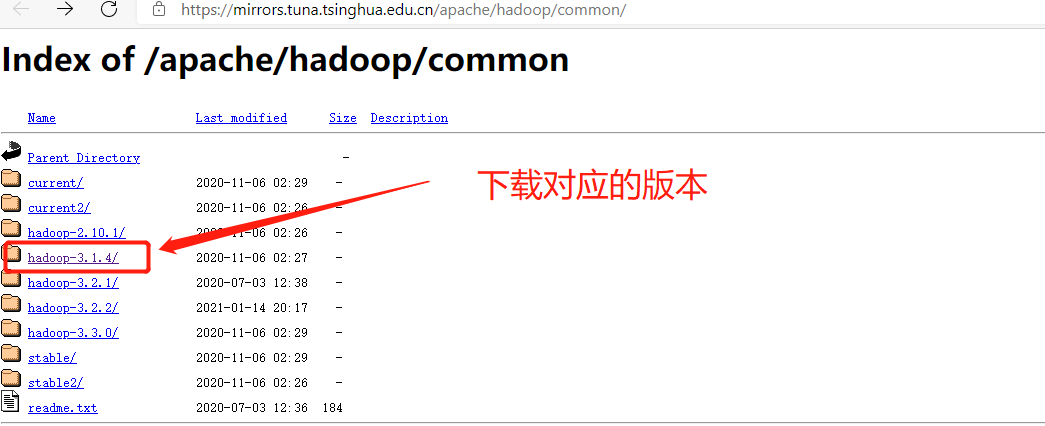

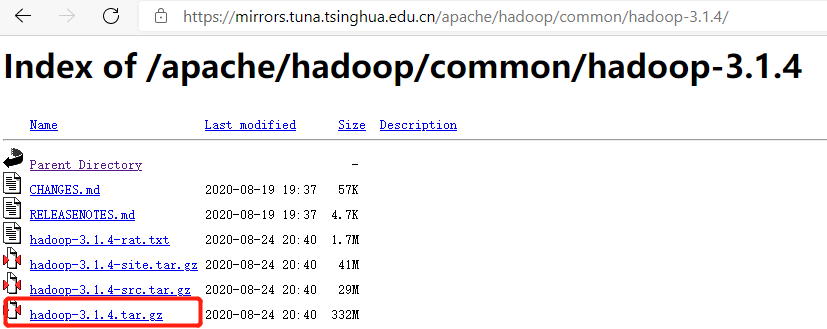

(1) Download mirror

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

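(2) Extract the Hadoop archive to a directory on disk. Note: it can be extracted to a drive other than C:, and the path must not contain Chinese characters or spaces.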

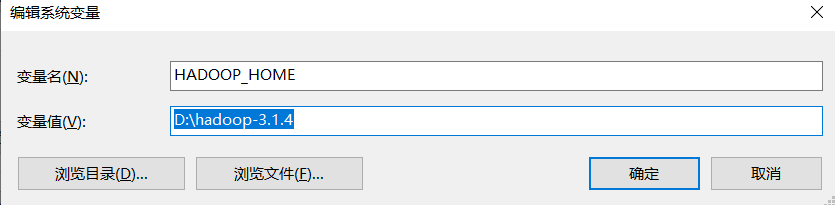

(3) Set the HADOOP_HOME environment variable and add it to the system Path variable (typically as %HADOOP_HOME%\bin)

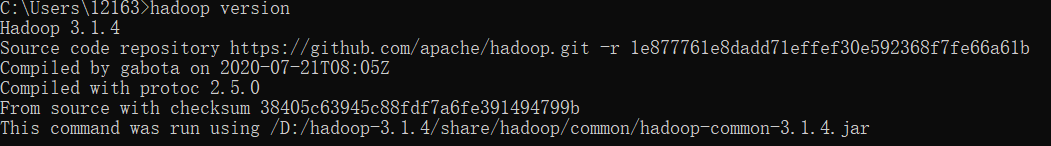

(4) Open a cmd window and run the hadoop version command to verify the setup

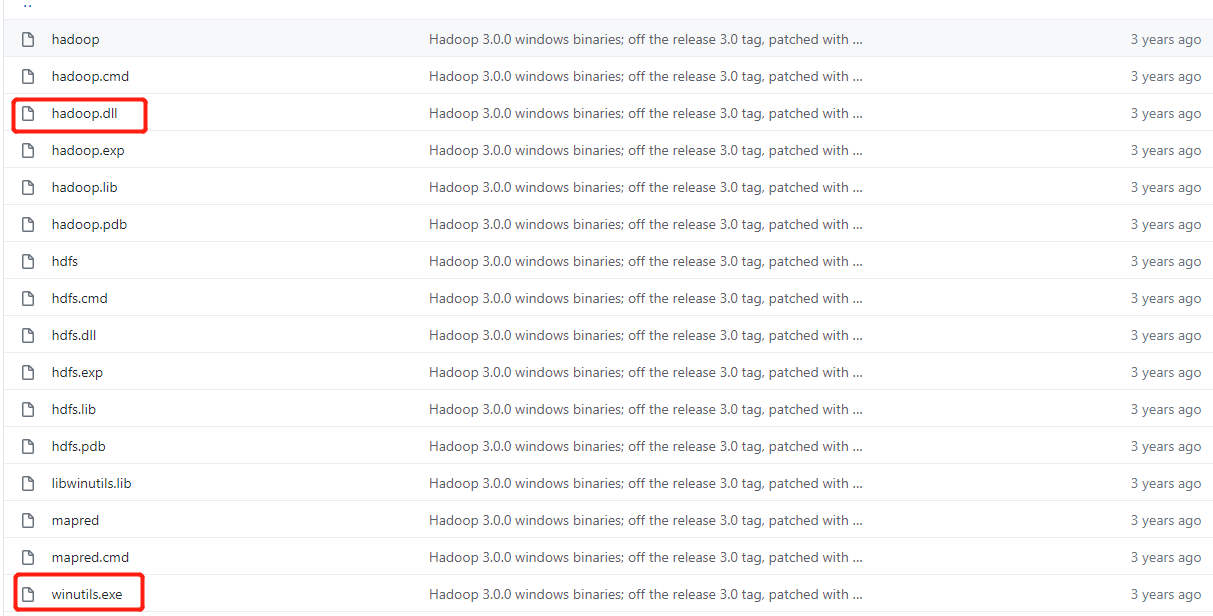

(5) Download hadoop.dll and winutils.exe from GitHub and copy them into the bin directory of the Hadoop installation

GitHub address: https://github.com/steveloughran/winutils/tree/master/hadoop-3.0.0/bin
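Steps (3) and (5) can be sanity-checked from Java before running any HDFS code. The sketch below uses only the JDK and assumes the layout described above (HADOOP_HOME pointing at the extracted directory, with both files in its bin subdirectory). The class name HadoopHomeCheck and the helper missingFiles are hypothetical names introduced for illustration.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

public class HadoopHomeCheck {

    // Files that step (5) copies into the bin directory
    static final String[] REQUIRED = {"winutils.exe", "hadoop.dll"};

    // Returns the names of required files that are absent from <hadoopHome>/bin
    static List<String> missingFiles(Path hadoopHome) {
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            if (!Files.isRegularFile(hadoopHome.resolve("bin").resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        if (home == null || home.isEmpty()) {
            System.out.println("HADOOP_HOME is not set");
            return;
        }
        if (home.contains(" ")) {
            // Step (2) advises against spaces in the installation path
            System.out.println("Warning: HADOOP_HOME contains a space: " + home);
        }
        System.out.println("Missing from bin: " + missingFiles(Paths.get(home)));
    }
}
```

An empty list in the last line of output suggests both files are in place. Note that a cmd window or IDE opened before the environment variable was set will not see it until restarted.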

3. Writing the code

public class TestHDFS {

public static void main(String[] args) throws IOException, URISyntaxException, InterruptedException {

Configuration configuration = new Configuration();

//1、获取hdfs客户端 指定HDFS中NameNode的地址和操作用户

FileSystem fs = FileSystem.get(new URI("hdfs://k8smaster:9000"),configuration,"root");

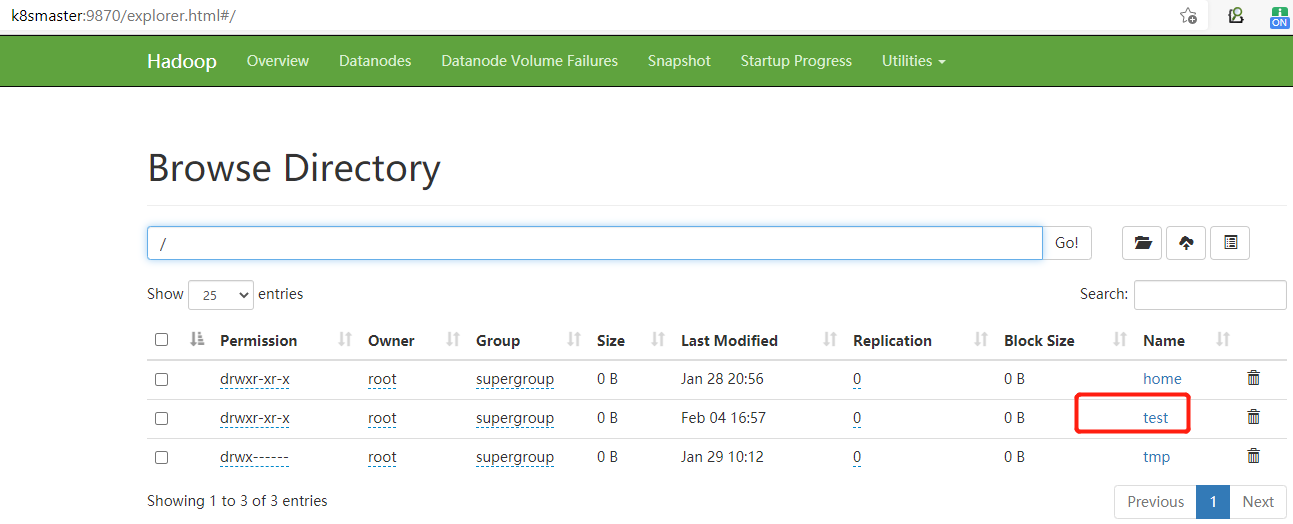

//2、查看指定路径是否存在

System.err.println(fs.exists(new Path("/home")));

//3、创建路径

System.err.println(fs.mkdirs(new Path("/test")));

//3、释放资源

fs.close();

System.err.println("===============执行完成============");

}

}
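The address passed to FileSystem.get uses the hostname k8smaster, which the Windows machine has to be able to resolve. When the client fails to connect, one quick check is to parse the address and try resolving the host. The sketch below uses only the JDK; the class name NameNodeUriCheck and the helpers describe and resolves are hypothetical names introduced for illustration, and it assumes the hostname is mapped through the hosts file or DNS.

```java
import java.net.InetAddress;
import java.net.URI;
import java.net.URISyntaxException;
import java.net.UnknownHostException;

public class NameNodeUriCheck {

    // Splits the NameNode address into scheme, host and port
    static String describe(String address) throws URISyntaxException {
        URI uri = new URI(address);
        return uri.getScheme() + " " + uri.getHost() + " " + uri.getPort();
    }

    // Returns true when the local machine can resolve the hostname
    static boolean resolves(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) throws URISyntaxException {
        String address = "hdfs://k8smaster:9000";
        System.out.println(describe(address));
        System.out.println("resolvable: " + resolves(new URI(address).getHost()));
    }
}
```

If the second line prints false, the hostname mapping is the likely culprit rather than the Hadoop configuration.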

4. Verifying the result