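While debugging today I ran into the following problem: during packet receive on the NIC, DMA suddenly stopped working because an address was bad. The code looked fine at first glance. Since this is the newest 10G NIC driver, I was not sure whether it had a bug, so I compared its overall flow with the known-good igb driver.

That comparison turned up one spot in the 10G driver that was missing synchronization, which is what corrupted the pointer.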

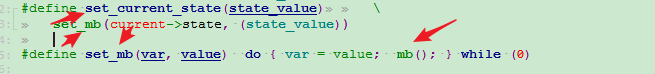

The fix was to add smp_mb().

So now is a good time to look at memory barriers.

My earlier notes on memory barriers

A quick read of https://www.kernel.org/doc/Documentation/memory-barriers.txt

- What are memory barriers?
  - Varieties of memory barrier.
  - What may not be assumed about memory barriers?
  - Data dependency barriers (historical).
  - Control dependencies.
  - SMP barrier pairing.
  - Examples of memory barrier sequences.
  - Read memory barriers vs load speculation.
  - Multicopy atomicity.
- Explicit kernel barriers.
  - Compiler barrier (optimization barrier).
  - CPU memory barriers (memory barrier).

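Compiler barrier: barrier() generates no instructions at all. It makes gcc discard whatever memory values it is holding in registers, so variables are reloaded after barrier() and no memory access is moved across it. In other words, the barrier() macro constrains only the gcc compiler, not the CPU's behavior at run time, which is why CPU memory barriers exist as well.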

    #define barrier() __asm__ __volatile__("" ::: "memory")

This avoids out-of-order memory accesses caused by compiler optimization.

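    int a = 5, b = 6;
    barrier();
    a = b;

At the third statement (a = b), GCC does not reuse a register that already holds b. It issues a fresh load of b and assigns the result to a. Note that barrier() does not invalidate any cache line: the load may still be served from the CPU cache. The only guarantee is that the value is fetched again rather than taken from a register.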
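As a minimal user-space sketch of the reload behavior described above, assuming GCC or Clang inline-asm syntax. The names shared_b and read_twice are hypothetical and exist only for this illustration.

```c
/* Same definition as the kernel's barrier(); GCC/Clang syntax assumed. */
#define barrier() __asm__ __volatile__("" ::: "memory")

int shared_b = 6;   /* hypothetical global standing in for b */

/* Reads shared_b twice; the barrier keeps the compiler from merging the loads. */
int read_twice(void)
{
    int first = shared_b;    /* load #1 */
    barrier();               /* compiler must assume memory may have changed */
    int second = shared_b;   /* load #2 is issued again, not reused from a register */
    return first + second;
}
```

Comparing the output of gcc -O2 -S with and without the barrier() line should show one load versus two. No fence instruction appears in either case, because barrier() emits nothing for the CPU.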

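The Linux kernel provides the ACCESS_ONCE macro to keep the compiler from reordering successive ACCESS_ONCE accesses. (Newer kernels use READ_ONCE() and WRITE_ONCE() instead.) ACCESS_ONCE is implemented as follows:

    #define ACCESS_ONCE(x) (*(volatile typeof(x) *)&(x))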

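As you can see, preventing the compiler from reordering these accesses comes down to volatile.

So can the volatile keyword be used to avoid compile-time memory reordering? For the volatile accesses themselves, yes. What it cannot do is prevent the run-time memory reordering discussed below.

NOTE: volatile does not guarantee atomicity (that generally requires a LOCK-prefixed CPU instruction), and volatile does not guarantee execution order on the CPU (it only affects the compiler).

Atomic operations need the CPU's "lock" instructions, and CPU reordering needs CPU memory barriers. More on both later.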
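To make the atomicity point concrete, here is a hedged user-space sketch using C11 atomics and POSIX threads rather than kernel primitives. The names counter, worker and run_atomic_counter are made up for this example. On x86, atomic_fetch_add is typically compiled to a lock-prefixed instruction, which is exactly what a plain volatile increment lacks.

```c
#include <pthread.h>
#include <stdatomic.h>

#define THREADS 4
#define ITERS   100000

static atomic_long counter;   /* hypothetical shared counter */

static void *worker(void *arg)
{
    (void)arg;
    for (int i = 0; i < ITERS; i++)
        atomic_fetch_add(&counter, 1);   /* one indivisible read-modify-write */
    return NULL;
}

/* Starts up to THREADS workers and returns the final counter value. */
long run_atomic_counter(void)
{
    pthread_t tid[THREADS];
    int started = 0;

    atomic_store(&counter, 0);
    while (started < THREADS &&
           pthread_create(&tid[started], NULL, worker, NULL) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(tid[i], NULL);
    return atomic_load(&counter);
}
```

When all four threads start, the result is always THREADS * ITERS. Replacing atomic_long with volatile long and the call with counter++ would allow lost updates, since the load, add and store could interleave between threads.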

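CPU memory barriers: rmb() does not let reads cross the barrier, wmb() does not let writes cross the barrier, and mb() blocks both. volatile provides none of these memory barriers.

So in a multi-core environment, memory visibility and CPU execution order cannot be guaranteed by volatile. They depend on CPU memory barriers.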
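The read/write barrier pairing can be sketched in user space with C11 fences. The three macros below are rough stand-ins that I am assuming for illustration, not the kernel's definitions (a C11 release or acquire fence is somewhat stronger than wmb() or rmb()). The names payload, ready, publish and consume are hypothetical.

```c
#include <stdatomic.h>

/* Rough user-space stand-ins for the kernel macros (an assumption). */
#define smp_mb()  atomic_thread_fence(memory_order_seq_cst)
#define smp_rmb() atomic_thread_fence(memory_order_acquire)
#define smp_wmb() atomic_thread_fence(memory_order_release)

static int payload;        /* plain data */
static atomic_int ready;   /* flag that publishes payload */

/* Writer side: store the data, then the flag, with a write barrier between. */
void publish(int value)
{
    payload = value;
    smp_wmb();   /* the payload store is ordered before the flag store */
    atomic_store_explicit(&ready, 1, memory_order_relaxed);
}

/* Reader side: wait for the flag, then read the data after a read barrier. */
int consume(void)
{
    while (atomic_load_explicit(&ready, memory_order_relaxed) == 0)
        ;        /* spin until the writer sets the flag */
    smp_rmb();   /* the flag load is ordered before the payload load */
    return payload;
}
```

In real use publish() and consume() would run on different threads. The barriers only work as a pair: dropping either one reopens the window in which the reader sees the flag but stale data.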

    #ifdef CONFIG_SMP
    #define smp_mb() mb()
    #else
    #define smp_mb() barrier()
    #endif

See: https://www.cnblogs.com/codestack/p/13595080.html

    #ifdef CONFIG_X86_32
    #define mb()  asm volatile(ALTERNATIVE("lock; addl $0,0(%%esp)", "mfence", X86_FEATURE_XMM2) ::: "memory", "cc")
    #define rmb() asm volatile(ALTERNATIVE("lock; addl $0,0(%%esp)", "lfence", X86_FEATURE_XMM2) ::: "memory", "cc")
    #define wmb() asm volatile(ALTERNATIVE("lock; addl $0,0(%%esp)", "sfence", X86_FEATURE_XMM2) ::: "memory", "cc")
    #else
    #define mb()  asm volatile("mfence" ::: "memory")
    #define rmb() asm volatile("lfence" ::: "memory")
    #define wmb() asm volatile("sfence" ::: "memory")
    #endif

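64-bit x86 always has the mfence, lfence and sfence instructions, but a 32-bit x86 system may not.

So the kernel has to check whether the CPU supports these newer instructions (the X86_FEATURE_XMM2 test above). If it does not, a lock-prefixed instruction is used as the memory barrier instead.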
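A simplified user-space sketch of that fallback, assuming GCC or Clang. Unlike the kernel's ALTERNATIVE mechanism, which patches the instruction at boot based on CPU features, this version chooses at compile time from the target architecture. full_barrier and store_then_load are hypothetical names.

```c
#if defined(__x86_64__)
/* 64-bit x86 always has mfence. */
static inline void full_barrier(void)
{
    __asm__ __volatile__("mfence" ::: "memory");
}
#elif defined(__i386__)
/* Locked no-op add on the stack: works on CPUs without SSE2. */
static inline void full_barrier(void)
{
    __asm__ __volatile__("lock; addl $0,0(%%esp)" ::: "memory", "cc");
}
#else
/* Any other architecture: let the compiler pick the fence instruction. */
static inline void full_barrier(void)
{
    __atomic_thread_fence(__ATOMIC_SEQ_CST);
}
#endif

/* Stores to *flag, then loads *other, with a full barrier in between. */
int store_then_load(int *flag, const int *other)
{
    *flag = 1;
    full_barrier();   /* the store is ordered before the following load */
    return *other;
}
```

Adding zero to the word at the top of the stack changes nothing, so the instruction is harmless; it is there only because the lock prefix acts as a full barrier on x86.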

Implicit kernel memory barriers. Places where memory barriers commonly appear:

- Lock acquisition functions.

- Interrupt disabling functions.

- Sleep and wake-up functions.

- Miscellaneous functions.

WHERE ARE MEMORY BARRIERS NEEDED?
=================================

Under normal operation, memory operation reordering is generally not going to be a problem as a single-threaded linear piece of code will still appear to work correctly, even if it's in an SMP kernel. There are, however, four circumstances in which reordering definitely _could_ be a problem:

- Interprocessor interaction.

- Atomic operations.

- Accessing devices.

- Interrupts.

The uses I have seen most so far seem to be implementing synchronization primitives and implementing lock-free data structures (I have seen the latter in DPDK).
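As an illustration of a lock-free structure that depends on barriers, here is a minimal single-producer/single-consumer ring sketched with C11 acquire/release atomics. It is not DPDK's rte_ring and not the NIC driver's ring; struct spsc_ring, ring_push and ring_pop are hypothetical names.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define RING_SIZE 8   /* must be a power of two */

struct spsc_ring {
    int slots[RING_SIZE];
    atomic_size_t head;   /* written only by the producer */
    atomic_size_t tail;   /* written only by the consumer */
};

/* Producer: returns false when the ring is full. */
bool ring_push(struct spsc_ring *r, int value)
{
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);

    if (head - tail == RING_SIZE)
        return false;
    r->slots[head & (RING_SIZE - 1)] = value;
    /* Release: the slot write becomes visible before the new head. */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

/* Consumer: returns false when the ring is empty. */
bool ring_pop(struct spsc_ring *r, int *out)
{
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    /* Acquire: pairs with the release store in ring_push(). */
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);

    if (head == tail)
        return false;
    *out = r->slots[tail & (RING_SIZE - 1)];
    /* Release: the slot read finishes before the slot is handed back. */
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}
```

If the release store to head were a plain store, the consumer could observe the new index before the slot contents, which is the same class of bug as an index or pointer being published ahead of the data it refers to.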

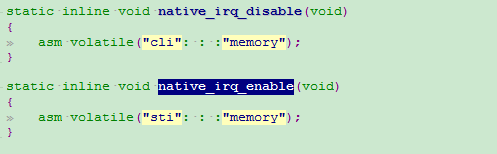

INTERRUPT DISABLING FUNCTIONS
-----------------------------

Functions that disable interrupts (ACQUIRE equivalent) and enable interrupts (RELEASE equivalent) will act as compiler barriers only. So if memory or I/O barriers are required in such a situation, they must be provided from some other means.

SLEEP AND WAKE-UP FUNCTIONS
---------------------------

Sleeping and waking on an event flagged in global data can be viewed as an interaction between two pieces of data: the task state of the task waiting for the event and the global data used to indicate the event. To make sure that these appear to happen in the right order, the primitives to begin the process of going to sleep, and the primitives to initiate a wake up imply certain barriers.

Firstly, the sleeper normally follows something like this sequence of events:

    for (;;) {
        set_current_state(TASK_UNINTERRUPTIBLE);
        if (event_indicated)
            break;
        schedule();
    }

A general memory barrier is interpolated automatically by set_current_state() after it has altered the task state:

    CPU 1
    ===============================
    set_current_state();
      smp_store_mb();
        STORE current->state
        <general barrier>
    LOAD event_indicated

set_current_state() may be wrapped by:

    prepare_to_wait();
    prepare_to_wait_exclusive();

which therefore also imply a general memory barrier after setting the state. The whole sequence above is available in various canned forms, all of which interpolate the memory barrier in the right place:

    wait_event();
    wait_event_interruptible();
    wait_event_interruptible_exclusive();
    wait_event_interruptible_timeout();
    wait_event_killable();
    wait_event_timeout();
    wait_on_bit();
    wait_on_bit_lock();

Secondly, code that performs a wake up normally follows something like this:

    event_indicated = 1;
    wake_up(&event_wait_queue);

or:

    event_indicated = 1;
    wake_up_process(event_daemon);

A general memory barrier is executed by wake_up() if it wakes something up. If it doesn't wake anything up then a memory barrier may or may not be executed; you must not rely on it. The barrier occurs before the task state is accessed, in particular, it sits between the STORE to indicate the event and the STORE to set TASK_RUNNING:

    CPU 1 (Sleeper)                 CPU 2 (Waker)
    =============================== ===============================
    set_current_state();            STORE event_indicated
      smp_store_mb();               wake_up();
        STORE current->state          ...
        <general barrier>             <general barrier>
    LOAD event_indicated              if ((LOAD task->state) & TASK_NORMAL)
                                        STORE task->state

where "task" is the thread being woken up and it equals CPU 1's "current".

To repeat, a general memory barrier is guaranteed to be executed by wake_up() if something is actually awakened, but otherwise there is no such guarantee. To see this, consider the following sequence of events, where X and Y are both initially zero:

    CPU 1                           CPU 2
    =============================== ===============================
    X = 1;                          Y = 1;
    smp_mb();                       wake_up();
    LOAD Y                          LOAD X

If a wakeup does occur, one (at least) of the two loads must see 1. If, on the other hand, a wakeup does not occur, both loads might see 0.

wake_up_process() always executes a general memory barrier. The barrier again occurs before the task state is accessed. In particular, if the wake_up() in the previous snippet were replaced by a call to wake_up_process() then one of the two loads would be guaranteed to see 1.

The available waker functions include:

    complete();
    wake_up();
    wake_up_all();
    wake_up_bit();
    wake_up_interruptible();
    wake_up_interruptible_all();
    wake_up_interruptible_nr();
    wake_up_interruptible_poll();
    wake_up_interruptible_sync();
    wake_up_interruptible_sync_poll();
    wake_up_locked();
    wake_up_locked_poll();
    wake_up_nr();
    wake_up_poll();
    wake_up_process();

In terms of memory ordering, these functions all provide the same guarantees of a wake_up() (or stronger).

[!] Note that the memory barriers implied by the sleeper and the waker do _not_ order multiple stores before the wake-up with respect to loads of those stored values after the sleeper has called set_current_state(). For instance, if the sleeper does:

    set_current_state(TASK_INTERRUPTIBLE);
    if (event_indicated)
        break;
    __set_current_state(TASK_RUNNING);
    do_something(my_data);

and the waker does:

    my_data = value;
    event_indicated = 1;
    wake_up(&event_wait_queue);

there's no guarantee that the change to event_indicated will be perceived by the sleeper as coming after the change to my_data. In such a circumstance, the code on both sides must interpolate its own memory barriers between the separate data accesses. Thus the above sleeper ought to do:

    set_current_state(TASK_INTERRUPTIBLE);
    if (event_indicated) {
        smp_rmb();
        do_something(my_data);
    }

and the waker should do:

    my_data = value;
    smp_wmb();
    event_indicated = 1;
    wake_up(&event_wait_queue);

IMPLICIT KERNEL MEMORY BARRIERS
===============================

Some of the other functions in the linux kernel imply memory barriers, amongst which are locking and scheduling functions.

This specification is a _minimum_ guarantee; any particular architecture may provide more substantial guarantees, but these may not be relied upon outside of arch specific code.

LOCK ACQUISITION FUNCTIONS
--------------------------

The Linux kernel has a number of locking constructs:

- spin locks
- R/W spin locks
- mutexes
- semaphores
- R/W semaphores

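Architectural semantics of memory barriers:

- When only one agent (a CPU or a DMA controller) accesses memory, no barrier is ever needed. Once two or more agents access memory and one of them is observing the other, a barrier is needed.

- When an IA32 CPU executes an instruction with the lock prefix, or an instruction such as xchg (which is implicitly locked), the other CPUs are also made to take action to synchronize their caches. In the classic description, the CPU's #LOCK pin is wired to the #LOCK pin of the north bridge. While a lock-prefixed instruction executes, the north bridge asserts #LOCK and locks the bus until the instruction completes. Locking the bus automatically invalidates every CPU's cached copy of the memory that the instruction touches, so the barrier can guarantee cache coherence across all CPUs. (On more recent processors a locked access to cacheable memory is usually handled by locking the cache line through the coherence protocol rather than by locking the whole bus.)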
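The xchg behavior mentioned above is what a classic test-and-set spinlock is built on. Below is a hedged user-space sketch with C11 atomics; on x86, atomic_exchange is typically compiled to xchg. The type and function names are hypothetical and this is not the kernel's spinlock implementation.

```c
#include <stdatomic.h>
#include <stdbool.h>

struct tas_lock {
    atomic_int locked;   /* 0 = free, 1 = held */
};

/* One attempt: atomically swap in 1 and report whether the lock was free. */
bool tas_try_acquire(struct tas_lock *l)
{
    return atomic_exchange_explicit(&l->locked, 1, memory_order_acquire) == 0;
}

/* Spin until the lock is obtained. */
void tas_acquire(struct tas_lock *l)
{
    while (!tas_try_acquire(l))
        ;   /* busy-wait until the current owner releases */
}

/* Release: writes made while holding the lock are visible to the next owner. */
void tas_release(struct tas_lock *l)
{
    atomic_store_explicit(&l->locked, 0, memory_order_release);
}
```

The exchange is both the atomic operation and the barrier: acquire ordering keeps the critical section from moving above the lock, and the release store keeps it from leaking below the unlock.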

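The following material comes from the article at https://blog.csdn.net/Adam040606/article/details/50898070 which covers a lot of ground. I will work through it slowly later.

There is also a translated article at http://www.wowotech.net/kernel_synchronization/Why-Memory-Barriers.html that explains the relationship between memory barriers and CPU architecture. It is quite good and worth studying in depth as needed.

What follows is copied from the web, and I do not fully understand it myself, haha.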

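Taking DMA into account:

1. Write-through policy. This case is fairly simple.

   - The CPU's writes to memory are write-through, so whenever DMA reads memory it reads correct data.

   - When DMA writes memory that the CPU has cached, that cache line must be invalidated. The next time the CPU reads it, the data comes straight from memory.

2. Write-back policy. This case is considerably more complex.

   - DMA reads memory. The CPU's bus snooping unit sees the read, and if the local cache holds Modified data for that address, the CPU intercepts the DMA read and returns the data from its own cache line.

   - DMA writes memory, and the location being written is in the CPU's cache. There are two cases:

     - (a) The cache line has not been modified by the CPU (cache and memory agree): invalidate the cache line.

     - (b) The cache line has been modified. This again splits into two cases:

       - (1) The DMA write replaces the entire line of memory that the CPU cache line maps to: the DMA write goes ahead and the CPU invalidates its own cache line.

       - (2) The DMA write replaces only part of the memory that the cache line maps to: the CPU must capture the new data of the DMA write (the data DMA is writing to memory) and use it to update the corresponding part of the cache line.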
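The write-back decision tree for a snooped DMA write can be written down as a toy model. This is only an illustration of the rules listed above, with hypothetical names (line_state, snoop_action, on_dma_write); it does not model any real cache controller.

```c
#include <stdbool.h>

enum line_state { LINE_ABSENT, LINE_CLEAN, LINE_MODIFIED };

enum snoop_action {
    ACT_NONE,         /* line not cached: nothing to do */
    ACT_INVALIDATE,   /* drop the cached copy */
    ACT_MERGE         /* fold the DMA data into the dirty line */
};

/* Decide what the CPU cache does when it snoops a DMA write. */
enum snoop_action on_dma_write(enum line_state state, bool covers_whole_line)
{
    if (state == LINE_ABSENT)
        return ACT_NONE;
    if (state == LINE_CLEAN)
        return ACT_INVALIDATE;   /* cache and memory agree */
    return covers_whole_line
        ? ACT_INVALIDATE         /* dirty line fully overwritten by DMA */
        : ACT_MERGE;             /* dirty line partially overwritten by DMA */
}
```

The partial-overwrite case is the only one where the dirty line cannot simply be dropped, because the bytes the DMA write does not cover exist only in the cache.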

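For a single CPU, keep the following in mind about keeping the CPU cache contents in sync with memory:

1. The CPU may change the execution order, but the reordered instructions still produce correct results in a single-core environment.

2. Synchronizing the CPU's cache with memory only has to account for DMA and the CPU accessing memory at the same time. Drivers must handle this with the appropriate instructions and mb. In other words, on UP only CPU-DMA synchronization needs to be considered.

On SMP, synchronization among CPUs and between CPUs and DMA both need to be considered.

SMP is a shared-bus architecture. There are generally two levels of bus: the host bus, and the PCI bus or another I/O bus. The host bus connects the CPUs, memory, and the host/PCI bridge (what is usually called the north bridge).

The PCI bus connects the host/PCI bridge, the PCI master and slave devices, and the PCI/ISA bridge (what is usually called the south bridge).

So for a PCI device to map its registers into memory, it has to go through the host/PCI bridge, which accesses the host bus and from there reaches memory.

Memory mapping and memory access are carried out by the bridge together with DMA.

Multiple CPUs accessing memory also go through the host bus.

From the above, a CPU or a DMA engine that wants to access memory has to lock the bus, because the bus is shared. Likewise, for a memory modification to become known to other devices there has to be a signal-based notification mechanism: something must snoop the bus when a device modifies memory and then signal the others. For example, when DMA accesses memory the CPU snoops the bus, and the HIT and HITM signals tell the CPU whether the modified location hits its cache, in which case the affected cache line (typically 64 bytes) must be invalidated. This process works its way up through the cache levels one at a time.

To keep DMA data from being cached in the CPU, people commonly declare it volatile. That approach is inefficient, because every access has the CPU lock the bus and go to memory for the content. Note that volatile by itself only stops the compiler from keeping the value in a register; it does not make the memory uncached.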

https://wudaijun.com/2019/04/cpu-cache-and-memory-model/

https://www.0xffffff.org/2017/02/21/40-atomic-variable-mutex-and-memory-barrier/

https://www.zhihu.com/question/31325454

https://wudaijun.com/2018/09/distributed-consistency/

    void foo(void)
    {
        a = 1;
        smp_mb();
        b = 1;
    }

    void bar(void)
    {
        while (b == 0)
            continue;
        smp_mb();
        assert(a == 1);
    }