1. Lab architecture

| Hostname | IP address |
|---|---|
| hdss11.host.com | 192.168.31.11 |
| hdss200.host.com | 192.168.31.200 |
| hdss37.host.com | 192.168.31.37 |
| hdss38.host.com | 192.168.31.38 |
| hdss39.host.com | 192.168.31.39 |
| hdss40.host.com | 192.168.31.40 |

2. Lab preparation

2.1 Prepare the virtual machines

Six VMs, each with 2 CPU cores and 2 GB of RAM.

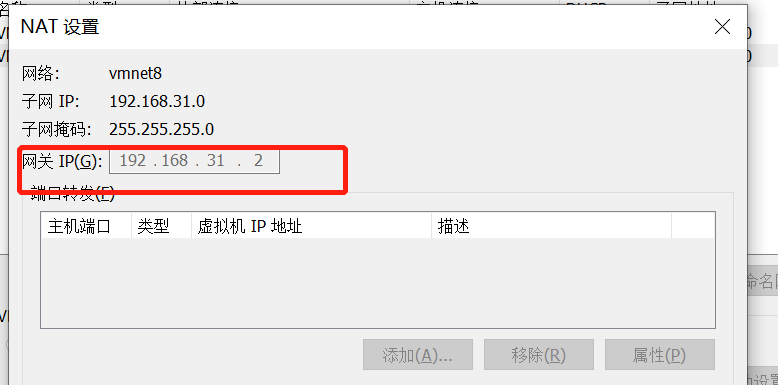

Set the VMware network segment to 192.168.31.0 with netmask 255.255.255.0.

Set the VMware gateway to 192.168.31.2.

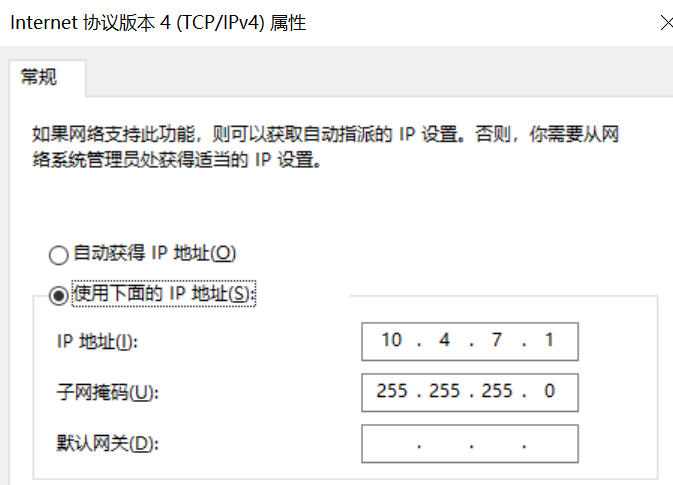

Set the address of the host machine's VMnet8 adapter to 192.168.31.1, netmask 255.255.255.0.

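Configure the NIC in /etc/sysconfig/network-scripts/ifcfg-eth0 (the example is for hdss11; on every other host set IPADDR to that host's own address):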

TYPE=Ethernet

BOOTPROTO=static

NAME=eth0

DEVICE=eth0

ONBOOT=yes

IPADDR=192.168.31.11

NETMASK=255.255.255.0

GATEWAY=192.168.31.2

DNS1=192.168.31.2

DNS2=223.5.5.5

Restart the network service:

systemctl restart network
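Because the same file is needed on all six VMs with only IPADDR changing, it can also be rendered by a small helper. A minimal sketch; `render_ifcfg` is a hypothetical name, and the gateway and DNS values are simply the ones from the example above (DNS1 is switched to 192.168.31.11 later, in 2.3.5):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an ifcfg-eth0 file for one host.
# Only IPADDR differs between hosts; the other values are the ones used above.
render_ifcfg() {
  local ip="$1"
  if [[ -z "$ip" ]]; then
    echo "usage: render_ifcfg <ip-address>" >&2
    return 1
  fi
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=eth0
DEVICE=eth0
ONBOOT=yes
IPADDR=${ip}
NETMASK=255.255.255.0
GATEWAY=192.168.31.2
DNS1=192.168.31.2
DNS2=223.5.5.5
EOF
}
```

For example, on hdss37: `render_ifcfg 192.168.31.37 > /etc/sysconfig/network-scripts/ifcfg-eth0 && systemctl restart network`.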

2.2 Configure the base environment

- Switch to the Aliyun mirror and install epel-release

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum makecache

yum -y install epel-release

- Disable SELinux and firewalld

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

- Install the required tools

yum -y install conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat vim bash-com*

yum -y install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils

- Tune kernel parameters

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

modprobe br_netfilter

sysctl -p /etc/sysctl.d/kubernetes.conf
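Note that vm.swappiness=0 only makes the kernel avoid swapping; it does not turn swap off, and kubeadm's preflight checks stop with a swap error while swap is active. A sketch for keeping swap off across reboots, assuming swap is declared in /etc/fstab as on a default CentOS 7 LVM install; `comment_out_swap` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry (third field "swap")
# in an fstab-style file. Already-commented lines are left alone, so it is
# safe to run more than once. The path is a parameter so a copy can be tried first.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E 's/^([[:space:]]*[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}
```

Typical use: `swapoff -a && comment_out_swap /etc/fstab`. `swapoff -a` turns swap off immediately; the fstab edit keeps it off after a reboot.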

- Set the system time zone

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

- Restart the services that depend on the system time

systemctl restart rsyslog.service

systemctl restart crond.service

- Stop services the system does not need

systemctl stop postfix.service && systemctl disable postfix.service

- Configure rsyslogd and systemd-journald

mkdir /var/log/journal

mkdir /etc/systemd/journald.conf.d

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

# Persist logs to disk

Storage=persistent

# Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Use at most 10G of disk space

SystemMaxUse=10G

# Maximum size of a single journal file: 200M

SystemMaxFileSize=200M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

systemctl restart systemd-journald.service

- Prerequisites for running kube-proxy in IPVS mode

modprobe br_netfilter

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
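To confirm that every module is really loaded (rather than reading the grep output by eye), the lsmod output can be checked against the list. A sketch; `check_modules` is a hypothetical name. On the CentOS 7 3.10 kernel the conntrack module is nf_conntrack_ipv4; on newer kernels (4.19 and later) it was merged into nf_conntrack, so the list would need adjusting there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and report which of the
# required modules (given as arguments) are missing.
# Returns 0 only if every module is loaded.
check_modules() {
  local loaded missing=0 mod
  # The first column of lsmod is the module name; skip the "Module" header.
  loaded=$(awk 'NR > 1 { print $1 }')
  for mod in "$@"; do
    if ! grep -qx -- "$mod" <<<"$loaded"; then
      echo "missing: $mod"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `lsmod | check_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4`.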

- Disable NUMA

cp /etc/default/grub{,.bak}

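sed -i 's#GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap net.ifnames=0 rhgb quiet"#GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap net.ifnames=0 rhgb quiet numa=off"#g' /etc/default/grub

Regenerate the GRUB configuration so that numa=off is used from the next reboot on (/boot/grub2/grub.cfg is the BIOS-boot path on CentOS 7; UEFI systems use /boot/efi/EFI/centos/grub.cfg):

grub2-mkconfig -o /boot/grub2/grub.cfg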

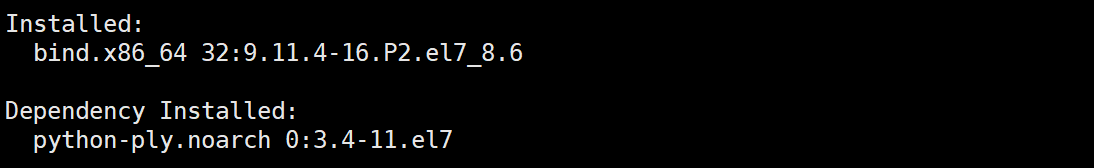
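The sed above only has an effect if GRUB_CMDLINE_LINUX is exactly the string shown; on a machine with a different command line it silently changes nothing. A more forgiving sketch appends the argument to whatever the current command line is and skips it if it is already there; `add_kernel_arg` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_kernel_arg ARG [GRUB_DEFAULTS_FILE]
# Appends ARG to GRUB_CMDLINE_LINUX unless it is already present.
# Assumes ARG contains no '/' and no regex metacharacters (true for numa=off).
add_kernel_arg() {
  local arg="$1" file="${2:-/etc/default/grub}"
  # Already present as a whole word inside the quotes? Then nothing to do.
  if grep -Eq "^GRUB_CMDLINE_LINUX=\"([^\"]* )?${arg}( [^\"]*)?\"" "$file"; then
    return 0
  fi
  # Insert " ARG" just before the closing quote of GRUB_CMDLINE_LINUX.
  sed -i -E "s/^(GRUB_CMDLINE_LINUX=\"[^\"]*)\"/\1 ${arg}\"/" "$file"
}
```

Usage: `add_kernel_arg numa=off /etc/default/grub`. The kernel only sees the new argument after grub.cfg has been regenerated with grub2-mkconfig and the machine rebooted.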

2.3 Initialize the DNS service

On 192.168.31.11:

2.3.1 Install bind

yum -y install bind

2.3.2 Configure the main configuration file

Edit the main configuration file /etc/named.conf:

options {

listen-on port 53 { 192.168.31.11; }; // listen address

listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

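allow-query { any; }; // which clients may query this DNS server (any = all clients)

forwarders { 192.168.31.2; }; // upstream DNS (the gateway); queries this server cannot answer itself are forwarded there

recursion yes; // resolve client queries recursively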

dnssec-enable yes;

dnssec-validation yes;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.root.key";

managed-keys-directory "/var/named/dynamic";

pid-file "/run/named/named.pid";

session-keyfile "/run/named/session.key";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

2.3.3 Configure the zone configuration file

Append the following zones to the zone configuration file /etc/named.rfc1912.zones:

zone "host.com" IN {

type master;

file "host.com.zone";

allow-update { 192.168.31.11; };

};

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 192.168.31.11; };

};

2.3.4 Configure the zone data files

2.3.4.1 Create the zone data file /var/named/host.com.zone

$ORIGIN host.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.host.com. dnsadmin.host.com. (

2020072401 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

        NS dns.host.com.

$TTL 60 ; 1 minute

dns A 192.168.31.11

hdss11 A 192.168.31.11

hdss200 A 192.168.31.200

hdss37 A 192.168.31.37

hdss38 A 192.168.31.38

hdss39 A 192.168.31.39

hdss40 A 192.168.31.40

2.3.4.2 Create the zone data file /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020072401 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

        NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.31.11

harbor A 192.168.31.200

2.3.4.3 Start the named service

systemctl start named

systemctl enable named

2.3.4.4 Verify that the DNS service works

dig -t A hdss11.host.com @192.168.31.11 +short

dig -t A hdss200.host.com @192.168.31.11 +short

dig -t A dns.host.com @192.168.31.11 +short

dig -t A hdss40.host.com @192.168.31.11 +short

dig -t A dns.od.com @192.168.31.11 +short

dig -t A harbor.od.com @192.168.31.11 +short
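Instead of reading each dig answer by eye, the checks can be scripted against the expected addresses. A sketch, assuming the name/address pairs from the zone files above; `check_records` and the `resolve` hook are hypothetical names, and `resolve` can be redefined to try the loop without a DNS server:

```shell
#!/usr/bin/env bash
# DNS server to query; 192.168.31.11 is the bind server set up above.
DNS_SERVER="${DNS_SERVER:-192.168.31.11}"

# Hook: print the A record(s) for a name. Redefine it to test without DNS.
resolve() {
  dig -t A "$1" @"$DNS_SERVER" +short
}

# Read "NAME EXPECTED_IP" pairs on stdin, report each one, and return
# non-zero if any name does not resolve to the expected address.
check_records() {
  local failed=0 name want got
  while read -r name want; do
    [[ -z "$name" ]] && continue
    got=$(resolve "$name" </dev/null | head -n 1)
    if [[ "$got" == "$want" ]]; then
      echo "ok   $name -> $got"
    else
      echo "FAIL $name -> '${got}' (expected $want)"
      failed=1
    fi
  done
  return "$failed"
}
```

For example: `printf '%s\n' 'hdss11.host.com 192.168.31.11' 'harbor.od.com 192.168.31.200' | check_records`.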

2.3.5 Point all hosts at the local DNS server (192.168.31.11)

On 192.168.31.11 / 192.168.31.37 / 192.168.31.38 / 192.168.31.39 / 192.168.31.40 / 192.168.31.200:

sed -i 's/DNS1=192.168.31.2/DNS1=192.168.31.11/' /etc/sysconfig/network-scripts/ifcfg-eth0

systemctl restart network

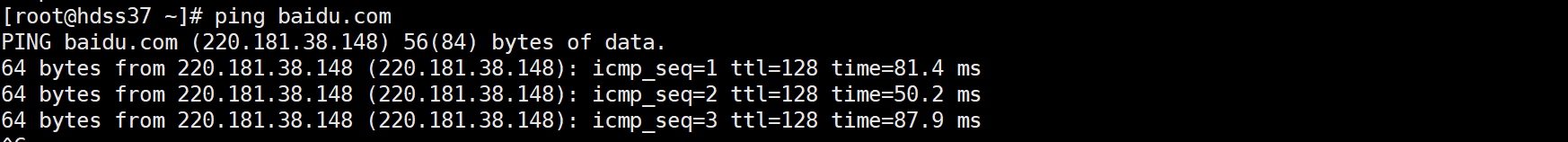

Verify DNS:

dig -t A hdss11.host.com @192.168.31.11 +short

dig -t A hdss200.host.com @192.168.31.11 +short

dig -t A dns.host.com @192.168.31.11 +short

dig -t A hdss40.host.com @192.168.31.11 +short

dig -t A dns.od.com @192.168.31.11 +short

dig -t A harbor.od.com @192.168.31.11 +short

ping baidu.com


2.5 Deploy the Docker environment

2.5.1 Install

yum -y install yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce-18.06.3.ce

2.5.2 Configure

mkdir /etc/docker

mkdir -p /data/docker

cat > /etc/docker/daemon.json <<EOF

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com", "quay.io", "harbor.od.com"],

"registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],

"bip": "172.7.21.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

EOF

2.5.3 Start

systemctl start docker

systemctl enable docker

2.6 Deploy the Harbor image registry

On hdss200.host.com (192.168.31.200):

2.6.1 Install docker-compose

https://docs.docker.com/compose/install/

while true;

do

curl -fL "https://github.com/docker/compose/releases/download/1.26.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose;

if [[ $? -eq 0 ]];then

break;

fi;

done

chmod +x /usr/local/bin/docker-compose

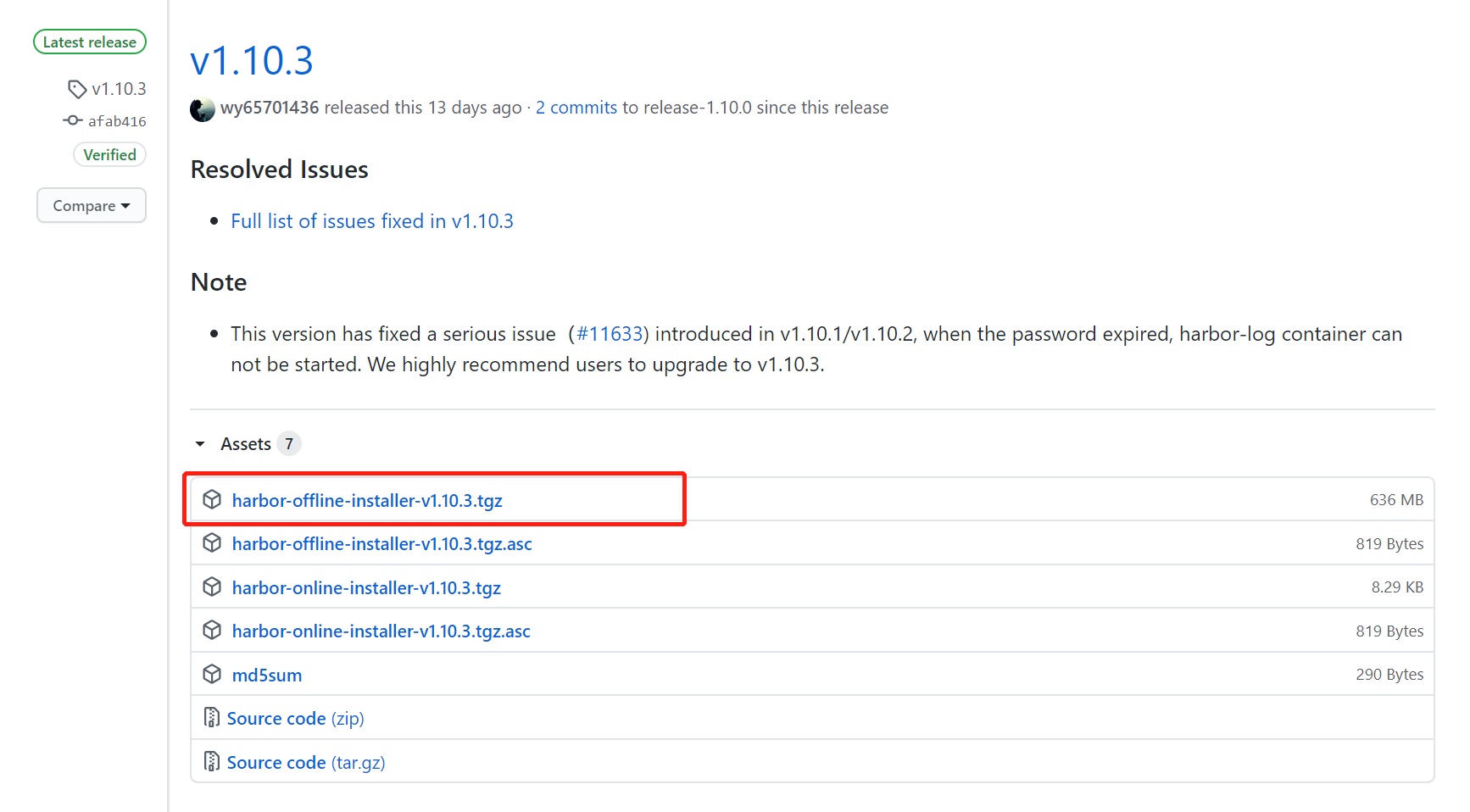
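The download loop above retries forever, with no pause between attempts. A bounded alternative is sketched below; `retry` is a hypothetical helper name, and the attempt count and delay in the usage example are arbitrary:

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry MAX DELAY COMMAND [ARGS...]
# Runs COMMAND up to MAX times, sleeping DELAY seconds between failed attempts.
# Returns 0 as soon as COMMAND succeeds, 1 if every attempt failed.
retry() {
  local max="$1" delay="$2" n=1
  shift 2
  until "$@"; do
    if (( n >= max )); then
      echo "giving up after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 0
}
```

Usage: `retry 5 10 curl -fL "https://github.com/docker/compose/releases/download/1.26.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose && chmod +x /usr/local/bin/docker-compose`.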

2.6.2 Download Harbor 1.10.3

Download the offline installer from the official releases page:

https://github.com/goharbor/harbor/releases/download/v1.10.3/harbor-offline-installer-v1.10.3.tgz

2.6.3 Reverse-proxy harbor.od.com with nginx

Harbor is accessed through the domain name harbor.od.com.

yum -y install nginx

Configure the virtual host /etc/nginx/conf.d/od.harbor.com.conf:

server {

listen 80;

server_name harbor.od.com;

location / {

proxy_pass http://127.0.0.1:180;

}

}

Start nginx:

systemctl start nginx

systemctl enable nginx

2.6.4 Edit the zone data file /var/named/od.com.zone

Run on 192.168.31.11:

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020072401 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

        NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.31.11

harbor A 192.168.31.200

Restart the named service and check the record:

systemctl restart named

dig -t A harbor.od.com +short
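When a zone file is edited, the usual convention is to increase the SOA serial as well; `systemctl restart named` reloads the file regardless, but secondary servers (there are none in this lab) only pick up changes when the serial increases. A sketch of a YYYYMMDDnn bump, assuming the serial sits on a line ending in `; serial` as in the zone files above; `bump_serial` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: bump_serial ZONE_FILE [YYYYMMDD]
# Assumes a YYYYMMDDnn serial on a line ending in "; serial".
# Rewrites the serial in place and prints the new value.
bump_serial() {
  local zone="$1" today="${2:-$(date +%Y%m%d)}" old new
  old=$(grep -Eo '[0-9]{10}[[:space:]]*; serial' "$zone" | grep -Eo '^[0-9]{10}' | head -n 1)
  if [[ -z "$old" ]]; then
    echo "no serial found in $zone" >&2
    return 1
  fi
  if [[ "${old:0:8}" == "$today" ]]; then
    # Same day: increase the two-digit counter (10# forces base 10 for "08"/"09").
    new=$(printf '%s%02d' "$today" $((10#${old:8:2} + 1)))
  else
    new="${today}01"
  fi
  # Never go backwards (e.g. a serial already dated in the future).
  if (( new <= old )); then
    new=$((old + 1))
  fi
  sed -i "s/${old}\([[:space:]]*; serial\)/${new}\1/" "$zone"
  echo "$new"
}
```

Usage: `bump_serial /var/named/od.com.zone && systemctl restart named`.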

2.6.5 Configure Harbor

mkdir /opt/src

tar -xf harbor-offline-installer-v1.10.3.tgz -C /opt

mv /opt/harbor/ /opt/harbor-v1.10.3

ln -s /opt/harbor-v1.10.3/ /opt/harbor # makes later version upgrades easier

cd /opt/harbor

./prepare

Edit the configuration file harbor.yml:

hostname: harbor.od.com

http:

  port: 180

data_volume: /data/harbor

log:

  level: info

  local:

    rotate_count: 50

    rotate_size: 200M

    location: /data/harbor/logs

Create the directory /data/harbor/logs:

mkdir -p /data/harbor/logs

2.6.6 Start Harbor

/opt/harbor/install.sh

docker ps

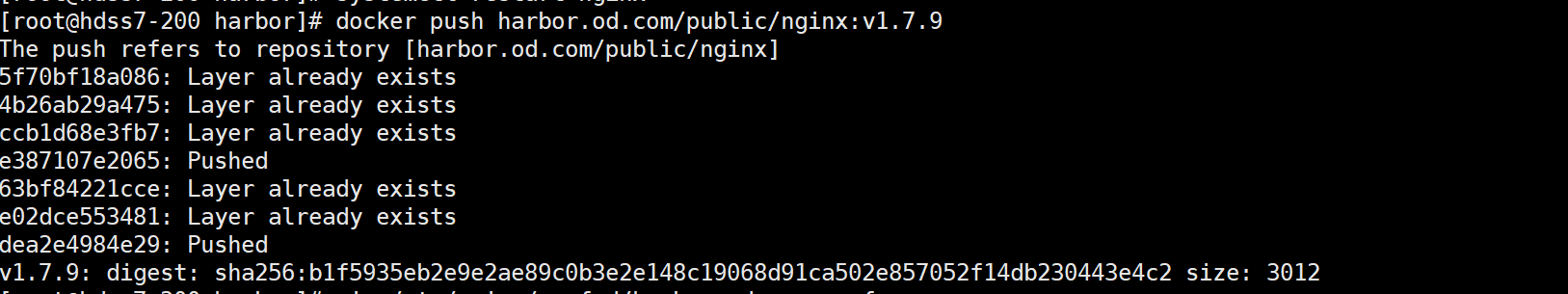

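2.6.7 Push an image

The target project (public here) must exist before pushing: create it in the Harbor web UI, since Harbor only creates the library project by default.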

docker pull nginx:1.7.9

docker tag nginx:1.7.9 harbor.od.com/public/nginx:v1.7.9

docker login harbor.od.com

docker push harbor.od.com/public/nginx:v1.7.9

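At this point the push fails with 413 Request Entity Too Large. The fix is to raise nginx's request body size limit in the main configuration file by adding client_max_body_size 50000m; to the http block: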

vim /etc/nginx/nginx.conf

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

client_max_body_size 50000m;

systemctl restart nginx

docker push harbor.od.com/public/nginx:v1.7.9

2.4 Configure keepalived and haproxy

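haproxy: load-balances TCP connections across the three kube-apiservers

keepalived: high availability; keepalived runs on each of the three machines below, and the VIP 192.168.31.10 is held by one of them at a time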

| Hostname | IP address |
|---|---|
| hdss37.host.com | 192.168.31.37 |
| hdss38.host.com | 192.168.31.38 |
| hdss39.host.com | 192.168.31.39 |

2.4.1 Configure haproxy

yum -y install haproxy

vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

tune.ssl.default-dh-param 2048

chroot /var/lib/haproxy

stats socket /var/lib/haproxy/stats

pidfile /var/run/haproxy.pid

maxconn 4000

#user haproxy

#group haproxy

daemon

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

log global

retries 3

option redispatch

stats uri /haproxy

stats refresh 30s

stats realm haproxy-status

stats auth admin:dxInCtFianKkL]36

stats hide-version

maxconn 65535

timeout connect 5000

timeout client 50000

timeout server 50000

#---------------------------------------------------------------------

# main frontend which proxies to the backends

#---------------------------------------------------------------------

frontend kubernetes-apiserver

mode tcp

bind *:16443

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# backend: the three kube-apiserver instances, balanced round-robin

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server k8s-master01 192.168.31.37:6443 check

server k8s-master02 192.168.31.38:6443 check

server k8s-master03 192.168.31.39:6443 check

#---------------------------------------------------------------------

# statistics page

#---------------------------------------------------------------------

listen stats

mode http

bind *:1080

stats auth admin:awesomePassword

stats refresh 5s

stats realm HAProxy Statistics

stats uri /admin?stats

systemctl start haproxy.service

systemctl enable haproxy.service
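Once haproxy is running, it can help to confirm which apiserver backends are reachable and that the frontend is listening. A sketch using bash's built-in /dev/tcp redirection and coreutils `timeout`; `tcp_open` is a hypothetical name. The backends only accept connections once kube-apiserver is running (section 3), and the VIP only answers once keepalived is up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: tcp_open HOST PORT [SECS]
# Succeeds if a TCP connection to HOST:PORT can be opened within SECS seconds
# (default 3), using bash's /dev/tcp pseudo-device.
tcp_open() {
  local host="$1" port="$2" secs="${3:-3}"
  # Host and port are passed as positional arguments to avoid quoting issues.
  timeout "$secs" bash -c ': < "/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example, `tcp_open 192.168.31.37 6443 && echo up` for a backend, or `tcp_open 127.0.0.1 16443` for the local haproxy frontend.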

2.4.2 Configure keepalived

yum -y install keepalived.x86_64

- Create the keepalived port-check script

vim /etc/keepalived/check_port.sh

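#!/bin/bash

# keepalived port-check script

# Usage, in the keepalived configuration file:

# vrrp_script check_port {    # define a vrrp_script check

#     script "/etc/keepalived/check_port.sh 6379"    # the port to check

#     interval 2    # how often the script runs, in seconds

# }

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

  # Count listening TCP sockets whose local address ends in ":$CHK_PORT" (exact port match; a plain grep would also match e.g. 164430)

  PORT_PROCESS=$(ss -lnt | awk -v p=":$CHK_PORT" '$4 ~ p"$"' | wc -l)

  if [ "$PORT_PROCESS" -eq 0 ];then

    echo "Port $CHK_PORT Is Not Used,End."

    exit 1

  fi

else

  echo "Check Port Cant Be Empty!"

  exit 1

fi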

chmod +x /etc/keepalived/check_port.sh

- Edit the configuration file

vim /etc/keepalived/keepalived.conf

On 192.168.31.37:

! Configuration File for keepalived

global_defs {

router_id 192.168.31.37

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 16443"

interval 2

weight 20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 251

priority 100

advert_int 1

mcast_src_ip 192.168.31.37

#nopreempt

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.31.10

}

}

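Note: nopreempt makes the instance non-preemptive. If it were enabled and the port check on the primary keepalived node (192.168.31.37) failed, the VIP would move to the backup node (192.168.31.38) and would not move back to the primary on its own, even after the primary recovers. (The keepalived documentation also states that nopreempt only takes effect when the instance's initial state is BACKUP.)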

On 192.168.31.38:

! Configuration File for keepalived

global_defs {

router_id 192.168.31.38

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 16443"

interval 2

weight 20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 251

priority 90

advert_int 1

mcast_src_ip 192.168.31.38

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.31.10

}

}

On 192.168.31.39:

! Configuration File for keepalived

global_defs {

router_id 192.168.31.39

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 16443"

interval 2

weight 20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 251

priority 80

advert_int 1

mcast_src_ip 192.168.31.39

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.31.10

}

}

- Start the service

systemctl start keepalived.service

systemctl enable keepalived.service
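How the three configs above hand the VIP around can be worked out by hand: with a positive weight, keepalived adds the weight to the base priority while the tracked script succeeds, and the node with the highest effective priority holds the VIP. A sketch of that arithmetic only (it models the election, it is not keepalived itself); `elect_master` is a hypothetical name, and VRRP's tie-break on the higher IP address is not modelled:

```shell
#!/usr/bin/env bash
# Model of VRRP master election for the configs above (not keepalived itself).
# Each argument is "NAME:BASE_PRIORITY:CHECK_OK" with CHECK_OK 1 or 0.
# A positive script weight (20 above; override with WEIGHT) is added to the
# base priority while the check passes. Ties are not handled: the first of
# equal priorities wins here, whereas real VRRP prefers the higher IP address.
elect_master() {
  local weight="${WEIGHT:-20}" best="" best_prio=-1 node name base ok prio
  for node in "$@"; do
    IFS=: read -r name base ok <<<"$node"
    prio=$base
    if [[ "$ok" == 1 ]]; then
      prio=$((base + weight))
    fi
    echo "$name: effective priority $prio" >&2
    if (( prio > best_prio )); then
      best=$name
      best_prio=$prio
    fi
  done
  echo "$best"
}
```

With all checks passing the effective priorities are 120/110/100, so 192.168.31.37 holds the VIP. If haproxy on .37 stops listening on 16443, .37 falls to 100 and .38 (110) takes over; because nopreempt is commented out, .37 takes the VIP back once its check passes again and it returns to 120.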

3. Deploy Kubernetes

3.1 Install kubeadm, kubectl and kubelet

cat <<EOF >/etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum -y install kubeadm-1.17.9 kubectl-1.17.9 kubelet-1.17.9

systemctl enable kubelet

3.2 Generate the deployment YAML file

kubeadm config print init-defaults > kubeadm-config.yaml

3.3 Edit the deployment file

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.17.9

imageRepository: harbor.od.com/k8s

controlPlaneEndpoint: "192.168.31.10:16443"

apiServer:

  certSANs:

  - 192.168.31.37

  - 192.168.31.38

  - 192.168.31.39

networking:

  serviceSubnet: 10.47.0.0/16

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

featureGates:

  SupportIPVSProxyMode: true

mode: ipvs

3.4 Run the deployment

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

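The first run fails with errors about pulling images. Pull the images from Aliyun mirrors, retag them and push them to harbor.od.com/k8s: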

docker pull registry.cn-shanghai.aliyuncs.com/giantswarm/kube-apiserver:1.17.9

docker pull registry.cn-shanghai.aliyuncs.com/giantswarm/kube-apiserver:v1.17.9

docker pull registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-apiserver-amd64:v1.17.9

docker pull registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-controller-manager-amd64:v1.17.9

docker pull registry.cn-hangzhou.aliyuncs.com/yayaw/kube-scheduler:v1.17.9

docker pull registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-proxy-amd64:v1.17.9

docker tag registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-proxy-amd64:v1.17.9 harbor.od.com/k8s/kube-proxy:v1.17.9

docker push harbor.od.com/k8s/kube-proxy:v1.17.9

docker tag registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-apiserver-amd64:v1.17.9 harbor.od.com/k8s/kube-apiserver:v1.17.9

docker push harbor.od.com/k8s/kube-apiserver:v1.17.9

docker tag registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-controller-manager-amd64:v1.17.9 harbor.od.com/k8s/kube-controller-manager:v1.17.9

docker push harbor.od.com/k8s/kube-controller-manager:v1.17.9

docker tag registry.cn-hangzhou.aliyuncs.com/yayaw/kube-scheduler:v1.17.9 harbor.od.com/k8s/kube-scheduler:v1.17.9

docker push harbor.od.com/k8s/kube-scheduler:v1.17.9

docker tag k8s.gcr.io/coredns:1.6.5 harbor.od.com/k8s/coredns:1.6.5

docker push harbor.od.com/k8s/coredns:1.6.5

docker tag k8s.gcr.io/etcd:3.4.3-0 harbor.od.com/k8s/etcd:3.4.3-0

docker push harbor.od.com/k8s/etcd:3.4.3-0

docker tag k8s.gcr.io/pause:3.1 harbor.od.com/k8s/pause:3.1

docker push harbor.od.com/k8s/pause:3.1

docker rmi registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-proxy-amd64:v1.17.9

docker rmi registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-controller-manager-amd64:v1.17.9

docker rmi registry.cn-hangzhou.aliyuncs.com/kubernetes-service-catalog/kube-apiserver-amd64:v1.17.9

docker rmi registry.cn-hangzhou.aliyuncs.com/yayaw/kube-scheduler:v1.17.9

docker rmi k8s.gcr.io/coredns:1.6.5

docker rmi k8s.gcr.io/etcd:3.4.3-0

docker rmi k8s.gcr.io/pause:3.1
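The pull, tag, push and rmi steps above repeat the same pattern for every image. A sketch that wraps them in functions, assuming the harbor.od.com/k8s project already exists and `docker login harbor.od.com` has been done; `mirror_image`, `mirror_all`, `run`, `REGISTRY` and `DRY_RUN` are hypothetical names. The coredns, etcd and pause images are only re-tagged in the steps above (so they must already be present locally), and are therefore not covered here:

```shell
#!/usr/bin/env bash
# Target project in the local Harbor (assumed to exist).
REGISTRY="${REGISTRY:-harbor.od.com/k8s}"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# mirror_image SOURCE_IMAGE TARGET_NAME:TAG
# Pull SOURCE_IMAGE, push it as $REGISTRY/TARGET_NAME:TAG, drop the source tag.
mirror_image() {
  local src="$1" dst="$REGISTRY/$2"
  run docker pull "$src" &&
    run docker tag "$src" "$dst" &&
    run docker push "$dst" &&
    run docker rmi "$src"
}

# mirror_all: read "SOURCE_IMAGE TARGET_NAME:TAG" pairs from stdin, one per
# line; blank lines and lines starting with # are skipped. Stops at the first failure.
mirror_all() {
  local src dst
  while read -r src dst; do
    [[ -z "$src" || "$src" == \#* ]] && continue
    mirror_image "$src" "$dst" </dev/null || return 1
  done
}
```

For example, feed `mirror_all` lines such as `registry.cn-hangzhou.aliyuncs.com/yayaw/kube-scheduler:v1.17.9 kube-scheduler:v1.17.9`; with `DRY_RUN=1` it only prints the docker commands it would run.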

- Run the deployment again

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.5 Deploy the Calico network

wget https://docs.projectcalico.org/v3.15/manifests/calico.yaml

- Pull the required images, retag them and push them to Harbor

docker pull calico/cni:v3.15.1

docker pull calico/node:v3.15.1

docker pull calico/kube-controllers:v3.15.1

docker pull calico/pod2daemon-flexvol:v3.15.1

docker tag calico/node:v3.15.1 harbor.od.com/k8s/calico/node:v3.15.1

docker push harbor.od.com/k8s/calico/node:v3.15.1

docker tag calico/pod2daemon-flexvol:v3.15.1 harbor.od.com/k8s/calico/pod2daemon-flexvol:v3.15.1

docker push harbor.od.com/k8s/calico/pod2daemon-flexvol:v3.15.1

docker tag calico/cni:v3.15.1 harbor.od.com/k8s/calico/cni:v3.15.1

docker push harbor.od.com/k8s/calico/cni:v3.15.1

docker tag calico/kube-controllers:v3.15.1 harbor.od.com/k8s/calico/kube-controllers:v3.15.1

docker push harbor.od.com/k8s/calico/kube-controllers:v3.15.1

- Change the image addresses in calico.yaml to the local registry, then deploy

kubectl create -f calico.yaml
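The image rewrite in the last step can be done with sed before running kubectl create. A sketch, assuming the images were pushed under harbor.od.com/k8s/calico/ as above and that calico.yaml refers to them as `calico/...` or `docker.io/calico/...` (worth checking in the downloaded manifest); `rewrite_calico_images` is a hypothetical name. Separately, Calico's default IPv4 pool is 192.168.0.0/16, which overlaps the 192.168.31.0/24 host network of this lab, so it may be worth choosing a non-overlapping pod CIDR (CALICO_IPV4POOL_CIDR in calico.yaml) before applying the manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite_calico_images MANIFEST [REGISTRY]
# Points every "image: calico/..." or "image: docker.io/calico/..." reference
# at REGISTRY/calico/... (default harbor.od.com/k8s). Running it twice is
# harmless, because rewritten lines no longer start with calico/.
rewrite_calico_images() {
  local manifest="$1" registry="${2:-harbor.od.com/k8s}"
  sed -i -E "s#(image:[[:space:]]*)(docker\.io/)?calico/#\1${registry}/calico/#" "$manifest"
}
```

Usage: `rewrite_calico_images calico.yaml && grep 'image:' calico.yaml`, then `kubectl create -f calico.yaml`.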