Preparation

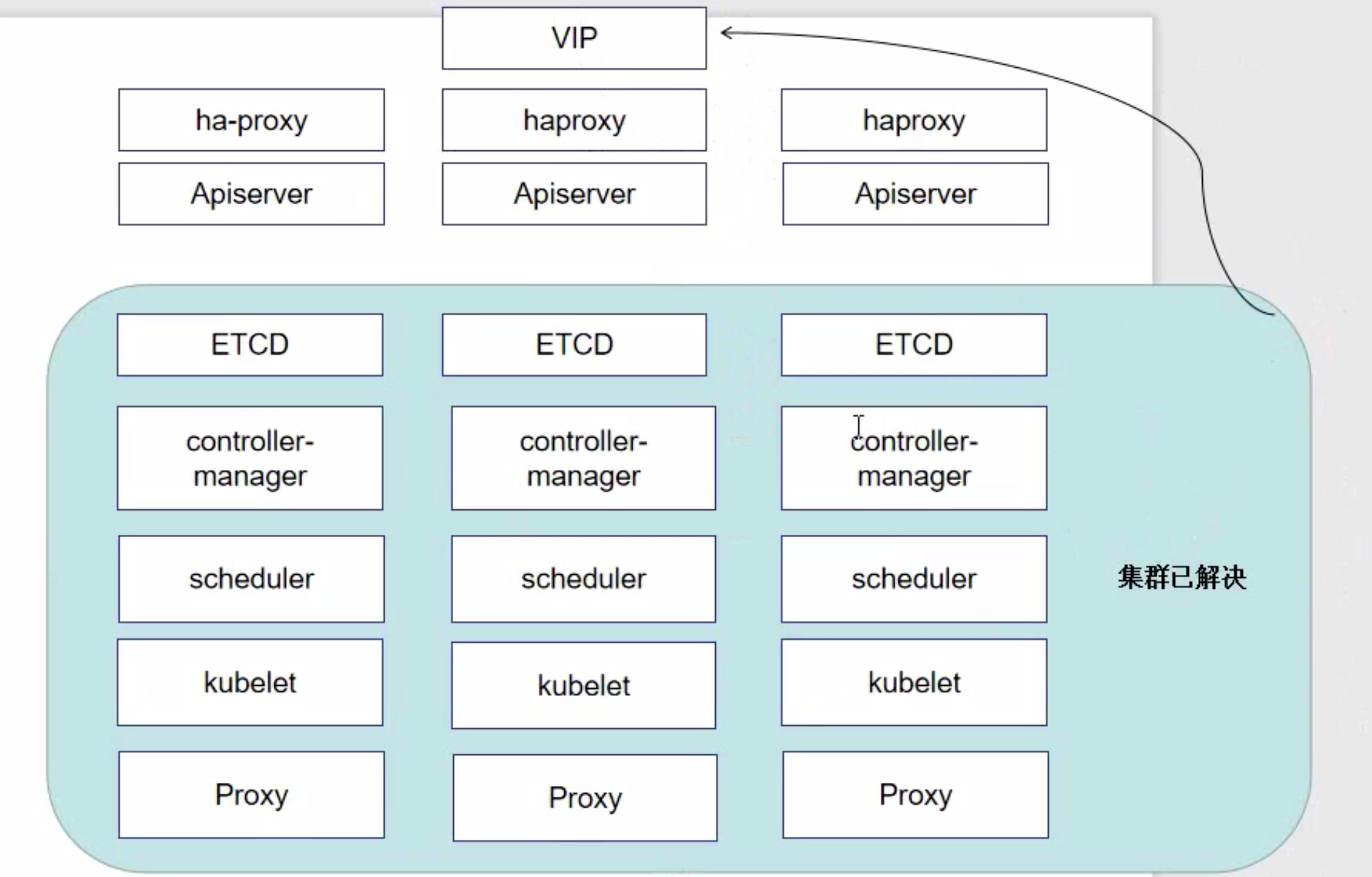

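apiserver: the single entry point for all services

etcd: stores the cluster data

controller-manager: runs the controllers

scheduler: schedules Pods onto nodes

kubelet: maintains the container lifecycle by driving the container runtime (CRI)

kube-proxy: implements load balancing for Services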

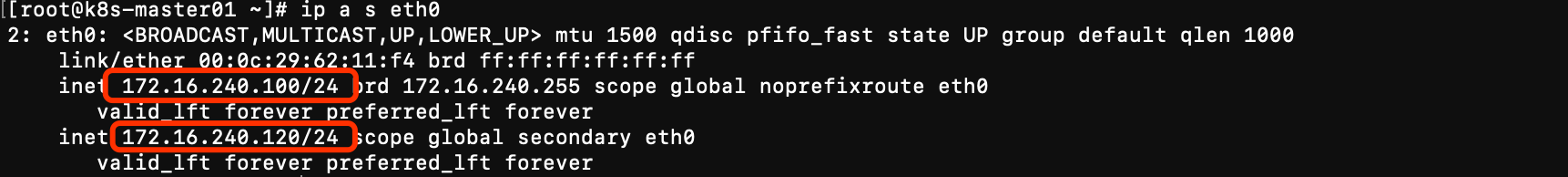

172.16.240.100 k8s-master01

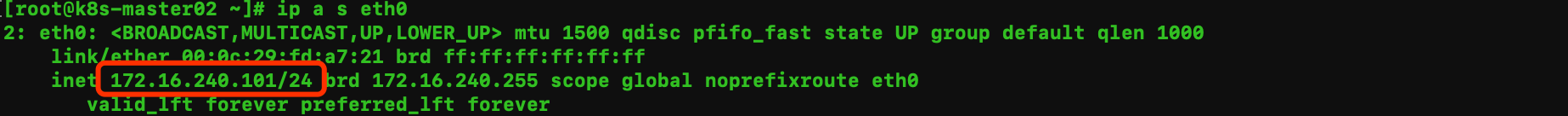

172.16.240.101 k8s-master02

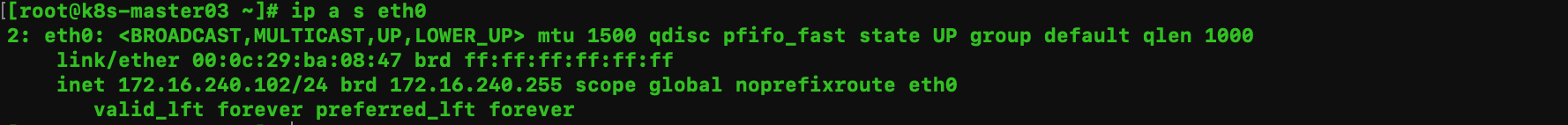

172.16.240.102 k8s-master03

172.16.240.103 k8s-nodetest

172.16.240.120 k8s-vip

172.16.240.200 registry

Set up the hosts file

echo '172.16.240.100 k8s-master01' >> /etc/hosts

echo '172.16.240.101 k8s-master02' >> /etc/hosts

echo '172.16.240.102 k8s-master03' >> /etc/hosts

echo '172.16.240.103 k8s-nodetest' >> /etc/hosts

echo '172.16.240.120 k8s-vip' >> /etc/hosts
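
Running the echo lines above a second time leaves duplicate entries in /etc/hosts. A minimal sketch of a rerunnable variant follows; the helper name add_host_entry is an illustrative assumption and not part of the original steps.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname
# on an uncommented line.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root), with the addresses from this document:
#   add_host_entry /etc/hosts 172.16.240.100 k8s-master01
#   add_host_entry /etc/hosts 172.16.240.120 k8s-vip
```

The check matches the hostname as a whole field, so k8s-master01 does not collide with a longer name that merely contains it.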

Install dependency packages

yum -y install conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat

yum -y install bash-com*

Switch the firewall to iptables and set the rules

systemctl stop firewalld && systemctl disable firewalld

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

Disable SELinux and swap

swapoff -a && sed -i '/swap/ s/^\(.*\)$/#\1/g' /etc/fstab

setenforce 0 && sed -i ' s/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
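
The sed command above comments out every fstab line that mentions swap, and it adds another # each time it runs. As a sketch under the assumption that only lines whose filesystem type is swap should be touched, the hypothetical helper comment_out_swap below does the same job and can be rerun safely.

```bash
#!/usr/bin/env bash
# comment_out_swap FILE (hypothetical helper)
# Prefixes '#' to every uncommented line whose third field (the
# filesystem type in fstab) is "swap". Rerunning it changes nothing.
comment_out_swap() {
    local fstab="$1" tmp
    tmp="$(mktemp)" || return 1
    if awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
            "$fstab" > "$tmp"; then
        cat "$tmp" > "$fstab"   # overwrite in place, keeping owner and mode
    fi
    rm -f "$tmp"
}

# Usage (as root):
#   swapoff -a && comment_out_swap /etc/fstab
```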

Tune kernel parameters

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

sysctl -p /etc/sysctl.d/kubernetes.conf
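
It can help to confirm that the values were really applied. The net.bridge.* keys, for example, exist only once the br_netfilter module is loaded, which this document does in a later step. The sketch below compares a sysctl file with the live values under /proc/sys; the function name check_sysctl_conf and its optional second argument are assumptions made for illustration.

```bash
#!/usr/bin/env bash
# check_sysctl_conf CONF [ROOT] (hypothetical helper)
# For every key=value line in CONF, reads the live value from
# ROOT/<key with dots turned into slashes> (ROOT defaults to /proc/sys)
# and reports each mismatch. Returns non-zero if any key differs.
check_sysctl_conf() {
    local conf="$1" root="${2:-/proc/sys}" key want have path rc=0
    while IFS='=' read -r key want || [ -n "$key" ]; do
        key="${key//[[:space:]]/}"
        want="${want//[[:space:]]/}"
        case "$key" in ''|\#*|\;*) continue ;; esac   # skip blanks and comments
        path="$root/${key//.//}"
        have=""
        [ -r "$path" ] && have="$(tr -d '[:space:]' < "$path")"
        if [ "$have" != "$want" ]; then
            printf '%s: expected %s, got %s\n' "$key" "$want" "${have:-missing}"
            rc=1
        fi
    done < "$conf"
    return "$rc"
}

# Usage:
#   check_sysctl_conf /etc/sysctl.d/kubernetes.conf && echo "all values applied"
```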

Set the system time zone

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

Restart services that depend on the system time

systemctl restart rsyslog.service

systemctl restart crond.service

Stop services the system does not need

systemctl stop postfix.service && systemctl disable postfix.service

Configure rsyslogd and systemd journald

mkdir /var/log/journal

mkdir /etc/systemd/journald.conf.d

cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

# Persist logs to disk

Storage=persistent

# Compress historical logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Maximum disk usage: 10G

SystemMaxUse=10G

# Maximum size of a single log file: 200M

SystemMaxFileSize=200M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

systemctl restart systemd-journald.service

Prerequisites for enabling IPVS in kube-proxy

modprobe br_netfilter

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod |grep -e ip_vs -e nf_conntrack_ipv4
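
The lsmod | grep check above needs a human to read the output. As a sketch, the hypothetical helper missing_modules below lists exactly which required modules are absent, so the check can be scripted. It assumes the modules are built as loadable modules (compiled-in features do not show up in /proc/modules).

```bash
#!/usr/bin/env bash
# missing_modules FILE MODULE... (hypothetical helper)
# Prints each MODULE that is not listed in FILE, which uses the
# /proc/modules layout (module name in the first column).
# Returns non-zero if at least one module is missing.
missing_modules() {
    local file="$1" mod rc=0
    shift
    for mod in "$@"; do
        if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$file"; then
            echo "$mod"
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
#   missing_modules /proc/modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4
```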

Install Docker

yum -y install yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum update -y

yum -y install docker-ce-18.06.3.ce

Configure the daemon

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": [

"https://1nj0zren.mirror.aliyuncs.com",

"https://docker.mirrors.ustc.edu.cn",

"http://f1361db2.m.daocloud.io",

"https://registry.docker-cn.com"

],

"insecure-registries" : [ "172.16.240.200:5000", "172.16.240.110:8999" ],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {"max-size": "100m"}

}

EOF

systemctl start docker

systemctl enable docker

Upgrade the kernel

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum --enablerepo=elrepo-kernel install -y kernel-lt

grub2-set-default 'CentOS Linux (4.4.214-1.el7.elrepo.x86_64) 7 (Core)'

Disable NUMA

cp /etc/default/grub{,.bak}

sed -i 's#GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap net.ifnames=0 rhgb quiet"#GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap net.ifnames=0 rhgb quiet numa=off"#g' /etc/default/grub

Back up the default configuration file and generate a new grub configuration file

cp /boot/grub2/grub.cfg{,.bak}

grub2-mkconfig -o /boot/grub2/grub.cfg

Download the high-availability images

wise2c/keepalived-k8s:latest

wise2c/haproxy-k8s:latest

Configure keepalived

Reference: https://www.cnblogs.com/keep-live/p/11543871.html

- Install keepalived

yum install -y keepalived

- Back up the keepalived configuration file

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf-back

- Edit the keepalived configuration file

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.240.120/24

}

}

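Notes:

- In global_defs, keep only router_id (it must differ on every node);

- Change interface (the NIC the VIP binds to) and virtual_ipaddress (the VIP address and prefix length);

- Delete the sample sections that follow;

- On the other nodes, only change state to BACKUP and set priority below 100.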
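
Since the nodes differ only in router_id, state and priority, the file can be generated rather than edited by hand. The sketch below prints a configuration with the same settings as the one shown above; the function name render_keepalived_conf and the example router_id and priority values are assumptions for illustration.

```bash
#!/usr/bin/env bash
# render_keepalived_conf ROUTER_ID STATE PRIORITY [INTERFACE] [VIP_CIDR]
# (hypothetical helper) Prints a keepalived.conf with the same settings
# as the configuration in this document.
render_keepalived_conf() {
    local router_id="$1" state="$2" priority="$3"
    local interface="${4:-eth0}" vip="${5:-172.16.240.120/24}"
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id ${router_id}
}
vrrp_instance VI_1 {
    state ${state}
    interface ${interface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}

# Usage on the second master (as root); router_id and priority are
# example values:
#   render_keepalived_conf LVS_MASTER02 BACKUP 90 > /etc/keepalived/keepalived.conf
```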

- Start the service

systemctl start keepalived && systemctl enable keepalived && systemctl status keepalived

- Check the status

ip a s eth0

172.16.240.100 k8s-master01

172.16.240.101 k8s-master02

172.16.240.102 k8s-master03

Conclusion: the VIP is held by only one machine at a time; if two machines both hold the VIP, a firewall is probably blocking VRRP traffic.

Configure haproxy

- Pull the image onto the registry host (172.16.240.200 registry), then pull it from that registry on the three masters

On 172.16.240.200 registry:

docker pull haproxy:2.1.3

docker tag haproxy:2.1.3 172.16.240.200:5000/haproxy:2.1.3

docker push 172.16.240.200:5000/haproxy:2.1.3

On 172.16.240.100 k8s-master01, 172.16.240.101 k8s-master02 and 172.16.240.102 k8s-master03:

docker pull 172.16.240.200:5000/haproxy:2.1.3

- Prepare the haproxy configuration file

haproxy.cfg

global

log 127.0.0.1 local0

log 127.0.0.1 local1 notice

maxconn 4096

#chroot /usr/share/haproxy

#user haproxy

#group haproxy

daemon

defaults

log global

mode http

option httplog

option dontlognull

retries 3

option redispatch

timeout connect 5000

timeout client 50000

timeout server 50000

frontend stats-front

bind *:8081

mode http

default_backend stats-back

frontend fe_k8s_6444

bind *:6444

mode tcp

timeout client 1h

log global

option tcplog

default_backend be_k8s_6443

acl is_websocket hdr(Upgrade) -i WebSocket

acl is_websocket hdr_beg(Host) -i ws

backend stats-back

mode http

balance roundrobin

stats uri /haproxy/stats

stats auth pxcstats:secret

backend be_k8s_6443

mode tcp

timeout queue 1h

timeout server 1h

timeout connect 1h

log global

balance roundrobin

server k8s-master01 172.16.240.100:6443

server k8s-master02 172.16.240.101:6443

server k8s-master03 172.16.240.102:6443

- Start the container

docker run -d --restart=always --name haproxy-k8s -p 6444:6444 -e MasterIP1=172.16.240.100 -e MasterIP2=172.16.240.101 -e MasterIP3=172.16.240.102 -v /root/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg 172.16.240.200:5000/haproxy:2.1.3

- Install kubeadm, kubectl and kubelet

cat <<EOF >/etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum -y install kubeadm-1.17.4 kubectl-1.17.4 kubelet-1.17.4

systemctl enable kubelet

Initialize the Kubernetes master node

kubeadm config print init-defaults > kubeadm-config.yaml

Edit kubeadm-config.yaml so that it reads:

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.17.3

imageRepository: 172.16.240.200:5000

controlPlaneEndpoint: 172.16.240.120:6444

apiServer:

  timeoutForControlPlane: 4m0s

  certSANs:

  - 172.16.240.100

  - 172.16.240.101

  - 172.16.240.102

networking:

  serviceSubnet: 192.168.0.0/16

  podSubnet: "10.244.0.0/16"

scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

featureGates:

  SupportIPVSProxyMode: true

mode: ipvs

Notes:

- controlPlaneEndpoint takes the VIP address; its port must match the bind port defined under frontend fe_k8s_6444 in the haproxy configuration

- certSANs lists the IP addresses of all masters
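
The port requirement in the first note is easy to break when either file is edited later. As a sketch, the two hypothetical helpers below extract the port from each file so the two values can be compared before running kubeadm init. They assume the simple one-line layouts used in this document.

```bash
#!/usr/bin/env bash
# endpoint_port FILE (hypothetical helper)
# Prints the port of controlPlaneEndpoint from a kubeadm config file.
endpoint_port() {
    sed -n 's/^controlPlaneEndpoint:.*:\([0-9][0-9]*\)"*[[:space:]]*$/\1/p' "$1" | head -n 1
}

# frontend_bind_port FILE NAME (hypothetical helper)
# Prints the port of the first "bind" line inside the haproxy section
# "frontend NAME".
frontend_bind_port() {
    awk -v name="$2" '
        $1 == "frontend" { inside = ($2 == name); next }
        $1 ~ /^(global|defaults|backend|listen)$/ { inside = 0 }
        inside && $1 == "bind" { n = split($2, parts, ":"); print parts[n]; exit }
    ' "$1"
}

# Usage:
#   [ "$(endpoint_port kubeadm-config.yaml)" = "$(frontend_bind_port /root/haproxy.cfg fe_k8s_6444)" ] \
#       && echo "ports match" || echo "port mismatch"
```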

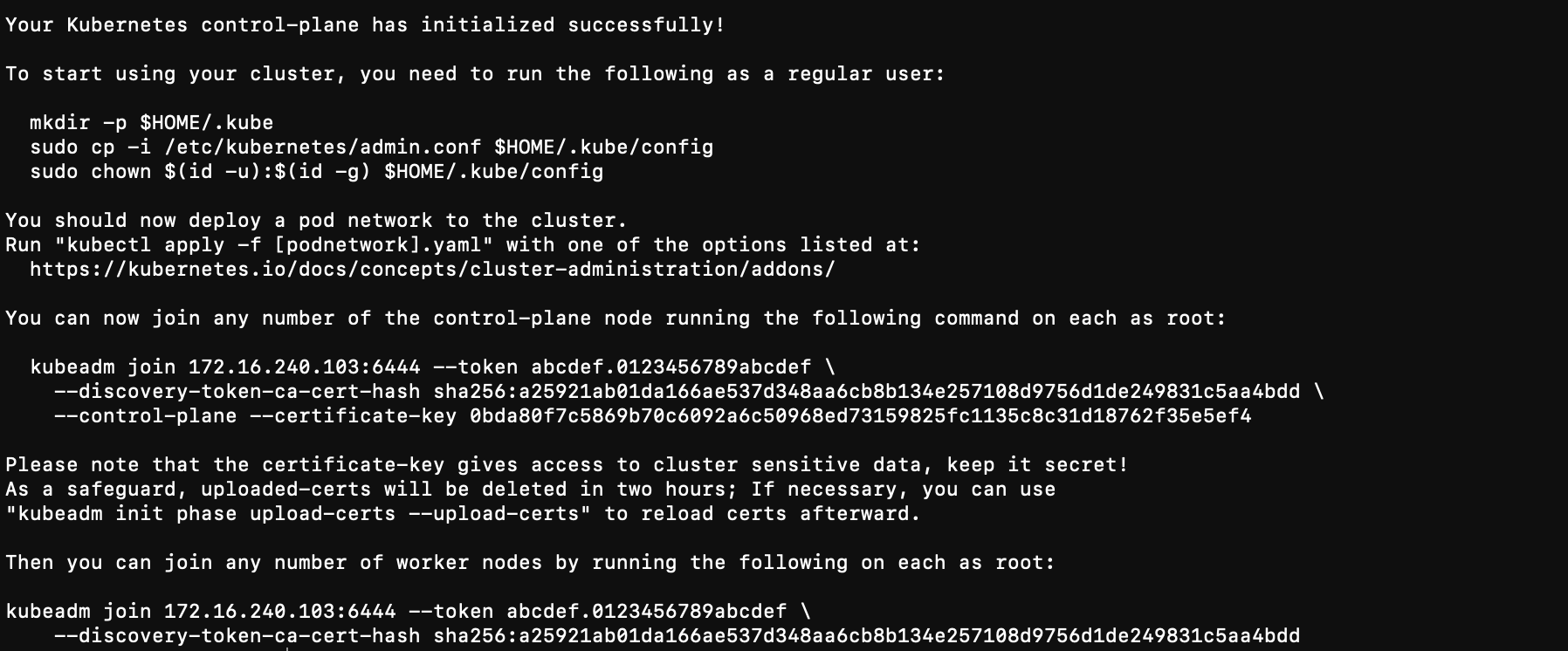

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Check the server IP

- Join the other master nodes

kubeadm join 172.16.240.120:6444 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:a25921ab01da166ae537d348aa6cb8b134e257108d9756d1de249831c5aa4bdd --control-plane --certificate-key 0bda80f7c5869b70c6092a6c50968ed73159825fc1135c8c31d18762f35e5ef4

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
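
If kubeadm-init.log is lost, the --discovery-token-ca-cert-hash value in the join command can be recomputed from the cluster CA certificate. The sketch below wraps the openssl pipeline given in the Kubernetes documentation; the function name ca_cert_hash is an assumption, and the pipeline assumes an RSA CA key, which is what kubeadm generates by default.

```bash
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT] (hypothetical helper)
# Prints "sha256:<hex>", the SHA-256 digest of the DER-encoded public
# key of CA_CERT (default: the kubeadm CA on a master node).
ca_cert_hash() {
    local cert="${1:-/etc/kubernetes/pki/ca.crt}" hex
    # Refuse anything that is not a readable certificate.
    openssl x509 -noout -in "$cert" 2>/dev/null || return 1
    hex="$(openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')"
    [ -n "$hex" ] && printf 'sha256:%s\n' "$hex"
}

# Usage on k8s-master01:
#   ca_cert_hash
# A fresh token together with a complete join command can also be
# printed with:
#   kubeadm token create --print-join-command
```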

- Check from 172.16.240.100 k8s-master01

kubectl get node

Deploy the network

Until a network plugin is deployed, every node stays NotReady.

Deploy it on 172.16.240.100 k8s-master01

wget https://docs.projectcalico.org/v3.10/manifests/calico.yaml

kubectl create -f calico.yaml

- Check node status

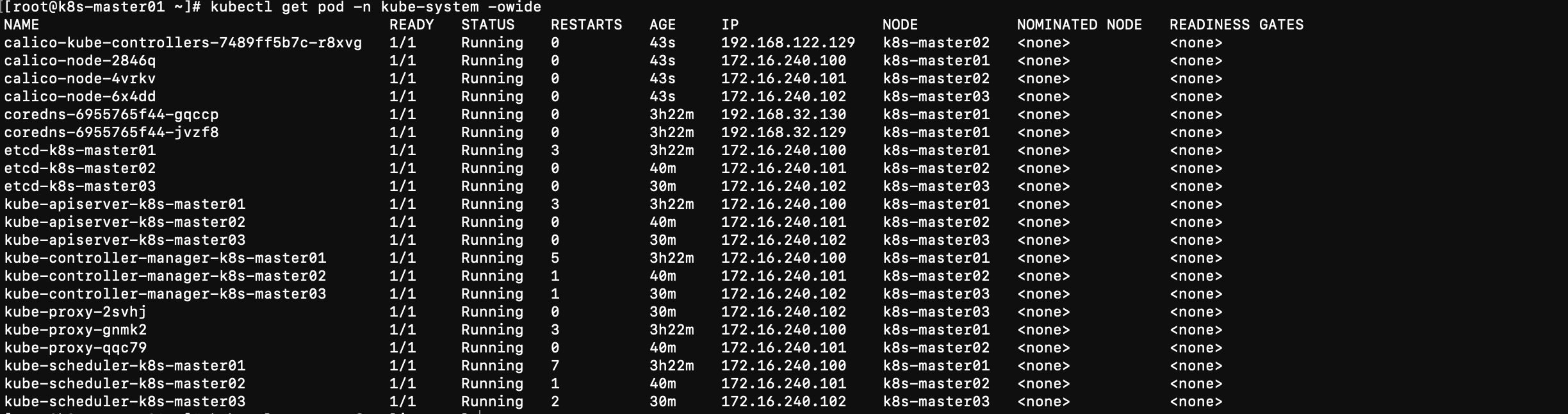

kubectl get pod -n kube-system -owide
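
Nodes take a little while to leave NotReady after the network plugin starts. As a sketch, the hypothetical filter not_ready_nodes below picks out the nodes that are still not ready from the kubectl get node output, which is handy in a script that waits for the cluster.

```bash
#!/usr/bin/env bash
# not_ready_nodes (hypothetical helper)
# Reads `kubectl get node --no-headers` output on stdin and prints the
# name of every node whose STATUS does not start with "Ready"
# (so "Ready,SchedulingDisabled" still counts as ready).
not_ready_nodes() {
    awk 'NF >= 2 && $2 !~ /^Ready/ { print $1 }'
}

# Usage:
#   kubectl get node --no-headers | not_ready_nodes
```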

部署dashboard

文档:https://www.jianshu.com/p/40c0405811ee

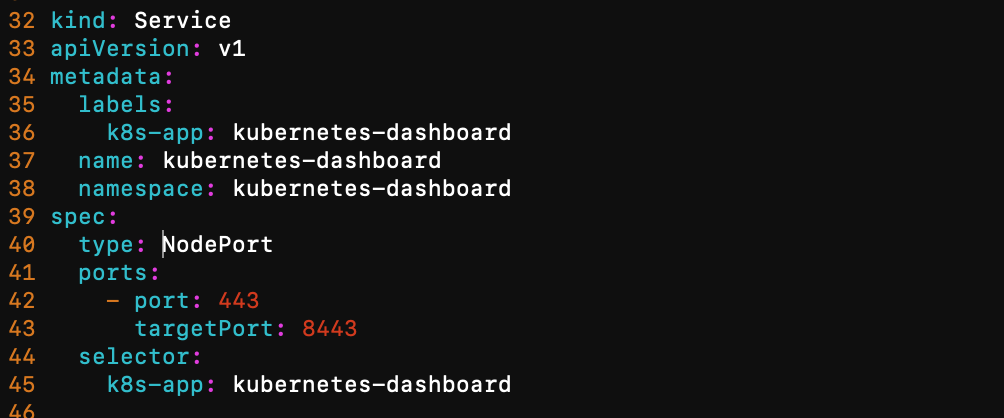

nodeport方式

将recommended.yaml 中镜像的地址改为本地镜像的地址

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc6/aio/deploy/recommended.yaml

mv recommended.yaml dashboard.yaml

修改yaml文件

- 将service type字段设置为nodeport

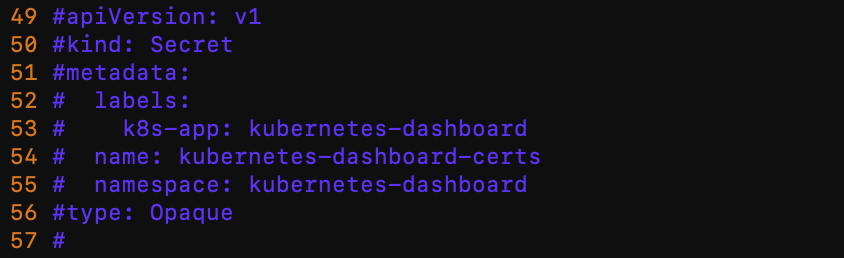

- 注释掉Dashboard Secret ,不然后面访问显示网页不安全,证书过期,我们自己生成证书

- 将镜像修改为镜像仓库地址

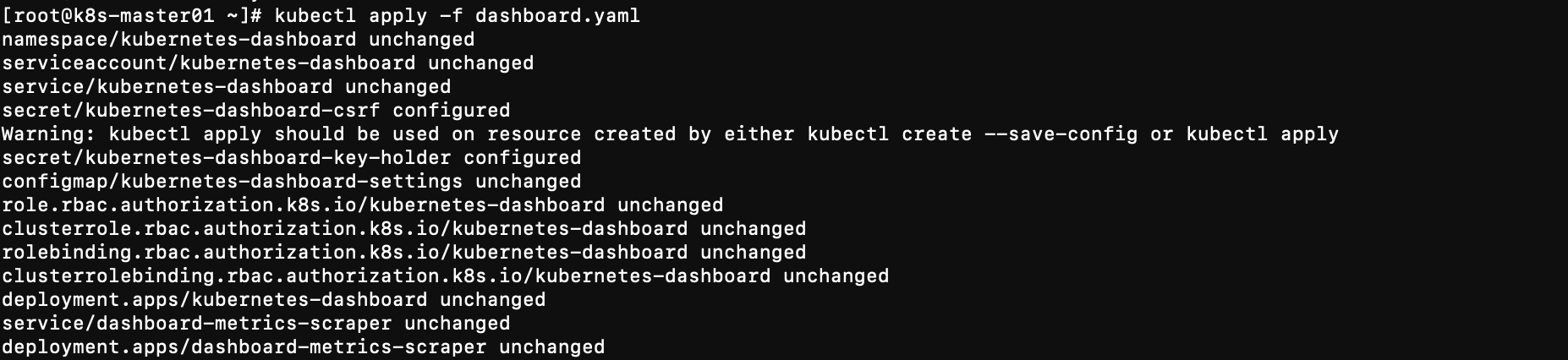

kubectl apply -f dashboard.yaml

Create the permission files

- admin-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kube-system

- admin-user-role-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kube-system

kubectl create -f admin-user.yaml

kubectl create -f admin-user-role-binding.yaml

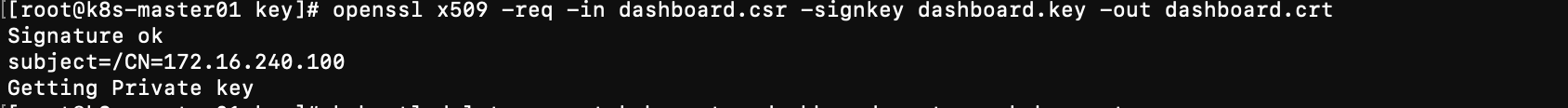

Generate the certificate

mkdir key && cd key

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=172.16.240.100'

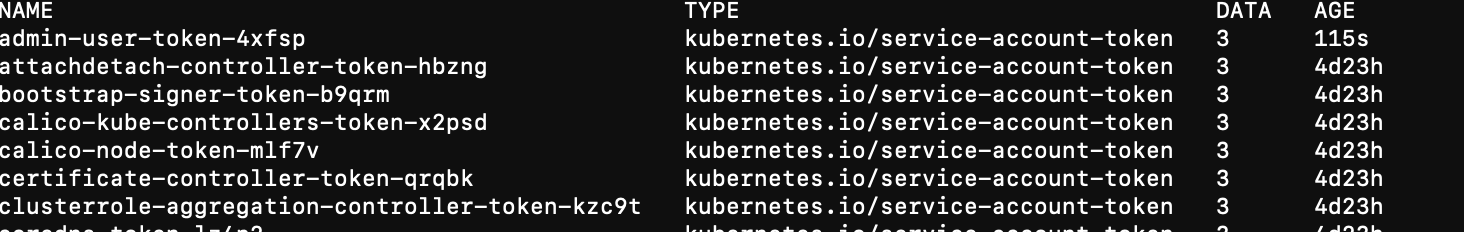

- View the secret

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt
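
According to the OpenSSL documentation, openssl x509 -req falls back to a 30-day validity when -days is omitted, so the certificate signed above expires after a month. The sketch below runs the same three steps with an explicit lifetime; the function name make_dashboard_cert and the 365-day default are assumptions for illustration.

```bash
#!/usr/bin/env bash
# make_dashboard_cert DIR CN [DAYS] (hypothetical helper)
# Writes dashboard.key, dashboard.csr and a self-signed dashboard.crt
# into DIR. DAYS defaults to 365.
make_dashboard_cert() {
    local dir="$1" cn="$2" days="${3:-365}"
    mkdir -p "$dir" || return 1
    openssl genrsa -out "$dir/dashboard.key" 2048 2>/dev/null || return 1
    openssl req -new -key "$dir/dashboard.key" -out "$dir/dashboard.csr" \
        -subj "/CN=${cn}" || return 1
    openssl x509 -req -days "$days" -in "$dir/dashboard.csr" \
        -signkey "$dir/dashboard.key" -out "$dir/dashboard.crt" 2>/dev/null || return 1
}

# Usage:
#   make_dashboard_cert key 172.16.240.100 365
#   openssl x509 -noout -dates -in key/dashboard.crt   # show the validity period
```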

- Delete the default secret

kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard

- Create the new secret

The secret must be created in the kubernetes-dashboard namespace. The dashboard runs in that namespace, so it will not start if the secret lives anywhere else.

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

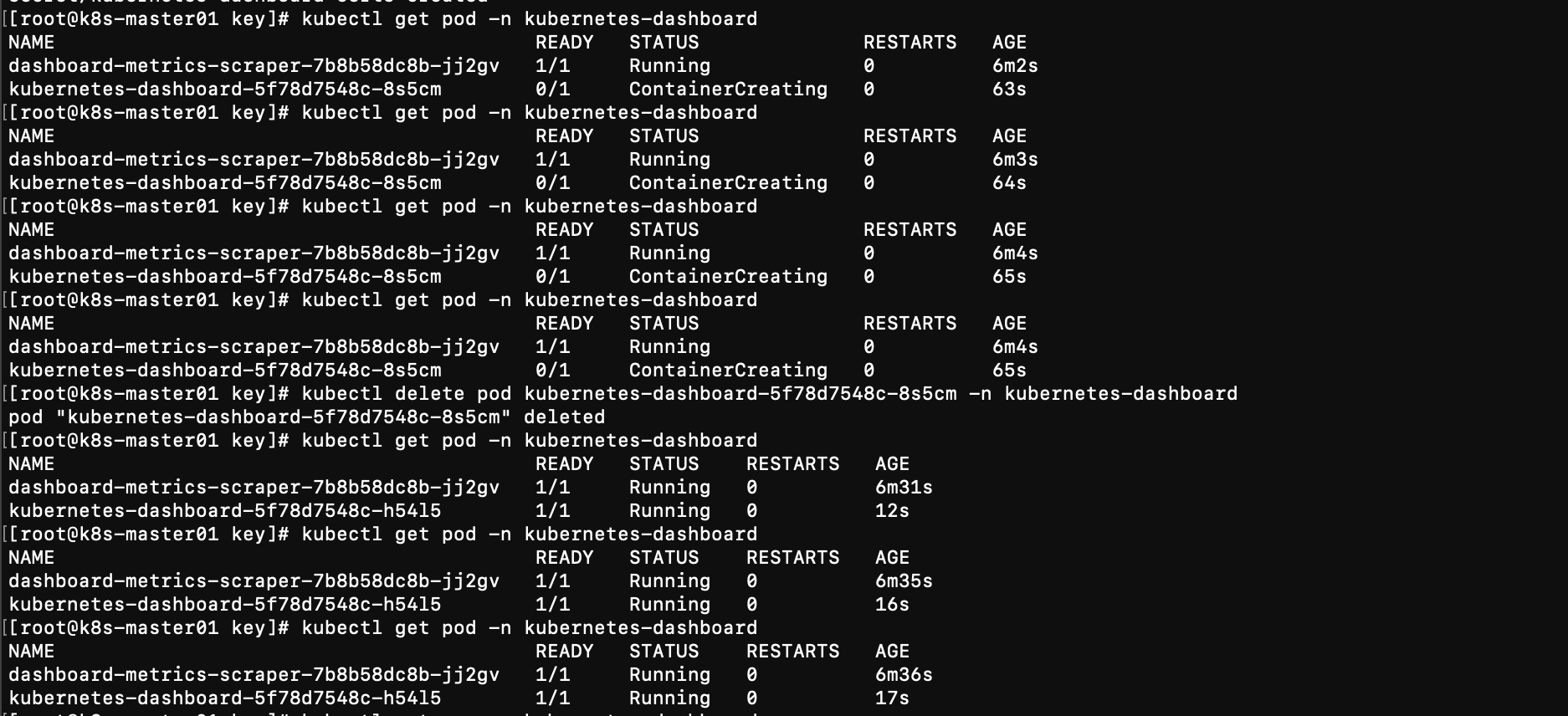

- Delete the dashboard pod, which effectively restarts the dashboard, then check again that it has started

Log in to the dashboard from a browser

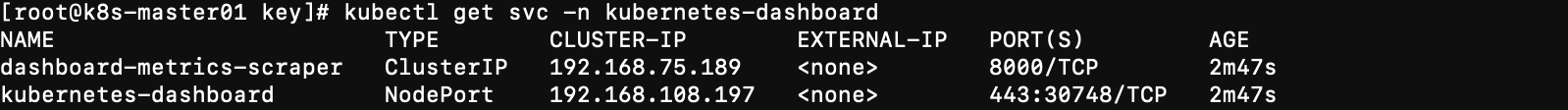

- Check the port the dashboard exposes

kubectl get svc -n kubernetes-dashboard

- Open https://172.16.240.100:30748/ in a browser. If the page appears, the dashboard is working.

- Get the token

kubectl -n kube-system get secret

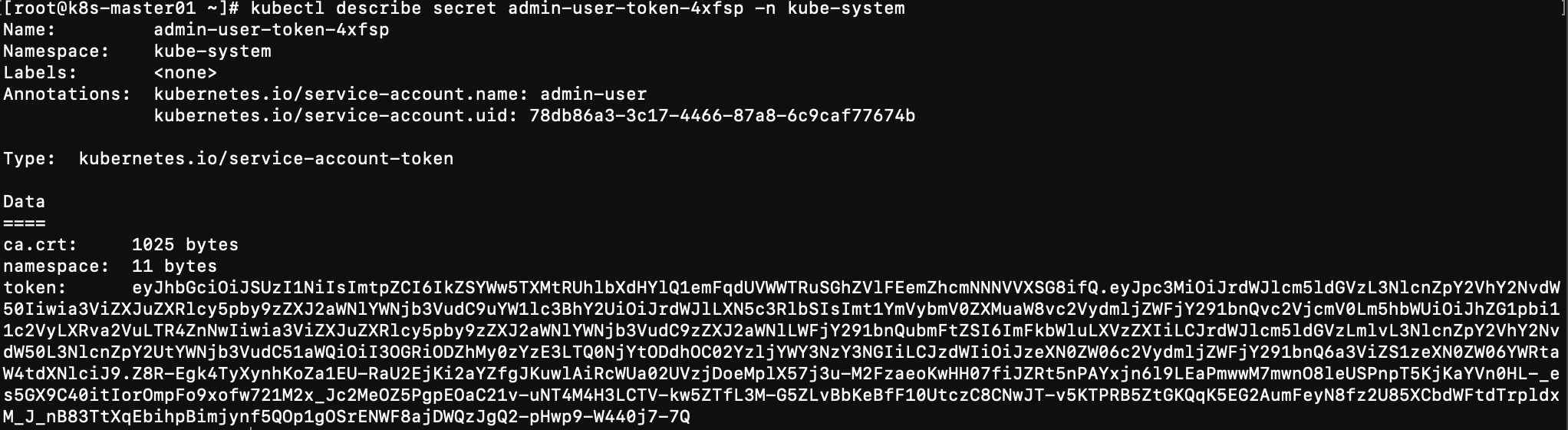

kubectl describe secret admin-user-token-4xfsp -n kube-system
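
The suffix of the token secret (4xfsp above) is random and differs on every cluster. As a sketch, the hypothetical filter first_token_secret below finds the secret name from the kubectl get secret output, so the describe step does not depend on a copied name.

```bash
#!/usr/bin/env bash
# first_token_secret PREFIX (hypothetical helper)
# Reads `kubectl get secret` output on stdin and prints the first
# secret whose name starts with "PREFIX-token-".
first_token_secret() {
    awk -v p="$1-token-" 'index($1, p) == 1 { print $1; exit }'
}

# Usage:
#   name="$(kubectl -n kube-system get secret | first_token_secret admin-user)"
#   kubectl -n kube-system describe secret "$name"
```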

Some useful k8s commands

- Check the health of the etcd member on the current node

kubectl -n kube-system exec etcd-k8s-master01 -- etcdctl --endpoints=https://172.16.240.100:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key endpoint health

- See which node currently holds the leader role

kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

kubectl get endpoints kube-scheduler -n kube-system -o yaml

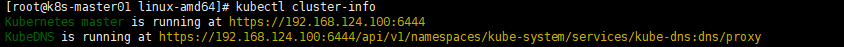

- View cluster information

kubectl cluster-info

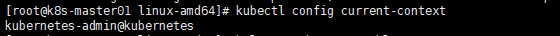

- View the current context (the credentials in use)

kubectl config current-context

- List the cluster roles

kubectl get clusterrole

- Delete a ServiceAccount

kubectl delete serviceaccount -n kube-system tiller

- Delete a ClusterRoleBinding (cluster-scoped, so no namespace is given)

kubectl delete clusterrolebinding tiller