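Assignment ①

1) Seven-day weather forecast scraping experiment

Requirement: Scrape the 7-day weather forecast for a given set of cities from the China Weather website (http://www.weather.com.cn) and save it to a database.

Code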

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import urllib.request

import sqlite3

class WeatherDB:

def openDB(self):

self.con = sqlite3.connect("weathers.db")

self.cursor = self.con.cursor()

try:

self.cursor.execute(

"create table weathers (wCity varchar(16),wDate varchar(16),wWeather varchar(64),wTemp varchar(32),constraint pk_weather primary key (wCity,wDate))")

except:

self.cursor.execute("delete from weathers")

def closeDB(self):

self.con.commit()

self.con.close()

def insert(self, city, date, weather, temp):

try:

self.cursor.execute("insert into weathers (wCity,wDate,wWeather,wTemp) values(?,?,?,?)",

(city, date, weather, temp))

except Exception as err:

print(err)

def show(self):

self.cursor.execute("select * from weathers")

rows = self.cursor.fetchall()

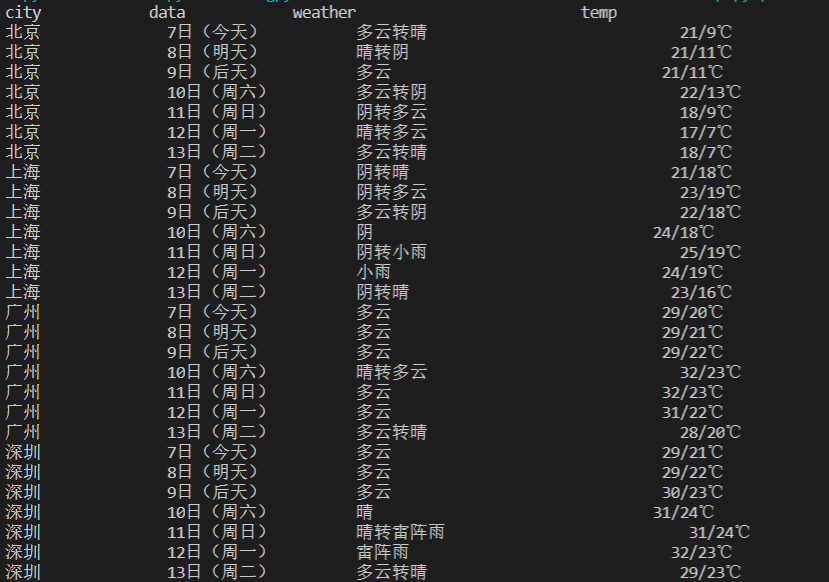

print("%-16s%-16s%-32s%-16s" % ("city", "data", "weather", "temp"))

for row in rows:

print("%-16s%-16s%-32s%-16s" % (row[0], row[1], row[2], row[3]))

class WeatherForecast:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US;rv:1.9pre)Gecko/2008072421 Minefield/3.0.2pre"}

self.cityCode = {"北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

def forecastCity(self, city):

if city not in self.cityCode.keys():

print(city + "code cannot be found")

return

url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"

try:

req = urllib.request.Request(url, headers=self.headers)

data = urllib.request.urlopen(req)

data = data.read()

dammit = UnicodeDammit(data, ["utf-8", "gbk"])

data = dammit.unicode_markup

soup = BeautifulSoup(data, "lxml")

lis = soup.select("ul[class='t clearfix'] li")

for li in lis:

try:

date = li.select('h1')[0].text

weather = li.select('p[class="wea"]')[0].text

temp = li.select('p[class="tem"] span')[0].text + "/" + li.select('p[class="tem"] i')[0].text

self.db.insert(city, date, weather, temp)

except Exception as err:

print(err)

except Exception as err:

print(err)

def process(self, cities):

self.db = WeatherDB()

self.db.openDB()

for city in cities:

self.forecastCity(city)

self.db.show()

self.db.closeDB()

ws = WeatherForecast()

ws.process(["北京", "上海", "广州", "深圳"])

运行结果

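2) Reflections

This experiment was mainly a reproduction of the textbook code. It gave me more practice with the BeautifulSoup library and some understanding of sqlite3. There still seems to be a bug, though: when I scrape in the evening, the current day's weather cannot be retrieved, and I have not figured out why.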
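One possible explanation for the evening failure, offered as an assumption rather than something verified here: if the page omits the `span` that holds the daytime high once the day is over, `li.select('p[class="tem"] span')[0]` raises an IndexError and that day's row is skipped. A minimal sketch of a more tolerant join follows. `build_temp` is a hypothetical helper, not part of the textbook code, and it takes plain text lists so it can be tried without fetching the page.

```python
def build_temp(high_texts, low_texts):
    """Join high/low temperature texts, tolerating a missing high value.

    high_texts: texts of the 'p[class="tem"] span' matches (may be empty)
    low_texts:  texts of the 'p[class="tem"] i' matches (may be empty)
    """
    low = low_texts[0] if low_texts else ""
    if high_texts:
        return high_texts[0] + "/" + low
    # Assumption: in the evening the page has no <span> for today's high,
    # so only the low temperature is kept.
    return low


# Inside the scraping loop this could be called roughly as:
#   spans = [s.text for s in li.select('p[class="tem"] span')]
#   lows = [i.text for i in li.select('p[class="tem"] i')]
#   temp = build_temp(spans, lows)
print(build_temp(["25"], ["18℃"]))
print(build_temp([], ["18℃"]))
```

With this in place a row for the current day would still be inserted, only without the high temperature.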

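Assignment ②

Requirement: Use the requests and BeautifulSoup libraries to scrape stock information from a chosen site.

Candidate sites: Eastmoney: https://www.eastmoney.com/

Sina Finance stocks: http://finance.sina.com.cn/stock/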

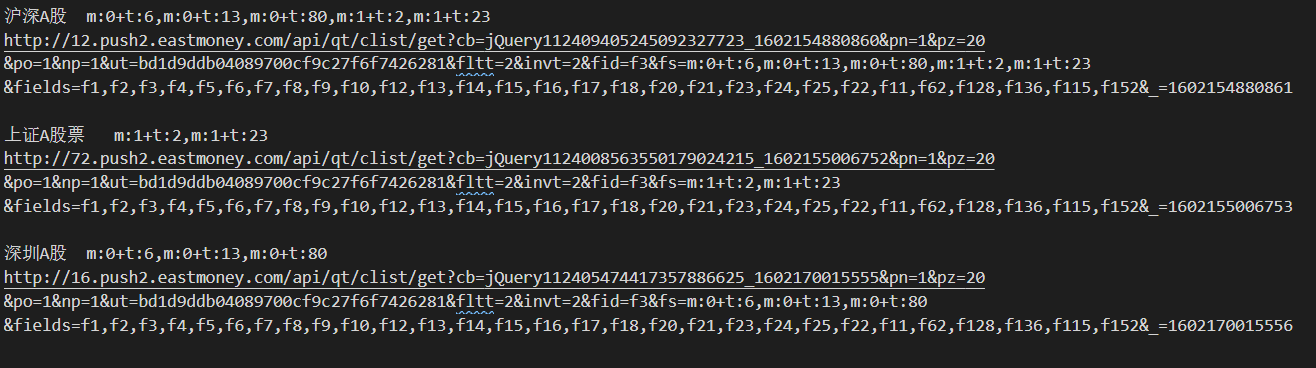

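Tip: In Chrome, open the F12 developer tools and capture the network traffic to find the URL that loads the stock list. Inspect the values the API returns and adjust the request parameters as needed. In the URL, the requested fields f1, f2 and so on each return a different value, and the list of fields can be trimmed to what you need.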
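As a rough illustration of inspecting the returned values: the URL used later in this post carries a `cb=jQuery...` parameter, so the response is presumably JSON wrapped in a callback (JSONP). The sketch below strips the wrapper and decodes the payload with the standard `json` module. `parse_jsonp` is a hypothetical helper, and the sample string is made up to resemble the response shape, not real data.

```python
import json
import re


def parse_jsonp(text):
    """Strip a JSONP wrapper such as cb({...}); and return the decoded JSON."""
    match = re.search(r"^[^(]*\((.*)\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("response does not look like JSONP")
    return json.loads(match.group(1))


# Made-up sample shaped like the API response (assumed layout, not real data).
sample = (
    'jQuery123_456({"data":{"total":2,"diff":['
    '{"f2":10.5,"f12":"000001","f14":"AAA"},'
    '{"f2":20.1,"f12":"000002","f14":"BBB"}]}});'
)
payload = parse_jsonp(sample)
for stock in payload["data"]["diff"]:
    # f12 = stock code, f14 = stock name, f2 = latest price
    print(stock["f12"], stock["f14"], stock["f2"])
```

Reading fields by name this way avoids depending on the position of each field in the response text.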

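Reference: https://zhuanlan.zhihu.com/p/50099084

1) Stock information scraping experiment

Approach: The page is loaded dynamically. I started with the BeautifulSoup methods, but they cannot reach the actual data, so I located the real request URL and extracted the fields with regular expressions.

Thanks to a tip from my very capable roommate, I found that the field that differs between stock lists is fs, while pn is the page number and pz is the number of stocks per page.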
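To make the roles of fs, pn and pz concrete, here is a small sketch that assembles the query string with `urllib.parse.urlencode` instead of string concatenation. `build_list_url` is a hypothetical helper. It leaves out the `cb`, `ut` and `_` parameters from the captured URL, and whether the endpoint still answers without them is an assumption that is not verified here.

```python
from urllib.parse import urlencode

BASE_URL = "http://69.push2.eastmoney.com/api/qt/clist/get"


def build_list_url(fs, pn, pz=20, fields="f2,f12,f14"):
    """Build a stock-list URL for one page of one list.

    fs: list selector, e.g. "m:1+t:2,m:1+t:23"
    pn: page number (starting at 1)
    pz: number of stocks per page
    """
    query = {
        "pn": pn, "pz": pz, "po": 1, "np": 1,
        "fltt": 2, "invt": 2, "fid": "f3",
        "fs": fs, "fields": fields,
    }
    # Keep ':', ',' and '+' unescaped so fs looks like the captured URL.
    return BASE_URL + "?" + urlencode(query, safe=":,+")


for page in range(1, 3):
    print(build_list_url("m:1+t:2,m:1+t:23", page))
```

Only pn changes between the two printed URLs, which matches the observation that pn selects the page.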

Code

import re
import requests

pn = input("Enter the number of pages to scrape: ")
count = 1
headers = {
    "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"
}
fs_list = {  # comparing the URLs of several stock lists shows that fs selects the list; three are used here
    "Shanghai-Shenzhen A-shares": "fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23",
    "Shanghai A-shares": "fs=m:1+t:2,m:1+t:23",
    "Shenzhen A-shares": "fs=m:0+t:6,m:0+t:13,m:0+t:80"
}


def field(parts, index):
    # Take the value after the last colon and strip the quotes (the quote must be escaped here)
    return parts[index].split(":")[-1].replace("\"", "")


tplt = "{0:{13}<5}{1:{13}<5}{2:{13}<5}{3:{13}<5}{4:{13}<5}{5:{13}<5}{6:{13}<5}{7:{13}^10}{8:{13}<5}{9:{13}<5}{10:{13}<5}{11:{13}<5}{12:{13}<5}"
print(tplt.format("No.", "Code", "Name", "Latest", "Change %", "Change", "Volume", "Turnover", "Amplitude", "High", "Low", "Open", "Prev close", chr(12288)))
for key in fs_list:
    print("Scraping data for " + key)
    fs = fs_list[key]
    for i in range(1, int(pn) + 1):
        url = "http://69.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124018833205583945567_1601998353892&pn="+str(i)+"&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&"+str(fs)+"&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1601998353939"
        r = requests.get(url=url, headers=headers)
        page_text = r.text
        reg = r'"diff":\[(.*?)\]'  # extract the block that holds the data we need
        list1 = re.findall(reg, page_text)
        list2 = re.findall(r'\{(.*?)\}', list1[0], re.S)  # match each stock
        for j in list2:
            # Split on commas so the fields can be picked by position
            parts = j.split(",")
            num = field(parts, 11)
            name = field(parts, 13)
            new_price = field(parts, 1)
            crease_range = field(parts, 2) + "%"
            crease_price = field(parts, 3)
            com_num = field(parts, 4)
            com_price = field(parts, 5)
            move_range = field(parts, 6) + "%"
            top = field(parts, 14)
            bottom = field(parts, 15)
            today = field(parts, 16)
            yesterday = field(parts, 17)
            print('%-10s%-10s%-10s%-10s%-10s%-10s%-10s%-10s%10s%10s%10s%10s%10s' % (count, num, name, new_price, crease_range, crease_price, com_num, com_price, move_range, top, bottom, today, yesterday))
            count += 1
        print("==================================================" + key + " page " + str(i) + " printed successfully===============================================")

Results

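2) Reflections

This experiment took quite a lot of time. At first I used select and spent a long time debugging the tag selectors, only to get nothing back once I had located the content I wanted. That was when I realized BeautifulSoup could not be used for this page. Comparing the URLs afterwards also took a while. Overall I feel I learned a lot from this experiment.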

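Assignment ③

Requirement: Select a stock whose code is made up of 3 digits of your own choice plus the last 3 digits of your student ID, and retrieve and print its information. The packet-capture method is the same as in Assignment ②.

Candidate sites: Eastmoney: https://www.eastmoney.com/

Sina Finance stocks: http://finance.sina.com.cn/stock/

1) Stock information scraping experiment

Approach: With Assignment ② as groundwork, this one was fairly easy. A stock with the self-chosen 3 digits does not necessarily exist, so I iterate over the list and break as soon as a stock code whose last three digits match is found. In this experiment I only iterated over the Shanghai and Shenzhen A-shares. A match turned up quickly, so I did not go through all the stocks.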
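The "stop at the first match" idea can also be written as a function that returns as soon as it finds a hit, which removes the need for a flag variable and two break statements. This is only a sketch: `find_by_suffix` is a hypothetical helper, and the pages below are made-up stand-ins for the API results.

```python
def find_by_suffix(pages, suffix):
    """Return the first (code, name) whose code ends with suffix, else None.

    pages is any iterable that yields one list of (code, name) pairs per
    result page; iteration stops as soon as a match is found.
    """
    for page in pages:
        for code, name in page:
            if code.endswith(suffix):
                return code, name
    return None


# Made-up pages standing in for the scraped results (not real stock data).
fake_pages = [
    [("600001", "AAA"), ("000002", "BBB")],
    [("300106", "CCC"), ("600106", "DDD")],
]
print(find_by_suffix(fake_pages, "106"))
print(find_by_suffix(fake_pages, "999"))
```

If `pages` is a generator that fetches one page per iteration, later pages are never requested once a match is returned.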

Code

import re
import requests

flag = 0
headers = {
    "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"
}


def field(parts, index):
    # Take the value after the last colon and strip the quotes (the quote must be escaped here)
    return parts[index].split(":")[-1].replace("\"", "")


print("Stock code", "Stock name", "Open", "High", "Low")
for i in range(1, 211):
    url = "http://69.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124018833205583945567_1601998353892&pn="+str(i)+"&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1601998353939"
    r = requests.get(url=url, headers=headers)
    page_text = r.text
    reg = r'"diff":\[(.*?)\]'  # extract the block that holds the data we need
    list1 = re.findall(reg, page_text)
    list2 = re.findall(r'\{(.*?)\}', list1[0], re.S)  # match each stock
    for j in list2:
        # Split on commas so the fields can be picked by position
        parts = j.split(",")
        num = field(parts, 11)
        name = field(parts, 13)
        top = field(parts, 14)
        bottom = field(parts, 15)
        today = field(parts, 16)
        # Stop at the first stock code ending in the chosen three digits
        if num.endswith("106"):
            print(num, name, today, top, bottom)
            flag = 1
            break
    if flag == 1:
        print("The specified stock has been found!")
        break
if flag == 0:
    print("The specified stock was not found")

Results

2) Reflections

Hmm, I suspect I may have misunderstood what the instructor was asking for here...