Environment

Three machines running CentOS 7.4:

hanyu-210 10.20.0.210

hanyu-211 10.20.0.211

hanyu-212 10.20.0.212

Prerequisites:

A Kubernetes cluster is already running (1 master and 2 worker nodes).
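Every command below addresses the nodes by hostname, so each machine has to resolve hanyu-210, hanyu-211 and hanyu-212. The original does not say how name resolution is set up. Assuming there is no DNS for these names, one option is to append them to /etc/hosts on all three nodes. A minimal sketch (hosts_entries is a made-up helper name):

```shell
#!/bin/sh
# Print /etc/hosts lines for the three nodes listed in the environment section.
# hosts_entries is a hypothetical helper, not part of any tool.
hosts_entries() {
  cat <<'EOF'
10.20.0.210 hanyu-210
10.20.0.211 hanyu-211
10.20.0.212 hanyu-212
EOF
}

# Usage (as root, on every node; skip names that are already present):
#   hosts_entries >> /etc/hosts
#   getent hosts hanyu-211   # should resolve to 10.20.0.211
```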

1. Set up the GlusterFS cluster (run on all three nodes unless stated otherwise)

Install the packages:

[root@hanyu-210 k8s_glusterfs]# yum install centos-release-gluster

[root@hanyu-210 k8s_glusterfs]# yum install -y glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

Configure the GlusterFS cluster: start glusterd and enable it at boot

[root@hanyu-210 k8s_glusterfs]# systemctl start glusterd.service

[root@hanyu-210 k8s_glusterfs]# systemctl enable glusterd.service

Run on hanyu-210 only:

[root@hanyu-210 k8s_glusterfs]# gluster peer probe hanyu-210

[root@hanyu-210 k8s_glusterfs]# gluster peer probe hanyu-211

[root@hanyu-210 k8s_glusterfs]# gluster peer probe hanyu-212
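After probing, gluster peer status on hanyu-210 should list the other two nodes as connected. The sketch below counts connected peers. It assumes the usual "State: Peer in Cluster (Connected)" line in that command's output, and connected_peers is an illustrative name only.

```shell
#!/bin/sh
# Count peers reported as connected in `gluster peer status` output (stdin).
# Assumes each healthy peer shows "State: Peer in Cluster (Connected)".
connected_peers() {
  grep -c 'State: Peer in Cluster (Connected)'
}

# Usage on hanyu-210; with three nodes, 2 is expected because the local
# node is not listed as its own peer:
#   gluster peer status | connected_peers
```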

Create the data directory (on every node, since each one hosts a brick)

[root@hanyu-210 k8s_glusterfs]# mkdir -p /opt/gfs_data

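Create the replicated volume (run once, from hanyu-210; the trailing force overrides GlusterFS's brick placement checks, such as the warning about bricks on the root partition)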

[root@hanyu-210 k8s_glusterfs]# gluster volume create k8s-volume replica 3 hanyu-210:/opt/gfs_data hanyu-211:/opt/gfs_data hanyu-212:/opt/gfs_data force

Start the volume

[root@hanyu-210 k8s_glusterfs]# gluster volume start k8s-volume

Check the volume status

[root@hanyu-210 k8s_glusterfs]# gluster volume status

Status of volume: k8s-volume
Gluster process                             TCP Port  RDMA Port  Online  Pid
------------------------------------------------------------------------------
Brick hanyu-210:/opt/gfs_data               49152     0          Y       29445
Brick hanyu-212:/opt/gfs_data               49152     0          Y       32098
Self-heal Daemon on localhost               N/A       N/A        Y       29466
Self-heal Daemon on hanyu-212               N/A       N/A        Y       32119

Task Status of Volume k8s-volume
------------------------------------------------------------------------------
There are no active volume tasks
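The status listing above shows bricks on hanyu-210 and hanyu-212 only, so it is worth comparing the number of online bricks with the replica count of 3. A rough sketch that reads gluster volume status output and counts Brick rows whose Online column is Y. It assumes the single-line row layout shown above (Online is the second-to-last field), and online_bricks is a hypothetical name.

```shell
#!/bin/sh
# Count bricks whose Online column is "Y" in `gluster volume status` output.
# Assumes each brick is printed on one line, with Online as the
# second-to-last field (as in the listing above).
online_bricks() {
  awk '$1 == "Brick" && $(NF-1) == "Y" { n++ } END { print n + 0 }'
}

# Usage:
#   [ "$(gluster volume status k8s-volume | online_bricks)" -eq 3 ] \
#     || echo "some bricks are offline or missing"
```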

[root@hanyu-210 k8s_glusterfs]# gluster volume info

Volume Name: k8s-volume
Type: Replicate
Volume ID: 7d7ecba3-7bc9-4e09-89ed-493b3a6a2454
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: hanyu-210:/opt/gfs_data
Brick2: hanyu-211:/opt/gfs_data
Brick3: hanyu-212:/opt/gfs_data
Options Reconfigured:
transport.address-family: inet
nfs.disable: on
performance.client-io-threads: off

Verify that the GlusterFS cluster is usable

Run on any one of the hosts:

yum install -y glusterfs glusterfs-fuse

mkdir -p /root/test

mount -t glusterfs hanyu-210:k8s-volume /root/test

df -h

umount /root/test
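df -h only shows that the mount exists. Before running umount, a quick write/read round trip gives more confidence that the volume accepts data. A minimal sketch, assuming the volume is still mounted on /root/test; check_rw is a hypothetical helper, not a GlusterFS command.

```shell
#!/bin/sh
# Write a marker file into a directory, read it back, then remove it.
# Returns 0 on success, non-zero otherwise. check_rw is a hypothetical helper.
check_rw() {
  dir=$1
  f="$dir/.gfs_rw_test.$$"
  echo "gluster-ok" > "$f" || return 1
  content=$(cat "$f") || { rm -f "$f"; return 1; }
  rm -f "$f"
  [ "$content" = "gluster-ok" ]
}

# Usage, while the volume is still mounted on /root/test:
#   mountpoint -q /root/test && check_rw /root/test && echo "volume is writable"
```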

2. Use GlusterFS from Kubernetes (run everything below on the k8s master node)

Create the GlusterFS Endpoints: kubectl apply -f glusterfs-cluster.yaml

[root@hanyu-210 k8s_glusterfs]# cat glusterfs-cluster.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs-cluster
  namespace: default
subsets:
- addresses:
  - ip: 10.20.0.210
  - ip: 10.20.0.211
  - ip: 10.20.0.212
  ports:
  - port: 49152
    protocol: TCP

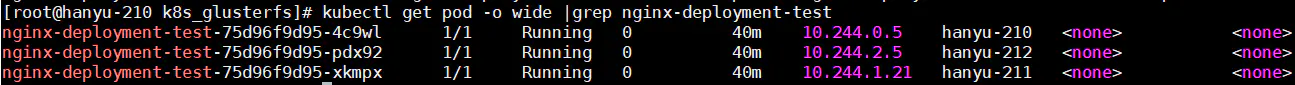

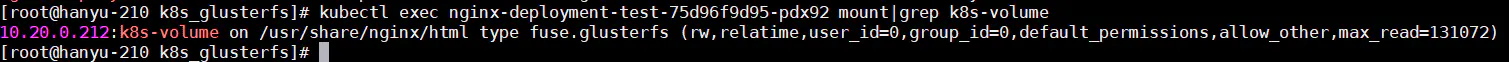
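The upstream Kubernetes GlusterFS example pairs the Endpoints object with a selector-less Service of the same name so that the manually created endpoints persist. The original post does not include one, so the sketch below is an assumption modeled on that pattern: the Service name and namespace must match the Endpoints object, and the port simply mirrors the Endpoints port. render_gluster_service is a hypothetical helper name.

```shell
#!/bin/sh
# Print a selector-less Service manifest matching the glusterfs-cluster
# Endpoints object. render_gluster_service is a hypothetical helper name.
render_gluster_service() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: glusterfs-cluster
  namespace: default
spec:
  ports:
  - port: 49152
    protocol: TCP
EOF
}

# Usage:
#   render_gluster_service | kubectl apply -f -
#   kubectl get endpoints glusterfs-cluster
```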

Create an application that uses GlusterFS directly as a volume: kubectl apply -f nginx_deployment_test.yaml

[root@hanyu-210 k8s_glusterfs]# cat nginx_deployment_test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-test
spec:
  replicas: 3
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: storage001
          mountPath: "/usr/share/nginx/html"
      volumes:
      - name: storage001
        glusterfs:
          endpoints: glusterfs-cluster
          path: k8s-volume
          readOnly: false
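Once the Deployment is applied, all three replicas should reach Running. A pod that stays in ContainerCreating often points to a failed volume mount, for example when the GlusterFS client packages are missing on the node that runs it. The sketch below lists pods that are not Running. It assumes the default column order of kubectl get pods (NAME, READY, STATUS, ...), and not_running is an illustrative name.

```shell
#!/bin/sh
# Read `kubectl get pods --no-headers` output on stdin and print the names
# of pods whose STATUS column (third field) is not "Running".
not_running() {
  awk 'NF >= 3 && $3 != "Running" { print $1 }'
}

# Usage:
#   kubectl get pods -l name=nginx --no-headers | not_running
#   kubectl describe pod <pod-name>   # mount failures show up under Events
```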

Create a PV backed by GlusterFS: kubectl apply -f glusterfs-pv.yaml

[root@hanyu-210 k8s_glusterfs]# cat glusterfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: glusterfs-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  glusterfs:
    endpoints: glusterfs-cluster
    path: k8s-volume
    readOnly: false

Create the PVC: kubectl apply -f glusterfs-pvc.yaml

[root@hanyu-210 k8s_glusterfs]# cat glusterfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: glusterfs-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
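The claim asks for 2Gi with ReadWriteMany, which the 10Gi glusterfs-pv can satisfy, so the PVC should move from Pending to Bound shortly after it is created. This assumes the cluster has no default StorageClass; otherwise a claim without storageClassName may be given that class and not bind to this PV. A small polling sketch follows; wait_bound and its arguments are illustrative helpers, not kubectl features.

```shell
#!/bin/sh
# Print the phase of a PVC (for example Pending or Bound).
pvc_phase() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Poll once per second until the claim is Bound, giving up after a number
# of attempts (default 30). Returns 0 if Bound, 1 on timeout.
wait_bound() {
  name=$1
  tries=${2:-30}
  while [ "$tries" -gt 0 ]; do
    [ "$(pvc_phase "$name")" = "Bound" ] && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Usage:
#   wait_bound glusterfs-pvc 30 && kubectl get pv glusterfs-pv
```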

Create an application that uses the PVC: kubectl apply -f nginx_deployment.yaml

[root@hanyu-210 k8s_glusterfs]# cat nginx_deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - name: storage001
          mountPath: "/usr/share/nginx/html"
      volumes:
      - name: storage001
        persistentVolumeClaim:
          claimName: glusterfs-pvc

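Author: sjyu_eadd

Link: https://www.jianshu.com/p/4ebf960b2075

Source: Jianshu

Copyright belongs to the author. For commercial reuse, contact the author for permission; for non-commercial reuse, please credit the source.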