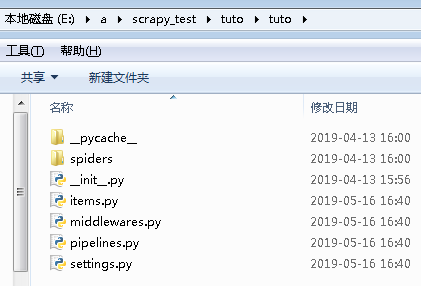

1. Create the project:

```
# Create a project named tuto
scrapy startproject tuto
```

2. Change into the project directory:

```
cd tuto
```

3. Create a spider for the target domain:

```
# minyan is the spider name; the argument after it is the domain the spider will crawl
scrapy genspider minyan quotes.toscrape.com
```

Full cmd session log:

```
E:\ascrapy_test>scrapy startproject tuto
New Scrapy project 'tuto', using template directory 'c:\python\python37\lib\site-packages\scrapy\templates\project', created in:
    E:\ascrapy_test\tuto

You can start your first spider with:
    cd tuto
    scrapy genspider example example.com

E:\ascrapy_test>cd tuto

E:\ascrapy_test\tuto>scrapy genspider minyan quotes.toscrape.com
Created spider 'minyan' using template 'basic' in module:
  tuto.spiders.minyan

E:\ascrapy_test\tuto>
```
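The domain passed to `genspider` ends up in the spider's `allowed_domains` list, and Scrapy's offsite filtering drops requests whose host is neither that domain nor one of its subdomains. The check can be sketched with the standard library as below. `is_allowed` is a hypothetical helper and a simplification, not Scrapy's own implementation.

```python
from urllib.parse import urlparse


def is_allowed(url, allowed_domains):
    """Return True if the URL's host is an allowed domain or a subdomain of one.

    Hypothetical, simplified sketch of offsite filtering; not Scrapy's code.
    """
    host = urlparse(url).hostname or ""  # .hostname is already lower-cased
    for domain in allowed_domains:
        domain = domain.lower()
        # Exact match, or a subdomain such as "www.quotes.toscrape.com"
        if host == domain or host.endswith("." + domain):
            return True
    return False


allowed = ["quotes.toscrape.com"]
print(is_allowed("http://quotes.toscrape.com/page/2/", allowed))  # True
print(is_allowed("http://example.com/", allowed))                 # False
```

Matching on the dot-prefixed suffix, rather than a plain `endswith(domain)`, keeps a host like `notquotes.toscrape.com.example` from slipping through.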

4. Open the project in PyCharm and create a file for running and debugging the spider: main.py

An explanation of the functions used: https://www.cnblogs.com/chenxi188/p/10876690.html

main.py:

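```python
# -*- coding: utf-8 -*-
__author__ = 'pasaulis'

import sys
import os
from scrapy.cmdline import execute

# Add the directory containing this file to sys.path (Python's module search path),
# so the spider can be launched from here without first changing directories in cmd.
# os.path.abspath(__file__) gives this file's absolute path; os.path.dirname() drops the file name.
sys.path.append(os.path.dirname(os.path.abspath(__file__)))

# Equivalent to running "scrapy crawl minyan"; the spider name is the value of `name` in minyan.py
execute(["scrapy", "crawl", "minyan"])
```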
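You can check the path expression in main.py on its own. The sketch below uses a hypothetical `script_dir` helper to show what `os.path.dirname(os.path.abspath(__file__))` evaluates to. Its hypothetical `add_to_sys_path` helper appends the directory only if it is not already present, so re-running the script in the same interpreter does not add duplicates. The POSIX-style example path is an assumption; on Windows the result would start with a drive letter.

```python
import os
import sys


def script_dir(path):
    """Absolute directory containing `path` (the file name is dropped)."""
    return os.path.dirname(os.path.abspath(path))


def add_to_sys_path(directory):
    """Append `directory` to the module search path unless it is already there."""
    if directory not in sys.path:
        sys.path.append(directory)


project = script_dir("/home/user/tuto/main.py")  # hypothetical location of main.py
print(project)  # /home/user/tuto (on POSIX systems)
add_to_sys_path(project)
add_to_sys_path(project)  # second call does nothing
print(sys.path.count(project))  # 1
```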

Inspect the page to decide which data to extract, then declare those fields in items.py:

```python
import scrapy


class TutoItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    text = scrapy.Field()
    author = scrapy.Field()
    tags = scrapy.Field()
    # pass
```
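Declaring the fields matters because a `scrapy.Item` behaves like a dict that accepts only its declared keys. Assigning an undeclared key such as `item['title']` raises `KeyError`. The stdlib-only class below is a hypothetical stand-in that mimics that behaviour for the three fields above. It is not Scrapy's implementation.

```python
from collections.abc import MutableMapping


class TutoItemSketch(MutableMapping):
    """Hypothetical stand-in for scrapy.Item: a mapping restricted to declared fields."""

    fields = ("text", "author", "tags")

    def __init__(self, **kwargs):
        self._values = {}
        for key, value in kwargs.items():
            self[key] = value  # routed through __setitem__, so it is validated too

    def __getitem__(self, key):
        return self._values[key]

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        self._values[key] = value

    def __delitem__(self, key):
        del self._values[key]

    def __iter__(self):
        return iter(self._values)

    def __len__(self):
        return len(self._values)


item = TutoItemSketch(author="some author")
item["tags"] = "change,thinking"
print(dict(item))  # {'author': 'some author', 'tags': 'change,thinking'}
try:
    item["title"] = "oops"  # not declared in `fields`, so it is rejected
except KeyError as exc:
    print("rejected:", exc)
```

Building on `MutableMapping` instead of subclassing `dict` ensures that every write path, including the constructor, goes through the field check.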

minyan.py:

```python
# -*- coding: utf-8 -*-
import scrapy
from tuto.items import TutoItem  # import TutoItem from items.py in the tuto package; used to build each item


class MinyanSpider(scrapy.Spider):
    name = 'minyan'
    allowed_domains = ['quotes.toscrape.com']
    start_urls = ['http://quotes.toscrape.com/']

    def parse(self, response):
        quotes = response.css('.quote')
        for quote in quotes:
            item = TutoItem()
            item['text'] = quote.css('.text::text').extract_first()
            item['author'] = quote.css('.author::text').extract_first()
            item['tags'] = quote.css('.keywords::attr(content)').extract_first()
            yield item

        # Next page ("next_page" avoids shadowing the built-in next())
        next_page = response.css('.next > a::attr(href)').extract_first()
        if next_page is not None:  # the last page has no "Next" link
            url = response.urljoin(next_page)  # turn the relative link into an absolute URL
            yield scrapy.Request(url=url, callback=self.parse)  # request the next page and call parse again
```
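You can trace the pagination logic in `parse` (follow the "Next" link until there is none) without network access. In the sketch below, a hypothetical in-memory `PAGES` dict replaces the site, a plain loop replaces Scrapy's callback scheduling, and `urllib.parse.urljoin` stands in for `response.urljoin`, which resolves a relative link against the page URL. `urljoin(base, None)` simply returns `base`. Without a check for a missing link, the spider would re-request the last page, a request Scrapy's duplicate filter normally drops.

```python
from urllib.parse import urljoin

# Hypothetical stand-in for the site: page URL -> (quotes on the page, relative "Next" link or None)
PAGES = {
    "http://quotes.toscrape.com/": (["quote 1", "quote 2"], "/page/2/"),
    "http://quotes.toscrape.com/page/2/": (["quote 3"], "/page/3/"),
    "http://quotes.toscrape.com/page/3/": (["quote 4"], None),  # last page: no "Next" link
}


def crawl(start_url):
    """Yield every quote, following "Next" links the way the spider's parse() does."""
    url = start_url
    while url is not None:
        quotes, next_href = PAGES[url]
        yield from quotes
        # Same guard as in the spider: stop when the page has no "Next" link
        url = urljoin(url, next_href) if next_href is not None else None


print(list(crawl("http://quotes.toscrape.com/")))
# ['quote 1', 'quote 2', 'quote 3', 'quote 4']
print(urljoin("http://quotes.toscrape.com/page/3/", None))
# http://quotes.toscrape.com/page/3/
```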

In settings.py, change this setting to False:

```python
# Obey robots.txt rules
ROBOTSTXT_OBEY = False
```
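With `ROBOTSTXT_OBEY = True` (the value in the generated settings.py), Scrapy fetches the site's robots.txt and skips the URLs it disallows. Setting it to `False` turns that check off. You can illustrate what the check decides with the standard library's `urllib.robotparser`. The rules below are hypothetical, and recent Scrapy versions use their own robots.txt parser by default rather than this module.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content
rules = [
    "User-agent: *",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(rules)  # parse() also marks the rules as loaded

print(parser.can_fetch("tuto", "http://example.com/private/data.html"))  # False
print(parser.can_fetch("tuto", "http://example.com/page/1/"))            # True
```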

Run the code:

In the Terminal panel at the bottom of PyCharm, run:

```
scrapy crawl minyan
```

Or run main.py.
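Scrapy's feed export can save the yielded items, for example with `scrapy crawl minyan -o quotes.json`, which writes a JSON array of objects with the `text`, `author` and `tags` fields. Because `tags` comes from the `content` attribute of the `.keywords` element, it is typically one comma-separated string rather than a list. The sketch below uses hypothetical helpers `normalize_tags` and `load_quotes` to read such a file and split the tags. The file name is an assumption.

```python
import json


def normalize_tags(items):
    """Turn each item's comma-separated `tags` string into a list of tag strings."""
    for item in items:
        tags = item.get("tags") or ""  # the field may be missing or None
        item["tags"] = [tag for tag in tags.split(",") if tag]
    return items


def load_quotes(path):
    """Read a JSON feed written by `scrapy crawl minyan -o <path>` and normalize its tags."""
    with open(path, encoding="utf-8") as f:
        return normalize_tags(json.load(f))


# Usage, assuming the feed was saved as quotes.json:
# for quote in load_quotes("quotes.json"):
#     print(quote["author"], quote["tags"])
```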