I. StorageClass

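A StorageClass automatically creates a PV that matches the requirements of a PVC. Because each PV is created on demand for a specific PVC, no volumes have to be pre-provisioned and left unused, which reduces wasted storage.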

Every StorageClass contains three fields: provisioner, parameters and reclaimPolicy. These fields are used whenever a PersistentVolume belonging to that class has to be provisioned dynamically.
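For reference, the sketch below shows a StorageClass that sets all three fields. The provisioner string is the one the Helm chart registers later in this walkthrough. The class name nfs-client-retain is hypothetical. archiveOnDelete is assumed to be a parameter read by this NFS provisioner, so check the provisioner's documentation before relying on it.

```yaml
# Hypothetical StorageClass illustrating provisioner / parameters / reclaimPolicy
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client-retain                                      # hypothetical name
provisioner: cluster.local/nfs-client-nfs-client-provisioner   # which provisioner creates the PVs
parameters:                      # handed to the provisioner; keys are provisioner-specific
  archiveOnDelete: "true"        # assumed nfs-client parameter; values must be strings
reclaimPolicy: Retain            # Delete is the default when this field is omitted
allowVolumeExpansion: true
volumeBindingMode: Immediate
```

A PVC opts into this class by setting spec.storageClassName: nfs-client-retain.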

1. Deployment

# Install Helm
[root@k8s-m-01 ~]# wget https://get.helm.sh/helm-v3.6.3-linux-amd64.tar.gz
[root@k8s-m-01 ~]# tar xf helm-v3.6.3-linux-amd64.tar.gz
[root@k8s-m-01 ~]# cd linux-amd64/
[root@k8s-m-01 linux-amd64]# ls
helm  LICENSE  README.md
# Move the binary to /usr/local/bin
[root@k8s-m-01 linux-amd64]# mv helm /usr/local/bin/

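# The NFS directory that backs the storage volumes must have permissions 777 in this setup
# Create Helm's cache, config and data directories
[root@m01 object]# mkdir -p $HOME/.cache/helm
[root@m01 object]# mkdir -p $HOME/.config/helm
[root@m01 object]# mkdir -p $HOME/.local/share/helm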

# Verify the installation
[root@m01 ~]# helm
The Kubernetes package manager

Common actions for Helm:

- helm search:    search for charts
- helm pull:      download a chart to your local directory to view
- helm install:   upload the chart to Kubernetes
- helm list:      list releases of charts

# Add the chart repository
[root@k8s-m-01 ~]# helm repo add ckotzbauer https://ckotzbauer.github.io/helm-charts
[root@k8s-m-01 ~]# helm repo list    # list the configured repositories
NAME        URL
ckotzbauer  https://ckotzbauer.github.io/helm-charts

# Install the StorageClass and the NFS client provisioner

# Search for the chart
[root@k8s-m-01 ~]# helm search repo nfs-client
NAME                               CHART VERSION  APP VERSION  DESCRIPTION
ckotzbauer/nfs-client-provisioner  1.0.2          3.1.0        nfs-client is an automatic provisioner that use...
# Download and unpack the chart
[root@k8s-m-01 ~]# helm pull ckotzbauer/nfs-client-provisioner
[root@k8s-m-01 ~]# tar -xf nfs-client-provisioner-1.0.2.tgz
[root@k8s-m-01 ~]# cd nfs-client-provisioner

# Edit the chart values
[root@m01 nfs-client-provisioner]# vim values.yaml
accessModes: ReadWriteMany    # changed to ReadWriteMany (read-write from many nodes)
reclaimPolicy: Retain         # reclaim policy
nfs:
  server: 192.168.15.51
  path: /nfs/v1
  mountOptions: {}

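# Edit /etc/kubernetes/manifests/kube-apiserver.yaml and add the flag
#   - --feature-gates=RemoveSelfLink=false
# (nfs-client-provisioner 3.1.0 relies on the selfLink field, which Kubernetes 1.20 stopped populating
#  by default; from Kubernetes 1.24 the gate can no longer be turned off, so this workaround no longer applies)
# The kubelet recreates the kube-apiserver static Pod automatically once the file is saved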
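Assuming a kubeadm-style cluster, the relevant part of the static Pod manifest looks roughly like this after the edit. Only the added flag matters; everything else stays as generated.

```yaml
# Excerpt of /etc/kubernetes/manifests/kube-apiserver.yaml (kubeadm layout assumed)
spec:
  containers:
    - command:
        - kube-apiserver
        # ...existing flags stay unchanged...
        - --feature-gates=RemoveSelfLink=false   # added flag
```

If the file already contains a --feature-gates flag, add RemoveSelfLink=false to its comma-separated list instead of adding a second flag.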

# Install the chart
[root@m01 nfs-client-provisioner]# helm install nfs-client ./
[root@m01 ~]# kubectl get pods
NAME                                                 READY   STATUS    RESTARTS   AGE
bash                                                 1/1     Running   1          12h
nfs-client-nfs-client-provisioner-86b68fcb58-8vlkd   1/1     Running   0          25s
[root@m01 ~]# kubectl get storageclasses.storage.k8s.io
NAME         PROVISIONER                                       RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-client   cluster.local/nfs-client-nfs-client-provisioner   Delete          Immediate           true                   80s

# Test the StorageClass

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-sc
spec:
  storageClassName: nfs-client
  accessModes:
    - "ReadWriteMany"
  resources:
    requests:
      storage: "6Gi"
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: test-sc
spec:
  selector:
    matchLabels:
      app: test-sc
  template:
    metadata:
      labels:
        app: test-sc
    spec:
      containers:
        - name: nginx
          imagePullPolicy: IfNotPresent
          image: nginx
          volumeMounts:
            - mountPath: /data/
              name: test-sc-name
      volumes:
        - name: test-sc-name
          persistentVolumeClaim:
            claimName: test-sc

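# Check the PV and PVC
[root@m01 ~]# kubectl get pv,pvc
NAME                            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/test-sc   Bound    pvc-6bbc1e22-8107-499b-9c18-da30e481d166   6Gi        RWX
# The automatically created PV has exactly the size requested in the PVC (6Gi), so no capacity is wasted
# (NFS itself does not enforce this size as a quota)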

II. The Kubernetes configuration center: ConfigMap

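In production you often need to change configuration files. Editing them the traditional way disrupts the running service and is tedious. Kubernetes 1.2 therefore introduced ConfigMap, which separates an application's configuration from the program itself.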

1. Four ways to create a ConfigMap

# 1. From a configuration file (the file name becomes the key)
[root@m01 ~]# ls
app  deployment.yaml
[root@m01 ~]# kubectl create configmap file --from-file=deployment.yaml
configmap/file created
# Check
[root@m01 ~]# kubectl get configmaps
NAME               DATA   AGE
file               1      27s
kube-root-ca.crt   1      46h
[root@m01 ~]# kubectl describe configmaps file
Name:         file
Namespace:    default
Labels:       <none>
Annotations:  <none>

Data
====
deployment.yaml:
----
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-sc

# 2. From a directory
[root@m01 ~]# mkdir configmap
[root@m01 ~]# touch configmap/index.html
[root@m01 ~]# echo "index" > configmap/index.html
[root@m01 ~]# kubectl create configmap test1 --from-file=configmap/
configmap/test1 created      # every file in the directory is added as a key
# Check
[root@m01 ~]# kubectl get configmaps
NAME               DATA   AGE
file               1      5m50s
kube-root-ca.crt   1      46h
test1              1      19s
[root@m01 ~]# kubectl describe configmaps test1

# 3. From literal key-value pairs
[root@m01 ~]# kubectl create configmap test2 --from-literal=key=value --from-literal=key1=value1
configmap/test2 created
[root@m01 ~]# kubectl get configmaps
NAME               DATA   AGE
file               1      10m
kube-root-ca.crt   1      46h
test1              1      4m37s
test2              2      8s
[root@m01 ~]# kubectl describe configmaps test2
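The imperative commands in methods 2 and 3 produce ordinary ConfigMap objects. Assuming the configmap/ directory contains only index.html, test1 and test2 should be equivalent to the manifests below. The manifests also make the key mapping visible. With --from-file, each file name becomes a key. With --from-literal, each key=value pair becomes one entry.

```yaml
# Sketch: declarative equivalents of test1 and test2
apiVersion: v1
kind: ConfigMap
metadata:
  name: test1
data:
  # --from-file=configmap/ : file name -> key, file content -> value ("index" plus newline)
  index.html: |
    index
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: test2
data:
  key: value      # from --from-literal=key=value
  key1: value1    # from --from-literal=key1=value1
```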

# 4. From a manifest (the most common approach)
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx
data:
  default.conf: |-
    server {
        listen 90;
        server_name www.baim0.xyz;
        location / {
            root /daima;
            autoindex on;
            autoindex_localtime on;
            autoindex_exact_size on;
        }
    }

2. Using a ConfigMap as environment variables

kind: ConfigMap
apiVersion: v1
metadata:
  name: test-mysql
data:
  MYSQL_ROOT_PASSWORD: "123456"
  MYSQL_DATABASE: discuz
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: test-mysql
spec:
  selector:
    matchLabels:
      app: test-mysql
  template:
    metadata:
      labels:
        app: test-mysql
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          envFrom:            # load every key of the ConfigMap as an environment variable
            - configMapRef:
                name: test-mysql
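envFrom imports every key of the ConfigMap. When only some keys are needed, or a variable should get a different name, env with valueFrom.configMapKeyRef selects keys one at a time. The sketch below shows only the container part of the same Deployment.

```yaml
# Sketch: container spec that picks a single key instead of importing all of them
containers:
  - name: mysql
    image: mysql:5.7
    env:
      - name: MYSQL_DATABASE          # variable name inside the container
        valueFrom:
          configMapKeyRef:
            name: test-mysql          # the ConfigMap defined above
            key: MYSQL_DATABASE       # key within that ConfigMap
```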

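3. Mounting as a volume

Mounting over a whole directory (the mount replaces everything in the target directory)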

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /etc/nginx/conf.d
              name: default-conf
      volumes:
        - name: default-conf
          configMap:
            name: nginx
            items:
              - key: default.conf
                path: default.conf

# Check
root@nginx-545c8479b7-hxc8m:/etc/nginx/conf.d# cat default.conf
server {
    listen 90;
    server_name www.baim0.xyz;
    location / {
        root /daima;
        autoindex on;
        autoindex_localtime on;
        autoindex_exact_size on;
    }
}
# The port is 90, so the file comes from the ConfigMap mount

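subPath: mounting a single file

This kind of mount solves the problem of several configuration files overwriting each other when they are mounted into the same directory. With subPath, only the single target file is replaced and the rest of the directory is left intact.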

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-conf
data:
  nginx.conf: |-
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;
    include /usr/share/nginx/modules/*.conf;
    events {
        worker_connections 1024;
    }
    http {
        log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                          '$status $body_bytes_sent "$http_referer" '
                          '"$http_user_agent" "$http_x_forwarded_for"';
        access_log  /var/log/nginx/access.log  main;
        sendfile            on;
        tcp_nopush          on;
        tcp_nodelay         on;
        keepalive_timeout   65;
        types_hash_max_size 4096;
        include             /etc/nginx/mime.types;
        default_type        application/octet-stream;
        include /etc/nginx/conf.d/*.conf;
    }
  default.conf: |-
    server {
        listen 90;
        server_name www.baim0.xyz;
        location / {
            root /daima;
            autoindex on;
            autoindex_localtime on;
            autoindex_exact_size on;
        }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - mountPath: /etc/nginx/conf.d
              name: nginx-default
            - mountPath: /etc/nginx/nginx.conf   # full path of the single file
              name: nginx-conf
              subPath: nginx.conf                # mount only this file from the volume
      volumes:
        - name: nginx-default
          configMap:
            name: nginx-conf
            items:
              - key: default.conf
                path: default.conf
        - name: nginx-conf
          configMap:
            name: nginx-conf
            items:
              - key: nginx.conf
                path: nginx.conf

# Enter the container and check
[root@m01 k8s]# kubectl exec -it nginx-b46f4f758-68ggn -- bash
root@nginx-b46f4f758-68ggn:/etc/nginx# ls
conf.d  fastcgi_params  mime.types  modules  nginx.conf  scgi_params  uwsgi_params
# All the original files are still there; only nginx.conf has been replaced
root@nginx-b46f4f758-68ggn:/etc/nginx# cd conf.d/
root@nginx-b46f4f758-68ggn:/etc/nginx/conf.d# ls
default.conf

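4. Hot updates

A configuration file can be updated without stopping the service. Edit the manifest that holds the configuration (the ConfigMap) and apply it again:

[root@m01 k8s]# kubectl apply -f test.yaml

Files mounted from a ConfigMap volume are refreshed by the kubelet after a delay, not instantly. Files mounted with subPath and values injected as environment variables are not updated at all, so those Pods have to be restarted. The application must also reload the changed file itself. For nginx, run nginx -s reload inside the container.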

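III. Secret

A Secret holds sensitive configuration data such as passwords, tokens and keys. A Secret can be consumed as a volume or as environment variables. There are three types, described below.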

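1. Opaque Secret

Write the manifest.

# The data field of an Opaque Secret is a map, and every value must be base64-encoded
[root@m01 k8s]# echo "123456" | base64
MTIzNDU2Cg==
# Note: echo appends a newline, so this value decodes to "123456\n" (Cg== encodes the newline).
# To encode the password without the newline, use echo -n "123456" | base64, which prints MTIzNDU2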

apiVersion: v1
kind: Secret
metadata:
  name: mysql-password
data:
  password: MTIzNDU2Cg==

Create the Secret

[root@m01 k8s]# kubectl apply -f test.yaml
secret/mysql-password created
[root@m01 k8s]# kubectl get -f test.yaml
NAME             TYPE     DATA   AGE
mysql-password   Opaque   1      9s
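A Secret can also be written with stringData. There, values are given as plain text and the API server base64-encodes them into data when the object is stored. This removes the manual encoding step and avoids the trailing-newline problem of echo. The sketch below creates the same Secret name with the value 123456 and no trailing newline. stringData is only a write-time convenience: reading the Secret back still shows the encoded value under data.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-password
type: Opaque
stringData:
  # plain-text value; stored base64-encoded as data.password
  password: "123456"
```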

Using the Secret

apiVersion: v1
kind: Secret
metadata:
  name: mysql-password
data:
  MYSQL_ROOT_PASSWORD: MTIzNDU2Cg==
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-secret
spec:
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          envFrom:
            - secretRef:
                name: mysql-password

# Verify that the Pod started

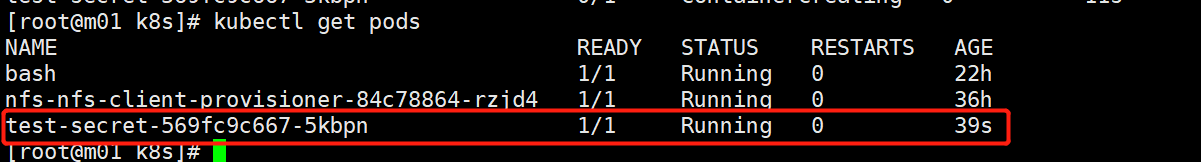

[root@m01 k8s]# kubectl get pods
NAME                                        READY   STATUS    RESTARTS   AGE
bash                                        1/1     Running   0          22h
nfs-nfs-client-provisioner-84c78864-rzjd4   1/1     Running   0          36h
test-secret-6975b89b5-mqxp6                 1/1     Running   0          4s
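Secrets can also be consumed as a volume, where each key becomes a file. The sketch below shows the Pod template part. The mount path /etc/mysql-secret is hypothetical. The sketch also assumes the official mysql image's MYSQL_ROOT_PASSWORD_FILE convention for reading the password from a file.

```yaml
# Sketch: Pod template spec that consumes the Secret as files instead of env vars
spec:
  containers:
    - name: mysql
      image: mysql:5.7
      env:
        # assumed image convention: read the root password from this file
        - name: MYSQL_ROOT_PASSWORD_FILE
          value: /etc/mysql-secret/MYSQL_ROOT_PASSWORD
      volumeMounts:
        - name: mysql-secret
          mountPath: /etc/mysql-secret   # hypothetical path
          readOnly: true
  volumes:
    - name: mysql-secret
      secret:
        secretName: mysql-password       # each key becomes a file under mountPath
```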

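2. kubernetes.io/dockerconfigjson

export DOCKER_REGISTRY_SERVER=10.0.0.100
export DOCKER_USER=root
export DOCKER_PASSWORD=root@123
# The server must be the registry host that appears in the image name; for an image under
# registry.cn-shanghai.aliyuncs.com the server would be that host rather than 10.0.0.100
[root@m01 k8s]# kubectl create secret docker-registry aliyun --docker-server=${DOCKER_REGISTRY_SERVER} --docker-username=${DOCKER_USER} --docker-password=${DOCKER_PASSWORD}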

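# Pod template spec (fragment): reference the pull Secret by name
spec:
  imagePullSecrets:
    - name: aliyun        # must match the name of the Secret created above
  containers:
    - name: mysql
      image: registry.cn-shanghai.aliyuncs.com/baim0/mysql:5.7
      envFrom:
        - secretRef:
            name: mysql-password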
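The same registry credential can also be written declaratively. The sketch below assumes the values exported above. The Secret needs the type kubernetes.io/dockerconfigjson and a .dockerconfigjson key that holds a Docker config.json document. kubectl create secret docker-registry also stores an auth field (base64 of username:password). That field is omitted here on the assumption that username and password alone are sufficient. If in doubt, compare the result with the Secret generated by kubectl (kubectl get secret aliyun -o yaml).

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: aliyun
type: kubernetes.io/dockerconfigjson
stringData:
  # Docker config.json format: registry host -> credentials
  .dockerconfigjson: |
    {"auths": {"10.0.0.100": {"username": "root", "password": "root@123"}}}
```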

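3. Service Account

A Service Account is used to access the Kubernetes API. Kubernetes creates one automatically (the default ServiceAccount in each namespace). Its credentials are mounted automatically into every Pod at /var/run/secrets/kubernetes.io/serviceaccount. In most images /var/run is a symlink to /run, so the same files are also visible under /run/secrets/kubernetes.io/serviceaccount.

root@test-mysql-c689b595-9r6sd:/run/secrets/kubernetes.io/serviceaccount# ls
ca.crt  namespace  token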
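The Pod spec controls which ServiceAccount a Pod uses and whether the token is mounted at all. In the sketch below, the names app-reader and sa-demo are hypothetical. Only the default ServiceAccount is created automatically, so the manifest creates app-reader first.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: app-reader                     # hypothetical ServiceAccount
---
apiVersion: v1
kind: Pod
metadata:
  name: sa-demo                        # hypothetical Pod
spec:
  serviceAccountName: app-reader       # falls back to "default" when omitted
  automountServiceAccountToken: true   # false skips mounting ca.crt, namespace and token
  containers:
    - name: nginx
      image: nginx
```

What the token is allowed to do is decided separately, through RBAC bindings for that ServiceAccount.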