(1) Error: unregister_netdevice: waiting for vethfa4b4ee to become free. Usage count = 1

Ran into a kernel bug.

docker 1.9.1

kernel 3.10.0-327 (Red Hat 7)

Recording this for now. I have not yet found which kernel version fixes this issue; if you know, please leave a comment.

https://bugzilla.kernel.org/show_bug.cgi?id=81211

(2) Kernel kworker and docker tasks blocked

[Wed Jan 31 14:16:11 2018] INFO: task docker:26326 blocked for more than 120 seconds.

[Wed Jan 31 14:16:11 2018] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Wed Jan 31 14:16:11 2018] docker D 0000000000000000 0 26326 1 0x00000080

[Wed Jan 31 14:16:11 2018] ffff88050faefab0 0000000000000086 ffff880067d69700 ffff88050faeffd8

[Wed Jan 31 14:16:11 2018] ffff88050faeffd8 ffff88050faeffd8 ffff880067d69700 ffff88050faefbf0

[Wed Jan 31 14:16:11 2018] ffff88050faefbf8 7fffffffffffffff ffff880067d69700 0000000000000000

[Wed Jan 31 14:16:11 2018] Call Trace:

[Wed Jan 31 14:16:11 2018] [<ffffffff8163a909>] schedule+0x29/0x70

[Wed Jan 31 14:16:11 2018] [<ffffffff816385f9>] schedule_timeout+0x209/0x2d0

[Wed Jan 31 14:16:11 2018] [<ffffffff8108e4cd>] ? mod_timer+0x11d/0x240

[Wed Jan 31 14:16:11 2018] [<ffffffff8163acd6>] wait_for_completion+0x116/0x170

[Wed Jan 31 14:16:11 2018] [<ffffffff810b8c10>] ? wake_up_state+0x20/0x20

[Wed Jan 31 14:16:11 2018] [<ffffffff810ab676>] __synchronize_srcu+0x106/0x1a0

[Wed Jan 31 14:16:11 2018] [<ffffffff810ab190>] ? call_srcu+0x70/0x70

[Wed Jan 31 14:16:11 2018] [<ffffffff81219ebf>] ? __sync_blockdev+0x1f/0x40

[Wed Jan 31 14:16:11 2018] [<ffffffff810ab72d>] synchronize_srcu+0x1d/0x20

[Wed Jan 31 14:16:11 2018] [<ffffffffa000318d>] __dm_suspend+0x5d/0x220 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa0004c9a>] dm_suspend+0xca/0xf0 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa0009fe0>] ? table_load+0x380/0x380 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa000a174>] dev_suspend+0x194/0x250 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa0009fe0>] ? table_load+0x380/0x380 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa000aa25>] ctl_ioctl+0x255/0x500 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa000ace3>] dm_ctl_ioctl+0x13/0x20 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffff811f1ef5>] do_vfs_ioctl+0x2e5/0x4c0

[Wed Jan 31 14:16:11 2018] [<ffffffff811f2171>] SyS_ioctl+0xa1/0xc0

[Wed Jan 31 14:16:11 2018] [<ffffffff816408d9>] ? do_async_page_fault+0x29/0xe0

[Wed Jan 31 14:16:11 2018] [<ffffffff81645909>] system_call_fastpath+0x16/0x1b

[Wed Jan 31 14:16:11 2018] INFO: task kworker/u32:4:8305 blocked for more than 120 seconds.

[Wed Jan 31 14:16:11 2018] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Wed Jan 31 14:16:11 2018] kworker/u32:4 D ffff8805111e00e0 0 8305 2 0x00000080

[Wed Jan 31 14:16:11 2018] Workqueue: kdmremove do_deferred_remove [dm_mod]

[Wed Jan 31 14:16:11 2018] ffff88026e7cfcf0 0000000000000046 ffff88052402ae00 ffff88026e7cffd8

[Wed Jan 31 14:16:11 2018] ffff88026e7cffd8 ffff88026e7cffd8 ffff88052402ae00 ffff8805111e00d8

[Wed Jan 31 14:16:11 2018] ffff8805111e00dc ffff88052402ae00 00000000ffffffff ffff8805111e00e0

[Wed Jan 31 14:16:11 2018] Call Trace:

[Wed Jan 31 14:16:11 2018] [<ffffffff8163b9e9>] schedule_preempt_disabled+0x29/0x70

[Wed Jan 31 14:16:11 2018] [<ffffffff816396e5>] __mutex_lock_slowpath+0xc5/0x1c0

[Wed Jan 31 14:16:11 2018] [<ffffffff81638b4f>] mutex_lock+0x1f/0x2f

[Wed Jan 31 14:16:11 2018] [<ffffffffa0002e9d>] __dm_destroy+0xad/0x340 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa00047e3>] dm_destroy+0x13/0x20 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa0008d6d>] dm_hash_remove_all+0x6d/0x130 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa000b50a>] dm_deferred_remove+0x1a/0x20 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffffa0000dae>] do_deferred_remove+0xe/0x10 [dm_mod]

[Wed Jan 31 14:16:11 2018] [<ffffffff8109d5fb>] process_one_work+0x17b/0x470

[Wed Jan 31 14:16:11 2018] [<ffffffff8109e3cb>] worker_thread+0x11b/0x400

[Wed Jan 31 14:16:11 2018] [<ffffffff8109e2b0>] ? rescuer_thread+0x400/0x400

[Wed Jan 31 14:16:11 2018] [<ffffffff810a5aef>] kthread+0xcf/0xe0

[Wed Jan 31 14:16:11 2018] [<ffffffff810a5a20>] ? kthread_create_on_node+0x140/0x140

[Wed Jan 31 14:16:11 2018] [<ffffffff81645858>] ret_from_fork+0x58/0x90
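When there are many of these reports, it can help to pull out just the blocked task names and PIDs. The following is a minimal sketch, assuming the log text has the same `INFO: task ... blocked for more than N seconds` format as the output above; the function name `find_hung_tasks` is hypothetical and not part of any tool.

```python
import re

# Matches hung-task report lines such as:
# [Wed Jan 31 14:16:11 2018] INFO: task docker:26326 blocked for more than 120 seconds.
# The task name may itself contain colons (e.g. kworker/u32:4), so the
# greedy name group is followed by the last ":<pid>" before " blocked".
HUNG_TASK_RE = re.compile(
    r"INFO: task (?P<comm>.+):(?P<pid>\d+) blocked for more than (?P<secs>\d+) seconds"
)


def find_hung_tasks(log_text):
    """Return a list of (command, pid, seconds) tuples, one per hung-task report."""
    tasks = []
    for line in log_text.splitlines():
        match = HUNG_TASK_RE.search(line)
        if match:
            tasks.append(
                (match.group("comm"), int(match.group("pid")), int(match.group("secs")))
            )
    return tasks
```

Feeding it the text of `dmesg -T` (saved to a file or captured from a subprocess) gives one tuple per report, which makes it easy to see whether the same tasks keep getting stuck.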

After restarting docker, zombie processes are very likely to appear.

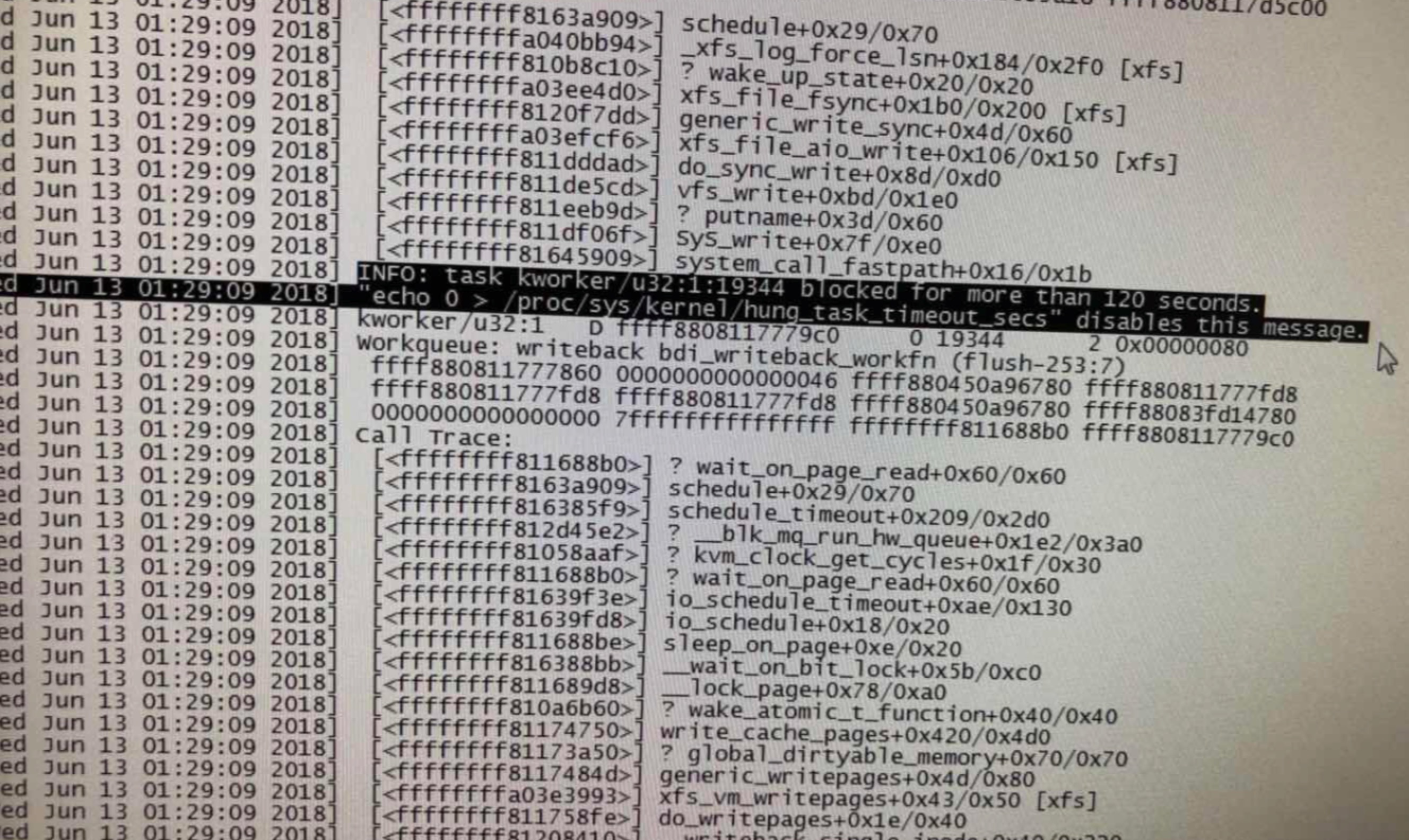

(3)INFO: task blocked for more than 120 seconds.

This is a known bug. By default Linux uses up to 40% of the available memory for file system caching. After this mark has been reached, the file system flushes all outstanding data to disk, causing all following I/Os to go synchronous. For flushing this data out to disk there is a time limit of 120 seconds by default. In the case here the I/O subsystem is not fast enough to flush the data within 120 seconds. This especially happens on systems with a lot of memory.

The problem is solved in later kernels and there is no "fix" from Oracle. I fixed this by lowering the mark for flushing the cache from 40% to 10% by setting "vm.dirty_ratio=10" in /etc/sysctl.conf. This setting does not influence overall database performance since you hopefully use Direct I/O and bypass the file system cache completely.

Solution:

https://blog.csdn.net/electrocrazy/article/details/79377214

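Linux allows up to 40% of available memory to be used for the file system cache. When that data is flushed, the I/O becomes synchronous and the flush can exceed the 120-second timeout, so the threshold is lowered from 40% to 10% to avoid the timeout.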
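To see why a lower ratio helps, here is a rough back-of-the-envelope sketch. The 64 GiB of memory and 100 MiB/s of sustained write throughput are made-up illustration values, not measurements from the affected machine, and the calculation is simplified: the kernel applies the ratio to reclaimable ("dirtyable") memory rather than total RAM.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def dirty_limit_bytes(mem_bytes, dirty_ratio_percent):
    """Approximate amount of dirty data allowed before writers are throttled."""
    return mem_bytes * dirty_ratio_percent // 100


def flush_seconds(dirty_bytes, write_bytes_per_sec):
    """Time needed to write the given amount of dirty data at a steady rate."""
    return dirty_bytes / write_bytes_per_sec


if __name__ == "__main__":
    mem = 64 * GIB          # assumed memory size (illustrative only)
    throughput = 100 * MIB  # assumed disk write throughput (illustrative only)
    for ratio in (40, 10):
        limit = dirty_limit_bytes(mem, ratio)
        print(f"dirty_ratio={ratio}%: up to {limit / GIB:.1f} GiB dirty, "
              f"about {flush_seconds(limit, throughput):.0f} s to flush")
```

Under these assumptions, 40% works out to about 262 seconds of writing, well past the 120-second hung-task threshold, while 10% is about 66 seconds.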

Modify the kernel parameters:

vim /etc/sysctl.conf

vm.dirty_background_ratio = 5

vm.dirty_ratio = 10

Run sysctl -p to apply the changes.
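To confirm that the new values are actually in effect, the settings can be read back from `/proc/sys/vm`. This is a small sketch, assuming a Linux host; the helper names `read_vm_setting` and `check_dirty_settings` are hypothetical.

```python
from pathlib import Path


def read_vm_setting(name, proc_root="/proc/sys/vm"):
    """Read an integer vm.* sysctl value, e.g. name='dirty_ratio'."""
    return int((Path(proc_root) / name).read_text().strip())


def check_dirty_settings(expected, proc_root="/proc/sys/vm"):
    """Return {name: (expected, actual)} for every setting that differs."""
    mismatches = {}
    for name, want in expected.items():
        actual = read_vm_setting(name, proc_root)
        if actual != want:
            mismatches[name] = (want, actual)
    return mismatches
```

Calling `check_dirty_settings({"dirty_background_ratio": 5, "dirty_ratio": 10})` should return an empty dict once the changes have been applied.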

In summary, on Red Hat it is recommended to upgrade to 3.10.0-862; upgrading to the latest stable version is never a bad idea.
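A quick way to check whether a host is already at or above that build is to compare its kernel release string. The sketch below assumes a Red Hat style release such as `3.10.0-327.el7.x86_64`; the function names are hypothetical, and comparing build numbers is only meaningful within the same 3.10.0 series.

```python
import re


def parse_release(release):
    """Parse a release like '3.10.0-327.el7.x86_64' into (3, 10, 0, 327)."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)-(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return tuple(int(part) for part in match.groups())


def is_at_least(release, minimum=(3, 10, 0, 862)):
    """True if the release is at or above the given (major, minor, patch, build)."""
    return parse_release(release) >= minimum
```

On a running system the release string can be obtained with `platform.release()` from the standard library (the same value `uname -r` prints) and passed to `is_at_least`.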