Configure passwordless login

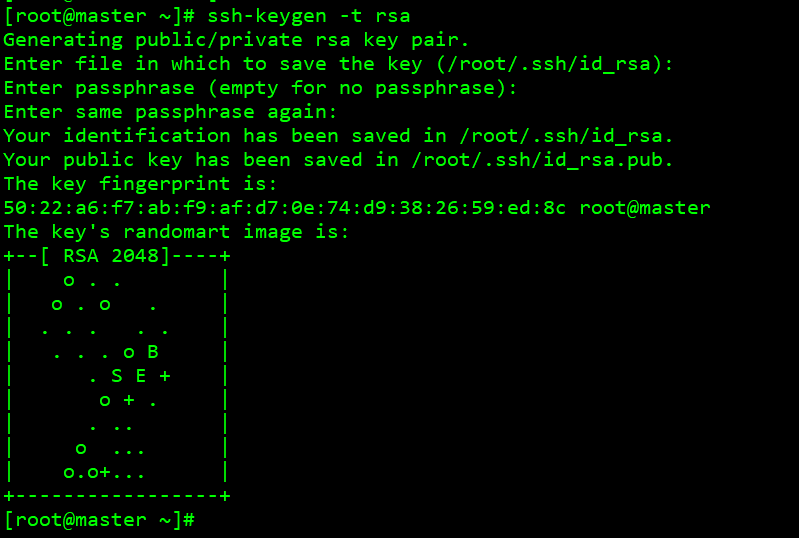

Run ssh-keygen -t rsa

This creates the .ssh directory. Press Enter at every prompt; the generated key pair, id_rsa and id_rsa.pub, is stored in ~/.ssh by default.

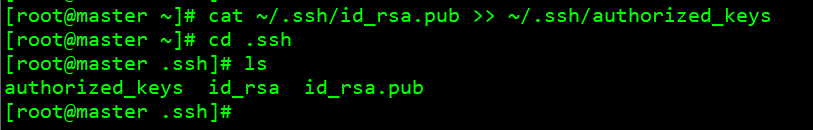

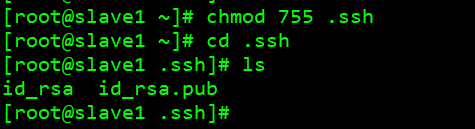
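The key-generation step above can also be run without prompts. The following is a minimal sketch, not the exact procedure shown in the screenshots: `ensure_rsa_key` is a hypothetical helper name, and it assumes an empty passphrase is acceptable (which is what pressing Enter at every prompt produces). It skips generation when a key already exists so an existing key is never overwritten.

```shell
# Hypothetical helper: create an RSA key pair in the given directory
# unless one is already there. An empty passphrase (-N "") is assumed.
ensure_rsa_key() {
    key_dir=$1
    mkdir -p "$key_dir"
    chmod 755 "$key_dir"    # same mode these notes use; 700 is stricter and also works
    if [ -f "$key_dir/id_rsa" ]; then
        echo "key already exists: $key_dir/id_rsa"
    else
        ssh-keygen -q -t rsa -N "" -f "$key_dir/id_rsa"
    fi
}

# Typical call (not run here):
#   ensure_rsa_key "$HOME/.ssh"
```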

chmod 755 .ssh    # grant 755 permissions

cd .ssh

ls -l id_rsa id_rsa.pub

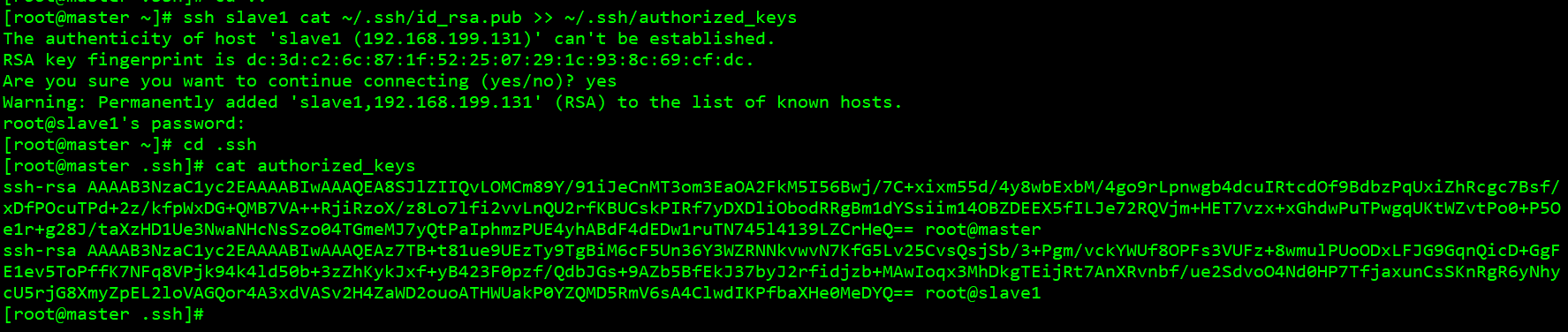

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

This appends the public key to the authorized_keys file (the file must end up with 644 permissions).

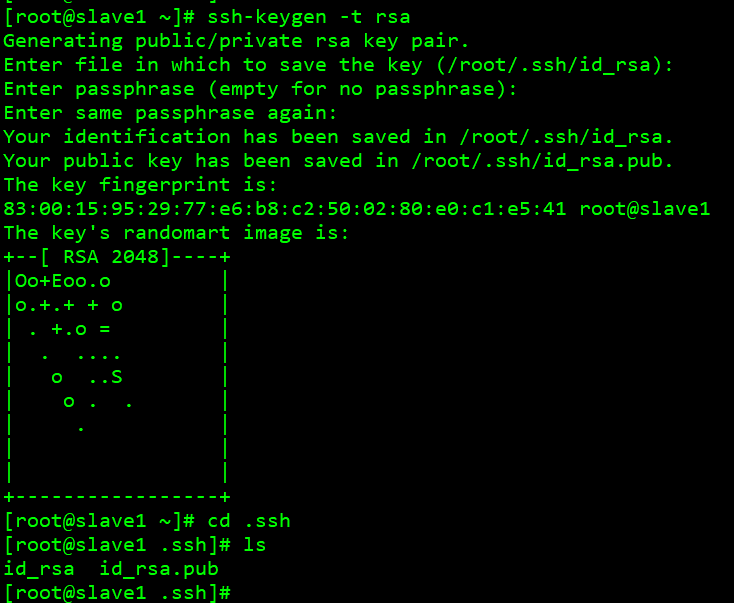

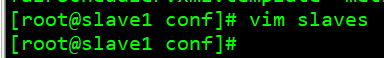
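Because `cat ... >> authorized_keys` appends every time it is run, repeating the step leaves duplicate entries. A small sketch of an idempotent variant follows; `append_key_once` is a hypothetical helper, not part of OpenSSH, and it also applies the 644 mode mentioned above.

```shell
# Hypothetical helper: append a public key to an authorized_keys file
# only if that exact line is not already present, then set mode 644.
append_key_once() {
    pub_file=$1
    auth_file=$2
    touch "$auth_file"
    # -x: whole-line match, -F: fixed string, -f: read the pattern from the key file
    grep -qxF -f "$pub_file" "$auth_file" || cat "$pub_file" >> "$auth_file"
    chmod 644 "$auth_file"
}

# Typical call (not run here):
#   append_key_once ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```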

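Now set up the public key on the slave1 node.

Run ssh-keygen -t rsa

This creates the .ssh directory. Press Enter at every prompt; the generated key pair, id_rsa and id_rsa.pub, is stored in ~/.ssh by default.

chmod 755 .ssh    # grant 755 permissions

cd .ssh

ls -l id_rsa id_rsa.pub

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

This appends the public key to the authorized_keys file (the file must end up with 644 permissions).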

ssh slave1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

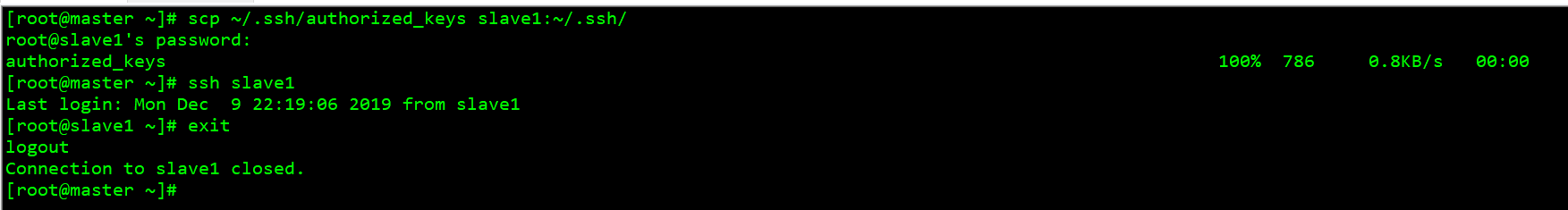

Run the command above once for each slave node; slave1 is the node name.

scp ~/.ssh/authorized_keys slave1:~/.ssh/    # copy the authorized_keys file back to every node; slave1 is the node name

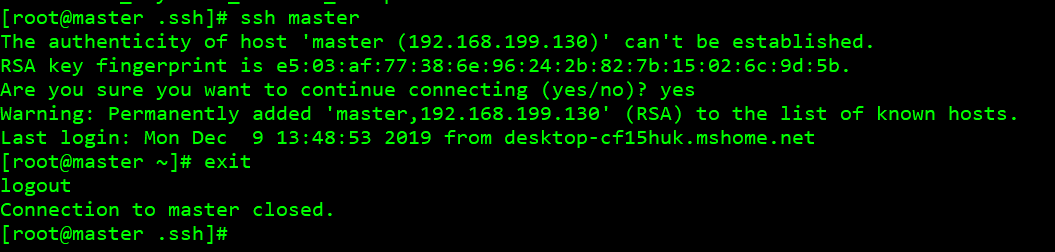

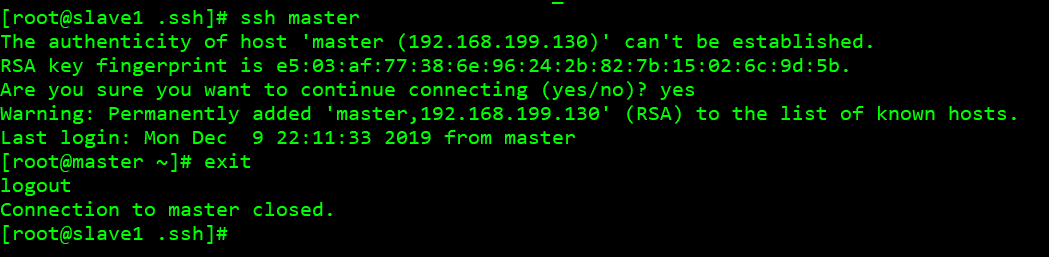
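With more than one worker, the order matters: every worker's key has to be collected before the merged authorized_keys file is copied back out, otherwise the nodes served first receive an incomplete file. The sketch below only prints the commands so they can be reviewed first. `print_key_exchange_commands` is a hypothetical helper, and `slave2` in the usage line is an assumed second node that does not appear in these notes.

```shell
# Hypothetical helper: print the key-exchange commands for the given nodes.
# Nothing is executed; pipe the output to sh after reviewing it.
print_key_exchange_commands() {
    # Pass 1: pull each node's public key into the local authorized_keys.
    for node in "$@"; do
        echo "ssh $node cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys"
    done
    # Pass 2: push the merged file back out once all keys are collected.
    for node in "$@"; do
        echo "scp ~/.ssh/authorized_keys $node:~/.ssh/"
    done
}

# Typical call (not run here):
#   print_key_exchange_commands slave1 slave2 | sh
```

To confirm the result without risking a password prompt, `ssh -o BatchMode=yes slave1 true` should exit with status 0 once key-based login works.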

As shown, the nodes can now log in to each other without a password.

Extract Scala and Spark

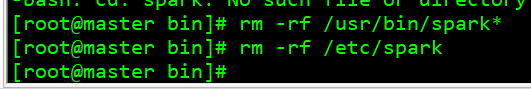

1. Remove the Spark that ships with CDH:

rm -rf /usr/bin/spark*

rm -rf /etc/spark

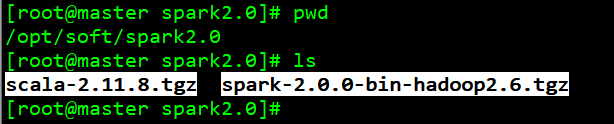

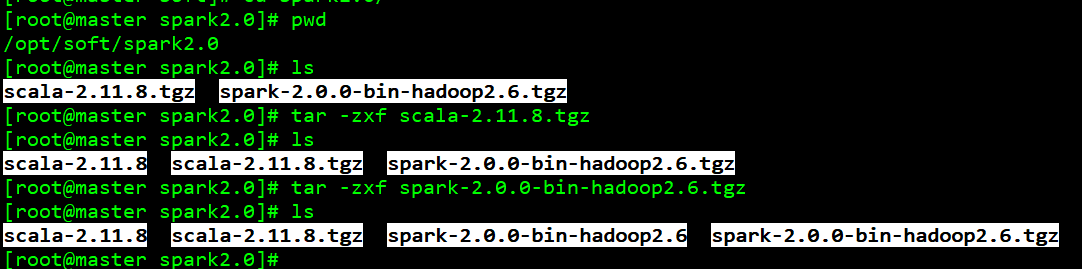

2. Upload spark-2.0.0-bin-hadoop2.6.tgz and scala-2.11.8.tgz to /opt/soft/spark2.0 and extract them:

tar -zxf scala-2.11.8.tgz

tar -zxf spark-2.0.0-bin-hadoop2.6.tgz

Edit /etc/profile (vi /etc/profile) and add the following:

export HADOOP_HOME=/opt/cloudera/parcels/CDH/lib/hadoop

export SPARK_HOME=/opt/soft/spark2.0/spark-2.0.0-bin-hadoop2.6

export SCALA_HOME=/opt/soft/spark2.0/scala-2.11.8

export JAVA_HOME=/usr/java/jdk1.7.0_67-cloudera

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin:$SPARK_HOME/bin:$HADOOP_HOME/bin:$SCALA_HOME/bin

export HADOOP_CONF_DIR=/etc/hadoop/conf

Run source /etc/profile for the changes to take effect.

Configure these environment variables on both nodes.

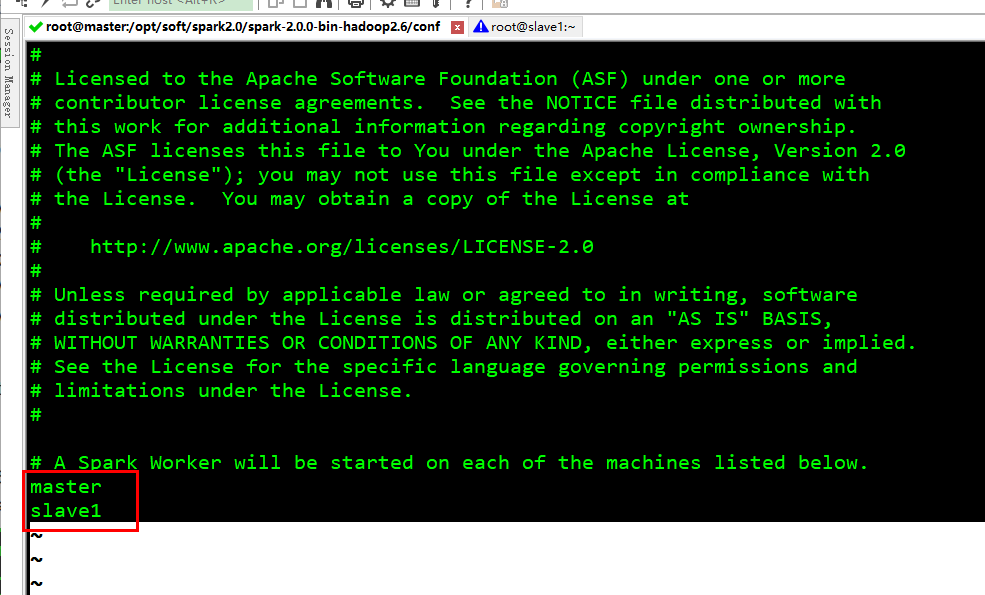

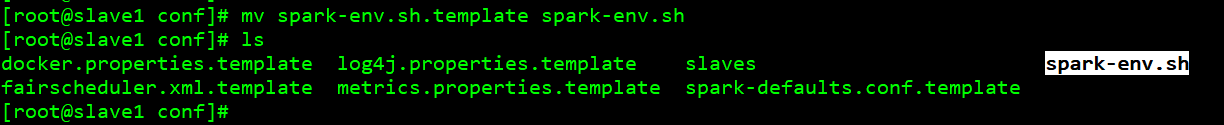
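A typo in /etc/profile is easy to miss, since `source` does not complain about a variable that points nowhere. The following sketch checks the result after sourcing the file; both function names are hypothetical helpers written for this illustration.

```shell
# Hypothetical helper: report each named variable that is unset or
# does not point at an existing directory. Returns 1 if any check fails.
check_env_dirs() {
    rc=0
    for name in "$@"; do
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name is not set"
            rc=1
        elif [ ! -d "$value" ]; then
            echo "$name=$value is not a directory"
            rc=1
        fi
    done
    return $rc
}

# Hypothetical helper: print every entry of a PATH-style string that is
# not an existing directory (for example a mistyped "$HADOOP_HOME=/bin").
bad_path_entries() {
    old_ifs=$IFS
    IFS=:
    for entry in $1; do
        [ -d "$entry" ] || echo "$entry"
    done
    IFS=$old_ifs
}

# Typical calls (not run here):
#   check_env_dirs HADOOP_HOME SPARK_HOME SCALA_HOME JAVA_HOME HADOOP_CONF_DIR
#   bad_path_entries "$PATH"
```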

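3. Edit the files under $SPARK_HOME/conf

mv slaves.template slaves    # slaves holds the list of worker node hostnames

mv spark-env.sh.template spark-env.sh    # spark-env.sh sets some environment variables; since we run in YARN mode, nothing needs to be configured in it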

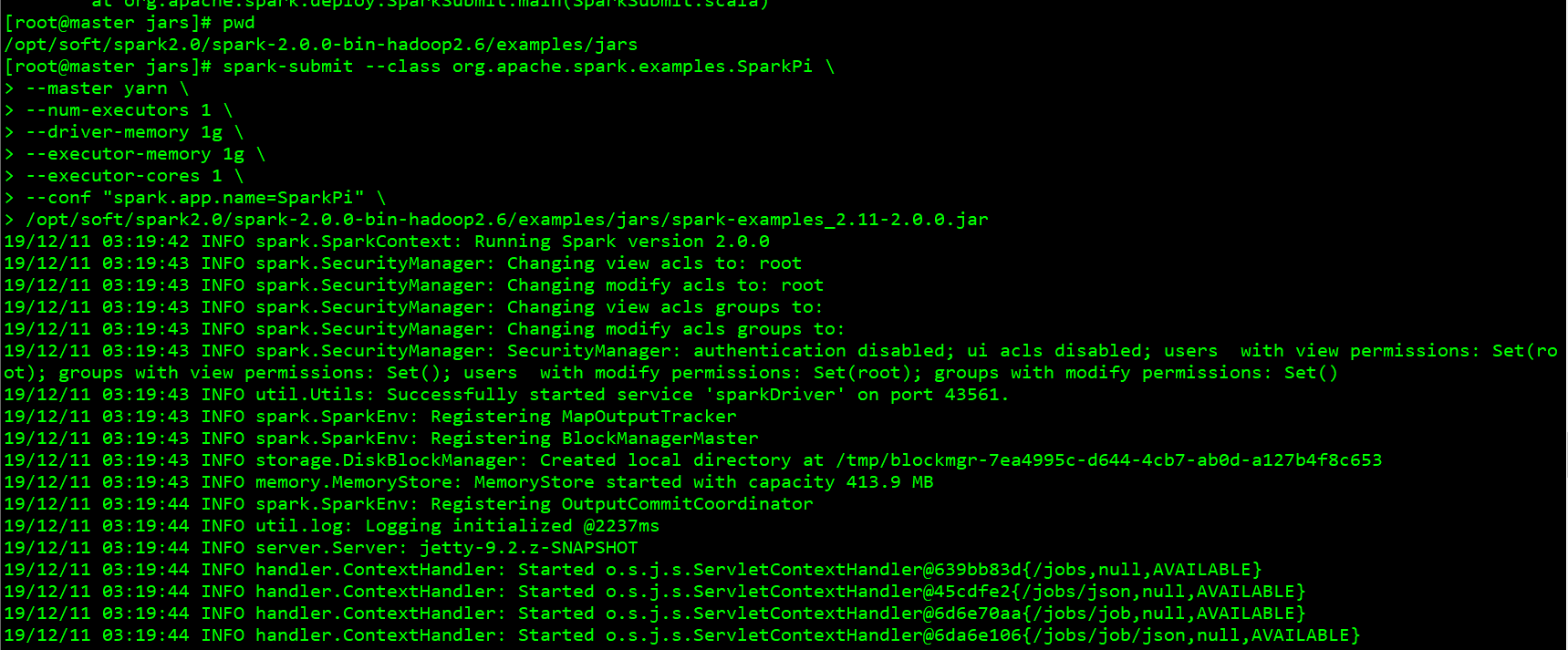
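The slaves file is plain text with one worker hostname per line. A minimal sketch of writing it from a list follows; `write_worker_list` is a hypothetical helper, and `slave2` in the usage line is an assumed hostname. As far as I know this file is only read by Spark's standalone start scripts, so under YARN it mainly serves as documentation of the cluster layout.

```shell
# Hypothetical helper: write the given hostnames to a file, one per line,
# replacing whatever the file contained before.
write_worker_list() {
    target=$1
    shift
    printf '%s\n' "$@" > "$target"
}

# Typical call (not run here):
#   write_worker_list "$SPARK_HOME/conf/slaves" slave1 slave2
```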

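4. Run a test

Before 2.0, Spark on YARN had two run modes, yarn-cluster and yarn-client; the former is recommended.

In 2.0, the yarn-cluster and yarn-client values of --master are both deprecated; use --master yarn for both (the deploy mode is chosen separately with --deploy-mode).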
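For scripts written against the older syntax, the mapping is mechanical. The sketch below shows it; `modern_master_args` is a hypothetical helper name, while `--master` and `--deploy-mode` are the actual spark-submit options.

```shell
# Hypothetical helper: translate a pre-2.0 --master value into the
# equivalent Spark 2.0 arguments.
modern_master_args() {
    case "$1" in
        yarn-cluster) echo "--master yarn --deploy-mode cluster" ;;
        yarn-client)  echo "--master yarn --deploy-mode client" ;;
        *)            echo "--master $1" ;;
    esac
}

# Typical call (not run here):
#   spark-submit $(modern_master_args yarn-cluster) --class ... app.jar
```

When --deploy-mode is omitted, spark-submit uses client mode, so a plain --master yarn behaves like the old yarn-client.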

run-example is a convenient way to test the environment:

run-example SparkPi    # runs in local mode

To run in distributed mode:

spark-submit --class org.apache.spark.examples.SparkPi \
  --master yarn \
  --num-executors 1 \
  --driver-memory 1g \
  --executor-memory 1g \
  --executor-cores 1 \
  --conf "spark.app.name=SparkPi" \
  /opt/soft/spark2.0/spark-2.0.0-bin-hadoop2.6/examples/jars/spark-examples_2.11-2.0.0.jar

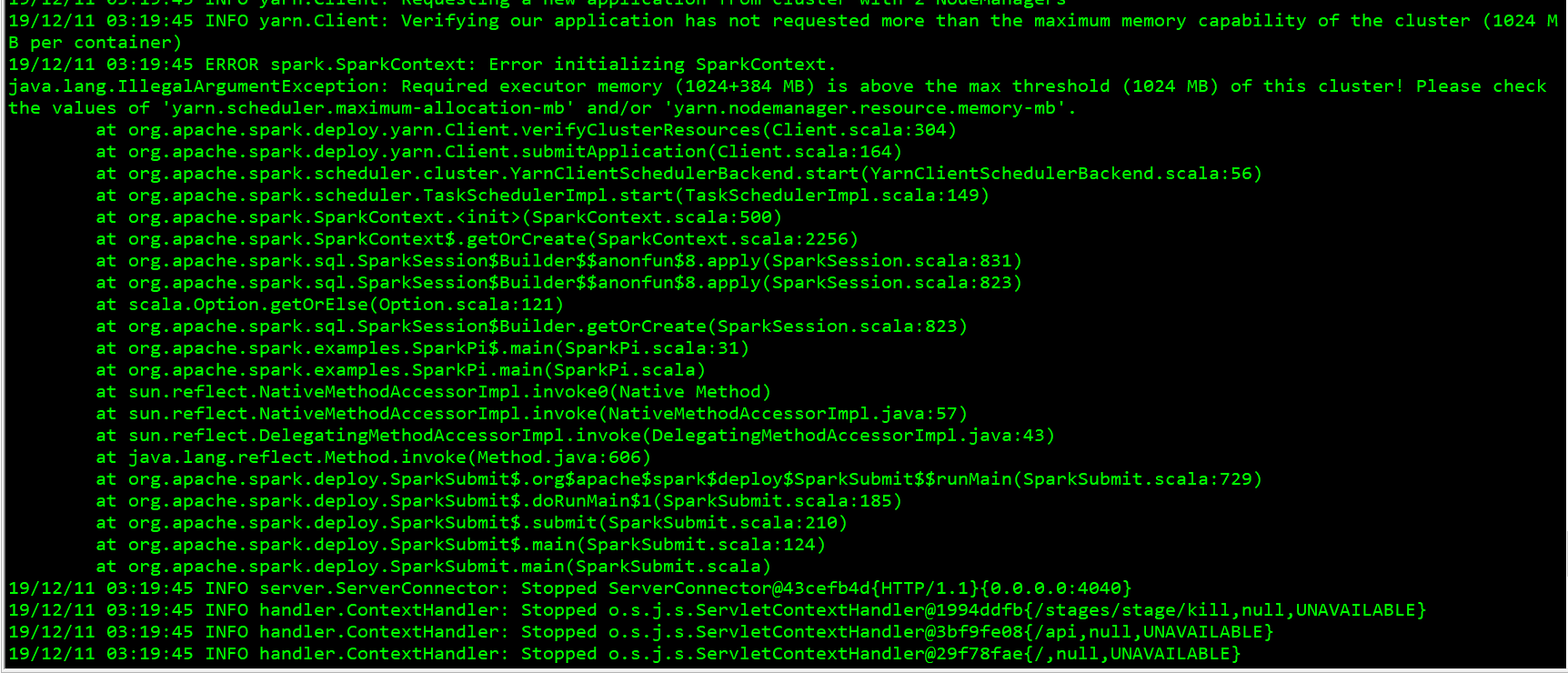

可以看到报错了

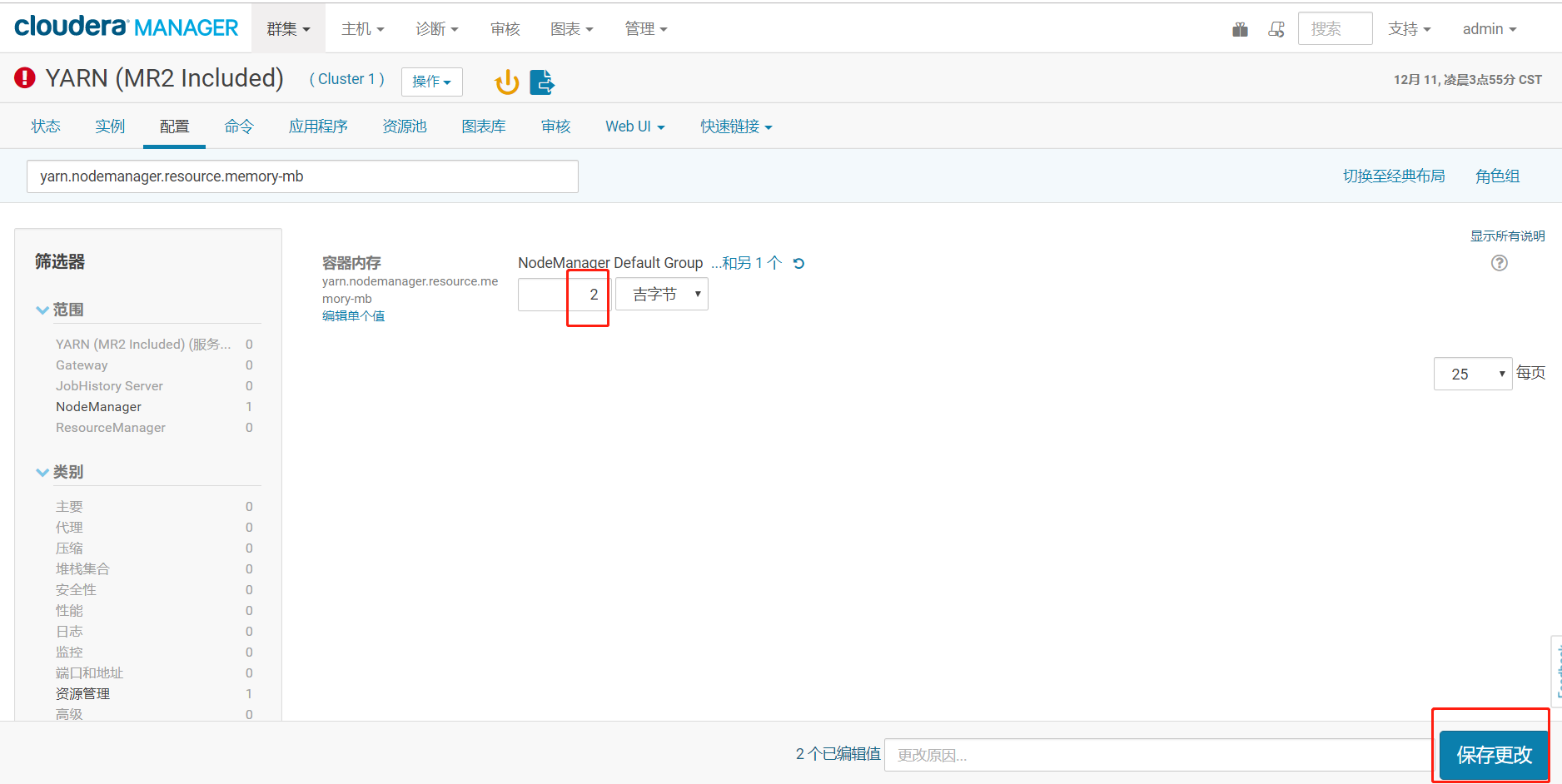

内存不足, 报错的话, 在 cm 里进行 yarn 的配置, 如下 2 个设置为 2g:

yarn.scheduler.maximum-allocation-mb

yarn.nodemanager.resource.memory-mb

Search for these properties in CM:

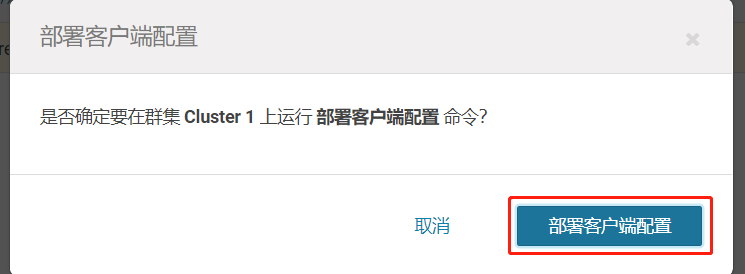

What Deploy Client Configuration does: it syncs the parameters changed in the CM UI to the XML configuration files on every node.

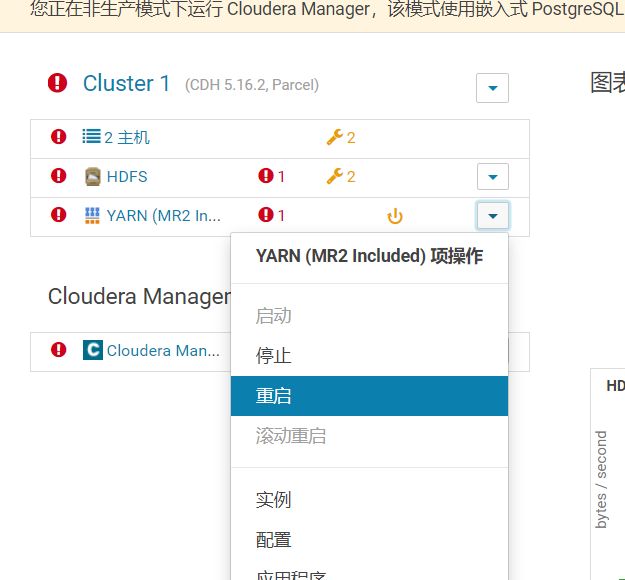

Then restart the YARN service for the changes to take effect.
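One plausible reading of why a 1g executor does not fit: the container Spark requests from YARN is larger than --executor-memory, because an off-heap overhead is added on top. The sketch below estimates the request, assuming Spark 2.0's documented default for spark.yarn.executor.memoryOverhead (10% of executor memory, with a minimum of 384 MB). `container_mb` is a hypothetical helper, and the cluster's original limits are only visible in the screenshots, so the 1024 MB comparison is an assumption.

```shell
# Hypothetical helper: approximate memory (in MB) that YARN must grant
# for one executor, assuming overhead = max(384, 10% of executor memory).
container_mb() {
    executor_mb=$1
    overhead_mb=$((executor_mb / 10))
    [ "$overhead_mb" -ge 384 ] || overhead_mb=384
    echo $((executor_mb + overhead_mb))
}

# A 1g executor needs roughly 1408 MB:
#   container_mb 1024
```

Under that assumption, a 1024 MB executor asks for about 1408 MB, which a 1024 MB yarn.scheduler.maximum-allocation-mb would reject and a 2 GB limit would allow.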

Run it again:

19/12/11 04:50:11 INFO server.ServerConnector: Stopped ServerConnector@3eac12e0{HTTP/1.1}{0.0.0.0:4041}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1994ddfb{/stages/stage/kill,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3bf9fe08{/api,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@29f78fae{/,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@37204e58{/static,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4183d912{/executors/threadDump/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3ef0642{/executors/threadDump,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1a25417f{/executors/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@496168c4{/executors,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4902985e{/environment/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5742691b{/environment,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6f0b6d81{/storage/rdd/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3d2b710e{/storage/rdd,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@424ace42{/storage/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@23e5c67f{/storage,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@4c254927{/stages/pool/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5e2acdad{/stages/pool,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7775e958{/stages/stage/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7fc94782{/stages/stage,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@12859445{/stages/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@66ff8927{/stages,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6da6e106{/jobs/job/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6d6e70aa{/jobs/job,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@45cdfe2{/jobs/json,null,UNAVAILABLE}

19/12/11 04:50:11 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@639bb83d{/jobs,null,UNAVAILABLE}

19/12/11 04:50:11 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.199.130:4041

19/12/11 04:50:11 WARN cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

19/12/11 04:50:11 INFO cluster.YarnClientSchedulerBackend: Stopped

19/12/11 04:50:11 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

19/12/11 04:50:11 INFO memory.MemoryStore: MemoryStore cleared

19/12/11 04:50:11 INFO storage.BlockManager: BlockManager stopped

19/12/11 04:50:11 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

19/12/11 04:50:11 WARN metrics.MetricsSystem: Stopping a MetricsSystem that is not running

19/12/11 04:50:11 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

19/12/11 04:50:11 INFO spark.SparkContext: Successfully stopped SparkContext

Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:162)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3885)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3868)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:3850)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6826)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4562)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4532)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4505)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:884)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:328)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:641)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2278)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2274)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2272)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)

at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2744)

at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2713)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:870)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:866)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:866)

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:859)

at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:1817)

at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:597)

at org.apache.spark.deploy.yarn.Client.prepareLocalResources(Client.scala:385)

at org.apache.spark.deploy.yarn.Client.createContainerLaunchContext(Client.scala:834)

at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:167)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:56)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:149)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:500)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2256)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$8.apply(SparkSession.scala:831)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$8.apply(SparkSession.scala:823)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:823)

at org.apache.spark.examples.SparkPi$.main(SparkPi.scala:31)

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:729)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkFsPermission(DefaultAuthorizationProvider.java:279)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:260)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.check(DefaultAuthorizationProvider.java:240)

at org.apache.hadoop.hdfs.server.namenode.DefaultAuthorizationProvider.checkPermission(DefaultAuthorizationProvider.java:162)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:152)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3885)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:3868)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:3850)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAncestorAccess(FSNamesystem.java:6826)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInternal(FSNamesystem.java:4562)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4532)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4505)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:884)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:328)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:641)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2278)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2274)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2272)

at org.apache.hadoop.ipc.Client.call(Client.java:1469)

at org.apache.hadoop.ipc.Client.call(Client.java:1400)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy16.mkdirs(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:539)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy17.mkdirs(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2742)

... 30 more

19/12/11 04:50:11 INFO util.ShutdownHookManager: Shutdown hook called

19/12/11 04:50:11 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-b384a292-7755-4080-a42d-29b44ad13b95

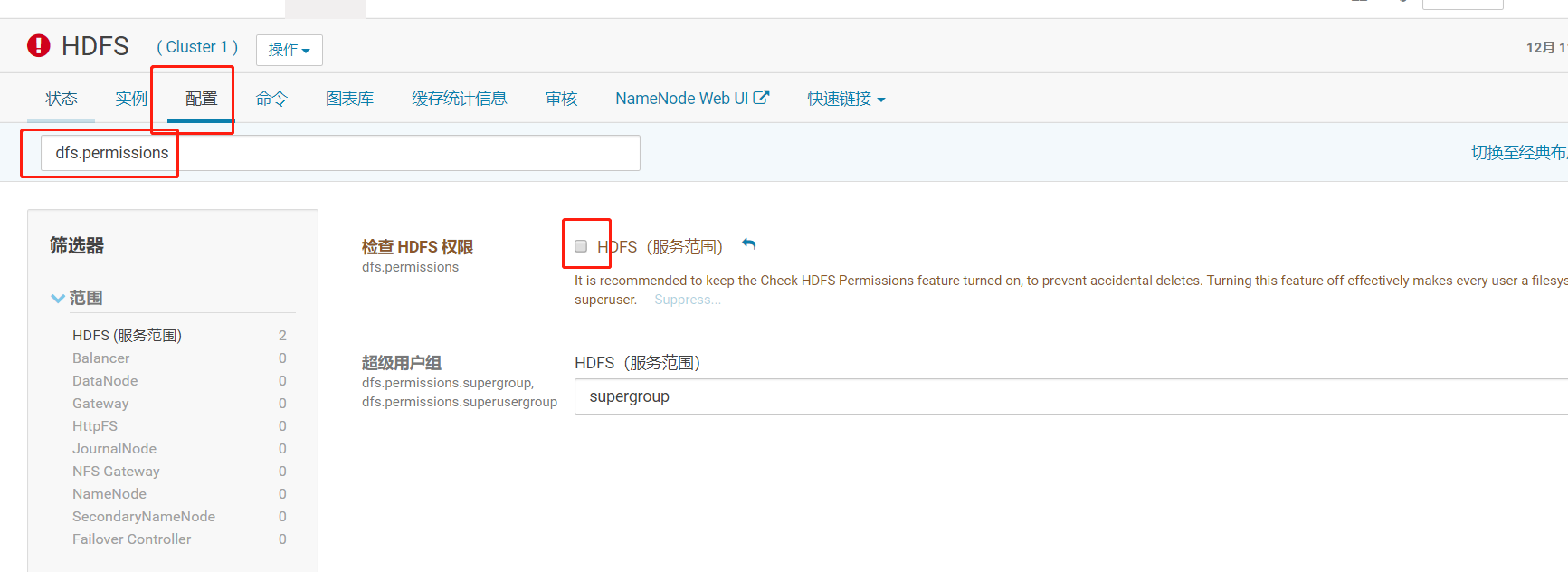

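This problem can be fixed as follows:

Clear this checkbox. It is selected by default; once it is cleared, the error goes away.

Then restart HDFS.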

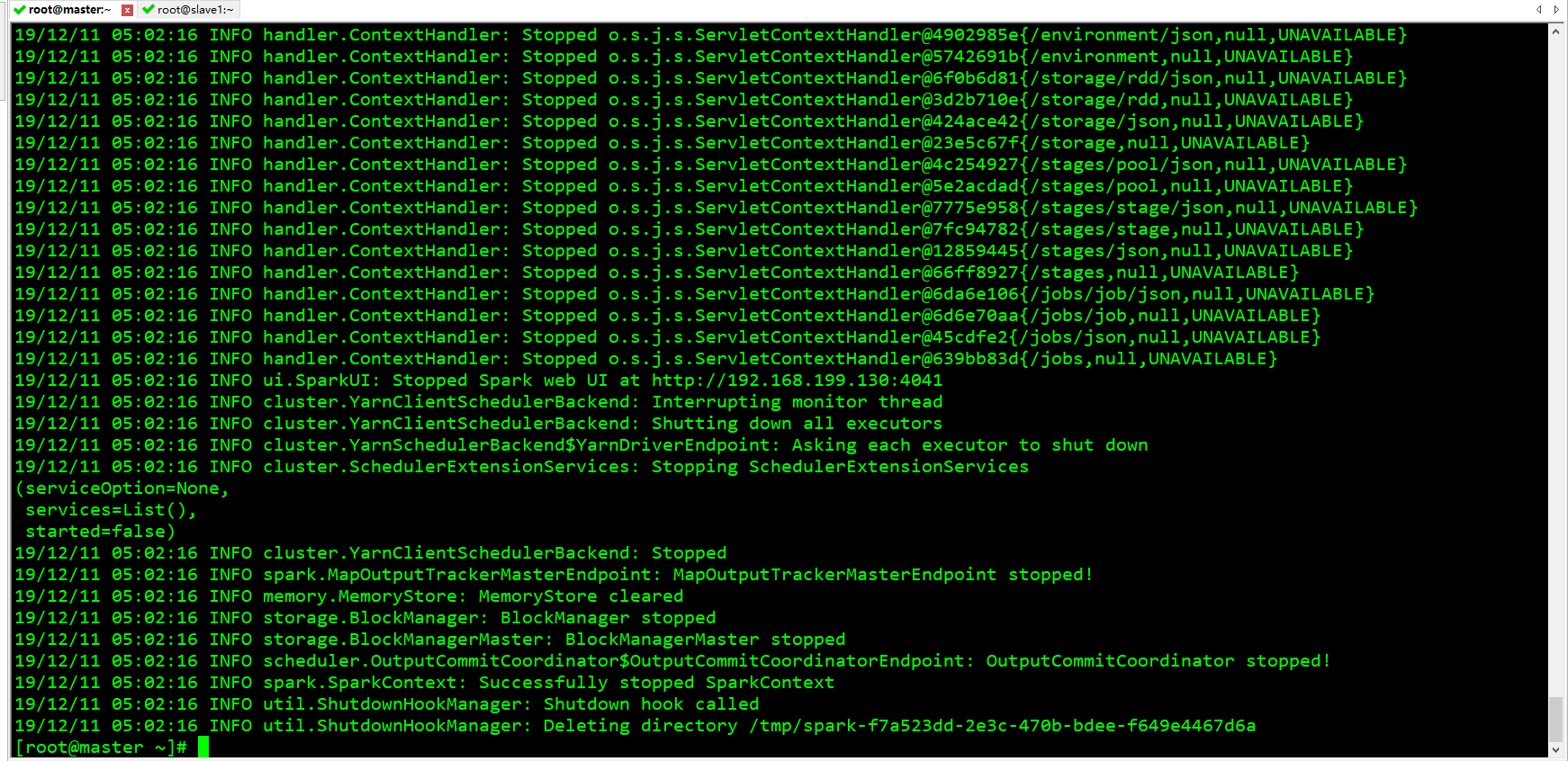
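The exception message itself names the user (root) and the directory it could not write to (/user). An alternative that keeps permission checking enabled is to give that user an HDFS home directory. This is a sketch of that other approach, not the fix used in these notes: `suggest_home_dir_fix` is a hypothetical helper that only prints the commands, and it assumes the HDFS superuser account is named `hdfs` and that `sudo` is available.

```shell
# Hypothetical helper: read the user= field from an AccessControlException
# message and print commands that would create that user's HDFS home
# directory. Nothing is executed.
suggest_home_dir_fix() {
    user=$(printf '%s\n' "$1" | sed -n 's/.*user=\([^,]*\),.*/\1/p')
    [ -n "$user" ] || { echo "no user= field found" >&2; return 1; }
    echo "sudo -u hdfs hdfs dfs -mkdir -p /user/$user"
    echo "sudo -u hdfs hdfs dfs -chown $user /user/$user"
}

# Typical call (not run here):
#   suggest_home_dir_fix 'Permission denied: user=root, access=WRITE, inode="/user":hdfs:supergroup:drwxr-xr-x'
```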

Run it again.

As shown, it succeeds this time!