本文写的非常好,但是翻译的质量不是很好,因此本文基于翻译做了修改。

转自http://www.oschina.net/translate/what-every-programmer-should-know-about-memory-part1

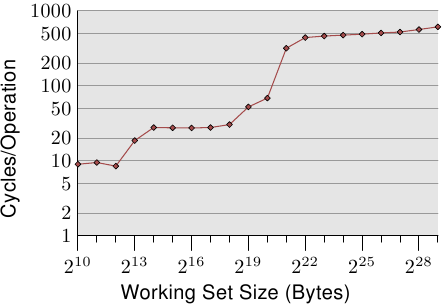

| [ Editor's introduction: Ulrich Drepper recently approached us asking if we would be interested in publishing a lengthy document he had written on how memory and software interact. We did not have to look at the text for long to realize that it would be of interest to many LWN readers. Memory usage is often the determining factor in how software performs, but good information on how to avoid memory bottlenecks is hard to find. This series of articles should change that situation.

The original document prints out at over 100 pages. We will be splitting it into about seven segments, each run 1-2 weeks after its predecessor. Once the entire series is out, Ulrich will be releasing the full text. Reformatting the text from the original LaTeX has been a bit of a challenge, but the results, hopefully, will be good. For ease of online reading, Ulrich's footnotes have been placed {inline in the text}. Hyperlinked cross-references (and [bibliography references]) will not be possible until the full series is published. Many thanks to Ulrich for allowing LWN to publish this material; we hope that it will lead to more memory-efficient software across our systems in the near future.] |

译者信息

[编辑的话: Ulrich Drepper最近问我们,是不是有兴趣发表一篇他写的内存方面的长文。我们不用看太多就已经知道,LWN的读者们会喜欢这篇文章的。内存的使用常常是软件性能的决定性因子,而如何避免内存瓶颈的好文章却不好找。这篇文章应该会有所帮助。 他的原文很长,超过100页。我们把它分成了7篇,每隔一到两周发表一篇。7篇发完后,Ulrich会把全文发出来。 对原文重新格式化是个很有挑战性的工作,但愿结果会不错吧。为了便于网上阅读,我们把Ulrich的脚注{放在了文章里},而互相引用的超链接(和[参考书目])要等到全文出来才能提供。 非常感谢Ultich,感谢他让LWN发表这篇文章,期待大家在不久的将来都能写出内存优化很棒的软件。] |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

1 IntroductionIn the early days computers were much simpler. The various components of a system, such as the CPU, memory, mass storage, and network interfaces, were developed together and, as a result, were quite balanced in their performance. For example, the memory and network interfaces were not (much) faster than the CPU at providing data. This situation changed once the basic structure of computers stabilized and hardware developers concentrated on optimizing individual subsystems. Suddenly the performance of some components of the computer fell significantly behind and bottlenecks developed. This was especially true for mass storage and memory subsystems which, for cost reasons, improved more slowly relative to other components. |

译者信息

1 简介早期计算机比现在更为简单。系统的各种组件例如CPU,内存,大容量存储器和网口,由于被共同开发因而有非常均衡的表现。例如,内存和网口并不比CPU在提供数据的时候更(特别的)快。 随着计算机稳定的基本结构,硬件开发人员开始致力于优化单个子系统,情况开始发生变化。于是电脑一些组件的性能大大的落后因而成为了瓶颈。由于成本原因,大容量存储器和内存子系统相对于其他组件来说改善得更为缓慢。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

The slowness of mass storage has mostly been dealt with using software techniques: operating systems keep most often used (and most likely to be used) data in main memory, which can be accessed at a rate orders of magnitude faster than the hard disk. Cache storage was added to the storage devices themselves, which requires no changes in the operating system to increase performance. {Changes are needed, however, to guarantee data integrity when using storage device caches.} For the purposes of this paper, we will not go into more details of software optimizations for the mass storage access. Unlike storage subsystems, removing the main memory as a bottleneck has proven much more difficult and almost all solutions require changes to the hardware. Today these changes mainly come in the following forms:

|

译者信息

大多情况下,采用软件技术解决大容量存储的慢的问题。如:操作系统将常用(且最有可能被用)的数据放在主存中,因为它比内存快上好几个数量级。或者将缓存加入存储设备中,这样就可以在不修改操作系统的前提下提升性能。{然而,为了在使用缓存时保证数据的完整性,仍然要作出一些修改。}这些内容不在本文的谈论范围之内,就不作赘述了。 与存储子系统不同,消除内存瓶颈非常困难,而且几乎每种方案都需要对硬件作出修改。目前,这些变更主要有以下这些方式:

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| For the most part, this document will deal with CPU caches and some effects of memory controller design. In the process of exploring these topics, we will explore DMA and bring it into the larger picture. However, we will start with an overview of the design for today's commodity hardware. This is a prerequisite to understanding the problems and the limitations of efficiently using memory subsystems. We will also learn about, in some detail, the different types of RAM and illustrate why these differences still exist.

This document is in no way all inclusive and final. It is limited to commodity hardware and further limited to a subset of that hardware. Also, many topics will be discussed in just enough detail for the goals of this paper. For such topics, readers are recommended to find more detailed documentation. |

译者信息

本文主要关心的是CPU缓存和内存控制器的设计的一些效果。在讨论这些主题的过程中,我们还会研究DMA。不过,我们首先会从当今商用硬件的设计谈起。这有助于我们理解目前在使用内存子系统时可能遇到的问题和限制。我们还会详细介绍RAM的分类,说明为什么会存在这么多不同类型的内存。 本文不会包括所有内容,也不会包括最终性质的内容。我们的讨论范围仅止于商用硬件,而且只限于其中的一小部分。另外,本文中的许多论题,我们只会点到为止,以达到本文目标为标准。对于这些论题,大家可以阅读其它文档,获得更详细的说明。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

When it comes to operating-system-specific details and solutions, the text exclusively describes Linux. At no time will it contain any information about other OSes. The author has no interest in discussing the implications for other OSes. If the reader thinks s/he has to use a different OS they have to go to their vendors and demand they write documents similar to this one. One last comment before the start. The text contains a number of occurrences of the term “usually” and other, similar qualifiers. The technology discussed here exists in many, many variations in the real world and this paper only addresses the most common, mainstream versions. It is rare that absolute statements can be made about this technology, thus the qualifiers. |

译者信息

当本文提到操作系统特定的细节和解决方案时,针对的都是Linux。无论何时都不会包含别的操作系统的任何信息,作者无意讨论其他操作系统的情况。如果读者认为他/她不得不使用别的操作系统,那么必须去要求供应商提供其操作系统类似于本文的文档。 在开始之前最后的一点说明,本文包含大量出现的术语“经常”和别的类似的限定词。这里讨论的技术在现实中存在于很多不同的实现,所以本文只阐述使用得最广泛最主流的版本。在阐述中很少有地方能用到绝对的限定词。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

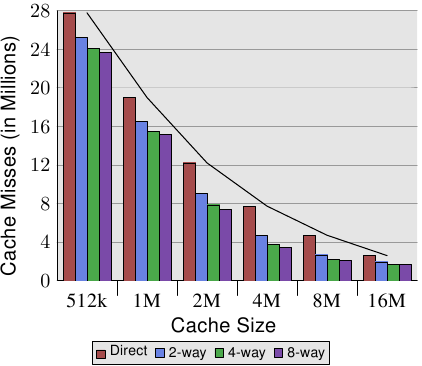

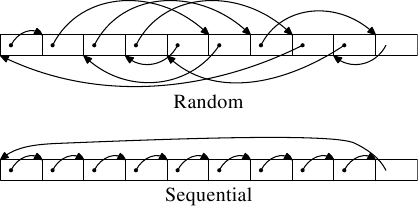

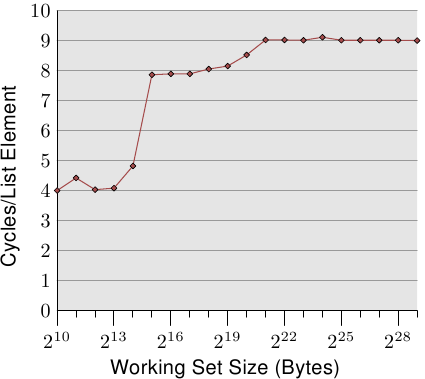

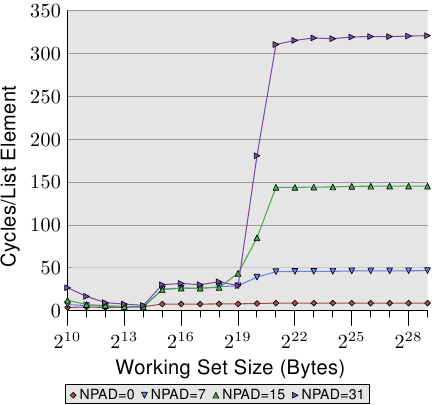

1.1 Document StructureThis document is mostly for software developers. It does not go into enough technical details of the hardware to be useful for hardware-oriented readers. But before we can go into the practical information for developers a lot of groundwork must be laid. To that end, the second section describes random-access memory (RAM) in technical detail. This section's content is nice to know but not absolutely critical to be able to understand the later sections. Appropriate back references to the section are added in places where the content is required so that the anxious reader could skip most of this section at first. The third section goes into a lot of details of CPU cache behavior. Graphs have been used to keep the text from being as dry as it would otherwise be. This content is essential for an understanding of the rest of the document. Section 4 describes briefly how virtual memory is implemented. This is also required groundwork for the rest. |

译者信息

1.1文档结构这个文档主要视为软件开发者而写的。本文不会涉及太多硬件细节,所以喜欢硬件的读者也许不会觉得有用。但是在我们讨论一些有用的细节之前,我们先要描述足够多的背景。 在这个基础上,本文的第二部分将描述RAM(随机寄存器)。懂得这个部分的内容很好,但是此部分的内容并不是懂得其后内容必须部分。我们会在之后引用不少之前的部分,所以心急的读者可以先跳过这本分。 第三部分会谈到不少关于CPU缓存行为模式的内容。我们会列出一些图标,这样你们不至于觉得太枯燥。第三部分对于理解整个文章非常重要。第四部分将简短的描述虚拟内存是怎么被实现的。这也是理解其他部分的背景知识。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Section 5 goes into a lot of detail about Non Uniform Memory Access (NUMA) systems. Section 6 is the central section of this paper. It brings together all the previous sections' information and gives programmers advice on how to write code which performs well in the various situations. The very impatient reader could start with this section and, if necessary, go back to the earlier sections to freshen up the knowledge of the underlying technology. Section 7 introduces tools which can help the programmer do a better job. Even with a complete understanding of the technology it is far from obvious where in a non-trivial software project the problems are. Some tools are necessary. In section 8 we finally give an outlook of technology which can be expected in the near future or which might just simply be good to have. |

译者信息

第五部分会提到许多关于Non Uniform Memory Access (NUMA)系统。 第六部分是本文的中心部分。在这个部分里面,我们将回顾其他许多部分中的信息,并且我们将给阅读本文的程序员许多在各种情况下的编程建议。如果你真的很心急,那么你可以直接阅读第六部分,并且我们建议你在必要的时候回到之前的章节回顾一下必要的背景知识。 本文的第七部分将介绍一些能够帮助程序员更好的完成任务的工具。即便在彻底理解了某一项技术的情况下,距离彻底理解在非测试环境下的程序还是很遥远的。我们需要借助一些工具。 第八部分,我们将展望一些在未来我们可能认为好用的科技。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

1.2 Reporting ProblemsThe author intends to update this document for some time. This includes updates made necessary by advances in technology but also to correct mistakes. Readers willing to report problems are encouraged to send email. 1.3 ThanksI would like to thank Johnray Fuller and especially Jonathan Corbet for taking on part of the daunting task of transforming the author's form of English into something more traditional. Markus Armbruster provided a lot of valuable input on problems and omissions in the text. 1.4 About this DocumentThe title of this paper is an homage to David Goldberg's classic paper “What Every Computer Scientist Should Know About Floating-Point Arithmetic” [goldberg]. Goldberg's paper is still not widely known, although it should be a prerequisite for anybody daring to touch a keyboard for serious programming. |

译者信息

1.2 反馈问题 作者会不定期更新本文档。这些更新既包括伴随技术进步而来的更新也包含更改错误。非常欢迎有志于反馈问题的读者发送电子邮件。 1.3 致谢 我首先需要感谢Johnray Fuller尤其是Jonathan Corbet,感谢他们将作者的英语转化成为更为规范的形式。Markus Armbruster提供大量本文中对于问题和缩写有价值的建议。 1.4 关于本文 本文题目对David Goldberg的经典文献《What Every Computer Scientist Should Know About Floating-Point Arithmetic》[goldberg]表示致敬。Goldberg的论文虽然不普及,但是对于任何有志于严格编程的人都会是一个先决条件。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

2 Commodity Hardware TodayUnderstanding commodity hardware is important because specialized hardware is in retreat. Scaling these days is most often achieved horizontally instead of vertically, meaning today it is more cost-effective to use many smaller, connected commodity computers instead of a few really large and exceptionally fast (and expensive) systems. This is the case because fast and inexpensive network hardware is widely available. There are still situations where the large specialized systems have their place and these systems still provide a business opportunity, but the overall market is dwarfed by the commodity hardware market. Red Hat, as of 2007, expects that for future products, the “standard building blocks” for most data centers will be a computer with up to four sockets, each filled with a quad core CPU that, in the case of Intel CPUs, will be hyper-threaded. {Hyper-threading enables a single processor core to be used for two or more concurrent executions with just a little extra hardware.} This means the standard system in the data center will have up to 64 virtual processors. Bigger machines will be supported, but the quad socket, quad CPU core case is currently thought to be the sweet spot and most optimizations are targeted for such machines. |

译者信息

2 商用硬件现状鉴于目前专用硬件正在逐渐淡出,理解商用硬件的现状变得十分重要。现如今,人们更多的采用水平扩展,也就是说,用大量小型、互联的商用计算机代替巨大、超快(但超贵)的系统。原因在于,快速而廉价的网络硬件已经崛起。那些大型的专用系统仍然有一席之地,但已被商用硬件后来居上。2007年,Red Hat认为,未来构成数据中心的“积木”将会是拥有最多4个插槽的计算机,每个插槽插入一个四核CPU,这些CPU都是超线程的。{超线程使单个处理器核心能同时处理两个以上的任务,只需加入一点点额外硬件}。也就是说,这些数据中心中的标准系统拥有最多64个虚拟处理器。当然可以支持更大的系统,但人们认为4插槽、4核CPU是最佳配置,绝大多数的优化都针对这样的配置。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

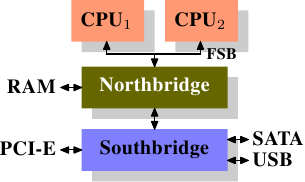

Large differences exist in the structure of commodity computers. That said, we will cover more than 90% of such hardware by concentrating on the most important differences. Note that these technical details tend to change rapidly, so the reader is advised to take the date of this writing into account. Over the years the personal computers and smaller servers standardized on a chipset with two parts: the Northbridge and Southbridge. Figure 2.1 shows this structure.

All CPUs (two in the previous example, but there can be more) are connected via a common bus (the Front Side Bus, FSB) to the Northbridge. The Northbridge contains, among other things, the memory controller, and its implementation determines the type of RAM chips used for the computer. Different types of RAM, such as DRAM, Rambus, and SDRAM, require different memory controllers. |

译者信息

在不同商用计算机之间,也存在着巨大的差异。不过,我们关注在主要的差异上,可以涵盖到超过90%以上的硬件。需要注意的是,这些技术上的细节往往日新月异,变化极快,因此大家在阅读的时候也需要注意本文的写作时间。 这么多年来,个人计算机和小型服务器被标准化到了一个芯片组上,它由两部分组成: 北桥和南桥,见图2.1。

CPU通过一条通用总线(前端总线,FSB)连接到北桥。北桥主要包括内存控制器和其它一些组件,内存控制器决定了RAM芯片的类型。不同的类型,包括DRAM、Rambus和SDRAM等等,要求不同的内存控制器。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

To reach all other system devices, the Northbridge must communicate with the Southbridge. The Southbridge, often referred to as the I/O bridge, handles communication with devices through a variety of different buses. Today the PCI, PCI Express, SATA, and USB buses are of most importance, but PATA, IEEE 1394, serial, and parallel ports are also supported by the Southbridge. Older systems had AGP slots which were attached to the Northbridge. This was done for performance reasons related to insufficiently fast connections between the Northbridge and Southbridge. However, today the PCI-E slots are all connected to the Southbridge. Such a system structure has a number of noteworthy consequences:

|

译者信息

为了连通其它系统设备,北桥需要与南桥通信。南桥又叫I/O桥,通过多条不同总线与设备们通信。目前,比较重要的总线有PCI、PCI Express、SATA和USB总线,除此以外,南桥还支持PATA、IEEE 1394、串行口和并行口等。比较老的系统上有连接北桥的AGP槽。那是由于南北桥间缺乏高速连接而采取的措施。现在的PCI-E都是直接连到南桥的。 这种结构有一些需要注意的地方:

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| A couple of bottlenecks are immediately apparent in this design. One such bottleneck involves access to RAM for devices. In the earliest days of the PC, all communication with devices on either bridge had to pass through the CPU, negatively impacting overall system performance. To work around this problem some devices became capable of direct memory access (DMA). DMA allows devices, with the help of the Northbridge, to store and receive data in RAM directly without the intervention of the CPU (and its inherent performance cost). Today all high-performance devices attached to any of the buses can utilize DMA. While this greatly reduces the workload on the CPU, it also creates contention for the bandwidth of the Northbridge as DMA requests compete with RAM access from the CPUs. This problem, therefore, must to be taken into account. |

译者信息 在上面这种设计中,瓶颈马上出现了。第一个瓶颈与设备对RAM的访问有关。早期,所有设备之间的通信都需要经过CPU,结果严重影响了整个系统的性能。为了解决这个问题,有些设备加入了直接内存访问(DMA)的能力。DMA允许设备在北桥的帮助下,无需CPU的干涉,直接读写RAM。到了今天,所有高性能的设备都可以使用DMA。虽然DMA大大降低了CPU的负担,却占用了北桥的带宽,与CPU形成了争用。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

A second bottleneck involves the bus from the Northbridge to the RAM. The exact details of the bus depend on the memory types deployed. On older systems there is only one bus to all the RAM chips, so parallel access is not possible. Recent RAM types require two separate buses (or channels as they are called for DDR2, see Figure 2.8) which doubles the available bandwidth. The Northbridge interleaves memory access across the channels. More recent memory technologies (FB-DRAM, for instance) add more channels. With limited bandwidth available, it is important to schedule memory access in ways that minimize delays. As we will see, processors are much faster and must wait to access memory, despite the use of CPU caches. If multiple hyper-threads, cores, or processors access memory at the same time, the wait times for memory access are even longer. This is also true for DMA operations. |

译者信息

第二个瓶颈来自北桥与RAM间的总线。总线的具体情况与内存的类型有关。在早期的系统上,只有一条总线,因此不能实现并行访问。近期的RAM需要两条独立总线(或者说通道,DDR2就是这么叫的,见图2.8),可以实现带宽加倍。北桥将内存访问交错地分配到两个通道上。更新的内存技术(如FB-DRAM)甚至加入了更多的通道。 由于带宽有限,我们需要以一种使延迟最小化的方式来对内存访问进行调度。我们将会看到,处理器的速度比内存要快得多,需要等待内存。如果有多个超线程核心或CPU同时访问内存,等待时间则会更长。对于DMA也是同样。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

There is more to accessing memory than concurrency, however. Access patterns themselves also greatly influence the performance of the memory subsystem, especially with multiple memory channels. Refer to Section 2.2 for more details of RAM access patterns. On some more expensive systems, the Northbridge does not actually contain the memory controller. Instead the Northbridge can be connected to a number of external memory controllers (in the following example, four of them).

|

译者信息

除了并发以外,访问模式也会极大地影响内存子系统、特别是多通道内存子系统的性能。关于访问模式,可参见2.2节。 在一些比较昂贵的系统上,北桥自己不含内存控制器,而是连接到外部的多个内存控制器上(在下例中,共有4个)。

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| The advantage of this architecture is that more than one memory bus exists and therefore total bandwidth increases. This design also supports more memory. Concurrent memory access patterns reduce delays by simultaneously accessing different memory banks. This is especially true when multiple processors are directly connected to the Northbridge, as in Figure 2.2. For such a design, the primary limitation is the internal bandwidth of the Northbridge, which is phenomenal for this architecture (from Intel). { For completeness it should be mentioned that such a memory controller arrangement can be used for other purposes such as “memory RAID” which is useful in combination with hotplug memory.} |

译者信息 这种架构的好处在于,多条内存总线的存在,使得总带宽也随之增加了。而且也可以支持更多的内存。通过同时访问不同内存区,还可以降低延时。对于像图2.2中这种多处理器直连北桥的设计来说,尤其有效。而这种架构的局限在于北桥的内部带宽,非常巨大(来自Intel)。{出于完整性的考虑,还需要补充一下,这样的内存控制器布局还可以用于其它用途,比如说「内存RAID」,它可以与热插拔技术一起使用。} |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

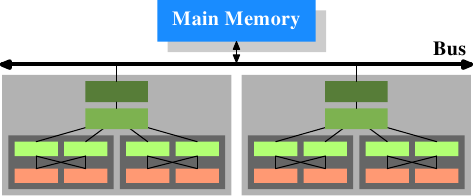

Using multiple external memory controllers is not the only way to increase memory bandwidth. One other increasingly popular way is to integrate memory controllers into the CPUs and attach memory to each CPU. This architecture is made popular by SMP systems based on AMD's Opteron processor. Figure 2.3 shows such a system. Intel will have support for the Common System Interface (CSI) starting with the Nehalem processors; this is basically the same approach: an integrated memory controller with the possibility of local memory for each processor.

With an architecture like this there are as many memory banks available as there are processors. On a quad-CPU machine the memory bandwidth is quadrupled without the need for a complicated Northbridge with enormous bandwidth. Having a memory controller integrated into the CPU has some additional advantages; we will not dig deeper into this technology here. |

译者信息

使用外部内存控制器并不是唯一的办法,另一个最近比较流行的方法是将控制器集成到CPU内部,将内存直连到每个CPU。这种架构的走红归功于基于AMD Opteron处理器的SMP系统。图2.3展示了这种架构。Intel则会从Nehalem处理器开始支持通用系统接口(CSI),基本上也是类似的思路——集成内存控制器,为每个处理器提供本地内存。

通过采用这样的架构,系统里有几个处理器,就可以有几个内存库(memory bank)。比如,在4 CPU的计算机上,不需要一个拥有巨大带宽的复杂北桥,就可以实现4倍的内存带宽。另外,将内存控制器集成到CPU内部还有其它一些优点,这里就不赘述了。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

There are disadvantages to this architecture, too. First of all, because the machine still has to make all the memory of the system accessible to all processors, the memory is not uniform anymore (hence the name NUMA - Non-Uniform Memory Architecture - for such an architecture). Local memory (memory attached to a processor) can be accessed with the usual speed. The situation is different when memory attached to another processor is accessed. In this case the interconnects between the processors have to be used. To access memory attached to CPU2 from CPU1 requires communication across one interconnect. When the same CPU accesses memory attached to CPU4 two interconnects have to be crossed. Each such communication has an associated cost. We talk about “NUMA factors” when we describe the extra time needed to access remote memory. The example architecture in Figure 2.3 has two levels for each CPU: immediately adjacent CPUs and one CPU which is two interconnects away. With more complicated machines the number of levels can grow significantly. There are also machine architectures (for instance IBM's x445 and SGI's Altix series) where there is more than one type of connection. CPUs are organized into nodes; within a node the time to access the memory might be uniform or have only small NUMA factors. The connection between nodes can be very expensive, though, and the NUMA factor can be quite high. |

译者信息

同样也有缺点。首先,系统仍然要让所有内存能被所有处理器所访问,导致内存不再是统一的资源(NUMA即得名于此)。处理器能以正常的速度访问本地内存(连接到该处理器的内存)。但它访问其它处理器的内存时,却需要使用处理器之间的互联通道。比如说,CPU 1如果要访问CPU 2的内存,则需要使用它们之间的互联通道。如果它需要访问CPU 4的内存,那么需要跨越两条互联通道。 使用互联通道是有代价的。在讨论访问远端内存的代价时,我们用「NUMA因子」这个词。在图2.3中,每个CPU有两个层级: 相邻的CPU,以及两个互联通道外的CPU。在更加复杂的系统中,层级也更多。甚至有些机器有不止一种连接,比如说IBM的x445和SGI的Altix系列。CPU被归入节点,节点内的内存访问时间是一致的,或者只有很小的NUMA因子。而在节点之间的连接代价很大,而且有巨大的NUMA因子。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Commodity NUMA machines exist today and will likely play an even greater role in the future. It is expected that, from late 2008 on, every SMP machine will use NUMA. The costs associated with NUMA make it important to recognize when a program is running on a NUMA machine. In Section 5 we will discuss more machine architectures and some technologies the Linux kernel provides for these programs. Beyond the technical details described in the remainder of this section, there are several additional factors which influence the performance of RAM. They are not controllable by software, which is why they are not covered in this section. The interested reader can learn about some of these factors in Section 2.1. They are really only needed to get a more complete picture of RAM technology and possibly to make better decisions when purchasing computers. The following two sections discuss hardware details at the gate level and the access protocol between the memory controller and the DRAM chips. Programmers will likely find this information enlightening since these details explain why RAM access works the way it does. It is optional knowledge, though, and the reader anxious to get to topics with more immediate relevance for everyday life can jump ahead to Section 2.2.5. |

译者信息

目前,已经有商用的NUMA计算机,而且它们在未来应该会扮演更加重要的角色。人们预计,从2008年底开始,每台SMP机器都会使用NUMA。每个在NUMA上运行的程序都应该认识到NUMA的代价。在第5节中,我们将讨论更多的架构,以及Linux内核为这些程序提供的一些技术。 除了本节中所介绍的技术之外,还有其它一些影响RAM性能的因素。它们无法被软件所左右,所以没有放在这里。如果大家有兴趣,可以在第2.1节中看一下。介绍这些技术,仅仅是因为它们能让我们绘制的RAM技术全图更为完整,或者是可能在大家购买计算机时能够提供一些帮助。 以下的两节主要介绍一些入门级的硬件知识,同时讨论内存控制器与DRAM芯片间的访问协议。这些知识解释了内存访问的原理,程序员可能会得到一些启发。不过,这部分并不是必读的,心急的读者可以直接跳到第2.2.5节。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

2.1 RAM TypesThere have been many types of RAM over the years and each type varies, sometimes significantly, from the other. The older types are today really only interesting to the historians. We will not explore the details of those. Instead we will concentrate on modern RAM types; we will only scrape the surface, exploring some details which are visible to the kernel or application developer through their performance characteristics. The first interesting details are centered around the question why there are different types of RAM in the same machine. More specifically, why there are both static RAM (SRAM {In other contexts SRAM might mean “synchronous RAM”.}) and dynamic RAM (DRAM). The former is much faster and provides the same functionality. Why is not all RAM in a machine SRAM? The answer is, as one might expect, cost. SRAM is much more expensive to produce and to use than DRAM. Both these cost factors are important, the second one increasing in importance more and more. To understand these difference we look at the implementation of a bit of storage for both SRAM and DRAM. In the remainder of this section we will discuss some low-level details of the implementation of RAM. We will keep the level of detail as low as possible. To that end, we will discuss the signals at a “logic level” and not at a level a hardware designer would have to use. That level of detail is unnecessary for our purpose here. |

译者信息

2.1 RAM类型这些年来,出现了许多不同类型的RAM,各有差异,有些甚至有非常巨大的不同。那些很古老的类型已经乏人问津,我们就不仔细研究了。我们主要专注于几类现代RAM,剖开它们的表面,研究一下内核和应用开发人员们可以看到的一些细节。 第一个有趣的细节是,为什么在同一台机器中有不同的RAM?或者说得更详细一点,为什么既有静态RAM(SRAM {SRAM还可以表示「同步内存」。}),又有动态RAM(DRAM)。功能相同,前者更快。那么,为什么不全部使用SRAM?答案是,代价。无论在生产还是在使用上,SRAM都比DRAM要贵得多。生产和使用,这两个代价因子都很重要,后者则是越来越重要。为了理解这一点,我们分别看一下SRAM和DRAM一个位的存储的实现过程。 在本节的余下部分,我们将讨论RAM实现的底层细节。我们将尽量控制细节的层面,比如,在「逻辑的层面」讨论信号,而不是硬件设计师那种层面,因为那毫无必要。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

2.1.1 Static RAM

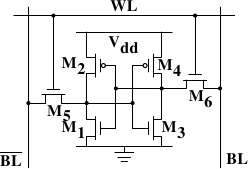

Figure 2.4 shows the structure of a 6 transistor SRAM cell. The core of this cell is formed by the four transistorsM1toM4which form two cross-coupled inverters. They have two stable states, representing 0 and 1 respectively. The state is stable as long as power onVddis available. If access to the state of the cell is needed the word access lineWLis raised. This makes the state of the cell immediately available for reading onBLandBL. If the cell state must be overwritten theBLandBLlines are first set to the desired values and thenWLis raised. Since the outside drivers are stronger than the four transistors (M1throughM4) this allows the old state to be overwritten. See [sramwiki] for a more detailed description of the way the cell works. For the following discussion it is important to note that

There are other, slower and less power-hungry, SRAM forms available, but those are not of interest here since we are looking at fast RAM. These slow variants are mainly interesting because they can be more easily used in a system than dynamic RAM because of their simpler interface. |

译者信息

2.1.1 静态RAM

图2.4展示了6晶体管SRAM的一个单元。核心是4个晶体管M1-M4,它们组成两个交叉耦合的反相器。它们有两个稳定的状态,分别代表0和1。只要保持Vdd有电,状态就是稳定的。 当需要访问单元的状态时,升起字访问线WL。BL和BL上就可以读取状态。如果需要覆盖状态,先将BL和BL设置为期望的值,然后升起WL。由于外部的驱动强于内部的4个晶体管,所以旧状态会被覆盖。 更多详情,可以参考[sramwiki]。为了下文的讨论,需要注意以下问题: 一个单元需要6个晶体管。也有采用4个晶体管的SRAM,但有缺陷。 维持状态需要恒定的电源。 升起WL后立即可以读取状态。信号与其它晶体管控制的信号一样,是直角的(快速在两个状态间变化)。 状态稳定,不需要刷新循环。 SRAM也有其它形式,不那么费电,但比较慢。由于我们需要的是快速RAM,因此不在关注范围内。这些较慢的SRAM的主要优点在于接口简单,比动态RAM更容易使用。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

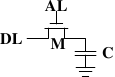

2.1.2 Dynamic RAM Dynamic RAM is, in its structure, much simpler than static RAM. Figure 2.5 shows the structure of a usual DRAM cell design. All it consists of is one transistor and one capacitor. This huge difference in complexity of course means that it functions very differently than static RAM.

A dynamic RAM cell keeps its state in the capacitorC. The transistorMis used to guard the access to the state. To read the state of the cell the access lineALis raised; this either causes a current to flow on the data lineDLor not, depending on the charge in the capacitor. To write to the cell the data lineDLis appropriately set and thenALis raised for a time long enough to charge or drain the capacitor. There are a number of complications with the design of dynamic RAM. The use of a capacitor means that reading the cell discharges the capacitor. The procedure cannot be repeated indefinitely, the capacitor must be recharged at some point. Even worse, to accommodate the huge number of cells (chips with 109 or more cells are now common) the capacity to the capacitor must be low (in the femto-farad range or lower). A fully charged capacitor holds a few 10's of thousands of electrons. Even though the resistance of the capacitor is high (a couple of tera-ohms) it only takes a short time for the capacity to dissipate. This problem is called “leakage”. |

译者信息

2.1.2 动态RAM动态RAM比静态RAM要简单得多。图2.5展示了一种普通DRAM的结构。它只含有一个晶体管和一个电容器。显然,这种复杂性上的巨大差异意味着功能上的迥异。

动态RAM的状态是保持在电容器C中。晶体管M用来控制访问。如果要读取状态,升起访问线AL,这时,可能会有电流流到数据线DL上,也可能没有,取决于电容器是否有电。如果要写入状态,先设置DL,然后升起AL一段时间,直到电容器充电或放电完毕。 动态RAM的设计有几个复杂的地方。由于读取状态时需要对电容器放电,所以这一过程不能无限重复,不得不在某个点上对它重新充电。 更糟糕的是,为了容纳大量单元(现在一般在单个芯片上容纳10的9次方以上的RAM单元),电容器的容量必须很小(0.000000000000001法拉以下)。这样,完整充电后大约持有几万个电子。即使电容器的电阻很大(若干兆欧姆),仍然只需很短的时间就会耗光电荷,称为「泄漏」。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

This leakage is why a DRAM cell must be constantly refreshed. For most DRAM chips these days this refresh must happen every 64ms. During the refresh cycle no access to the memory is possible. For some workloads this overhead might stall up to 50% of the memory accesses (see [highperfdram]). A second problem resulting from the tiny charge is that the information read from the cell is not directly usable. The data line must be connected to a sense amplifier which can distinguish between a stored 0 or 1 over the whole range of charges which still have to count as 1. A third problem is that charging and draining a capacitor is not instantaneous. The signals received by the sense amplifier are not rectangular, so a conservative estimate as to when the output of the cell is usable has to be used. The formulas for charging and discharging a capacitor are |

译者信息

这种泄露就是现在的大部分DRAM芯片每隔64ms就必须进行一次刷新的原因。在刷新期间,对于该芯片的访问是不可能的,这甚至会造成半数任务的延宕。(相关内容请察看【highperfdram】一章) 这个问题的另一个后果就是无法直接读取芯片单元中的信息,而必须通过信号放大器将0和1两种信号间的电势差增大。 最后一个问题在于电容器的冲放电是需要时间的,这就导致了信号放大器读取的信号并不是典型的矩形信号。所以当放大器输出信号的时候就需要一个小小的延宕,相关公式如下 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

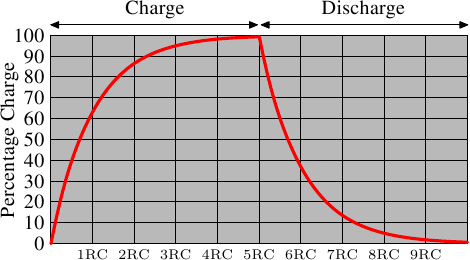

This means it takes some time (determined by the capacity C and resistance R) for the capacitor to be charged and discharged. It also means that the current which can be detected by the sense amplifiers is not immediately available. Figure 2.6 shows the charge and discharge curves. The X—axis is measured in units of RC (resistance multiplied by capacitance) which is a unit of time.

Unlike the static RAM case where the output is immediately available when the word access line is raised, it will always take a bit of time until the capacitor discharges sufficiently. This delay severely limits how fast DRAM can be. The simple approach has its advantages, too. The main advantage is size. The chip real estate needed for one DRAM cell is many times smaller than that of an SRAM cell. The SRAM cells also need individual power for the transistors maintaining the state. The structure of the DRAM cell is also simpler and more regular which means packing many of them close together on a die is simpler. Overall, the (quite dramatic) difference in cost wins. Except in specialized hardware — network routers, for example — we have to live with main memory which is based on DRAM. This has huge implications on the programmer which we will discuss in the remainder of this paper. But first we need to look into a few more details of the actual use of DRAM cells. |

译者信息

这就意味着需要一些时间(时间长短取决于电容C和电阻R)来对电容进行冲放电。另一个负面作用是,信号放大器的输出电流不能立即就作为信号载体使用。图2.6显示了冲放电的曲线,x轴表示的是单位时间下的R*C

与静态RAM可以即刻读取数据不同的是,当要读取动态RAM的时候,必须花一点时间来等待电容的冲放电完全。这一点点的时间最终限制了DRAM的速度。 当然了,这种读取方式也是有好处的。最大的好处在于缩小了规模。一个动态RAM的尺寸是小于静态RAM的。这种规模的减小不单单建立在动态RAM的简单结构之上,也是由于减少了静态RAM的各个单元独立的供电部分。以上也同时导致了动态RAM模具的简单化。 综上所述,由于不可思议的成本差异,除了一些特殊的硬件(包括路由器什么的)之外,我们的硬件大多是使用DRAM的。这一点深深的影响了咱们这些程序员,后文将会对此进行讨论。在此之前,我们还是先了解下DRAM的更多细节。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

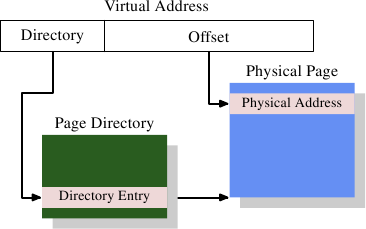

2.1.3 DRAM Access A program selects a memory location using a virtual address. The processor translates this into a physical address and finally the memory controller selects the RAM chip corresponding to that address. To select the individual memory cell on the RAM chip, parts of the physical address are passed on in the form of a number of address lines. It would be completely impractical to address memory locations individually from the memory controller: 4GB of RAM would require 232 address lines. Instead the address is passed encoded as a binary number using a smaller set of address lines. The address passed to the DRAM chip this way must be demultiplexed first. A demultiplexer with N address lines will have 2N output lines. These output lines can be used to select the memory cell. Using this direct approach is no big problem for chips with small capacities. |

译者信息

2.1.3 DRAM 访问 一个程序选择了一个内存位置使用到了一个虚拟地址。处理器转换这个到物理地址最后将内存控制选择RAM芯片匹配了那个地址。在RAM芯片去选择单个内存单元,部分的物理地址以许多地址行的形式被传递。 它单独地去处理来自于内存控制器的内存位置将完全不切实际:4G的RAM将需要 232 地址行。地址传递DRAM芯片的这种方式首先必须被路由器解析。一个路由器的N多地址行将有2N 输出行。这些输出行能被使用到选择内存单元。使用这个直接方法对于小容量芯片不再是个大问题 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

But if the number of cells grows this approach is not suitable anymore. A chip with 1Gbit {I hate those SI prefixes. For me a giga-bit will always be 230 and not 109 bits.} capacity would need 30 address lines and 230select lines. The size of a demultiplexer increases exponentially with the number of input lines when speed is not to be sacrificed. A demultiplexer for 30 address lines needs a whole lot of chip real estate in addition to the complexity (size and time) of the demultiplexer. Even more importantly, transmitting 30 impulses on the address lines synchronously is much harder than transmitting “only” 15 impulses. Fewer lines have to be laid out at exactly the same length or timed appropriately. {Modern DRAM types like DDR3 can automatically adjust the timing but there is a limit as to what can be tolerated.}

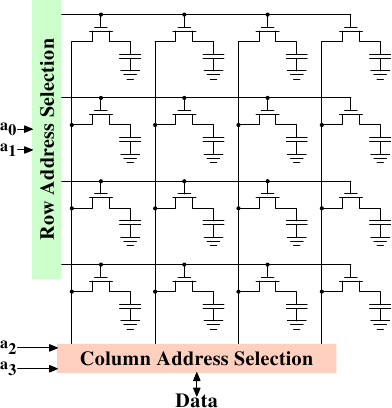

Figure 2.7 shows a DRAM chip at a very high level. The DRAM cells are organized in rows and columns. They could all be aligned in one row but then the DRAM chip would need a huge demultiplexer. With the array approach the design can get by with one demultiplexer and one multiplexer of half the size. {Multiplexers and demultiplexers are equivalent and the multiplexer here needs to work as a demultiplexer when writing. So we will drop the differentiation from now on.} This is a huge saving on all fronts. In the example the address linesa0anda1through the row address selection (RAS) demultiplexer select the address lines of a whole row of cells. When reading, the content of all cells is thusly made available to the column address selection (CAS) {The line over the name indicates that the signal is negated} multiplexer. Based on the address linesa2anda3the content of one column is then made available to the data pin of the DRAM chip. This happens many times in parallel on a number of DRAM chips to produce a total number of bits corresponding to the width of the data bus. |

译者信息

但如果许多的单元生成这种方法不在适合。一个1G的芯片容量(我反感那些SI前缀,对于我一个giga-bit将总是230 而不是109字节)将需要30地址行和230 选项行。一个路由器的大小及许多的输入行以指数方式递增当速度不被牺牲时。一个30地址行路由器需要一大堆芯片的真实身份另外路由器也就复杂起来了。更重要的是,传递30脉冲在地址行同步要比仅仅传递15脉冲困难的多。较少列能精确布局相同长度或恰当的时机(现代DRAM类型像DDR3能自动调整时序但这个限制能让他什么都能忍受)

图2.7展示了一个很高级别的一个DRAM芯片,DRAM被组织在行和列里。他们能在一行中对奇但DRAM芯片需要一个大的路由器。通过阵列方法设计能被一个路由器和一个半的multiplexer获得{多路复用器(multiplexer)和路由器是一样的,这的multiplexer需要以路由器身份工作当写数据时候。那么从现在开始我们开始讨论其区别.}这在所有方面会是一个大的存储。例如地址linesa0和a1通过行地址选择路由器来选择整个行的芯片的地址列,当读的时候,所有的芯片目录能使其纵列选择路由器可用,依据地址linesa2和a3一个纵列的目录用于数据DRAM芯片的接口类型。这发生了许多次在许多DRAM芯片产生一个总记录数的字节匹配给一个宽范围的数据总线。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

For writing, the new cell value is put on the data bus and, when the cell is selected using theRASandCAS, it is stored in the cell. A pretty straightforward design. There are in reality — obviously — many more complications. There need to be specifications for how much delay there is after the signal before the data will be available on the data bus for reading. The capacitors do not unload instantaneously, as described in the previous section. The signal from the cells is so weak that it needs to be amplified. For writing it must be specified how long the data must be available on the bus after theRASandCASis done to successfully store the new value in the cell (again, capacitors do not fill or drain instantaneously). These timing constants are crucial for the performance of the DRAM chip. We will talk about this in the next section. A secondary scalability problem is that having 30 address lines connected to every RAM chip is not feasible either. Pins of a chip are a precious resources. It is “bad” enough that the data must be transferred as much as possible in parallel (e.g., in 64 bit batches). The memory controller must be able to address each RAM module (collection of RAM chips). If parallel access to multiple RAM modules is required for performance reasons and each RAM module requires its own set of 30 or more address lines, then the memory controller needs to have, for 8 RAM modules, a whopping 240+ pins only for the address handling. |

译者信息

对于写操作,内存单元的数据新值被放到了数据总线,当使用RAS和CAS方式选中内存单元时,数据是存放在内存单元内的。这是一个相当直观的设计,在现实中——很显然——会复杂得多,对于读,需要规范从发出信号到数据在数据总线上变得可读的时延。电容不会像前面章节里面描述的那样立刻自动放电,从内存单元发出的信号是如此这微弱以至于它需要被放大。对于写,必须规范从数据RAS和CAS操作完成后到数据成功的被写入内存单元的时延(当然,电容不会立刻自动充电和放电)。这些时间常量对于DRAM芯片的性能是至关重要的,我们将在下章讨论它。 另一个关于伸缩性的问题是,用30根地址线连接到每一个RAM芯片是行不通的。芯片的针脚是非常珍贵的资源,以至数据必须能并行传输就并行传输(比如:64位为一组)。内存控制器必须有能力解析每一个RAM模块(RAM芯片集合)。如果因为性能的原因要求并发行访问多个RAM模块并且每个RAM模块需要自己独占的30或多个地址线,那么对于8个RAM模块,仅仅是解析地址,内存控制器就需要240+之多的针脚。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

To counter these secondary scalability problems DRAM chips have, for a long time, multiplexed the address itself. That means the address is transferred in two parts. The first part consisting of address bitsa0anda1in the example in Figure 2.7) select the row. This selection remains active until revoked. Then the second part, address bitsa2anda3, select the column. The crucial difference is that only two external address lines are needed. A few more lines are needed to indicate when theRASandCASsignals are available but this is a small price to pay for cutting the number of address lines in half. This address multiplexing brings its own set of problems, though. We will discuss them in Section 2.2. |

译者信息 在很长一段时间里,地址线被复用以解决DRAM芯片的这些次要的可扩展性问题。这意味着地址被转换成两部分。第一部分由地址位a0和a1选择行(如图2.7)。这个选择保持有效直到撤销。然后是第二部分,地址位a2和a3选择列。关键差别在于:只需要两根外部地址线。需要一些很少的线指明RAS和CAS信号有效,但是把地址线的数目减半所付出的代价更小。可是地址复用也带来自身的一些问题。我们将在2.2章中提到。 在很长一段时间里,地址线被复用以解决DRAM芯片的这些次要的可扩展性问题。这意味着地址被转换成两部分。第一部分由地址位a0和a1选择行(如图2.7)。这个选择保持有效直到撤销。然后是第二部分,地址位a2和a3选择列。关键差别在于:只需要两根外部地址线。需要一些很少的线指明RAS和CAS信号有效,但是把地址线的数目减半所付出的代价更小。可是地址复用也带来自身的一些问题。我们将在2.2章中提到。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

2.1.4 Conclusions Do not worry if the details in this section are a bit overwhelming. The important things to take away from this section are:

The following section will go into more details about the actual process of accessing DRAM memory. We are not going into more details of accessing SRAM, which is usually directly addressed. This happens for speed and because the SRAM memory is limited in size. SRAM is currently used in CPU caches and on-die where the connections are small and fully under control of the CPU designer. CPU caches are a topic which we discuss later but all we need to know is that SRAM cells have a certain maximum speed which depends on the effort spent on the SRAM. The speed can vary from only slightly slower than the CPU core to one or two orders of magnitude slower. |

译者信息

2.1.4 总结 如果这章节的内容有些难以应付,不用担心。纵观这章节的重点,有:

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

2.2 DRAM Access Technical DetailsIn the section introducing DRAM we saw that DRAM chips multiplex the addresses in order to save resources. We also saw that accessing DRAM cells takes time since the capacitors in those cells do not discharge instantaneously to produce a stable signal; we also saw that DRAM cells must be refreshed. Now it is time to put this all together and see how all these factors determine how the DRAM access has to happen. We will concentrate on current technology; we will not discuss asynchronous DRAM and its variants as they are simply not relevant anymore. Readers interested in this topic are referred to [highperfdram] and [arstechtwo]. We will also not talk about Rambus DRAM (RDRAM) even though the technology is not obsolete. It is just not widely used for system memory. We will concentrate exclusively on Synchronous DRAM (SDRAM) and its successors Double Data Rate DRAM (DDR). |

译者信息

2.2 DRAM访问细节在上文介绍DRAM的时候,我们已经看到DRAM芯片为了节约资源,对地址进行了复用。而且,访问DRAM单元是需要一些时间的,因为电容器的放电并不是瞬时的。此外,我们还看到,DRAM需要不停地刷新。在这一节里,我们将把这些因素拼合起来,看看它们是如何决定DRAM的访问过程。 我们将主要关注在当前的科技上,不会再去讨论异步DRAM以及它的各种变体。如果对它感兴趣,可以去参考[highperfdram]及[arstechtwo]。我们也不会讨论Rambus DRAM(RDRAM),虽然它并不过时,但在系统内存领域应用不广。我们将主要介绍同步DRAM(SDRAM)及其后继者双倍速DRAM(DDR)。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Synchronous DRAM, as the name suggests, works relative to a time source. The memory controller provides a clock, the frequency of which determines the speed of the Front Side Bus (FSB) — the memory controller interface used by the DRAM chips. As of this writing, frequencies of 800MHz, 1,066MHz, or even 1,333MHz are available with higher frequencies (1,600MHz) being announced for the next generation. This does not mean the frequency used on the bus is actually this high. Instead, today's buses are double- or quad-pumped, meaning that data is transported two or four times per cycle. Higher numbers sell so the manufacturers like to advertise a quad-pumped 200MHz bus as an “effective” 800MHz bus. For SDRAM today each data transfer consists of 64 bits — 8 bytes. The transfer rate of the FSB is therefore 8 bytes multiplied by the effective bus frequency (6.4GB/s for the quad-pumped 200MHz bus). That sounds a lot but it is the burst speed, the maximum speed which will never be surpassed. As we will see now the protocol for talking to the RAM modules has a lot of downtime when no data can be transmitted. It is exactly this downtime which we must understand and minimize to achieve the best performance. |

译者信息

同步DRAM,顾名思义,是参照一个时间源工作的。由内存控制器提供一个时钟,时钟的频率决定了前端总线(FSB)的速度。FSB是内存控制器提供给DRAM芯片的接口。在我写作本文的时候,FSB已经达到800MHz、1066MHz,甚至1333MHz,并且下一代的1600MHz也已经宣布。但这并不表示时钟频率有这么高。实际上,目前的总线都是双倍或四倍传输的,每个周期传输2次或4次数据。报的越高,卖的越好,所以这些厂商们喜欢把四倍传输的200MHz总线宣传为“有效的”800MHz总线。 以今天的SDRAM为例,每次数据传输包含64位,即8字节。所以FSB的传输速率应该是有效总线频率乘于8字节(对于4倍传输200MHz总线而言,传输速率为6.4GB/s)。听起来很高,但要知道这只是峰值速率,实际上无法达到的最高速率。我们将会看到,与RAM模块交流的协议有大量时间是处于非工作状态,不进行数据传输。我们必须对这些非工作时间有所了解,并尽量缩短它们,才能获得最佳的性能。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

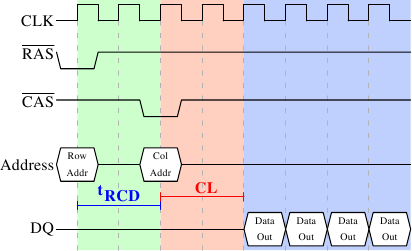

2.2.1 Read Access Protocol

Figure 2.8 shows the activity on some of the connectors of a DRAM module which happens in three differently colored phases. As usual, time flows from left to right. A lot of details are left out. Here we only talk about the bus clock,RASandCASsignals, and the address and data buses. A read cycle begins with the memory controller making the row address available on the address bus and lowering theRASsignal. All signals are read on the rising edge of the clock (CLK) so it does not matter if the signal is not completely square as long as it is stable at the time it is read. Setting the row address causes the RAM chip to start latching the addressed row. TheCASsignal can be sent aftertRCD(RAS-to-CASDelay) clock cycles. The column address is then transmitted by making it available on the address bus and lowering theCASline. Here we can see how the two parts of the address (more or less halves, nothing else makes sense) can be transmitted over the same address bus. |

译者信息

2.2.1 读访问协议

图2.8展示了某个DRAM模块一些连接器上的活动,可分为三个阶段,图上以不同颜色表示。按惯例,时间为从左向右流逝。这里忽略了许多细节,我们只关注时钟频率、RAS与CAS信号、地址总线和数据总线。首先,内存控制器将行地址放在地址总线上,并降低RAS信号,读周期开始。所有信号都在时钟(CLK)的上升沿读取,因此,只要信号在读取的时间点上保持稳定,就算不是标准的方波也没有关系。设置行地址会促使RAM芯片锁住指定的行。 CAS信号在tRCD(RAS到CAS时延)个时钟周期后发出。内存控制器将列地址放在地址总线上,降低CAS线。这里我们可以看到,地址的两个组成部分是怎么通过同一条总线传输的。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Now the addressing is complete and the data can be transmitted. The RAM chip needs some time to prepare for this. The delay is usually calledCASLatency (CL). In Figure 2.8 theCASlatency is 2. It can be higher or lower, depending on the quality of the memory controller, motherboard, and DRAM module. The latency can also have half values. With CL=2.5 the first data would be available at the first falling flank in the blue area. With all this preparation to get to the data it would be wasteful to only transfer one data word. This is why DRAM modules allow the memory controller to specify how much data is to be transmitted. Often the choice is between 2, 4, or 8 words. This allows filling entire lines in the caches without a newRAS/CASsequence. It is also possible for the memory controller to send a newCASsignal without resetting the row selection. In this way, consecutive memory addresses can be read from or written to significantly faster because theRASsignal does not have to be sent and the row does not have to be deactivated (see below). Keeping the row “open” is something the memory controller has to decide. Speculatively leaving it open all the time has disadvantages with real-world applications (see [highperfdram]). Sending newCASsignals is only subject to the Command Rate of the RAM module (usually specified as Tx, where x is a value like 1 or 2; it will be 1 for high-performance DRAM modules which accept new commands every cycle). In this example the SDRAM spits out one word per cycle. This is what the first generation does. DDR is able to transmit two words per cycle. This cuts down on the transfer time but does not change the latency. In principle, DDR2 works the same although in practice it looks different. There is no need to go into the details here. It is sufficient to note that DDR2 can be made faster, cheaper, more reliable, and is more energy efficient (see [ddrtwo] for more information). |

译者信息

至此,寻址结束,是时候传输数据了。但RAM芯片任然需要一些准备时间,这个时间称为CAS时延(CL)。在图2.8中CL为2。这个值可大可小,它取决于内存控制器、主板和DRAM模块的质量。CL还可能是半周期。假设CL为2.5,那么数据将在蓝色区域内的第一个下降沿准备就绪。 既然数据的传输需要这么多的准备工作,仅仅传输一个字显然是太浪费了。因此,DRAM模块允许内存控制指定本次传输多少数据。可以是2、4或8个字。这样,就可以一次填满高速缓存的整条线,而不需要额外的RAS/CAS序列。另外,内存控制器还可以在不重置行选择的前提下发送新的CAS信号。这样,读取或写入连续的地址就可以变得非常快,因为不需要发送RAS信号,也不需要把行置为非激活状态(见下文)。是否要将行保持为“打开”状态是内存控制器判断的事情。让它一直保持打开的话,对真正的应用会有不好的影响(参见[highperfdram])。CAS信号的发送仅与RAM模块的命令速率(Command Rate)有关(常常记为Tx,其中x为1或2,高性能的DRAM模块一般为1,表示在每个周期都可以接收新命令)。 在上图中,SDRAM的每个周期输出一个字的数据。这是第一代的SDRAM。而DDR可以在一个周期中输出两个字。这种做法可以减少传输时间,但无法降低时延。DDR2尽管看上去不同,但在本质上也是相同的做法。对于DDR2,不需要再深入介绍了,我们只需要知道DDR2更快、更便宜、更可靠、更节能(参见[ddrtwo])就足够了。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

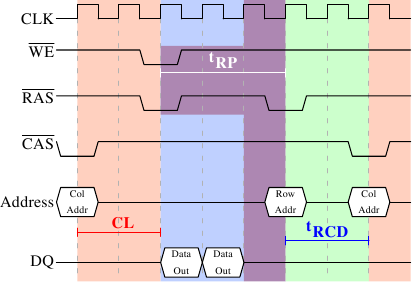

2.2.2 Precharge and Activation Figure 2.8 does not cover the whole cycle. It only shows parts of the full cycle of accessing DRAM. Before a newRASsignal can be sent the currently latched row must be deactivated and the new row must be precharged. We can concentrate here on the case where this is done with an explicit command. There are improvements to the protocol which, in some situations, allows this extra step to be avoided. The delays introduced by precharging still affect the operation, though.

Figure 2.9 shows the activity starting from oneCASsignal to theCASsignal for another row. The data requested with the firstCASsignal is available as before, after CL cycles. In the example two words are requested which, on a simple SDRAM, takes two cycles to transmit. Alternatively, imagine four words on a DDR chip. |

译者信息

2.2.2 预充电与激活图2.8并不完整,它只画出了访问DRAM的完整循环的一部分。在发送RAS信号之前,必须先把当前锁住的行置为非激活状态,并对新行进行预充电。在这里,我们主要讨论由于显式发送指令而触发以上行为的情况。协议本身作了一些改进,在某些情况下是可以省略这个步骤的,但预充电带来的时延还是会影响整个操作。

图2.9显示的是两次CAS信号的时序图。第一次的数据在CL周期后准备就绪。图中的例子里,是在SDRAM上,用两个周期传输了两个字的数据。如果换成DDR的话,则可以传输4个字。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Even on DRAM modules with a command rate of one the precharge command cannot be issued right away. It is necessary to wait as long as it takes to transmit the data. In this case it takes two cycles. This happens to be the same as CL but that is just a coincidence. The precharge signal has no dedicated line; instead, some implementations issue it by lowering the Write Enable (WE) andRASline simultaneously. This combination has no useful meaning by itself (see [micronddr] for encoding details). Once the precharge command is issued it takestRP(Row Precharge time) cycles until the row can be selected. In Figure 2.9 much of the time (indicated by the purplish color) overlaps with the memory transfer (light blue). This is good! ButtRPis larger than the transfer time and so the nextRASsignal is stalled for one cycle. |

译者信息

即使是在一个命令速率为1的DRAM模块上,也无法立即发出预充电命令,而要等数据传输完成。在上图中,即为两个周期。刚好与CL相同,但只是巧合而已。预充电信号并没有专用线,某些实现是用同时降低写使能(WE)线和RAS线的方式来触发。这一组合方式本身没有特殊的意义(参见[micronddr])。 发出预充电信命令后,还需等待tRP(行预充电时间)个周期之后才能使行被选中。在图2.9中,这个时间(紫色部分)大部分与内存传输的时间(淡蓝色部分)重合。不错。但tRP大于传输时间,因此下一个RAS信号只能等待一个周期。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

If we were to continue the timeline in the diagram we would find that the next data transfer happens 5 cycles after the previous one stops. This means the data bus is only in use two cycles out of seven. Multiply this with the FSB speed and the theoretical 6.4GB/s for a 800MHz bus become 1.8GB/s. That is bad and must be avoided. The techniques described in Section 6 help to raise this number. But the programmer usually has to do her share. There is one more timing value for a SDRAM module which we have not discussed. In Figure 2.9 the precharge command was only limited by the data transfer time. Another constraint is that an SDRAM module needs time after aRASsignal before it can precharge another row (denoted astRAS). This number is usually pretty high, in the order of two or three times thetRPvalue. This is a problem if, after aRASsignal, only oneCASsignal follows and the data transfer is finished in a few cycles. Assume that in Figure 2.9 the initialCASsignal was preceded directly by aRASsignal and thattRASis 8 cycles. Then the precharge command would have to be delayed by one additional cycle since the sum oftRCD, CL, andtRP(since it is larger than the data transfer time) is only 7 cycles. |

译者信息

如果我们补充完整上图中的时间线,最后会发现下一次数据传输发生在前一次的5个周期之后。这意味着,数据总线的7个周期中只有2个周期才是真正在用的。再用它乘于FSB速度,结果就是,800MHz总线的理论速率6.4GB/s降到了1.8GB/s。真是太糟了。第6节将介绍一些技术,可以帮助我们提高总线有效速率。程序员们也需要尽自己的努力。 SDRAM还有一些定时值,我们并没有谈到。在图2.9中,预充电命令仅受制于数据传输时间。除此之外,SDRAM模块在RAS信号之后,需要经过一段时间,才能进行预充电(记为tRAS)。它的值很大,一般达到tRP的2到3倍。如果在某个RAS信号之后,只有一个CAS信号,而且数据只传输很少几个周期,那么就有问题了。假设在图2.9中,第一个CAS信号是直接跟在一个RAS信号后免的,而tRAS为8个周期。那么预充电命令还需要被推迟一个周期,因为tRCD、CL和tRP加起来才7个周期。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

DDR modules are often described using a special notation: w-x-y-z-T. For instance: 2-3-2-8-T1. This means:

There are numerous other timing constants which affect the way commands can be issued and are handled. Those five constants are in practice sufficient to determine the performance of the module, though. It is sometimes useful to know this information for the computers in use to be able to interpret certain measurements. It is definitely useful to know these details when buying computers since they, along with the FSB and SDRAM module speed, are among the most important factors determining a computer's speed. The very adventurous reader could also try to tweak a system. Sometimes the BIOS allows changing some or all these values. SDRAM modules have programmable registers where these values can be set. Usually the BIOS picks the best default value. If the quality of the RAM module is high it might be possible to reduce the one or the other latency without affecting the stability of the computer. Numerous overclocking websites all around the Internet provide ample of documentation for doing this. Do it at your own risk, though and do not say you have not been warned. |

译者信息

DDR模块往往用w-z-y-z-T来表示。例如,2-3-2-8-T1,意思是: w 2 CAS时延(CL) 当然,除以上的参数外,还有许多其它参数影响命令的发送与处理。但以上5个参数已经足以确定模块的性能。 在解读计算机性能参数时,这些信息可能会派上用场。而在购买计算机时,这些信息就更有用了,因为它们与FSB/SDRAM速度一起,都是决定计算机速度的关键因素。 喜欢冒险的读者们还可以利用它们来调优系统。有些计算机的BIOS可以让你修改这些参数。SDRAM模块有一些可编程寄存器,可供设置参数。BIOS一般会挑选最佳值。如果RAM模块的质量足够好,我们可以在保持系统稳定的前提下将减小以上某个时延参数。互联网上有大量超频网站提供了相关的文档。不过,这是有风险的,需要大家自己承担,可别怪我没有事先提醒哟。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

2.2.3 Recharging A mostly-overlooked topic when it comes to DRAM access is recharging. As explained in Section 2.1.2, DRAM cells must constantly be refreshed. This does not happen completely transparently for the rest of the system. At times when a row {Rows are the granularity this happens with despite what [highperfdram] and other literature says (see [micronddr]).} is recharged no access is possible. The study in [highperfdram] found that “[s]urprisingly, DRAM refresh organization can affect performance dramatically”. Each DRAM cell must be refreshed every 64ms according to the JEDEC specification. If a DRAM array has 8,192 rows this means the memory controller has to issue a refresh command on average every 7.8125µs (refresh commands can be queued so in practice the maximum interval between two requests can be higher). It is the memory controller's responsibility to schedule the refresh commands. The DRAM module keeps track of the address of the last refreshed row and automatically increases the address counter for each new request. There is really not much the programmer can do about the refresh and the points in time when the commands are issued. But it is important to keep this part to the DRAM life cycle in mind when interpreting measurements. If a critical word has to be retrieved from a row which currently is being refreshed the processor could be stalled for quite a long time. How long each refresh takes depends on the DRAM module. |

译者信息

2.2.3 重充电谈到DRAM的访问时,重充电是常常被忽略的一个主题。在2.1.2中曾经介绍,DRAM必须保持刷新。……行在充电时是无法访问的。[highperfdram]的研究发现,“令人吃惊,DRAM刷新对性能有着巨大的影响”。 根据JEDEC规范,DRAM单元必须保持每64ms刷新一次。对于8192行的DRAM,这意味着内存控制器平均每7.8125µs就需要发出一个刷新命令(在实际情况下,由于刷新命令可以纳入队列,因此这个时间间隔可以更大一些)。刷新命令的调度由内存控制器负责。DRAM模块会记录上一次刷新行的地址,然后在下次刷新请求时自动对这个地址进行递增。 对于刷新及发出刷新命令的时间点,程序员无法施加影响。但我们在解读性能参数时有必要知道,它也是DRAM生命周期的一个部分。如果系统需要读取某个重要的字,而刚好它所在的行正在刷新,那么处理器将会被延迟很长一段时间。刷新的具体耗时取决于DRAM模块本身。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

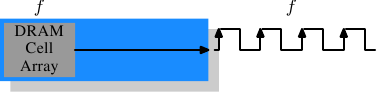

2.2.4 Memory Types It is worth spending some time on the current and soon-to-be current memory types in use. We will start with SDR (Single Data Rate) SDRAMs since they are the basis of the DDR (Double Data Rate) SDRAMs. SDRs were pretty simple. The memory cells and the data transfer rate were identical.

In Figure 2.10 the DRAM cell array can output the memory content at the same rate it can be transported over the memory bus. If the DRAM cell array can operate at 100MHz, the data transfer rate of the bus is thus 100Mb/s. The frequency f for all components is the same. Increasing the throughput of the DRAM chip is expensive since the energy consumption rises with the frequency. With a huge number of array cells this is prohibitively expensive. {Power = Dynamic Capacity × Voltage2 × Frequency.} In reality it is even more of a problem since increasing the frequency usually also requires increasing the voltage to maintain stability of the system. DDR SDRAM (called DDR1 retroactively) manages to improve the throughput without increasing any of the involved frequencies.

|

译者信息

2.2.4 内存类型我们有必要花一些时间来了解一下目前流行的内存,以及那些即将流行的内存。首先从SDR(单倍速)SDRAM开始,因为它们是DDR(双倍速)SDRAM的基础。SDR非常简单,内存单元和数据传输率是相等的。

在图2.10中,DRAM单元阵列能以等同于内存总线的速率输出内容。假设DRAM单元阵列工作在100MHz上,那么总线的数据传输率可以达到100Mb/s。所有组件的频率f保持相同。由于提高频率会导致耗电量增加,所以提高吞吐量需要付出很高的的代价。如果是很大规模的内存阵列,代价会非常巨大。{功率 = 动态电容 x 电压2 x 频率}。而且,提高频率还需要在保持系统稳定的情况下提高电压,这更是一个问题。因此,就有了DDR SDRAM(现在叫DDR1),它可以在不提高频率的前提下提高吞吐量。

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| The difference between SDR and DDR1 is, as can be seen in Figure 2.11 and guessed from the name, that twice the amount of data is transported per cycle. I.e., the DDR1 chip transports data on the rising and falling edge. This is sometimes called a “double-pumped” bus. To make this possible without increasing the frequency of the cell array a buffer has to be introduced. This buffer holds two bits per data line. This in turn requires that, in the cell array in Figure 2.7, the data bus consists of two lines. Implementing this is trivial: one only has the use the same column address for two DRAM cells and access them in parallel. The changes to the cell array to implement this are also minimal.

The SDR DRAMs were known simply by their frequency (e.g., PC100 for 100MHz SDR). To make DDR1 DRAM sound better the marketers had to come up with a new scheme since the frequency did not change. They came with a name which contains the transfer rate in bytes a DDR module (they have 64-bit busses) can sustain: 100MHz × 64bit × 2 = 1,600MB/s |

译者信息

我们从图2.11上可以看出DDR1与SDR的不同之处,也可以从DDR1的名字里猜到那么几分,DDR1的每个周期可以传输两倍的数据,它的上升沿和下降沿都传输数据。有时又被称为“双泵(double-pumped)”总线。为了在不提升频率的前提下实现双倍传输,DDR引入了一个缓冲区。缓冲区的每条数据线都持有两位。它要求内存单元阵列的数据总线包含两条线。实现的方式很简单,用同一个列地址同时访问两个DRAM单元。对单元阵列的修改也很小。 SDR DRAM是以频率来命名的(例如,对应于100MHz的称为PC100)。为了让DDR1听上去更好听,营销人员们不得不想了一种新的命名方案。这种新方案中含有DDR模块可支持的传输速率(DDR拥有64位总线): 100MHz x 64位 x 2 = 1600MB/s |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Hence a DDR module with 100MHz frequency is called PC1600. With 1600 > 100 all marketing requirements are fulfilled; it sounds much better although the improvement is really only a factor of two. { I will take the factor of two but I do not have to like the inflated numbers.}

To get even more out of the memory technology DDR2 includes a bit more innovation. The most obvious change that can be seen in Figure 2.12 is the doubling of the frequency of the bus. Doubling the frequency means doubling the bandwidth. Since this doubling of the frequency is not economical for the cell array it is now required that the I/O buffer gets four bits in each clock cycle which it then can send on the bus. This means the changes to the DDR2 modules consist of making only the I/O buffer component of the DIMM capable of running at higher speeds. This is certainly possible and will not require measurably more energy, it is just one tiny component and not the whole module. The names the marketers came up with for DDR2 are similar to the DDR1 names only in the computation of the value the factor of two is replaced by four (we now have a quad-pumped bus). Figure 2.13 shows the names of the modules in use today.

|

译者信息

于是,100MHz频率的DDR模块就被称为PC1600。由于1600 > 100,营销方面的需求得到了满足,听起来非常棒,但实际上仅仅只是提升了两倍而已。{我接受两倍这个事实,但不喜欢类似的数字膨胀戏法。}

为了更进一步,DDR2有了更多的创新。在图2.12中,最明显的变化是,总线的频率加倍了。频率的加倍意味着带宽的加倍。如果对单元阵列的频率加倍,显然是不经济的,因此DDR2要求I/O缓冲区在每个时钟周期读取4位。也就是说,DDR2的变化仅在于使I/O缓冲区运行在更高的速度上。这是可行的,而且耗电也不会显著增加。DDR2的命名与DDR1相仿,只是将因子2替换成4(四泵总线)。图2.13显示了目前常用的一些模块的名称。

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| There is one more twist to the naming. The FSB speed used by CPU, motherboard, and DRAM module is specified by using the effective frequency. I.e., it factors in the transmission on both flanks of the clock cycle and thereby inflates the number. So, a 133MHz module with a 266MHz bus has an FSB “frequency” of 533MHz.

The specification for DDR3 (the real one, not the fake GDDR3 used in graphics cards) calls for more changes along the lines of the transition to DDR2. The voltage will be reduced from 1.8V for DDR2 to 1.5V for DDR3. Since the power consumption equation is calculated using the square of the voltage this alone brings a 30% improvement. Add to this a reduction in die size plus other electrical advances and DDR3 can manage, at the same frequency, to get by with half the power consumption. Alternatively, with higher frequencies, the same power envelope can be hit. Or with double the capacity the same heat emission can be achieved. |

译者信息

在命名方面还有一个拧巴的地方。FSB速度是用有效频率来标记的,即把上升、下降沿均传输数据的因素考虑进去,因此数字被撑大了。所以,拥有266MHz总线的133MHz模块有着533MHz的FSB“频率”。 DDR3要求更多的改变(这里指真正的DDR3,而不是图形卡中假冒的GDDR3)。电压从1.8V下降到1.5V。由于耗电是与电压的平方成正比,因此可以节约30%的电力。加上管芯(die)的缩小和电气方面的其它进展,DDR3可以在保持相同频率的情况下,降低一半的电力消耗。或者,在保持相同耗电的情况下,达到更高的频率。又或者,在保持相同热量排放的情况下,实现容量的翻番。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

The cell array of DDR3 modules will run at a quarter of the speed of the external bus which requires an 8 bit I/O buffer, up from 4 bits for DDR2. See Figure 2.14 for the schematics.

Initially DDR3 modules will likely have slightly higherCASlatencies just because the DDR2 technology is more mature. This would cause DDR3 to be useful only at frequencies which are higher than those which can be achieved with DDR2, and, even then, mostly when bandwidth is more important than latency. There is already talk about 1.3V modules which can achieve the sameCASlatency as DDR2. In any case, the possibility of achieving higher speeds because of faster buses will outweigh the increased latency. |

译者信息

DDR3模块的单元阵列将运行在内部总线的四分之一速度上,DDR3的I/O缓冲区从DDR2的4位提升到8位。见图2.14。

一开始,DDR3可能会有较高的CAS时延,因为DDR2的技术相比之下更为成熟。由于这个原因,DDR3可能只会用于DDR2无法达到的高频率下,而且带宽比时延更重要的场景。此前,已经有讨论指出,1.3V的DDR3可以达到与DDR2相同的CAS时延。无论如何,更高速度带来的价值都会超过时延增加带来的影响。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

One possible problem with DDR3 is that, for 1,600Mb/s transfer rate or higher, the number of modules per channel may be reduced to just one. In earlier versions this requirement held for all frequencies, so one can hope that the requirement will at some point be lifted for all frequencies. Otherwise the capacity of systems will be severely limited. Figure 2.15 shows the names of the expected DDR3 modules. JEDEC agreed so far on the first four types. Given that Intel's 45nm processors have an FSB speed of 1,600Mb/s, the 1,866Mb/s is needed for the overclocking market. We will likely see more of this towards the end of the DDR3 lifecycle.

|

译者信息

DDR3可能会有一个问题,即在1600Mb/s或更高速率下,每个通道的模块数可能会限制为1。在早期版本中,这一要求是针对所有频率的。我们希望这个要求可以提高一些,否则系统容量将会受到严重的限制。 图2.15显示了我们预计中各DDR3模块的名称。JEDEC目前同意了前四种。由于Intel的45nm处理器是1600Mb/s的FSB,1866Mb/s可以用于超频市场。随着DDR3的发展,可能会有更多类型加入。

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| All DDR memory has one problem: the increased bus frequency makes it hard to create parallel data busses. A DDR2 module has 240 pins. All connections to data and address pins must be routed so that they have approximately the same length. Even more of a problem is that, if more than one DDR module is to be daisy-chained on the same bus, the signals get more and more distorted for each additional module. The DDR2 specification allow only two modules per bus (aka channel), the DDR3 specification only one module for high frequencies. With 240 pins per channel a single Northbridge cannot reasonably drive more than two channels. The alternative is to have external memory controllers (as in Figure 2.2) but this is expensive.

What this means is that commodity motherboards are restricted to hold at most four DDR2 or DDR3 modules. This restriction severely limits the amount of memory a system can have. Even old 32-bit IA-32 processors can handle 64GB of RAM and memory demand even for home use is growing, so something has to be done. |

译者信息

所有的DDR内存都有一个问题:不断增加的频率使得建立并行数据总线变得十分困难。一个DDR2模块有240根引脚。所有到地址和数据引脚的连线必须被布置得差不多一样长。更大的问题是,如果多于一个DDR模块通过菊花链连接在同一个总线上,每个模块所接收到的信号随着模块的增加会变得越来越扭曲。DDR2规范允许每条总线(又称通道)连接最多两个模块,DDR3在高频率下只允许每个通道连接一个模块。每条总线多达240根引脚使得单个北桥无法以合理的方式驱动两个通道。替代方案是增加外部内存控制器(如图2.2),但这会提高成本。 这意味着商品主板所搭载的DDR2或DDR3模块数将被限制在最多四条,这严重限制了系统的最大内存容量。即使是老旧的32位IA-32处理器也可以使用64GB内存。即使是家庭对内存的需求也在不断增长,所以,某些事必须开始做了。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

One answer is to add memory controllers into each processor as explained in Section 2. AMD does it with the Opteron line and Intel will do it with their CSI technology. This will help as long as the reasonable amount of memory a processor is able to use can be connected to a single processor. In some situations this is not the case and this setup will introduce a NUMA architecture and its negative effects. For some situations another solution is needed. Intel's answer to this problem for big server machines, at least for the next years, is called Fully Buffered DRAM (FB-DRAM). The FB-DRAM modules use the same components as today's DDR2 modules which makes them relatively cheap to produce. The difference is in the connection with the memory controller. Instead of a parallel data bus FB-DRAM utilizes a serial bus (Rambus DRAM had this back when, too, and SATA is the successor of PATA, as is PCI Express for PCI/AGP). The serial bus can be driven at a much higher frequency, reverting the negative impact of the serialization and even increasing the bandwidth. The main effects of using a serial bus are

|

译者信息

一种解法是,在处理器中加入内存控制器,我们在第2节中曾经介绍过。AMD的Opteron系列和Intel的CSI技术就是采用这种方法。只要我们能把处理器要求的内存连接到处理器上,这种解法就是有效的。如果不能,按照这种思路就会引入NUMA架构,当然同时也会引入它的缺点。而在有些情况下,我们需要其它解法。 Intel针对大型服务器方面的解法(至少在未来几年),是被称为全缓冲DRAM(FB-DRAM)的技术。FB-DRAM采用与DDR2相同的器件,因此造价低廉。不同之处在于它们与内存控制器的连接方式。FB-DRAM没有用并行总线,而用了串行总线(Rambus DRAM had this back when, too, 而SATA是PATA的继任者,就像PCI Express是PCI/AGP的继承人一样)。串行总线可以达到更高的频率,串行化的负面影响,甚至可以增加带宽。使用串行总线后

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| An FB-DRAM module has only 69 pins, compared with the 240 for DDR2. Daisy chaining FB-DRAM modules is much easier since the electrical effects of the bus can be handled much better. The FB-DRAM specification allows up to 8 DRAM modules per channel.

Compared with the connectivity requirements of a dual-channel Northbridge it is now possible to drive 6 channels of FB-DRAM with fewer pins: 2×240 pins versus 6×69 pins. The routing for each channel is much simpler which could also help reducing the cost of the motherboards. Fully duplex parallel busses are prohibitively expensive for the traditional DRAM modules, duplicating all those lines is too costly. With serial lines (even if they are differential, as FB-DRAM requires) this is not the case and so the serial bus is designed to be fully duplexed, which means, in some situations, that the bandwidth is theoretically doubled alone by this. But it is not the only place where parallelism is used for bandwidth increase. Since an FB-DRAM controller can run up to six channels at the same time the bandwidth can be increased even for systems with smaller amounts of RAM by using FB-DRAM. Where a DDR2 system with four modules has two channels, the same capacity can handled via four channels using an ordinary FB-DRAM controller. The actual bandwidth of the serial bus depends on the type of DDR2 (or DDR3) chips used on the FB-DRAM module. |

译者信息

FB-DRAM只有69个脚。通过菊花链方式连接多个FB-DRAM也很简单。FB-DRAM规范允许每个通道连接最多8个模块。 在对比下双通道北桥的连接性,采用FB-DRAM后,北桥可以驱动6个通道,而且脚数更少——6x69对比2x240。每个通道的布线也更为简单,有助于降低主板的成本。 全双工的并行总线过于昂贵。而换成串行线后,这不再是一个问题,因此串行总线按全双工来设计的,这也意味着,在某些情况下,仅靠这一特性,总线的理论带宽已经翻了一倍。还不止于此。由于FB-DRAM控制器可同时连接6个通道,因此可以利用它来增加某些小内存系统的带宽。对于一个双通道、4模块的DDR2系统,我们可以用一个普通FB-DRAM控制器,用4通道来实现相同的容量。串行总线的实际带宽取决于在FB-DRAM模块中所使用的DDR2(或DDR3)芯片的类型。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

We can summarize the advantages like this:

|

译者信息

我们可以像这样总结这些优势: DDR2 FB-DRAM |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Pins 240 69 Channels 2 6 DIMMs/Channel 2 8 Max Memory 16GB 192GB Throughput ~10GB/s ~40GB/s

There are a few drawbacks to FB-DRAMs if multiple DIMMs on one channel are used. The signal is delayed—albeit minimally—at each DIMM in the chain, which means the latency increases. But for the same amount of memory with the same frequency FB-DRAM can always be faster than DDR2 and DDR3 since only one DIMM per channel is needed; for large memory systems DDR simply has no answer using commodity components. |

译者信息

如果在单个通道上使用多个DIMM,会有一些问题。信号在每个DIMM上都会有延迟(尽管很小),也就是说,延迟是递增的。不过,如果在相同频率和相同容量上进行比较,FB-DRAM总是能快过DDR2及DDR3,因为FB-DRAM只需要在每个通道上使用一个DIMM即可。而如果说到大型内存系统,那么DDR更是没有商用组件的解决方案。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

2.2.5 Conclusions This section should have shown that accessing DRAM is not an arbitrarily fast process. At least not fast compared with the speed the processor is running and with which it can access registers and cache. It is important to keep in mind the differences between CPU and memory frequencies. An Intel Core 2 processor running at 2.933GHz and a 1.066GHz FSB have a clock ratio of 11:1 (note: the 1.066GHz bus is quad-pumped). Each stall of one cycle on the memory bus means a stall of 11 cycles for the processor. For most machines the actual DRAMs used are slower, thusly increasing the delay. Keep these numbers in mind when we are talking about stalls in the upcoming sections. The timing charts for the read command have shown that DRAM modules are capable of high sustained data rates. Entire DRAM rows could be transported without a single stall. The data bus could be kept occupied 100%. For DDR modules this means two 64-bit words transferred each cycle. With DDR2-800 modules and two channels this means a rate of 12.8GB/s. But, unless designed this way, DRAM access is not always sequential. Non-continuous memory regions are used which means precharging and newRASsignals are needed. This is when things slow down and when the DRAM modules need help. The sooner the precharging can happen and theRASsignal sent the smaller the penalty when the row is actually used. Hardware and software prefetching (see Section 6.3) can be used to create more overlap in the timing and reduce the stall. Prefetching also helps shift memory operations in time so that there is less contention at later times, right before the data is actually needed. This is a frequent problem when the data produced in one round has to be stored and the data required for the next round has to be read. By shifting the read in time, the write and read operations do not have to be issued at basically the same time. |

译者信息

2.2.5 结论通过本节,大家应该了解到访问DRAM的过程并不是一个快速的过程。至少与处理器的速度相比,或与处理器访问寄存器及缓存的速度相比,DRAM的访问不算快。大家还需要记住CPU和内存的频率是不同的。Intel Core 2处理器运行在2.933GHz,而1.066GHz FSB有11:1的时钟比率(注: 1.066GHz的总线为四泵总线)。那么,内存总线上延迟一个周期意味着处理器延迟11个周期。绝大多数机器使用的DRAM更慢,因此延迟更大。在后续的章节中,我们需要讨论延迟这个问题时,请把以上的数字记在心里。 前文中读命令的时序图表明,DRAM模块可以支持高速数据传输。每个完整行可以被毫无延迟地传输。数据总线可以100%被占。对DDR而言,意味着每个周期传输2个64位字。对于DDR2-800模块和双通道而言,意味着12.8GB/s的速率。 但是,除非是特殊设计,DRAM的访问并不总是串行的。访问不连续的内存区意味着需要预充电和RAS信号。于是,各种速度开始慢下来,DRAM模块急需帮助。预充电的时间越短,数据传输所受的惩罚越小。 硬件和软件的预取(参见第6.3节)可以在时序中制造更多的重叠区,降低延迟。预取还可以转移内存操作的时间,从而减少争用。我们常常遇到的问题是,在这一轮中生成的数据需要被存储,而下一轮的数据需要被读出来。通过转移读取的时间,读和写就不需要同时发出了。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

2.3 Other Main Memory UsersBeside the CPUs there are other system components which can access the main memory. High-performance cards such as network and mass-storage controllers cannot afford to pipe all the data they need or provide through the CPU. Instead, they read or write the data directly from/to the main memory (Direct Memory Access, DMA). In Figure 2.1 we can see that the cards can talk through the South- and Northbridge directly with the memory. Other buses, like USB, also require FSB bandwidth—even though they do not use DMA—since the Southbridge is connected to the Northbridge through the FSB, too. While DMA is certainly beneficial, it means that there is more competition for the FSB bandwidth. In times with high DMA traffic the CPU might stall more than usual while waiting for data from the main memory. There are ways around this given the right hardware. With an architecture as in Figure 2.3 one can make sure the computation uses memory on nodes which are not affected by DMA. It is also possible to attach a Southbridge to each node, equally distributing the load on the FSB of all the nodes. There are a myriad of possibilities. In Section 6 we will introduce techniques and programming interfaces which help achieving the improvements which are possible in software. |

译者信息

2.3 主存的其它用户除了CPU外,系统中还有其它一些组件也可以访问主存。高性能网卡或大规模存储控制器是无法承受通过CPU来传输数据的,它们一般直接对内存进行读写(直接内存访问,DMA)。在图2.1中可以看到,它们可以通过南桥和北桥直接访问内存。另外,其它总线,比如USB等也需要FSB带宽,即使它们并不使用DMA,但南桥仍要通过FSB连接到北桥。 DMA当然有很大的优点,但也意味着FSB带宽会有更多的竞争。在有大量DMA流量的情况下,CPU在访问内存时必然会有更大的延迟。我们可以用一些硬件来解决这个问题。例如,通过图2.3中的架构,我们可以挑选不受DMA影响的节点,让它们的内存为我们的计算服务。还可以在每个节点上连接一个南桥,将FSB的负荷均匀地分担到每个节点上。除此以外,还有许多其它方法。我们将在第6节中介绍一些技术和编程接口,它们能够帮助我们通过软件的方式改善这个问题。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Finally it should be mentioned that some cheap systems have graphics systems without separate, dedicated video RAM. Those systems use parts of the main memory as video RAM. Since access to the video RAM is frequent (for a 1024x768 display with 16 bpp at 60Hz we are talking 94MB/s) and system memory, unlike RAM on graphics cards, does not have two ports this can substantially influence the systems performance and especially the latency. It is best to ignore such systems when performance is a priority. They are more trouble than they are worth. People buying those machines know they will not get the best performance. Continue to: |

译者信息

最后,还需要提一下某些廉价系统,它们的图形系统没有专用的显存,而是采用主存的一部分作为显存。由于对显存的访问非常频繁(例如,对于1024x768、16bpp、60Hz的显示设置来说,需要95MB/s的数据速率),而主存并不像显卡上的显存,并没有两个端口,因此这种配置会对系统性能、尤其是时延造成一定的影响。如果大家对系统性能要求比较高,最好不要采用这种配置。这种系统带来的问题超过了本身的价值。人们在购买它们时已经做好了性能不佳的心理准备。 继续阅读: |

Part 2 CPU Cache

|

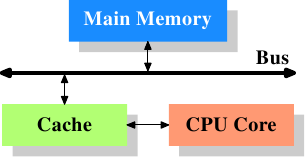

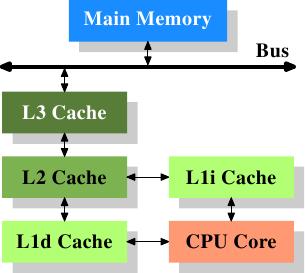

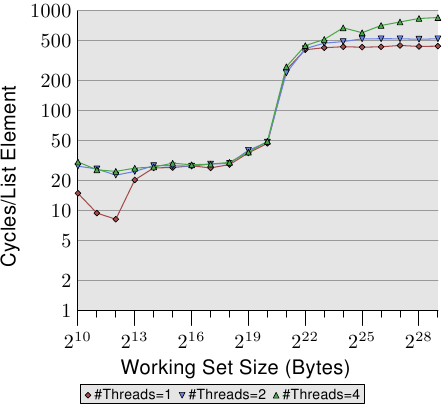

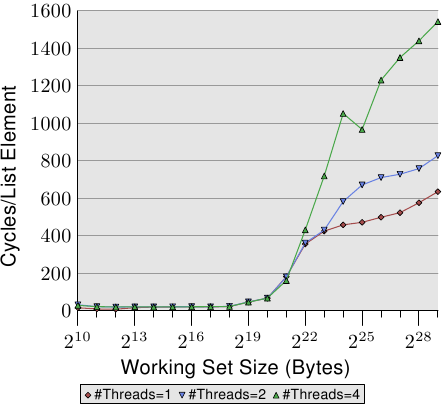

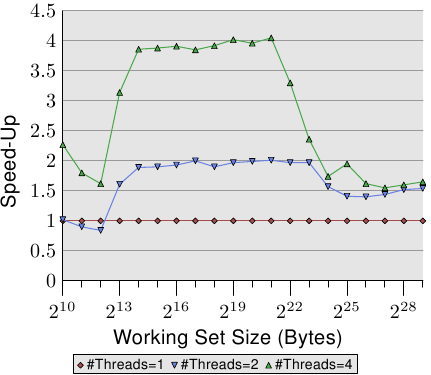

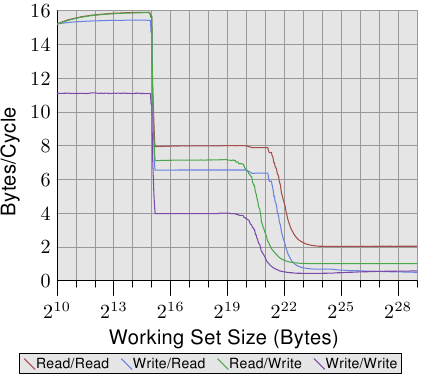

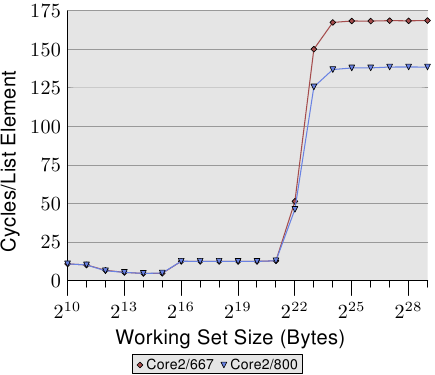

CPUs are today much more sophisticated than they were only 25 years ago. In those days, the frequency of the CPU core was at a level equivalent to that of the memory bus. Memory access was only a bit slower than register access. But this changed dramatically in the early 90s, when CPU designers increased the frequency of the CPU core but the frequency of the memory bus and the performance of RAM chips did not increase proportionally. This is not due to the fact that faster RAM could not be built, as explained in the previous section. It is possible but it is not economical. RAM as fast as current CPU cores is orders of magnitude more expensive than any dynamic RAM. |

译者信息 现在的CPU比25年前要精密得多了。在那个年代,CPU的频率与内存总线的频率基本在同一层面上。内存的访问速度仅比寄存器慢那么一点点。但是,这一局面在上世纪90年代被打破了。CPU的频率大大提升,但内存总线的频率与内存芯片的性能却没有得到成比例的提升。并不是因为造不出更快的内存,只是因为太贵了。内存如果要达到目前CPU那样的速度,那么它的造价恐怕要贵上好几个数量级。 现在的CPU比25年前要精密得多了。在那个年代,CPU的频率与内存总线的频率基本在同一层面上。内存的访问速度仅比寄存器慢那么一点点。但是,这一局面在上世纪90年代被打破了。CPU的频率大大提升,但内存总线的频率与内存芯片的性能却没有得到成比例的提升。并不是因为造不出更快的内存,只是因为太贵了。内存如果要达到目前CPU那样的速度,那么它的造价恐怕要贵上好几个数量级。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

If the choice is between a machine with very little, very fast RAM and a machine with a lot of relatively fast RAM, the second will always win given a working set size which exceeds the small RAM size and the cost of accessing secondary storage media such as hard drives. The problem here is the speed of secondary storage, usually hard disks, which must be used to hold the swapped out part of the working set. Accessing those disks is orders of magnitude slower than even DRAM access. Fortunately it does not have to be an all-or-nothing decision. A computer can have a small amount of high-speed SRAM in addition to the large amount of DRAM. One possible implementation would be to dedicate a certain area of the address space of the processor as containing the SRAM and the rest the DRAM. The task of the operating system would then be to optimally distribute data to make use of the SRAM. Basically, the SRAM serves in this situation as an extension of the register set of the processor. |

译者信息

如果有两个选项让你选择,一个是速度非常快、但容量很小的内存,一个是速度还算快、但容量很多的内存,如果你的工作集比较大,超过了前一种情况,那么人们总是会选择第二个选项。原因在于辅存(一般为磁盘)的速度。由于工作集超过主存,那么必须用辅存来保存交换出去的那部分数据,而辅存的速度往往要比主存慢上好几个数量级。 好在这问题也并不全然是非甲即乙的选择。在配置大量DRAM的同时,我们还可以配置少量SRAM。将地址空间的某个部分划给SRAM,剩下的部分划给DRAM。一般来说,SRAM可以当作扩展的寄存器来使用。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

While this is a possible implementation, it is not viable. Ignoring the problem of mapping the physical resources of such SRAM-backed memory to the virtual address spaces of the processes (which by itself is terribly hard) this approach would require each process to administer in software the allocation of this memory region. The size of the memory region can vary from processor to processor (i.e., processors have different amounts of the expensive SRAM-backed memory). Each module which makes up part of a program will claim its share of the fast memory, which introduces additional costs through synchronization requirements. In short, the gains of having fast memory would be eaten up completely by the overhead of administering the resources. |

译者信息 上面的做法看起来似乎可以,但实际上并不可行。首先,将SRAM内存映射到进程的虚拟地址空间就是个非常复杂的工作,而且,在这种做法中,每个进程都需要管理这个SRAM区内存的分配。每个进程可能有大小完全不同的SRAM区,而组成程序的每个模块也需要索取属于自身的SRAM,更引入了额外的同步需求。简而言之,快速内存带来的好处完全被额外的管理开销给抵消了。 上面的做法看起来似乎可以,但实际上并不可行。首先,将SRAM内存映射到进程的虚拟地址空间就是个非常复杂的工作,而且,在这种做法中,每个进程都需要管理这个SRAM区内存的分配。每个进程可能有大小完全不同的SRAM区,而组成程序的每个模块也需要索取属于自身的SRAM,更引入了额外的同步需求。简而言之,快速内存带来的好处完全被额外的管理开销给抵消了。 |

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

So, instead of putting the SRAM under the control of the OS or user, it becomes a resource which is transparently used and administered by the processors. In this mode, SRAM is used to make temporary copies of (to cache, in other words) data in main memory which is likely to be used soon by the processor. This is possible because program code and data has temporal and spatial locality. This means that, over short periods of time, there is a good chance that the same code or data gets reused. For code this means that there are most likely loops in the code so that the same code gets executed over and over again (the perfect case forspatial locality). Data accesses are also ideally limited to small regions. Even if the memory used over short time periods is not close together there is a high chance that the same data will be reused before long (temporal locality). For code this means, for instance, that in a loop a function call is made and that function is located elsewhere in the address space. The function may be distant in memory, but calls to that function will be close in time. For data it means that the total amount of memory used at one time (the working set size) is ideally limited but the memory used, as a result of the random access nature of RAM, is not close together. Realizing that locality exists is key to the concept of CPU caches as we use them today. |

译者信息 因此,SRAM是作为CPU自动使用和管理的一个资源,而不是由OS或者用户管理的。在这种模式下,SRAM用来复制保存(或者叫缓存)主内存中有可能即将被CPU使用的数据。这意味着,在较短时间内,CPU很有可能重复运行某一段代码,或者重复使用某部分数据。从代码上看,这意味着CPU执行了一个循环,所以相同的代码一次又一次地执行(空间局部性的绝佳例子)。数据访问也相对局限在一个小的区间内。即使程序使用的物理内存不是相连的,在短期内程序仍然很有可能使用同样的数据(时间局部性)。这个在代码上表现为,程序在一个循环体内调用了入口一个位于另外的物理地址的函数。这个函数可能与当前指令的物理位置相距甚远,但是调用的时间差不大。在数据上表现为,程序使用的内存是有限的(相当于工作集的大小)。但是实际上由于RAM的随机访问特性,程序使用的物理内存并不是连续的。正是由于空间局部性和时间局部性的存在,我们才提炼出今天的CPU缓存概念。 因此,SRAM是作为CPU自动使用和管理的一个资源,而不是由OS或者用户管理的。在这种模式下,SRAM用来复制保存(或者叫缓存)主内存中有可能即将被CPU使用的数据。这意味着,在较短时间内,CPU很有可能重复运行某一段代码,或者重复使用某部分数据。从代码上看,这意味着CPU执行了一个循环,所以相同的代码一次又一次地执行(空间局部性的绝佳例子)。数据访问也相对局限在一个小的区间内。即使程序使用的物理内存不是相连的,在短期内程序仍然很有可能使用同样的数据(时间局部性)。这个在代码上表现为,程序在一个循环体内调用了入口一个位于另外的物理地址的函数。这个函数可能与当前指令的物理位置相距甚远,但是调用的时间差不大。在数据上表现为,程序使用的内存是有限的(相当于工作集的大小)。但是实际上由于RAM的随机访问特性,程序使用的物理内存并不是连续的。正是由于空间局部性和时间局部性的存在,我们才提炼出今天的CPU缓存概念。 |