Environment

- Linux: CentOS 7

- Redis: redis-5.0.9

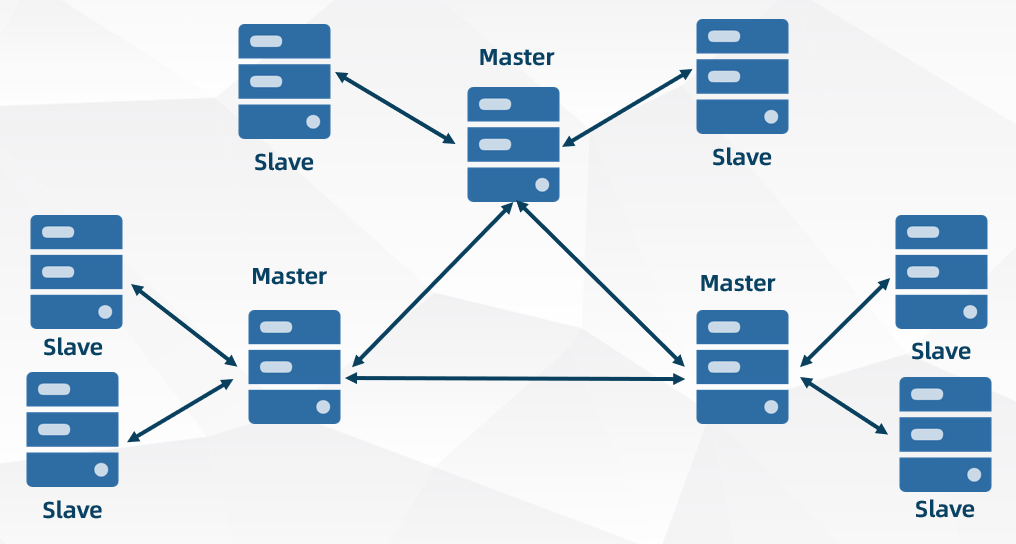

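Cluster environment

| Cluster node | Description |
| --- | --- |
| node-01 | 1 master, 2 replicas |
| node-02 | 1 master, 2 replicas |
| node-03 | 1 master, 2 replicas |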

Step 1: Enable cluster mode on node-01

[root@node-01 redis-5.0.9]# vim 6379redis.conf

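# Run in the background
daemonize yes

# Enable cluster mode
cluster-enabled yes

# Cluster state file (generated automatically on first start)
cluster-config-file nodes-6379.conf

# Node timeout in milliseconds: how long a node may be unreachable before it is considered failing
cluster-node-timeout 15000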

[root@node-01 redis-5.0.9]# vim 6380redis.conf

daemonize yes

cluster-enabled yes

cluster-config-file nodes-6380.conf

cluster-node-timeout 15000

[root@node-01 redis-5.0.9]# vim 6381redis.conf

daemonize yes

cluster-enabled yes

cluster-config-file nodes-6381.conf

cluster-node-timeout 15000
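The three files differ only in the port number, so they could be generated from one template. The following is a minimal Python sketch, not part of the original setup. It assumes each file also carries a `port` directive (not shown in the snippets above, although every instance on a host needs its own port), and `render_cluster_conf` is a hypothetical helper name.

```python
def render_cluster_conf(port: int) -> str:
    """Return the cluster-related settings for one Redis instance."""
    lines = [
        f"port {port}",  # assumption: not shown in the original snippets
        "daemonize yes",
        "cluster-enabled yes",
        f"cluster-config-file nodes-{port}.conf",
        "cluster-node-timeout 15000",
    ]
    return "\n".join(lines) + "\n"


# Print the settings for the three instances used in this guide.
for port in (6379, 6380, 6381):
    print(f"# {port}redis.conf")
    print(render_cluster_conf(port))
```

The printed lines would still have to be merged into a complete redis.conf for each instance; the sketch only covers the cluster-related settings.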

Step 2: Copy the Redis configuration files from node-01 to node-02 and node-03

[root@node-01 redis-5.0.9]# scp 6379redis.conf node-02:$PWD

[root@node-01 redis-5.0.9]# scp 6380redis.conf node-02:$PWD

[root@node-01 redis-5.0.9]# scp 6381redis.conf node-02:$PWD

[root@node-01 redis-5.0.9]# scp 6379redis.conf node-03:$PWD

[root@node-01 redis-5.0.9]# scp 6380redis.conf node-03:$PWD

[root@node-01 redis-5.0.9]# scp 6381redis.conf node-03:$PWD

Step 3: Start the Redis servers on node-01, node-02, and node-03

Note: before starting the Redis servers, clear any old data by deleting all *.aof, *.rdb, and nodes-* files in the directory.
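The cleanup in the note above can be scripted. This is a small Python sketch under the assumption that all instance files live in a single directory; `find_stale_files` and `remove_stale_files` are hypothetical helper names, and the function defaults to a dry run so nothing is deleted by accident.

```python
from pathlib import Path
from typing import List

# File patterns named in the note: AOF files, RDB snapshots, cluster state files.
STALE_PATTERNS = ("*.aof", "*.rdb", "nodes-*")


def find_stale_files(directory: str) -> List[Path]:
    """List old data files in `directory` that match the patterns."""
    base = Path(directory)
    found = []
    for pattern in STALE_PATTERNS:
        found.extend(p for p in base.glob(pattern) if p.is_file())
    return sorted(found)


def remove_stale_files(directory: str, dry_run: bool = True) -> List[Path]:
    """Delete the stale files unless dry_run is set; return what was matched."""
    stale = find_stale_files(directory)
    if not dry_run:
        for path in stale:
            path.unlink()
    return stale
```

Calling `remove_stale_files(".")` only reports the matches; passing `dry_run=False` actually deletes them. Configuration files such as 6379redis.conf do not match any of the patterns and are left alone.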

[root@node-01 redis-5.0.9]# src/redis-server 6379redis.conf

[root@node-01 redis-5.0.9]# src/redis-server 6380redis.conf

[root@node-01 redis-5.0.9]# src/redis-server 6381redis.conf

[root@node-02 redis-5.0.9]# src/redis-server 6379redis.conf

[root@node-02 redis-5.0.9]# src/redis-server 6380redis.conf

[root@node-02 redis-5.0.9]# src/redis-server 6381redis.conf

[root@node-03 redis-5.0.9]# src/redis-server 6379redis.conf

[root@node-03 redis-5.0.9]# src/redis-server 6380redis.conf

[root@node-03 redis-5.0.9]# src/redis-server 6381redis.conf

Step 4: Check that the Redis servers started successfully

[root@node-01 redis-5.0.9]# ps -ef | grep redis

root 1572 1 0 14:39 ? 00:00:00 src/redis-server *:6379 [cluster]

root 1577 1 0 14:40 ? 00:00:00 src/redis-server *:6380 [cluster]

root 1582 1 0 14:40 ? 00:00:00 src/redis-server *:6381 [cluster]

root 1628 1200 0 14:45 pts/0 00:00:00 grep --color=auto redis

[root@node-02 redis-5.0.9]# ps -ef | grep redis

root 1572 1 0 14:39 ? 00:00:00 src/redis-server *:6379 [cluster]

root 1577 1 0 14:40 ? 00:00:00 src/redis-server *:6380 [cluster]

root 1582 1 0 14:40 ? 00:00:00 src/redis-server *:6381 [cluster]

root 1628 1200 0 14:45 pts/0 00:00:00 grep --color=auto redis

[root@node-03 redis-5.0.9]# ps -ef | grep redis

root 1572 1 0 14:39 ? 00:00:00 src/redis-server *:6379 [cluster]

root 1577 1 0 14:40 ? 00:00:00 src/redis-server *:6380 [cluster]

root 1582 1 0 14:40 ? 00:00:00 src/redis-server *:6381 [cluster]

root 1628 1200 0 14:45 pts/0 00:00:00 grep --color=auto redis

The output above shows that all Redis servers on node-01, node-02, and node-03 started successfully.
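As a complement to `ps`, the instances can also be probed over the network from any machine. The sketch below is an illustration only: it checks that a TCP port accepts connections, which says nothing about whether the server runs in cluster mode. `is_listening` and `check_instances` are hypothetical helper names.

```python
import socket
from typing import Dict, Iterable


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_instances(hosts: Iterable[str], ports: Iterable[int],
                    timeout: float = 1.0) -> Dict[str, bool]:
    """Probe every host/port pair and map 'host:port' to its status."""
    ports = list(ports)
    return {
        f"{host}:{port}": is_listening(host, port, timeout)
        for host in hosts
        for port in ports
    }
```

For this guide the call would be `check_instances(["192.168.229.21", "192.168.229.22", "192.168.229.23"], [6379, 6380, 6381])`, with the addresses taken from Step 5.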

Step 5: Form a cluster from the Redis servers on node-01, node-02, and node-03

[root@node-01 redis-5.0.9]# src/redis-cli --cluster create 192.168.229.21:6379 192.168.229.22:6379 192.168.229.23:6379 192.168.229.21:6380 192.168.229.22:6380 192.168.229.23:6380 192.168.229.21:6381 192.168.229.22:6381 192.168.229.23:6381 --cluster-replicas 2

>>> Performing hash slots allocation on 9 nodes...

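# Slot allocation for the cluster masters

Master[0] -> Slots 0 - 5460 # slots assigned to master 1

Master[1] -> Slots 5461 - 10922 # slots assigned to master 2

Master[2] -> Slots 10923 - 16383 # slots assigned to master 3

# Replica assignment for the cluster masters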

Adding replica 192.168.229.22:6380 to 192.168.229.21:6379

Adding replica 192.168.229.23:6380 to 192.168.229.21:6379

Adding replica 192.168.229.21:6381 to 192.168.229.22:6379

Adding replica 192.168.229.23:6381 to 192.168.229.22:6379

Adding replica 192.168.229.22:6381 to 192.168.229.23:6379

Adding replica 192.168.229.21:6380 to 192.168.229.23:6379

# List of cluster masters and replicas

M: 00b296e636d06837ecc1f17768b3800c41c2ef7e 192.168.229.21:6379

slots:[0-5460] (5461 slots) master

M: ed5302936686947da2f27346915ddcffd97b4b01 192.168.229.22:6379

slots:[5461-10922] (5462 slots) master

M: 56f2373e93031b1853cc552d2e87b3271137b000 192.168.229.23:6379

slots:[10923-16383] (5461 slots) master

S: 1c1bb17d0ef38a8cf75a60cb18ef56fb44361a79 192.168.229.21:6380

replicates 56f2373e93031b1853cc552d2e87b3271137b000

S: 670f70b2b4360b8c1a09b68cbc61eb26437b0ff0 192.168.229.22:6380

replicates 00b296e636d06837ecc1f17768b3800c41c2ef7e

S: 5a20c0b201dfbc34199bf38bae1ccd3dc20adc6b 192.168.229.23:6380

replicates 00b296e636d06837ecc1f17768b3800c41c2ef7e

S: cebc39c8ee5d8069599c7ae648c658f86a36fe07 192.168.229.21:6381

replicates ed5302936686947da2f27346915ddcffd97b4b01

S: 47c3cfc28913c60f3570f7a8d571d0efb0fcbe4d 192.168.229.22:6381

replicates 56f2373e93031b1853cc552d2e87b3271137b000

S: 1fe5ae70e20f4d333e5e00059fa57f8d57d77f9a 192.168.229.23:6381

replicates ed5302936686947da2f27346915ddcffd97b4b01

Can I set the above configuration? (type 'yes' to accept): yes # type yes here

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.......

>>> Performing Cluster Check (using node 192.168.229.21:6379)

M: 00b296e636d06837ecc1f17768b3800c41c2ef7e 192.168.229.21:6379

slots:[0-5460] (5461 slots) master

2 additional replica(s)

S: 5a20c0b201dfbc34199bf38bae1ccd3dc20adc6b 192.168.229.23:6380

slots: (0 slots) slave

replicates 00b296e636d06837ecc1f17768b3800c41c2ef7e

M: 56f2373e93031b1853cc552d2e87b3271137b000 192.168.229.23:6379

slots:[10923-16383] (5461 slots) master

2 additional replica(s)

S: 1c1bb17d0ef38a8cf75a60cb18ef56fb44361a79 192.168.229.21:6380

slots: (0 slots) slave

replicates 56f2373e93031b1853cc552d2e87b3271137b000

M: ed5302936686947da2f27346915ddcffd97b4b01 192.168.229.22:6379

slots:[5461-10922] (5462 slots) master

2 additional replica(s)

S: cebc39c8ee5d8069599c7ae648c658f86a36fe07 192.168.229.21:6381

slots: (0 slots) slave

replicates ed5302936686947da2f27346915ddcffd97b4b01

S: 1fe5ae70e20f4d333e5e00059fa57f8d57d77f9a 192.168.229.23:6381

slots: (0 slots) slave

replicates ed5302936686947da2f27346915ddcffd97b4b01

S: 47c3cfc28913c60f3570f7a8d571d0efb0fcbe4d 192.168.229.22:6381

slots: (0 slots) slave

replicates 56f2373e93031b1853cc552d2e87b3271137b000

S: 670f70b2b4360b8c1a09b68cbc61eb26437b0ff0 192.168.229.22:6380

slots: (0 slots) slave

replicates 00b296e636d06837ecc1f17768b3800c41c2ef7e

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

- --cluster create: creates the cluster from the listed nodes

- --cluster-replicas 2: gives every master in the cluster 2 replicas
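The numbers in the transcript follow from simple arithmetic: 9 nodes with 2 replicas per master leave 3 masters, and the 16384 hash slots are divided among them. The Python sketch below reproduces the ranges printed by `--cluster create`. It is an approximation written for this guide and the exact rounding inside redis-cli may differ in other cases; `master_count` and `split_slots` are hypothetical helper names.

```python
import math
from typing import List, Tuple

TOTAL_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def master_count(total_nodes: int, replicas_per_master: int) -> int:
    """Number of masters left when each master gets the given replicas."""
    masters = total_nodes // (replicas_per_master + 1)
    if masters < 3:
        raise ValueError("Redis Cluster needs at least 3 masters")
    return masters


def split_slots(masters: int) -> List[Tuple[int, int]]:
    """Divide the slot space into contiguous (first, last) ranges."""
    if masters < 1:
        raise ValueError("masters must be at least 1")
    per_master = TOTAL_SLOTS / masters
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(masters):
        # Round the running boundary to the nearest slot number.
        last = math.floor(cursor + per_master - 1 + 0.5)
        if i == masters - 1 or last > TOTAL_SLOTS - 1:
            last = TOTAL_SLOTS - 1  # the last master takes the remainder
        last = max(last, first)
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


masters = master_count(total_nodes=9, replicas_per_master=2)
for index, (first, last) in enumerate(split_slots(masters)):
    print(f"Master[{index}] -> Slots {first} - {last} ({last - first + 1} slots)")
```

For 9 nodes and 2 replicas per master this yields 3 masters with the same three ranges as the transcript in this step.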

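Step 6: Connect to a cluster master with the Redis client

-c: enables cluster mode, so the client follows redirections between nodes

-h: IP address of the cluster node to connect to (a master here)

-p: port of that node

[root@node-01 redis-5.0.9]# src/redis-cli -c -h 192.168.229.21 -p 6379 # connect the Redis client to a cluster master

192.168.229.21:6379> cluster info # show cluster information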

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:9

cluster_size:3

cluster_current_epoch:12

cluster_my_epoch:1

cluster_stats_messages_ping_sent:6633

cluster_stats_messages_pong_sent:2231

cluster_stats_messages_meet_sent:2

cluster_stats_messages_sent:8866

cluster_stats_messages_ping_received:2225

cluster_stats_messages_pong_received:2207

cluster_stats_messages_meet_received:6

cluster_stats_messages_fail_received:2

cluster_stats_messages_auth-req_received:3

cluster_stats_messages_received:4443

192.168.229.21:6379> cluster nodes # show information about each node in the cluster

192.168.229.22:6379@16379 myself,master - 0 1615900052000 2 connected 5461-10922 # cluster master

192.168.229.21:6379@16379 master - 0 1615900058000 1 connected 0-5460 # cluster master

192.168.229.22:6380@16380 slave 0 1615900055542 5 connected

192.168.229.23:6380@16380 slave 0 1615900059581 6 connected

192.168.229.23:6379@16379 master - 0 1615900057559 3 connected 10923-16383 # cluster master

192.168.229.23:6381@16381 slave 0 1615900060594 9 connected

192.168.229.22:6381@16381 slave 0 1615900058569 8 connected

192.168.229.21:6380@16380 slave 0 1615900057000 4 connected

192.168.229.21:6381@16381 slave 0 1615900059000 7 connected
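The `cluster info` output in this step is a list of `key:value` lines, which makes it easy to check from a script. Below is a minimal Python sketch of such a check. The health criteria (state `ok`, all 16384 slots assigned and ok, expected node count) are an assumption chosen for this guide, and `parse_cluster_info` and `is_healthy` are hypothetical helper names.

```python
from typing import Dict, Union

Value = Union[int, str]


def parse_cluster_info(text: str) -> Dict[str, Value]:
    """Turn 'key:value' lines into a dict, converting numbers to int."""
    info: Dict[str, Value] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue
        key, _, value = line.partition(":")
        info[key] = int(value) if value.isdigit() else value
    return info


def is_healthy(info: Dict[str, Value], expected_nodes: int) -> bool:
    """Check state, slot coverage, and the number of known nodes."""
    return (
        info.get("cluster_state") == "ok"
        and info.get("cluster_slots_assigned") == 16384
        and info.get("cluster_slots_ok") == 16384
        and info.get("cluster_known_nodes") == expected_nodes
    )


# First lines of the output shown in this step.
sample = """cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:9
cluster_size:3"""

print(is_healthy(parse_cluster_info(sample), expected_nodes=9))
```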

Step 7: Test the cluster

Connect to the master on node-01 and add some data:

192.168.229.21:6379> set name zhangsan

-> Redirected to slot [5798] located at 192.168.229.22:6379

OK

192.168.229.22:6379> get name

"zhangsan"

As you can see, the data was not stored on node-01's master but on node-02's, and the Redis client automatically switched from node-01 to node-02.
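Behind the "Redirected to slot" line, the server that does not own the slot answers with a redirection error of the form `MOVED <slot> <host>:<port>`, and a client started with -c follows it. The Python sketch below only parses such a reply to show what information it carries. `Redirect` and `parse_redirect` are hypothetical names, and the sample string is constructed from the values in the session above rather than captured from a server.

```python
from typing import NamedTuple


class Redirect(NamedTuple):
    kind: str   # "MOVED" or "ASK"
    slot: int   # hash slot of the key
    host: str   # node that serves the slot
    port: int


def parse_redirect(error: str) -> Redirect:
    """Parse a Redis Cluster redirection error message."""
    parts = error.split()
    if len(parts) != 3 or parts[0] not in ("MOVED", "ASK"):
        raise ValueError(f"not a redirection: {error!r}")
    host, _, port = parts[2].rpartition(":")
    return Redirect(parts[0], int(parts[1]), host, int(port))


print(parse_redirect("MOVED 5798 192.168.229.22:6379"))
```

A cluster-aware client would reconnect to the returned host and port and repeat the command there, which is what redis-cli does when -c is given.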

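Why was the data stored on node-02 rather than node-01? Redis Cluster maps every key to one of 16384 hash slots (the CRC16 of the key modulo 16384). The key name maps to slot 5798, which falls within node-02's range of 5461 - 10922, so the key is stored on node-02.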
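The slot lookup can be reproduced outside Redis. Redis Cluster uses the CRC16 variant known as XMODEM (polynomial 0x1021, initial value 0) and takes the result modulo 16384; if the key contains a non-empty `{...}` hash tag, only the tag is hashed. The Python sketch below implements that rule and then looks up the owner in the slot ranges from Step 5. `SLOT_OWNERS` and the function names are hypothetical and written for this guide.

```python
from typing import Union


def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16 (polynomial 0x1021, initial value 0)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_tag(key: bytes) -> bytes:
    """Return the part of the key that is hashed (hash tag rule)."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            return key[start + 1:end]
    return key


def key_slot(key: Union[str, bytes]) -> int:
    """Hash slot of a key: CRC16 of the hashed part modulo 16384."""
    raw = key.encode() if isinstance(key, str) else key
    return crc16_xmodem(hash_tag(raw)) % 16384


# Slot ranges assigned to the three masters in Step 5.
SLOT_OWNERS = [
    (0, 5460, "192.168.229.21:6379"),
    (5461, 10922, "192.168.229.22:6379"),
    (10923, 16383, "192.168.229.23:6379"),
]


def owner_of_slot(slot: int) -> str:
    """Find the master whose range contains the slot."""
    for first, last, node in SLOT_OWNERS:
        if first <= slot <= last:
            return node
    raise ValueError(f"slot out of range: {slot}")


slot = key_slot("name")
print(slot, owner_of_slot(slot))
```

The printed slot can be compared with the one reported in the redirect above; `CLUSTER KEYSLOT name` on any node gives the server's own answer.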

Now check the data on node-02:

192.168.229.22:6379> get name

"zhangsan"

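Step 8: Test cluster failover

To trigger a failover, the simplest thing we can do (which is also the semantically simplest failure that can occur in a distributed system) is to crash a single process.

Alternatively, you can simply stop the Redis server with shutdown.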

[root@node-01 redis-5.0.9]# src/redis-cli -c -h 192.168.229.22 -p 6379 debug segfault

Error: Server closed the connection

As you can see, we crashed the master on node-02. Now check the state of the cluster nodes:

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.22 -p 6380

192.168.229.22:6380> cluster nodes

192.168.229.23:6379@16379 master - 0 1615900421261 3 connected 10923-16383

192.168.229.22:6379@16379 master,fail - 16159003931 2 disconnected 5461-10922 # node-02:6379 has failed and is offline

192.168.229.21:6381@16381 slave 0 1615900419000 7 connected

192.168.229.23:6381@16381 slave 0 1615900419236 9 connected

192.168.229.21:6380@16380 slave 0 1615900420247 4 connected

192.168.229.22:6380@16380 myself,slave 0 1615900417000 5 connected # the node being queried; it remains a replica

192.168.229.23:6380@16380 slave 0 1615900420000 6 connected

192.168.229.22:6381@16381 slave 0 1615900417000 8 connected

192.168.229.21:6379@16379 master - 0 1615900417212 1 connected 0-5460

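The output above shows that node-02:6379 has been marked as failed. The cluster then promotes one of its replicas, 192.168.229.21:6381 or 192.168.229.23:6381, to master. Now restart node-02:6379: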

[root@node-02 redis-5.0.9]# src/redis-server 6379redis.conf

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.22 -p 6380

192.168.229.22:6380> cluster nodes

192.168.229.23:6379@16379 master - 0 1615900858050 3 connected 10923-16383

192.168.229.22:6379@16379 slave 0 1615900856026 12 connected # demoted to replica

192.168.229.21:6381@16381 slave 0 1615900857040 12 connected

192.168.229.23:6381@16381 master - 0 1615900855019 12 connected 5461-10922 # promoted to master

192.168.229.21:6380@16380 slave 0 1615900856000 4 connected

192.168.229.22:6380@16380 myself,slave 0 1615900855000 5 connected

192.168.229.23:6380@16380 slave 0 1615900855000 6 connected

192.168.229.22:6381@16381 slave 0 1615900854005 8 connected

192.168.229.21:6379@16379 master - 0 1615900854000 1 connected 0-5460

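The output above shows that 192.168.229.23:6381 has taken over slots 5461-10922 as the new master, and that the restarted node-02:6379 has rejoined the cluster as a replica. The cluster setup is now complete :)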
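Which nodes can take over for a failed master follows directly from the replica assignment printed in Step 5: only replicas of that master are candidates. The short Python sketch below encodes that assignment as a lookup table. `REPLICA_OF` is copied from the "Adding replica" lines, and `failover_candidates` is a hypothetical helper name.

```python
from typing import List

# replica -> master, as assigned by `--cluster create` in Step 5
REPLICA_OF = {
    "192.168.229.22:6380": "192.168.229.21:6379",
    "192.168.229.23:6380": "192.168.229.21:6379",
    "192.168.229.21:6381": "192.168.229.22:6379",
    "192.168.229.23:6381": "192.168.229.22:6379",
    "192.168.229.22:6381": "192.168.229.23:6379",
    "192.168.229.21:6380": "192.168.229.23:6379",
}


def failover_candidates(failed_master: str) -> List[str]:
    """Replicas that may be promoted when the given master fails."""
    return sorted(
        replica for replica, master in REPLICA_OF.items()
        if master == failed_master
    )


print(failover_candidates("192.168.229.22:6379"))
```

For the crashed master 192.168.229.22:6379 the candidates are 192.168.229.21:6381 and 192.168.229.23:6381, which agrees with the final `cluster nodes` output where 192.168.229.23:6381 owns slots 5461-10922.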

Step 9: Shut down the cluster

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.21 -p 6381 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.21 -p 6380 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.21 -p 6379 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.22 -p 6381 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.22 -p 6380 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.22 -p 6379 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.23 -p 6381 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.23 -p 6380 shutdown

[root@node-02 redis-5.0.9]# src/redis-cli -c -h 192.168.229.23 -p 6379 shutdown
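The nine shutdown commands follow one pattern, so they can be generated in a loop. This Python sketch builds the same command lines and, by default, only prints them. It assumes it is run from the redis-5.0.9 directory so that `src/redis-cli` resolves, and `shutdown_commands` and `shutdown_cluster` are hypothetical helper names.

```python
import subprocess
from typing import Iterable, List

HOSTS = ["192.168.229.21", "192.168.229.22", "192.168.229.23"]
PORTS = [6381, 6380, 6379]  # same order as the commands above


def shutdown_commands(hosts: Iterable[str], ports: Iterable[int]) -> List[List[str]]:
    """Build one `redis-cli ... shutdown` argument list per instance."""
    ports = list(ports)
    return [
        ["src/redis-cli", "-c", "-h", host, "-p", str(port), "shutdown"]
        for host in hosts
        for port in ports
    ]


def shutdown_cluster(hosts: Iterable[str], ports: Iterable[int],
                     dry_run: bool = True) -> List[List[str]]:
    """Print the commands, or run them when dry_run is False."""
    commands = shutdown_commands(hosts, ports)
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=False)
    return commands


shutdown_cluster(HOSTS, PORTS)  # dry run: prints the nine commands
```

Passing `dry_run=False` executes the commands one after another instead of printing them.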