pom:

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.48</version>

</dependency>

val conf = new SparkConf().setMaster("local").setAppName("test") val ss = SparkSession.builder().config(conf).getOrCreate() val sc = ss.sparkContext sc.setLogLevel("INFO") val pro = new Properties() pro.put("url", "jdbc:mysql://192.168.75.85/spark") pro.put("user", "root") pro.put("password", "aa123456") pro.put("driver", "com.mysql.jdbc.Driver") val oneDF : DataFrame = ss.read.jdbc(pro.get("url").toString, "one", pro) val scoreDF : DataFrame = ss.read.jdbc(pro.get("url").toString, "score", pro) oneDF.createTempView("oneNew") scoreDF.createTempView("scoreNew") val resDF: DataFrame = ss.sql("select a.name aName, b.name as bName, a.age, b.num from oneNew as a join scoreNew as b on a.name = b.name") resDF.show() resDF.printSchema()

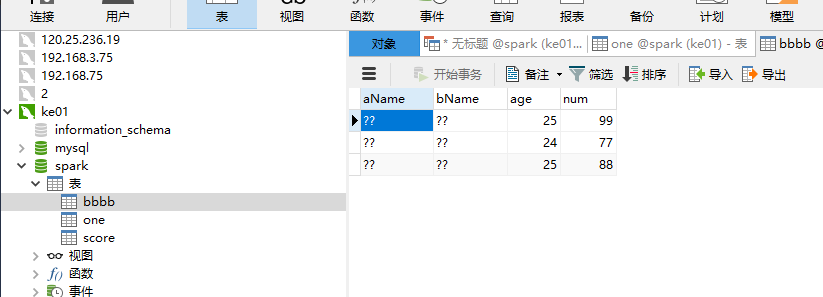

//数据写入到了mysql,需要表bbbb不存在,如果存在会保存失败 resDF.write.jdbc(pro.get("url").toString,"bbbb",pro)

结果

+-----+-----+---+---+ |aName|bName|age|num| +-----+-----+---+---+ | 小柯| 小柯| 25| 99| | 寂寞| 寂寞| 24| 77| | 真的| 真的| 25| 88| +-----+-----+---+---+ root |-- aName: string (nullable = true) |-- bName: string (nullable = true) |-- age: integer (nullable = true) |-- num: integer (nullable = true)

优化:

.config("spark.sql.shuffle.partitions", 1) 以上代码启动日志中分区数量200,可以增加该配置减少分区数量,