作业一

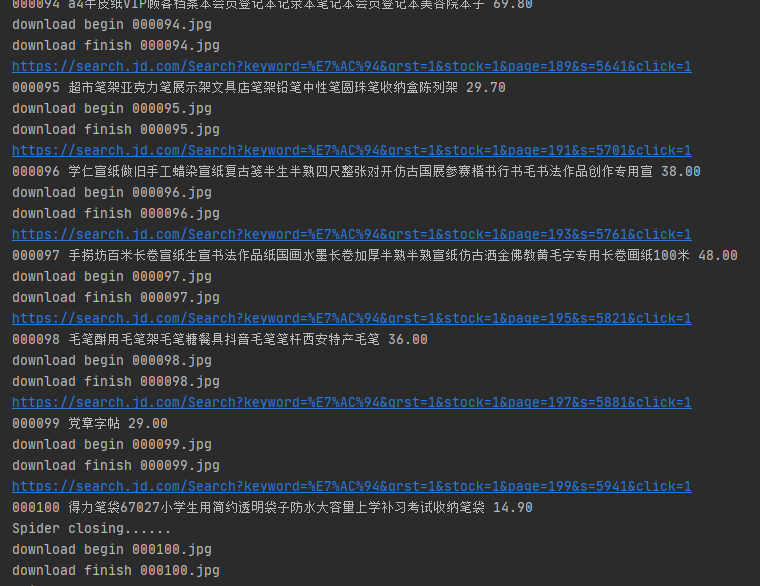

使用Selenium框架爬取京东商城某类商品信息及图片。

成果截图

代码

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import urllib.request

import threading

import sqlite3

import os

import datetime

from selenium.webdriver.common.keys import Keys

import time

class MySpider:

headers = {

"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

imagePath = "download"

def startUp(self, url, key):

# # Initializing Chrome browser

chrome_options = Options()

chrome_options.add_argument('--headless')

chrome_options.add_argument('--disable-gpu')

self.driver = webdriver.Chrome(chrome_options=chrome_options)

# Initializing variables

self.threads = []

self.No = 0

self.imgNo = 0

# Initializing database

try:

self.con = sqlite3.connect("phones.db")

self.cursor = self.con.cursor()

try:

# 如果有表就删除

self.cursor.execute("drop table phones")

except:

pass

try:

sql = "create table phones (mNo varchar(32) primary key, mMark varchar(256),mPrice varchar(32),mNote varchar(1024),mFile varchar(256))"

self.cursor.execute(sql)

except:

pass

except Exception as err:

print(err)

# Initializing images folder

try:

if not os.path.exists(MySpider.imagePath):

os.mkdir(MySpider.imagePath)

images = os.listdir(MySpider.imagePath)

for img in images:

s = os.path.join(MySpider.imagePath, img)

os.remove(s)

except Exception as err:

print(err)

self.driver.get(url)

keyInput = self.driver.find_element_by_id("key")

keyInput.send_keys(key)

keyInput.send_keys(Keys.ENTER)

def closeUp(self):

try:

self.con.commit()

self.con.close()

self.driver.close()

except Exception as err:

print(err);

def insertDB(self, mNo, mMark, mPrice, mNote, mFile):

try:

sql = "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (?,?,?,?,?)"

self.cursor.execute(sql, (mNo, mMark, mPrice, mNote, mFile))

except Exception as err:

print(err)

def showDB(self):

try:

con = sqlite3.connect("phones.db")

cursor =con.cursor()

print("%-8s%-16s%-8s%-16s%s"%("No", "Mark", "Price", "Image", "Note"))

cursor.execute("select mNo,mMark,mPrice,mFile,mNote from phones order by mNo")

rows = cursor.fetchall()

for row in rows:

print("%-8s %-16s %-8s %-16s %s" % (row[0], row[1], row[2], row[3],row[4]))

con.close()

except Exception as err:

print(err)

def download(self, src1, src2, mFile):

data = None

if src1:

try:

req = urllib.request.Request(src1, headers=MySpider.headers)

resp = urllib.request.urlopen(req, timeout=10)

data = resp.read()

except:

pass

if not data and src2:

try:

req = urllib.request.Request(src2, headers=MySpider.headers)

resp = urllib.request.urlopen(req, timeout=10)

data = resp.read()

except:

pass

if data:

print("download begin", mFile)

fobj = open(MySpider.imagePath + "\" + mFile, "wb")

fobj.write(data)

fobj.close()

print("download finish", mFile)

def processSpider(self):

try:

time.sleep(1)

print(self.driver.current_url)

lis =self.driver.find_elements_by_xpath("//div[@id='J_goodsList']//li[@class='gl-item']")

for li in lis:

# We find that the image is either in src or in data-lazy-img attribute

try:

src1 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("src")

except:

src1 = ""

try:

src2 = li.find_element_by_xpath(".//div[@class='p-img']//a//img").get_attribute("data-lazy-img")

except:

src2 = ""

try:

price = li.find_element_by_xpath(".//div[@class='p-price']//i").text

except:

price = "0"

try:

note = li.find_element_by_xpath(".//div[@class='p-name p-name-type-2']//em").text

mark = note.split(" ")[0]

mark = mark.replace("爱心东东

", "")

mark = mark.replace(",", "")

note = note.replace("爱心东东

", "")

note = note.replace(",", "")

except:

note = ""

mark = ""

try:

price = li.find_element_by_xpath(".//div[@class='p-price']//i").text

except:

price = "0"

try:

note = li.find_element_by_xpath(".//div[@class='p-name p-name-type-2']//em").text

mark = note.split(" ")[0]

mark = mark.replace("爱心东东

", "")

mark = mark.replace(",", "")

note = note.replace("爱心东东

", "")

note = note.replace(",", "")

except:

note = ""

mark = ""

self.No = self.No + 1

no = str(self.No)

while len(no) < 6:

no = "0" + no

print(no, mark, price)

if src1:

src1 = urllib.request.urljoin(self.driver.current_url, src1)

p = src1.rfind(".")

mFile = no + src1[p:]

elif src2:

src2 = urllib.request.urljoin(self.driver.current_url, src2)

p = src2.rfind(".")

mFile = no + src2[p:]

if src1 or src2:

T = threading.Thread(target=self.download, args=(src1, src2, mFile))

T.setDaemon(False)

T.start()

self.threads.append(T)

else:

mFile = ""

self.insertDB(no, mark, price, note, mFile)

# 取下一页的数据,直到最后一页

try:

self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next disabled']")

except:

nextPage = self.driver.find_element_by_xpath("//span[@class='p-num']//a[@class='pn-next']")

time.sleep(10)

nextPage.click()

self.processSpider()

except Exception as err:

print(err)

def executeSpider(self, url, key):

starttime = datetime.datetime.now()

print("Spider starting......")

self.startUp(url, key)

print("Spider processing......")

self.processSpider()

print("Spider closing......")

self.closeUp()

for t in self.threads:

t.join()

print("Spider completed......")

endtime = datetime.datetime.now()

elapsed = (endtime - starttime).seconds

print("Total ", elapsed, " seconds elapsed")

url = "http://www.jd.com"

spider = MySpider()

while True:

print("1.爬取")

print("2.显示")

print("3.退出")

s = input("请选择(1,2,3):")

if s == "1":

spider.executeSpider(url, "笔")

continue

elif s == "2":

spider.showDB()

continue

elif s == "3":

break

心得体会

复现了代码,理解了一下过程,Xpath的路径搜索是比较重要的,而代码框架正好可以为二三题提供模板,方法可以仿照使用

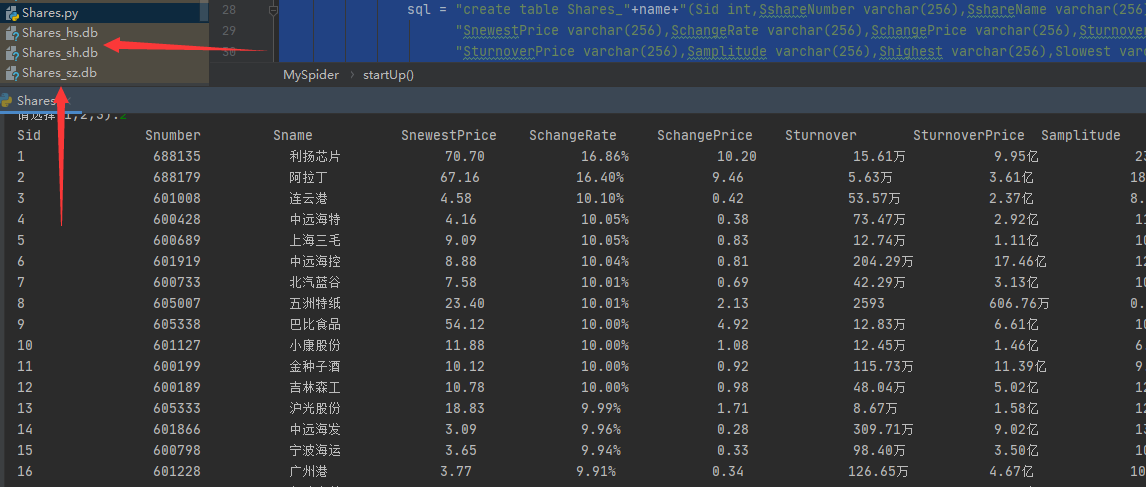

作业二

使用Selenium框架+ MySQL数据库存储技术路线爬取“沪深A股”、“上证A股”、“深证A股”3个板块的股票数据信息。

成果截图

代码

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import urllib.request

import threading

import sqlite3

import os

import datetime

from selenium.webdriver.common.keys import Keys

import time

class MySpider():

def startUp(self, url,name):

# # Initializing Chrome browser

chrome_options = Options()

chrome_options.add_argument('--headless')

chrome_options.add_argument('--disable-gpu')

self.time=1

self.driver = webdriver.Chrome(chrome_options=chrome_options)

# Initializing database

try:

self.con = sqlite3.connect("Shares_"+name+".db")

self.cursor = self.con.cursor()

try:

# 如果有表就删除

self.cursor.execute("drop table Shares_"+name)

except:

pass

try:

sql = "create table Shares_"+name+"(Sid int,SshareNumber varchar(256),SshareName varchar(256),"

"SnewestPrice varchar(256),SchangeRate varchar(256),SchangePrice varchar(256),Sturnover varchar(256),"

"SturnoverPrice varchar(256),Samplitude varchar(256),Shighest varchar(256),Slowest varchar(256),"

"Stoday varchar(256),Syesterday varchar(256))"

self.cursor.execute(sql)

except:

pass

except Exception as err:

print(err)

time.sleep(3)

self.driver.get(url)

def closeUp(self):

try:

self.con.commit()

self.con.close()

self.driver.close()

except Exception as err:

print(err);

def insertDB(self, Sid, Snumber, Sname, SnewestPrice, SchangeRate, SchangePrice, Sturnover, SturnoverPrice

, Samplitude, Shighest, Slowest, Stoday, Syesterday,name):

try:

sql = "insert into Shares_"+name+" (Sid,SshareNumber,SshareName,SnewestPrice,SchangeRate,SchangePrice,Sturnover,SturnoverPrice"

",Samplitude,Shighest,Slowest,Stoday,Syesterday) values(?,?,?,?,?,?,?,?,?,?,?,?,?)"

self.cursor.execute(sql, (

Sid, Snumber, Sname, SnewestPrice, SchangeRate, SchangePrice, Sturnover, SturnoverPrice

, Samplitude, Shighest, Slowest, Stoday, Syesterday))

except Exception as err:

print(err)

def showDB(self,name):

try:

con = sqlite3.connect("Shares_"+name+".db")

cursor = con.cursor()

print("%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s" % (

"Sid", "Snumber", "Sname", "SnewestPrice", "SchangeRate", "SchangePrice", "Sturnover", "SturnoverPrice",

"Samplitude", "Shighest", "Slowest", "Stoday", "Syesterday"))

cursor.execute("select * from Shares_"+name+" order by Sid")

rows = cursor.fetchall()

for row in rows:

print("%-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s " % (

row[0], row[1], row[2], row[3], row[4], row[5], row[6], row[7], row[8], row[9], row[10], row[11],

row[12]))

con.close()

except Exception as err:

print(err)

def processSpider(self,name):

try:

time.sleep(3)

print(self.driver.current_url)

print(4)

list = self.driver.find_elements_by_xpath("//table[@id='table_wrapper-table']/tbody/tr")

print(list)

for li in list:

try:

id = li.find_elements_by_xpath("./td[position()=1]")[0].text

shareNumber = li.find_elements_by_xpath("./td[position()=2]/a")[0].text

shareName = li.find_elements_by_xpath("./td[position()=3]/a")[0].text

newestPrice = li.find_elements_by_xpath("./td[position()=5]/span")[0].text

changeRate = li.find_elements_by_xpath("./td[position()=6]/span")[0].text

changePrice = li.find_elements_by_xpath("./td[position()=7]/span")[0].text

turnover = li.find_elements_by_xpath("./td[position()=8]")[0].text

turnoverPrice = li.find_elements_by_xpath("./td[position()=9]")[0].text

amplitude = li.find_elements_by_xpath("./td[position()=10]")[0].text

highest = li.find_elements_by_xpath("./td[position()=11]/span")[0].text

lowest = li.find_elements_by_xpath("./td[position()=12]/span")[0].text

today = li.find_elements_by_xpath("./td[position()=13]/span")[0].text

yesterday = li.find_elements_by_xpath("./td[position()=14]")[0].text

print(id,shareNumber)

except Exception as err:

print("err")

self.insertDB(id, shareNumber, shareName, newestPrice, changeRate, changePrice,

turnover, turnoverPrice, amplitude, highest, lowest, today, yesterday,name)

try:

self.driver.find_element_by_xpath("//div[@class='dataTables_wrapper']/div[@class='dataTables_paginate "

"paging_input']/span[@class='paginate_page']/a[@class='next paginate_"

"button disabled']")

except:

next = self.driver.find_element_by_xpath("//div[@class='dataTables_wrapper']"

"/div[@class='dataTables_paginate paging_input']"

"/a[@class='next paginate_button']")

time.sleep(10)

next.click()

self.time+=1

if self.time<4:

self.processSpider(name)

except Exception as err:

print(err)

def executeSpider(self, url,name):

starttime = datetime.datetime.now()

print("Spider starting......")

self.startUp(url,name)

print("Spider processing......")

self.processSpider(name)

print("Spider closing......")

self.closeUp()

print("Spider completed......")

endtime = datetime.datetime.now()

elapsed = (endtime - starttime).seconds

print("Total ", elapsed, " seconds elapsed")

token = ["hs", "sh", "sz"]

for t in token:

url = "http://quote.eastmoney.com/center/gridlist.html#" + t + "_a_board"

spider = MySpider()

while True:

print("1.爬取")

print("2.显示")

print("3.退出")

s = input("请选择(1,2,3):")

if s == "1":

spider.executeSpider(url,t)

continue

elif s == "2":

spider.showDB(t)

continue

elif s == "3":

break心得体会

实现三个板块,本来想代码点击板块然后爬信息,但是要鼠标放上去才可以跳出选板块的模块,无法定位,就只好强行修改url,并且在代码中因为数据量过大,就设置了只爬了四页,Xpath和position()=的使用和上一次爬股票的写法是一样的,就是下一页的button按钮需要寻找和观察

作业三

使用Selenium框架+MySQL爬取中国mooc网课程资源信息

运行截图

代码

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import pymysql

import time

import selenium

import datetime

import sqlite3

class MySpider():

def startUp(self, url):

# # Initializing Chrome browser

chrome_options = Options()

# chrome_options.add_argument('--headless')

# chrome_options.add_argument('--disable-gpu')

self.driver = webdriver.Chrome(chrome_options=chrome_options)

self.No=1

# Initializing database

try:

self.con = pymysql.connect(host='127.0.0.1',port=3306,user='root',passwd='axx123123',charset='utf8',db='mydb')

self.cursor = self.con.cursor()

try:

# 如果有表就删除

self.cursor.execute("drop table Mooc")

except:

pass

try:

sql = "create table mooc (Mid int,cCourse varchar(256),cCollege varchar(256),cTeacher varchar(256)"

",cTeam varchar(256),cCount varchar(256),cProcess varchar(256),cBrief varchar(256))"

self.cursor.execute(sql)

except:

pass

except Exception as err:

print(err)

time.sleep(3)

self.driver.get(url)

def closeUp(self):

try:

self.con.commit()

self.con.close()

self.driver.close()

except Exception as err:

print(err)

def insertDB(self, Mid, cCourse, cCollege,cTeacher, cTeam, cCount, cProcess, cBrief):

try:

self.cursor.execute("insert into mooc (Mid, cCourse,cCollege, cTeacher, cTeam, cCount, cProcess, cBrief) "

"values(%s,%s,%s,%s,%s,%s,%s,%s)",

(Mid, cCourse, cCollege,cTeacher, cTeam, cCount, cProcess, cBrief))

except Exception as err:

print(err)

def showDB(self):

try:

con = sqlite3.connect("Mooc.db")

cursor = con.cursor()

print("%-16s%-16s%-16s%-16s%-16s%-16s%-16s%-16s" % (

"Mid", "cCourse", "cCollege","cTeacher", "cTeam", "cCount", "cProcess", "cBrief"))

cursor.execute("select * from Mooc order by Mid")

rows = cursor.fetchall()

for row in rows:

print("%-16s %-16s %-16s %-16s %-16s %-16s %-16s %-16s" % (

row[0], row[1], row[2], row[3], row[4], row[5], row[6], row[7]))

con.close()

except Exception as err:

print(err)

def login(self):

self.driver.maximize_window()

# login

try:

self.driver.find_element_by_xpath("//div[@id='g-body']/div[@id='app']/div[@class='_1FQn4']"

"/div[@class='_2g9hu']/div[@class='_2XYeR']/div[@class='_2yDxF _3luH4']"

"/div[@class='_1Y4Ni']/div[@role='button']").click()

time.sleep(3)

self.driver.find_element_by_xpath("//span[@class='ux-login-set-scan-code_ft_back']").click()

time.sleep(3)

self.driver.find_element_by_xpath("//ul[@class='ux-tabs-underline_hd']/li[position()=2]").click()

time.sleep(3)

login = self.driver.find_elements_by_tag_name("iframe")[1].get_attribute("id")

self.driver.switch_to.frame(login)

self.driver.find_element_by_xpath("//div[@class='u-input box']/input[@type='tel']").send_keys("xxxxxxx")

time.sleep(1)

self.driver.find_element_by_xpath(

"//div[@class='u-input box']/input[@class='j-inputtext dlemail']").send_keys("xxxxxxx")

time.sleep(1)

self.driver.find_element_by_xpath("//a[@class='u-loginbtn btncolor tabfocus ']").click()

time.sleep(5)

self.driver.find_element_by_xpath("//*[@id='app']/div/div/div[1]/div[1]/div[1]/span[1]/a").click()

last = self.driver.window_handles[-1]

self.driver.switch_to.window(last)

time.sleep(8)

except Exception as err:

print(err)

def processSpider(self):

try:

time.sleep(2)

list=self.driver.find_elements_by_xpath("//*[@id='app']/div/div/div[2]/div[2]/div/div[2]/div[1]/div[@class='_2mbYw']")

print(list)

for li in list:

li.click()

time.sleep(2)

new_tab=self.driver.window_handles[-1]

time.sleep(2)

self.driver.switch_to.window(new_tab)

time.sleep(2)

id=self.No

#print(id)

Course=self.driver.find_element_by_xpath("//*[@id='g-body']/div[1]/div/div[3]/div/div[1]/div[1]/span[1]").text

#print(Course)

College=self.driver.find_element_by_xpath("//*[@id='j-teacher']/div/a/img").get_attribute("alt")

#print(College)

Teacher=self.driver.find_element_by_xpath("//*[@id='j-teacher']/div/div/div[2]/div/div/div/div/div/h3").text

#print(Teacher)

Teamlist=self.driver.find_elements_by_xpath("//*[@id='j-teacher']/div/div/div[2]/div/div[@class='um-list-slider_con']/div")

Team=''

for name in Teamlist:

main_name=name.find_element_by_xpath("./div/div/h3[@class='f-fc3']").text

Team+=str(main_name)+" "

#print(Team)

Count=self.driver.find_element_by_xpath("//*[@id='course-enroll-info']/div/div[2]/div[1]/span").text

Count=Count.split(" ")[1]

#print(Count)

Process=self.driver.find_element_by_xpath('//*[@id="course-enroll-info"]/div/div[1]/div[2]/div[1]/span[2]').text

#print(Process)

Brief=self.driver.find_element_by_xpath('//*[@id="j-rectxt2"]').text

#print(Brief)

time.sleep(2)

self.driver.close()

pre_tab=self.driver.window_handles[1]

self.driver.switch_to.window(pre_tab)

time.sleep(2)

self.No+=1

self.insertDB(id, Course, College, Teacher, Team, Count, Process, Brief)

try:

time.sleep(2)

nextpage = self.driver.find_element_by_xpath("//*[@id='app']/div/div/div[2]/div[2]/div/div[2]/div[2]/div/a[10]")

time.sleep(2)

nextpage.click()

self.processSpider()

except:

self.driver.find_element_by_xpath("//a[@class='_3YiUU _1BSqy']")

except Exception as err:

print(err)

def executeSpider(self, url):

starttime = datetime.datetime.now()

print("Spider starting......")

self.startUp(url)

print("Spider processing......")

self.login()

self.processSpider()

print("Spider closing......")

self.closeUp()

print("Spider completed......")

endtime = datetime.datetime.now()

elapsed = (endtime - starttime).seconds

print("Total ", elapsed, " seconds elapsed")

url = "https://www.icourse163.org/"

spider = MySpider()

spider.executeSpider(url)心得体会

登录界面可害惨了,可以点击其他方式登录和手机号登录,无法把内容输入到inputbox里面,找了半天才知道要把当前页面switch到iframe才可以输入,由于网速的问题,没跳转就运行find_element,然后报错以为是自己xpath写错,加上sleep就vans了,爬课程详细信息还要点进去,有switch到frame的教训,知道这个点进去还要switch到当前的页面才可以爬,然后还要关闭,再switch回去,暂时还没解决的问题就是,一页20个爬完,翻到下一页,因为页面留在下面,没显示第21个的框,代码自动化点不到,(只能翻页的时候手动将页面调到最上方),不过还算是熟练了这一系列的开发过程,xpath好香