1. First, prepare the following:

(1) The images to predict, each 32×32 pixels (the network's input size);

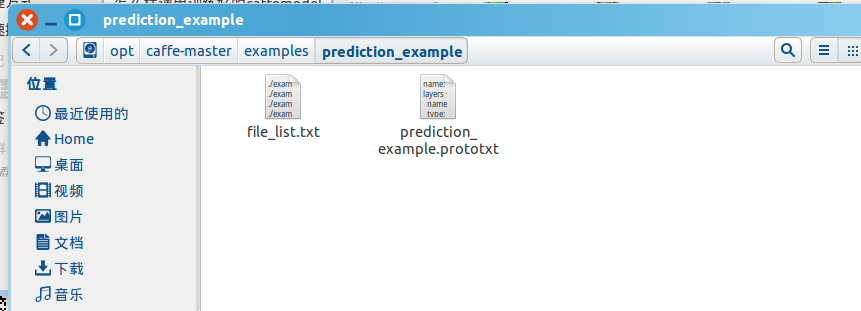
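A Caffe blob stores each image as channels × height × width; the log below reports `output data size: 7,3,32,32`. OpenCV's `cv::Mat`, which the ImageData layer uses to read image files, stores pixels interleaved as height × width × channels instead. The following is a minimal plain C++ sketch of that reordering. It assumes an 8-bit image in a flat buffer. The function name `hwc_to_chw` is hypothetical, and this is not Caffe's own code, since Caffe does this step inside its data transformer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: reorder an interleaved HWC image (cv::Mat layout)
// into the planar CHW layout used by a Caffe input blob.
std::vector<float> hwc_to_chw(const std::vector<std::uint8_t>& hwc,
                              int height, int width, int channels) {
    const std::size_t n = static_cast<std::size_t>(height) * width * channels;
    assert(hwc.size() == n && "buffer size must equal height*width*channels");
    std::vector<float> chw(n);
    for (int h = 0; h < height; ++h) {
        for (int w = 0; w < width; ++w) {
            for (int c = 0; c < channels; ++c) {
                // HWC index: (h*W + w)*C + c   ->   CHW index: (c*H + h)*W + w
                chw[(static_cast<std::size_t>(c) * height + h) * width + w] =
                    hwc[(static_cast<std::size_t>(h) * width + w) * channels + c];
            }
        }
    }
    return chw;
}
```

For a 32×32×3 image the result holds three consecutive 1024-value planes, one per channel.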

(2) The network configuration file (prototxt), plus a list file giving each image's path and its label number.

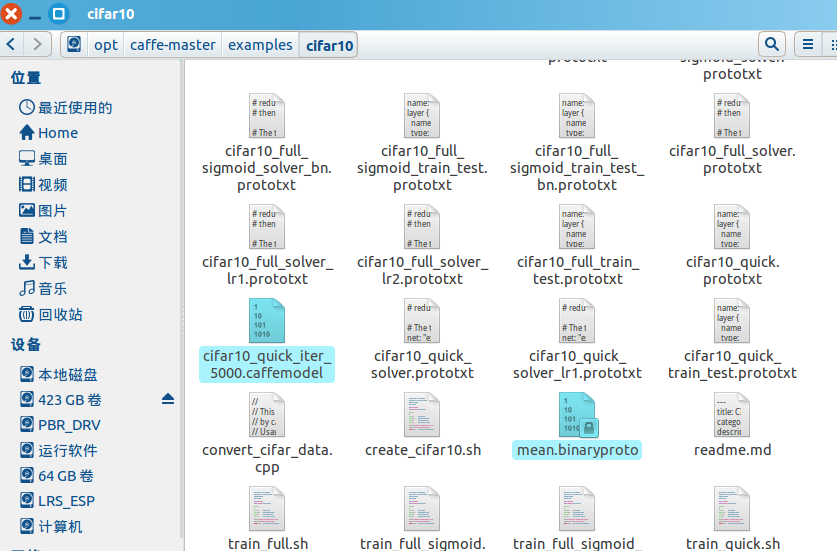
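The ImageData layer reads this list file (`source: "./examples/prediction_example/file_list.txt"` in the log). Each line holds one image: a path, a space, and an integer label. During prediction the label only feeds the accuracy and loss layers, so its value does not change the predicted class. The sketch below reads that format by splitting each line at its last space. As far as I know, recent Caffe versions parse the file the same way, which lets paths contain spaces. The name `parse_file_list` is hypothetical.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical reader for an ImageData list file: "path label" per line.
// The label follows the last space, so paths containing spaces still work.
std::vector<std::pair<std::string, int>> parse_file_list(const std::string& contents) {
    std::vector<std::pair<std::string, int>> entries;
    std::istringstream in(contents);
    std::string line;
    while (std::getline(in, line)) {
        const std::size_t pos = line.find_last_of(' ');
        if (pos == std::string::npos) continue;  // skip blank or malformed lines
        entries.emplace_back(line.substr(0, pos), std::stoi(line.substr(pos + 1)));
    }
    return entries;
}
```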

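(3) The trained caffemodel and the binary mean file (mean.binaryproto). The mean could apparently also be given as fixed values, because `transform_param` accepts per-channel `mean_value` entries in place of `mean_file`. Here, however, it is computed from the training data.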
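In Caffe's cifar10 example, `examples/cifar10/create_cifar10.sh` produces the mean file by running the `compute_image_mean` tool over the training database. When `transform_param { mean_file: ... }` is set, the data transformer subtracts this per-pixel mean image from every input. The sketch below shows both steps on flat C×H×W buffers. The function names are hypothetical, and scaling, cropping, and mirroring are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: element-wise mean over equally sized images
// (each a flat C x H x W buffer), i.e. the kind of data a mean.binaryproto holds.
std::vector<float> compute_mean(const std::vector<std::vector<float>>& images) {
    assert(!images.empty());
    std::vector<float> mean(images[0].size(), 0.0f);
    for (const auto& img : images) {
        assert(img.size() == mean.size());
        for (std::size_t i = 0; i < img.size(); ++i) mean[i] += img[i];
    }
    for (float& m : mean) m /= static_cast<float>(images.size());
    return mean;
}

// Subtract the mean image element-wise, as done for each input before forward.
void subtract_mean(std::vector<float>& image, const std::vector<float>& mean) {
    assert(image.size() == mean.size());
    for (std::size_t i = 0; i < image.size(); ++i) image[i] -= mean[i];
}
```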

(4) The main prediction program.

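The program, `prediction_example.cpp` (judging by the binary path in the log, it was placed in Caffe's `tools/` directory and built with `make`):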

```cpp
#include <cstring>
#include <cstdlib>
#include <vector>
#include <string>
#include <iostream>
#include "caffe/caffe.hpp"
#include "caffe/util/io.hpp"
#include "caffe/blob.hpp"

using namespace caffe;
using namespace std;

int main(int argc, char** argv)
{
    LOG(INFO) << argv[0] << " [GPU] [Device ID]";

    // Select CPU or GPU: pass "GPU" and, optionally, a device ID
    if (argc >= 2 && strcmp(argv[1], "GPU") == 0)
    {
        Caffe::set_mode(Caffe::GPU);
        int device_id = 0;
        if (argc == 3)
        {
            device_id = atoi(argv[2]);
        }
        Caffe::SetDevice(device_id);
        LOG(INFO) << "Using GPU #" << device_id;
    }
    else
    {
        LOG(INFO) << "Using CPU";
        Caffe::set_mode(Caffe::CPU);
    }

    // Caffe::set_phase() no longer exists; the TEST phase is now
    // passed to the Net constructor instead.
    //Caffe::set_phase(Caffe::TEST);

    // Load the network definition (paths assume the Caffe root as the working directory)
    Net<float> net("./examples/prediction_example/prediction_example.prototxt", TEST);

    // Load the pre-trained weights (assumes the cifar10 example has already been trained)
    net.CopyTrainedLayersFrom("./examples/cifar10/cifar10_quick_iter_5000.caffemodel");

    // Forward pass. The ImageData layer reads the images itself, so no input
    // blobs are set. (The run below used the older ForwardPrefilled(&loss),
    // which causes the DEPRECATED warning in the log.)
    float loss = 0.0;
    const vector<Blob<float>*>& results = net.Forward(&loss);
    LOG(INFO) << "Result size: " << results.size();
    // Number of network input blobs (0 here, since the data comes from a data layer)
    LOG(INFO) << "Blob size: " << net.input_blobs().size();
    LOG(INFO) << "-------------";
    LOG(INFO) << " prediction : ";

    // Class probabilities, shape N x 10
    const boost::shared_ptr<Blob<float> >& probLayer = net.blob_by_name("prob");
    const float* probs_out = probLayer->cpu_data();
    // ArgMax output, shape N x 1 x top_k, most probable class first
    const boost::shared_ptr<Blob<float> >& argmaxLayer = net.blob_by_name("argmax");
    const float* argmaxs = argmaxLayer->cpu_data();

    // Display results
    LOG(INFO) << "---------------------------------------------------------------";
    const int num_classes = probLayer->count(1);  // values per image in prob
    const int top_k = argmaxLayer->count(1);      // values per image in argmax
    for (int i = 0; i < argmaxLayer->num(); i++)
    {
        const int cls = static_cast<int>(argmaxs[i * top_k]);
        // prob is 2-D, so height() would be 1 here; index by image and predicted class
        LOG(INFO) << "Pattern:" << i << " class:" << cls
                  << " Prob=" << probs_out[i * num_classes + cls];
    }
    LOG(INFO) << "-------------";
    return 0;
}
```
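The `prob` blob is a row-major N × 10 array. The probability that image `i` belongs to class `c` is therefore element `i * 10 + c`, and the per-row top-k is what the ArgMax layer with `top_k: 5` reports. The following is a small standalone sketch of that indexing on a plain array. The function `top_k_classes` is hypothetical, and the order among tied probabilities is unspecified.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <utility>
#include <vector>

// Hypothetical helper: for one image of a row-major N x C probability array
// (like Caffe's "prob" blob), return its k most probable classes with their
// probabilities, highest first.
std::vector<std::pair<int, float>> top_k_classes(const float* probs, int num_classes,
                                                 int image, int k) {
    const float* row = probs + static_cast<std::size_t>(image) * num_classes;
    std::vector<int> idx(num_classes);
    std::iota(idx.begin(), idx.end(), 0);  // class indices 0..C-1
    k = std::max(0, std::min(k, num_classes));
    std::partial_sort(idx.begin(), idx.begin() + k, idx.end(),
                      [row](int a, int b) { return row[a] > row[b]; });
    std::vector<std::pair<int, float>> result;
    for (int j = 0; j < k; ++j) result.emplace_back(idx[j], row[idx[j]]);
    return result;
}
```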

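2. Results:

Note that the `Prob` values in this log were printed with the original indexing `probs_out[i*probLayer->height()+0]`. Because `prob` is a 2-D blob (7 × 10), `height()` returns 1, so each line shows `probs_out[i]` (image 0's probability for class i) rather than the probability of that image's predicted class. For example, `Prob=0.991599` on Pattern 5 is actually image 0's probability for its predicted class 5.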

```
anmeng@cam:/opt/caffe-master$ ./build/tools/prediction_example
WARNING: Logging before InitGoogleLogging() is written to STDERR
I0326 00:32:58.843086 3090 prediction_example.cpp:19] ./build/tools/prediction_example [GPU] [Device ID]
I0326 00:32:58.843185 3090 prediction_example.cpp:35] Using CPU
I0326 00:32:58.990399 3090 upgrade_proto.cpp:52] Attempting to upgrade input file specified using deprecated V1LayerParameter: ./examples/prediction_example/prediction_example.prototxt
I0326 00:32:58.990525 3090 upgrade_proto.cpp:60] Successfully upgraded file specified using deprecated V1LayerParameter
I0326 00:32:58.990702 3090 net.cpp:49] Initializing net from parameters:
name: "CIFAR10_quick"
state {
  phase: TEST
}
layer {
  name: "data"
  type: "ImageData"
  top: "data"
  top: "label"
  transform_param {
    mean_file: "./examples/cifar10/mean.binaryproto"
  }
  image_data_param {
    source: "./examples/prediction_example/file_list.txt"
    batch_size: 7
    new_height: 32
    new_width: 32
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.0001
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "pool1"
  top: "pool1"
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "pool1"
  top: "conv2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 32
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "relu2"
  type: "ReLU"
  bottom: "conv2"
  top: "conv2"
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "conv3"
  type: "Convolution"
  bottom: "pool2"
  top: "conv3"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  convolution_param {
    num_output: 64
    pad: 2
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "relu3"
  type: "ReLU"
  bottom: "conv3"
  top: "conv3"
}
layer {
  name: "pool3"
  type: "Pooling"
  bottom: "conv3"
  top: "pool3"
  pooling_param {
    pool: AVE
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "ip1"
  type: "InnerProduct"
  bottom: "pool3"
  top: "ip1"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  inner_product_param {
    num_output: 64
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "ip2"
  type: "InnerProduct"
  bottom: "ip1"
  top: "ip2"
  param {
    lr_mult: 1
  }
  param {
    lr_mult: 2
  }
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "gaussian"
      std: 0.1
    }
    bias_filler {
      type: "constant"
    }
  }
}
layer {
  name: "accuracy"
  type: "Accuracy"
  bottom: "ip2"
  bottom: "label"
  top: "accuracy"
  include {
    phase: TEST
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip2"
  bottom: "label"
  top: "loss"
}
layer {
  name: "prob"
  type: "Softmax"
  bottom: "ip2"
  top: "prob"
  include {
    phase: TEST
  }
}
layer {
  name: "argmax"
  type: "ArgMax"
  bottom: "prob"
  top: "argmax"
  include {
    phase: TEST
  }
  argmax_param {
    top_k: 5
  }
}
I0326 00:32:58.991350 3090 layer_factory.hpp:77] Creating layer data
I0326 00:32:58.991396 3090 net.cpp:91] Creating Layer data
I0326 00:32:58.991411 3090 net.cpp:399] data -> data
I0326 00:32:58.991430 3090 net.cpp:399] data -> label
I0326 00:32:58.991448 3090 data_transformer.cpp:25] Loading mean file from: ./examples/cifar10/mean.binaryproto
I0326 00:32:58.991549 3090 image_data_layer.cpp:38] Opening file ./examples/prediction_example/file_list.txt
I0326 00:32:58.991603 3090 image_data_layer.cpp:53] A total of 7 images.
I0326 00:32:58.995582 3090 image_data_layer.cpp:80] output data size: 7,3,32,32
I0326 00:32:58.996433 3090 net.cpp:141] Setting up data
I0326 00:32:58.996472 3090 net.cpp:148] Top shape: 7 3 32 32 (21504)
I0326 00:32:58.996482 3090 net.cpp:148] Top shape: 7 (7)
I0326 00:32:58.996489 3090 net.cpp:156] Memory required for data: 86044
I0326 00:32:58.996500 3090 layer_factory.hpp:77] Creating layer label_data_1_split
I0326 00:32:58.996515 3090 net.cpp:91] Creating Layer label_data_1_split
I0326 00:32:58.996523 3090 net.cpp:425] label_data_1_split <- label
I0326 00:32:58.996536 3090 net.cpp:399] label_data_1_split -> label_data_1_split_0
I0326 00:32:58.996549 3090 net.cpp:399] label_data_1_split -> label_data_1_split_1
I0326 00:32:58.996562 3090 net.cpp:141] Setting up label_data_1_split
I0326 00:32:58.996569 3090 net.cpp:148] Top shape: 7 (7)
I0326 00:32:58.996577 3090 net.cpp:148] Top shape: 7 (7)
I0326 00:32:58.996582 3090 net.cpp:156] Memory required for data: 86100
I0326 00:32:58.996587 3090 layer_factory.hpp:77] Creating layer conv1
I0326 00:32:58.996606 3090 net.cpp:91] Creating Layer conv1
I0326 00:32:58.996613 3090 net.cpp:425] conv1 <- data
I0326 00:32:58.996620 3090 net.cpp:399] conv1 -> conv1
I0326 00:32:59.145514 3090 net.cpp:141] Setting up conv1
I0326 00:32:59.145567 3090 net.cpp:148] Top shape: 7 32 32 32 (229376)
I0326 00:32:59.145577 3090 net.cpp:156] Memory required for data: 1003604
I0326 00:32:59.145602 3090 layer_factory.hpp:77] Creating layer pool1
I0326 00:32:59.145617 3090 net.cpp:91] Creating Layer pool1
I0326 00:32:59.145628 3090 net.cpp:425] pool1 <- conv1
I0326 00:32:59.145637 3090 net.cpp:399] pool1 -> pool1
I0326 00:32:59.145658 3090 net.cpp:141] Setting up pool1
I0326 00:32:59.145668 3090 net.cpp:148] Top shape: 7 32 16 16 (57344)
I0326 00:32:59.145673 3090 net.cpp:156] Memory required for data: 1232980
I0326 00:32:59.145679 3090 layer_factory.hpp:77] Creating layer relu1
I0326 00:32:59.145689 3090 net.cpp:91] Creating Layer relu1
I0326 00:32:59.145694 3090 net.cpp:425] relu1 <- pool1
I0326 00:32:59.145701 3090 net.cpp:386] relu1 -> pool1 (in-place)
I0326 00:32:59.145912 3090 net.cpp:141] Setting up relu1
I0326 00:32:59.145936 3090 net.cpp:148] Top shape: 7 32 16 16 (57344)
I0326 00:32:59.145943 3090 net.cpp:156] Memory required for data: 1462356
I0326 00:32:59.145951 3090 layer_factory.hpp:77] Creating layer conv2
I0326 00:32:59.145963 3090 net.cpp:91] Creating Layer conv2
I0326 00:32:59.145973 3090 net.cpp:425] conv2 <- pool1
I0326 00:32:59.145982 3090 net.cpp:399] conv2 -> conv2
I0326 00:32:59.146879 3090 net.cpp:141] Setting up conv2
I0326 00:32:59.146905 3090 net.cpp:148] Top shape: 7 32 16 16 (57344)
I0326 00:32:59.146913 3090 net.cpp:156] Memory required for data: 1691732
I0326 00:32:59.146924 3090 layer_factory.hpp:77] Creating layer relu2
I0326 00:32:59.146934 3090 net.cpp:91] Creating Layer relu2
I0326 00:32:59.146939 3090 net.cpp:425] relu2 <- conv2
I0326 00:32:59.146949 3090 net.cpp:386] relu2 -> conv2 (in-place)
I0326 00:32:59.147078 3090 net.cpp:141] Setting up relu2
I0326 00:32:59.147100 3090 net.cpp:148] Top shape: 7 32 16 16 (57344)
I0326 00:32:59.147106 3090 net.cpp:156] Memory required for data: 1921108
I0326 00:32:59.147114 3090 layer_factory.hpp:77] Creating layer pool2
I0326 00:32:59.147121 3090 net.cpp:91] Creating Layer pool2
I0326 00:32:59.147127 3090 net.cpp:425] pool2 <- conv2
I0326 00:32:59.147135 3090 net.cpp:399] pool2 -> pool2
I0326 00:32:59.147347 3090 net.cpp:141] Setting up pool2
I0326 00:32:59.147372 3090 net.cpp:148] Top shape: 7 32 8 8 (14336)
I0326 00:32:59.147379 3090 net.cpp:156] Memory required for data: 1978452
I0326 00:32:59.147387 3090 layer_factory.hpp:77] Creating layer conv3
I0326 00:32:59.147398 3090 net.cpp:91] Creating Layer conv3
I0326 00:32:59.147408 3090 net.cpp:425] conv3 <- pool2
I0326 00:32:59.147416 3090 net.cpp:399] conv3 -> conv3
I0326 00:32:59.148576 3090 net.cpp:141] Setting up conv3
I0326 00:32:59.148602 3090 net.cpp:148] Top shape: 7 64 8 8 (28672)
I0326 00:32:59.148608 3090 net.cpp:156] Memory required for data: 2093140
I0326 00:32:59.148620 3090 layer_factory.hpp:77] Creating layer relu3
I0326 00:32:59.148628 3090 net.cpp:91] Creating Layer relu3
I0326 00:32:59.148635 3090 net.cpp:425] relu3 <- conv3
I0326 00:32:59.148643 3090 net.cpp:386] relu3 -> conv3 (in-place)
I0326 00:32:59.148766 3090 net.cpp:141] Setting up relu3
I0326 00:32:59.148788 3090 net.cpp:148] Top shape: 7 64 8 8 (28672)
I0326 00:32:59.148795 3090 net.cpp:156] Memory required for data: 2207828
I0326 00:32:59.148802 3090 layer_factory.hpp:77] Creating layer pool3
I0326 00:32:59.148810 3090 net.cpp:91] Creating Layer pool3
I0326 00:32:59.148816 3090 net.cpp:425] pool3 <- conv3
I0326 00:32:59.148824 3090 net.cpp:399] pool3 -> pool3
I0326 00:32:59.149027 3090 net.cpp:141] Setting up pool3
I0326 00:32:59.149052 3090 net.cpp:148] Top shape: 7 64 4 4 (7168)
I0326 00:32:59.149058 3090 net.cpp:156] Memory required for data: 2236500
I0326 00:32:59.149065 3090 layer_factory.hpp:77] Creating layer ip1
I0326 00:32:59.149077 3090 net.cpp:91] Creating Layer ip1
I0326 00:32:59.149086 3090 net.cpp:425] ip1 <- pool3
I0326 00:32:59.149094 3090 net.cpp:399] ip1 -> ip1
I0326 00:32:59.149904 3090 net.cpp:141] Setting up ip1
I0326 00:32:59.149914 3090 net.cpp:148] Top shape: 7 64 (448)
I0326 00:32:59.149919 3090 net.cpp:156] Memory required for data: 2238292
I0326 00:32:59.149927 3090 layer_factory.hpp:77] Creating layer ip2
I0326 00:32:59.149935 3090 net.cpp:91] Creating Layer ip2
I0326 00:32:59.149941 3090 net.cpp:425] ip2 <- ip1
I0326 00:32:59.149948 3090 net.cpp:399] ip2 -> ip2
I0326 00:32:59.149968 3090 net.cpp:141] Setting up ip2
I0326 00:32:59.149976 3090 net.cpp:148] Top shape: 7 10 (70)
I0326 00:32:59.149981 3090 net.cpp:156] Memory required for data: 2238572
I0326 00:32:59.149991 3090 layer_factory.hpp:77] Creating layer ip2_ip2_0_split
I0326 00:32:59.149998 3090 net.cpp:91] Creating Layer ip2_ip2_0_split
I0326 00:32:59.150004 3090 net.cpp:425] ip2_ip2_0_split <- ip2
I0326 00:32:59.150012 3090 net.cpp:399] ip2_ip2_0_split -> ip2_ip2_0_split_0
I0326 00:32:59.150019 3090 net.cpp:399] ip2_ip2_0_split -> ip2_ip2_0_split_1
I0326 00:32:59.150027 3090 net.cpp:399] ip2_ip2_0_split -> ip2_ip2_0_split_2
I0326 00:32:59.150035 3090 net.cpp:141] Setting up ip2_ip2_0_split
I0326 00:32:59.150043 3090 net.cpp:148] Top shape: 7 10 (70)
I0326 00:32:59.150048 3090 net.cpp:148] Top shape: 7 10 (70)
I0326 00:32:59.150055 3090 net.cpp:148] Top shape: 7 10 (70)
I0326 00:32:59.150060 3090 net.cpp:156] Memory required for data: 2239412
I0326 00:32:59.150066 3090 layer_factory.hpp:77] Creating layer accuracy
I0326 00:32:59.150076 3090 net.cpp:91] Creating Layer accuracy
I0326 00:32:59.150082 3090 net.cpp:425] accuracy <- ip2_ip2_0_split_0
I0326 00:32:59.150089 3090 net.cpp:425] accuracy <- label_data_1_split_0
I0326 00:32:59.150095 3090 net.cpp:399] accuracy -> accuracy
I0326 00:32:59.150106 3090 net.cpp:141] Setting up accuracy
I0326 00:32:59.150113 3090 net.cpp:148] Top shape: (1)
I0326 00:32:59.150118 3090 net.cpp:156] Memory required for data: 2239416
I0326 00:32:59.150125 3090 layer_factory.hpp:77] Creating layer loss
I0326 00:32:59.150133 3090 net.cpp:91] Creating Layer loss
I0326 00:32:59.150140 3090 net.cpp:425] loss <- ip2_ip2_0_split_1
I0326 00:32:59.150146 3090 net.cpp:425] loss <- label_data_1_split_1
I0326 00:32:59.150152 3090 net.cpp:399] loss -> loss
I0326 00:32:59.150166 3090 layer_factory.hpp:77] Creating layer loss
I0326 00:32:59.150297 3090 net.cpp:141] Setting up loss
I0326 00:32:59.150308 3090 net.cpp:148] Top shape: (1)
I0326 00:32:59.150315 3090 net.cpp:151]     with loss weight 1
I0326 00:32:59.150331 3090 net.cpp:156] Memory required for data: 2239420
I0326 00:32:59.150337 3090 layer_factory.hpp:77] Creating layer prob
I0326 00:32:59.150346 3090 net.cpp:91] Creating Layer prob
I0326 00:32:59.150352 3090 net.cpp:425] prob <- ip2_ip2_0_split_2
I0326 00:32:59.150360 3090 net.cpp:399] prob -> prob
I0326 00:32:59.150557 3090 net.cpp:141] Setting up prob
I0326 00:32:59.150569 3090 net.cpp:148] Top shape: 7 10 (70)
I0326 00:32:59.150575 3090 net.cpp:156] Memory required for data: 2239700
I0326 00:32:59.150581 3090 layer_factory.hpp:77] Creating layer argmax
I0326 00:32:59.150593 3090 net.cpp:91] Creating Layer argmax
I0326 00:32:59.150599 3090 net.cpp:425] argmax <- prob
I0326 00:32:59.150605 3090 net.cpp:399] argmax -> argmax
I0326 00:32:59.150619 3090 net.cpp:141] Setting up argmax
I0326 00:32:59.150626 3090 net.cpp:148] Top shape: 7 1 5 (35)
I0326 00:32:59.150631 3090 net.cpp:156] Memory required for data: 2239840
I0326 00:32:59.150637 3090 net.cpp:219] argmax does not need backward computation.
I0326 00:32:59.150643 3090 net.cpp:219] prob does not need backward computation.
I0326 00:32:59.150650 3090 net.cpp:217] loss needs backward computation.
I0326 00:32:59.150655 3090 net.cpp:219] accuracy does not need backward computation.
I0326 00:32:59.150661 3090 net.cpp:217] ip2_ip2_0_split needs backward computation.
I0326 00:32:59.150666 3090 net.cpp:217] ip2 needs backward computation.
I0326 00:32:59.150672 3090 net.cpp:217] ip1 needs backward computation.
I0326 00:32:59.150678 3090 net.cpp:217] pool3 needs backward computation.
I0326 00:32:59.150683 3090 net.cpp:217] relu3 needs backward computation.
I0326 00:32:59.150689 3090 net.cpp:217] conv3 needs backward computation.
I0326 00:32:59.150696 3090 net.cpp:217] pool2 needs backward computation.
I0326 00:32:59.150701 3090 net.cpp:217] relu2 needs backward computation.
I0326 00:32:59.150707 3090 net.cpp:217] conv2 needs backward computation.
I0326 00:32:59.150712 3090 net.cpp:217] relu1 needs backward computation.
I0326 00:32:59.150717 3090 net.cpp:217] pool1 needs backward computation.
I0326 00:32:59.150723 3090 net.cpp:217] conv1 needs backward computation.
I0326 00:32:59.150729 3090 net.cpp:219] label_data_1_split does not need backward computation.
I0326 00:32:59.150735 3090 net.cpp:219] data does not need backward computation.
I0326 00:32:59.150741 3090 net.cpp:261] This network produces output accuracy
I0326 00:32:59.150746 3090 net.cpp:261] This network produces output argmax
I0326 00:32:59.150753 3090 net.cpp:261] This network produces output loss
I0326 00:32:59.150768 3090 net.cpp:274] Network initialization done.
I0326 00:32:59.151636 3090 upgrade_proto.cpp:52] Attempting to upgrade input file specified using deprecated V1LayerParameter: ./examples/cifar10/cifar10_quick_iter_5000.caffemodel
I0326 00:32:59.152022 3090 upgrade_proto.cpp:60] Successfully upgraded file specified using deprecated V1LayerParameter
I0326 00:32:59.152045 3090 net.cpp:753] Ignoring source layer cifar
W0326 00:32:59.152138 3090 net.hpp:41] DEPRECATED: ForwardPrefilled() will be removed in a future version. Use Forward().
I0326 00:32:59.190719 3090 prediction_example.cpp:52] Result size: 3
I0326 00:32:59.190753 3090 prediction_example.cpp:55] Blob size: 0
I0326 00:32:59.190762 3090 prediction_example.cpp:58] -------------
I0326 00:32:59.190768 3090 prediction_example.cpp:59]  prediction :
I0326 00:32:59.190779 3090 prediction_example.cpp:69] ---------------------------------------------------------------
I0326 00:32:59.190786 3090 prediction_example.cpp:73] Pattern:0 class:5 Prob=2.69745e-06
I0326 00:32:59.190804 3090 prediction_example.cpp:73] Pattern:1 class:3 Prob=4.0121e-05
I0326 00:32:59.190811 3090 prediction_example.cpp:73] Pattern:2 class:9 Prob=0.00531695
I0326 00:32:59.190819 3090 prediction_example.cpp:73] Pattern:3 class:6 Prob=0.00282228
I0326 00:32:59.190825 3090 prediction_example.cpp:73] Pattern:4 class:0 Prob=1.68176e-05
I0326 00:32:59.190832 3090 prediction_example.cpp:73] Pattern:5 class:4 Prob=0.991599
I0326 00:32:59.190840 3090 prediction_example.cpp:73] Pattern:6 class:2 Prob=9.24821e-05
I0326 00:32:59.190847 3090 prediction_example.cpp:75] -------------
```
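The `Top shape` lines can be checked by hand. Caffe's Convolution layer computes its output size as `(in + 2*pad - kernel) / stride + 1` with integer (floor) division. The Pooling layer rounds the same quotient up (ceil), which is why pool1 turns 32 into 16; floor would give 15. The sketch below reproduces the spatial sizes 32 → 16 → 8 → 4 in the log and the 64 × 4 × 4 = 1024 inputs to ip1. The function names are hypothetical, and the extra clipping Caffe applies to padded pooling is omitted because every pooling layer here has `pad: 0`.

```cpp
#include <cmath>

// Output size of a Caffe Convolution layer (integer division, i.e. floor).
int conv_out(int in, int kernel, int pad, int stride) {
    return (in + 2 * pad - kernel) / stride + 1;
}

// Output size of a Caffe Pooling layer, which rounds up (ceil) instead.
int pool_out(int in, int kernel, int pad, int stride) {
    return static_cast<int>(std::ceil(static_cast<float>(in + 2 * pad - kernel) / stride)) + 1;
}
```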

Illustrations of each class:
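The `class` numbers in the output are label indices. The lookup table below turns them into names. It assumes the model was trained on the standard CIFAR-10 data used by Caffe's cifar10 example, whose labels follow the order listed in the dataset's `batches.meta.txt`. The names `kCifar10Labels` and `cifar10_label` are hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <string>

// CIFAR-10 class names in label order 0-9 (as in the dataset's batches.meta.txt).
const std::array<std::string, 10> kCifar10Labels = {
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck"};

// Map a predicted class index to its name; out-of-range indices give "unknown".
std::string cifar10_label(int cls) {
    if (cls < 0 || cls >= static_cast<int>(kCifar10Labels.size())) return "unknown";
    return kCifar10Labels[static_cast<std::size_t>(cls)];
}
```

With this table, for example, `class:4` reads as "deer" and `class:9` as "truck".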

3. Afterword

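The run above used the CPU, and I still had to wait a few seconds. Then I tried the GPU (pass `GPU` as the first argument, optionally followed by a device ID), and it was really fast: the result appeared the moment I pressed Enter.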
