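I watched Beifeng's (北风) free videos. The free series contains only one case study, and I can't afford the video courses that cost hundreds or thousands of yuan.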

First, set up a simple web project based on Struts 2. It uses Bootstrap for styling and jQuery UI for the autocomplete box.

The interface:

web.xml

```xml
<filter>
    <filter-name>struts2</filter-name>
    <filter-class>org.apache.struts2.dispatcher.ng.filter.StrutsPrepareAndExecuteFilter</filter-class>
</filter>

<filter-mapping>
    <filter-name>struts2</filter-name>
    <url-pattern>/*</url-pattern>
</filter-mapping>
```

struts.xml

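```xml
<struts>

    <!-- Development mode: verbose errors and config reloading; turn off in production -->
    <constant name="struts.devMode" value="true" />
    <!-- Map both "*.action" URLs and extension-less URLs to actions -->
    <constant name="struts.action.extension" value="action,," />

    <!-- json-default (from the struts2-json-plugin) provides the "json" result type -->
    <package name="ajax" extends="json-default">

        <!-- The json result serializes the action's properties (query, result) to JSON -->
        <action name="sug" class="org.admln.suggestion.sug">
            <result type="json"></result>
        </action>
    </package>

</struts>
```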

index.jsp

```jsp
<%@ page language="java" contentType="text/html; charset=utf-8"
    pageEncoding="utf-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=utf-8">
<title>search demo</title>
<link rel="stylesheet" href="http://code.jquery.com/ui/1.9.2/themes/base/jquery-ui.css"/>
<script type="text/javascript" src="http://code.jquery.com/jquery-1.8.3.js"></script>
<script type="text/javascript" src="http://code.jquery.com/ui/1.9.2/jquery-ui.js"></script>
<link rel="stylesheet" href="./bootstrap/css/bootstrap.min.css"/>

<script type="text/javascript">
$(document).ready(function() {
    $("#query").autocomplete({
        // Ask the Struts "sug" action for suggestions; its JSON contains a "result" array
        source: function(request, response) {
            $.ajax({
                url: "ajax/sug.action",
                dataType: "json",
                data: {
                    query: request.term   // the text currently typed in the box
                },
                success: function(data) {
                    // Turn each suggestion string into an autocomplete item
                    response($.map(data.result || [], function(item) {
                        return {value: item};
                    }));
                }
            });
        },
        minLength: 1
    });
});
</script>
</head>
<body>
<div class="container">
    <h1>Search Demo</h1>
    <div class="well">
        <!-- Submitting requests index.jsp?query=..., which ends up in Tomcat's access log -->
        <form action="index.jsp">
            <label>Search</label><br/>
            <input id="query" name="query"/>
            <input type="submit" value="Search"/>
        </form>
    </div>
</div>
</body>
</html>
```

sug.java

```java
package org.admln.suggestion;

import java.util.Collections;
import java.util.Set;

import redis.clients.jedis.Jedis;

import com.opensymphony.xwork2.ActionSupport;

// The class name "sug" must match the class attribute in struts.xml
public class sug extends ActionSupport {
    private String query;        // filled from the "query" request parameter
    private Set<String> result;  // serialized to JSON by the json result

    @Override
    public String execute() throws Exception {
        if (query == null || query.isEmpty()) {
            result = Collections.emptySet();
            return SUCCESS;
        }
        Jedis jedis = new Jedis("192.168.126.133");
        try {
            // The sorted set named after the typed prefix holds full queries scored by
            // search count; zrevrange returns indices 0..5 inclusive, highest score first
            result = jedis.zrevrange(query, 0, 5);
        } finally {
            jedis.disconnect(); // don't leak one connection per request
        }
        return SUCCESS;
    }

    public String getQuery() {
        return query;
    }

    public void setQuery(String query) {
        this.query = query;
    }

    public Set<String> getResult() {
        return result;
    }

    public void setResult(Set<String> result) {
        this.result = result;
    }
}
```
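`zrevrange(query, 0, 5)` relies on two properties of Redis's ZREVRANGE: members come back highest score first, and the stop index is inclusive, so the action returns up to six suggestions. Below is a minimal in-memory sketch of that ordering, assuming a sorted set is modeled as a `Map` from member to score; `ZRevRangeSketch` is a hypothetical helper, not part of Jedis. Redis orders members with equal scores in reverse lexicographic (byte) order; the sketch uses `String.compareTo`, which agrees with that for ASCII text.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class ZRevRangeSketch {
    // Hypothetical stand-in for ZREVRANGE key start stop on a set modeled as member -> score
    static List<String> zrevrange(Map<String, Double> zset, int start, int stop) {
        List<String> members = new ArrayList<>(zset.keySet());
        members.sort((a, b) -> {
            int byScore = Double.compare(zset.get(b), zset.get(a)); // higher score first
            return byScore != 0 ? byScore : b.compareTo(a);         // ties: reverse lexicographic
        });
        int n = members.size();
        if (start < 0) start += n;    // negative indices count from the end, as in Redis
        if (stop < 0) stop += n;
        start = Math.max(start, 0);
        stop = Math.min(stop, n - 1); // stop is inclusive; clamp it to the last element
        if (start > stop) {
            return new ArrayList<>();
        }
        return new ArrayList<>(members.subList(start, stop + 1));
    }
}
```

With more than six queries under a prefix, only the six highest-scoring ones reach the browser.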

Download and install Tomcat on CentOS, then edit its configuration files.

Configure the format and directory of the access logs:

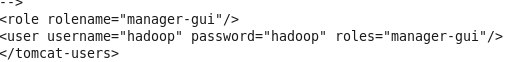
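The log format matters because the MapReduce job later pulls the search term out of each access-log line. A minimal sketch of that extraction, assuming Tomcat's `AccessLogValve` uses the `common` pattern (`%h %l %u %t "%r" %s %b`), so the request line is the first double-quoted field. Unlike the mapper in this post, it also URL-decodes the value (assuming UTF-8 form encoding), because non-ASCII searches are logged percent-encoded. `AccessLogSketch` and the `/search` context path in the test lines are hypothetical.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;

class AccessLogSketch {
    private static final String PAGE = "index.jsp?";
    private static final String PARAM = "query=";

    // Returns the decoded "query" parameter of a request to index.jsp, or null otherwise
    static String extractQuery(String logLine) {
        String[] quoted = logLine.split("\"");
        if (quoted.length < 2) {
            return null;                           // no quoted request line
        }
        String[] request = quoted[1].split(" ");   // [method, uri, protocol]
        if (request.length < 2) {
            return null;
        }
        String uri = request[1];
        int pos = uri.indexOf(PAGE);
        if (pos < 0) {
            return null;                           // not a search request
        }
        for (String param : uri.substring(pos + PAGE.length()).split("&")) {
            if (param.startsWith(PARAM) && param.length() > PARAM.length()) {
                try {
                    return URLDecoder.decode(param.substring(PARAM.length()), "UTF-8");
                } catch (UnsupportedEncodingException e) {
                    throw new IllegalStateException(e); // UTF-8 is always supported
                }
            }
        }
        return null;                               // no non-empty query parameter
    }
}
```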

Add a Tomcat manager user.

Then deploy the war package.

Install Redis.

...

Write the Hadoop code.

WordCount.java

```java
package org.admln.mr;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class WordCount extends Configured implements Tool {

    // Emits (query, 1) for every access-log line that requests index.jsp?query=...
    public static class Map extends Mapper<LongWritable, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();

            // The request line is the first double-quoted field of the log entry
            String[] a = line.split("\"");
            if (a.length < 2 || a[1].indexOf("index.jsp?query") < 0) {
                return; // not a search request
            }
            // "GET /xxx/index.jsp?query=hadoop HTTP/1.1" -> [GET, /xxx/index.jsp?, hadoop, HTTP/1.1]
            String[] b = a[1].split("query=| ");
            if (b.length > 2 && !b[2].isEmpty()) {
                context.write(new Text(b[2]), one);
            }
        }
    }

    // Sums the 1s emitted for each query
    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            context.write(key, new IntWritable(sum));
        }
    }

    public static void main(String[] args) throws Exception {
        int ret = ToolRunner.run(new WordCount(), args);
        System.exit(ret);
    }

    @Override
    public int run(String[] args) throws Exception {
        Configuration conf = getConf();
        // Deprecated in Hadoop 2.x; Job.getInstance(conf, "Load Redis") replaces it
        Job job = new Job(conf, "Load Redis");
        job.setJarByClass(WordCount.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(RedisOutputFormat.class); // results go to Redis

        FileInputFormat.setInputPaths(job, new Path(args[0]));  // the access logs
        // Still required, because RedisOutputFormat extends FileOutputFormat
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        return job.waitForCompletion(true) ? 0 : 1;
    }
}
```
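To see what the Map/Reduce pair computes, here is an in-memory sketch with no Hadoop involved, assuming the queries have already been extracted from the log. Each query becomes a `(query, 1)` pair, the pairs are grouped by key (Hadoop's shuffle, which also sorts the keys), and each group is summed as `Reduce.reduce` does. `WordCountSketch` is a hypothetical name for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class WordCountSketch {
    // Mimics map -> shuffle -> reduce for queries already extracted from the log
    static Map<String, Integer> countQueries(List<String> extractedQueries) {
        // Map + shuffle: emit (query, 1) and group the values by key; the TreeMap keeps
        // keys sorted, as Hadoop sorts keys before they reach the reducer
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String query : extractedQueries) {
            grouped.computeIfAbsent(query, k -> new ArrayList<>()).add(1);
        }
        // Reduce: sum the values of each group
        Map<String, Integer> totals = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            int sum = 0;
            for (int value : group.getValue()) {
                sum += value;
            }
            totals.put(group.getKey(), sum);
        }
        return totals;
    }
}
```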

RedisOutputFormat.java

```java
package org.admln.mr;

import java.io.IOException;

import org.apache.hadoop.mapreduce.RecordWriter;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import redis.clients.jedis.Jedis;

// Writes the reduce output (query, count) to Redis instead of to files on HDFS
public class RedisOutputFormat<K, V> extends FileOutputFormat<K, V> {

    protected static class RedisRecordWriter<K, V> extends RecordWriter<K, V> {
        private Jedis jedis;

        RedisRecordWriter(Jedis jedis) {
            this.jedis = jedis;
        }

        @Override
        public void close(TaskAttemptContext context) throws IOException, InterruptedException {
            jedis.disconnect();
        }

        @Override
        public void write(K key, V value) throws IOException, InterruptedException {
            if (key == null || value == null) {
                return;
            }
            String s = key.toString();
            int score = Integer.parseInt(value.toString()); // total count of this query
            // For every prefix of the query ("h", "ha", "had", ...), add the count to the
            // query's score in the sorted set named after that prefix
            for (int i = 0; i < s.length(); i++) {
                String k = s.substring(0, i + 1);
                jedis.zincrby(k, score, s);
            }
        }
    }

    @Override
    public RecordWriter<K, V> getRecordWriter(TaskAttemptContext context)
            throws IOException, InterruptedException {
        // 127.0.0.1 is the node running the reduce task; it must be the same Redis
        // instance that the web application queries
        return new RedisRecordWriter<K, V>(new Jedis("127.0.0.1"));
    }
}
```

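(The video's author used Hadoop 1.x, so some of the code is dated by today's standards, for example the `new Job(conf, name)` constructor, which Hadoop 2.x deprecates in favor of `Job.getInstance(conf, name)`. I followed along as-is.)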

Upload the data and the program, then run the Hadoop MR job.

Check the results.

Fat Jar (Eclipse plugin) online installation, update site: http://kurucz-grafika.de/fatjar/

Video: http://pan.baidu.com/s/1c0s4zGs

Tools: http://pan.baidu.com/s/1toy6q

Question: why must an output directory be specified? Without one, the job fails with an error.

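The WordCount program customizes its output. The main responsibility of an OutputFormat is to decide where the data is stored and how it is written.

A RecordWriter writes the job's <key, value> pairs to the output.

In Hadoop's built-in implementations, the RecordWriter writes the job's output to a FileSystem.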

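To send the results to Redis instead, we have RedisOutputFormat.java.

It extends the abstract class FileOutputFormat, implements the abstract method getRecordWriter, and defines the RecordWriter class that this method returns.

That RecordWriter implements the two required methods, write and close. write stores each (query, count) pair in Redis by adding the count to the query's score in the sorted set of every prefix of the query; close disconnects from Redis.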
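The effect of `write` is easiest to see without Hadoop or Redis. A minimal sketch, assuming each Redis sorted set is modeled as a `Map` from full query to score; `PrefixIndexSketch` and `expand` are hypothetical names. A reducer output of `(hadoop, 3)` adds 3 to `hadoop`'s score in the sets `h`, `ha`, `had`, ..., `hadoop`, so when the user has typed `ha`, the sug action's `zrevrange("ha", 0, 5)` ranks `hadoop` by how often it was searched.

```java
import java.util.HashMap;
import java.util.Map;

class PrefixIndexSketch {
    // In-memory stand-in for Redis: prefix -> sorted set modeled as (full query -> score).
    // Mirrors RedisRecordWriter.write: for each (query, count) pair from the reducer,
    // add count to the query's score under every prefix of the query (like ZINCRBY).
    static Map<String, Map<String, Double>> expand(Map<String, Integer> counts) {
        Map<String, Map<String, Double>> index = new HashMap<>();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            String query = entry.getKey();
            double score = entry.getValue().doubleValue();
            for (int i = 0; i < query.length(); i++) {
                String prefix = query.substring(0, i + 1);
                index.computeIfAbsent(prefix, k -> new HashMap<>())
                     .merge(query, score, Double::sum);
            }
        }
        return index;
    }
}
```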

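Hadoop's built-in OutputFormats, such as TextOutputFormat, write the data produced by reduce as lines of text into files in the output directory.

So with the custom OutputFormat, the results are no longer written to HDFS. Something else is still written, though: apparently a marker file that indicates the job succeeded.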

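For example, my output directory after a successful run:

The file in it is completely empty: a typical marker file (`_SUCCESS`, which `FileOutputCommitter` creates when the job commits successfully). I don't think it adds much, and it's rather a nuisance; I'd guess production setups customize this. (The marker can be switched off by setting `mapreduce.fileoutputcommitter.marksuccessfuljobs` to `false`.)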

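(This is presumably why an output directory has to be specified. The concrete check lives in FileOutputFormat, which RedisOutputFormat extends: its `checkOutputSpecs` method fails the job at submission with "Output directory not set." when no output path is configured, and it also rejects an output directory that already exists. Extending `OutputFormat` directly, with a no-op `checkOutputSpecs` and output committer, would remove the requirement.)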

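In practice, I think it should work like this: for a small site with modest daily traffic, this project doesn't need Hadoop at all; the Java code can simply record the user's query in Redis every time a search is made. But when daily traffic is heavy, or concentrated in particular periods, Hadoop should analyze the logs during "idle" hours (for example at midnight) and load the results into Redis in batches.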
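The trade-off can be made concrete by counting Redis writes. A rough sketch, assuming one ZINCRBY per prefix as in RedisRecordWriter and counting nothing else; the class and method names are hypothetical. Both approaches end with the same scores, because a query's score under each prefix is the sum of its searches either way; the batch approach simply issues fewer writes when popular queries repeat.

```java
import java.util.HashSet;
import java.util.List;

class WriteCountSketch {
    // Real-time approach: every search immediately runs one ZINCRBY (increment 1)
    // per prefix of the query
    static long realTimeWrites(List<String> searches) {
        long writes = 0;
        for (String query : searches) {
            writes += query.length();
        }
        return writes;
    }

    // Batch approach: the MapReduce job first sums identical queries, so each distinct
    // query costs one ZINCRBY (increment = its count) per prefix, however often it was searched
    static long batchWrites(List<String> searches) {
        long writes = 0;
        for (String query : new HashSet<>(searches)) {
            writes += query.length();
        }
        return writes;
    }
}
```

For the searches `hadoop, hadoop, hadoop, redis`, the real-time approach issues 23 writes and the batch approach 11.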