单线性回归

y=w*x+b

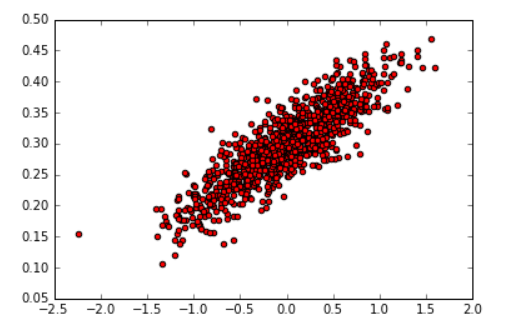

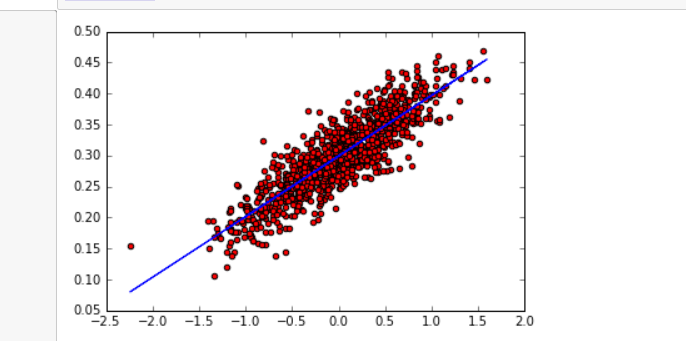

import numpy as np import tensorflow as tf import matplotlib.pyplot as plt # 随机生成1000个点,围绕在y=0.1x+0.3的直线周围 num_points = 1000 vectors_set = [] for i in range(num_points): x1 = np.random.normal(0.0, 0.55) y1 = x1 * 0.1 + 0.3 + np.random.normal(0.0, 0.03) vectors_set.append([x1, y1]) # 生成一些样本 x_data = [v[0] for v in vectors_set] y_data = [v[1] for v in vectors_set] plt.scatter(x_data,y_data,c='r') plt.show()

tf.square()函数是求平方;tf.reduce_mean()是求均值

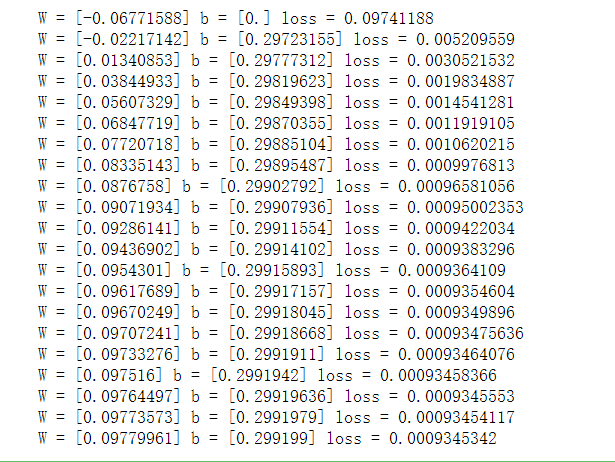

# 生成1维的W矩阵,取值是[-1,1]之间的随机数 with tf.name_scope('weight'): W = tf.Variable(tf.random_uniform([1], -1.0, 1.0), name='W') # 生成1维的b矩阵,初始值是0 with tf.name_scope('bias'): b = tf.Variable(tf.zeros([1]), name='b') # 经过计算得出预估值y with tf.name_scope('y'): y = W * x_data + b # 以预估值y和实际值y_data之间的均方误差作为损失 with tf.name_scope('loss'): loss = tf.reduce_mean(tf.square(y - y_data), name='loss') # 采用梯度下降法来优化参数,这里0.5是指定的学习率 optimizer = tf.train.GradientDescentOptimizer(0.5) # 训练的过程就是最小化这个误差值 train = optimizer.minimize(loss, name='train') sess = tf.Session() init = tf.global_variables_initializer() sess.run(init) # 初始化的W和b是多少 print ("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss)) # 执行20次训练 for step in range(20): sess.run(train) # 输出训练好的W和b print ("W =", sess.run(W), "b =", sess.run(b), "loss =", sess.run(loss)) writer = tf.summary.FileWriter("logs/", sess.graph)

plt.scatter(x_data,y_data,c='r') plt.plot(x_data,sess.run(W)*x_data+sess.run(b)) plt.show()

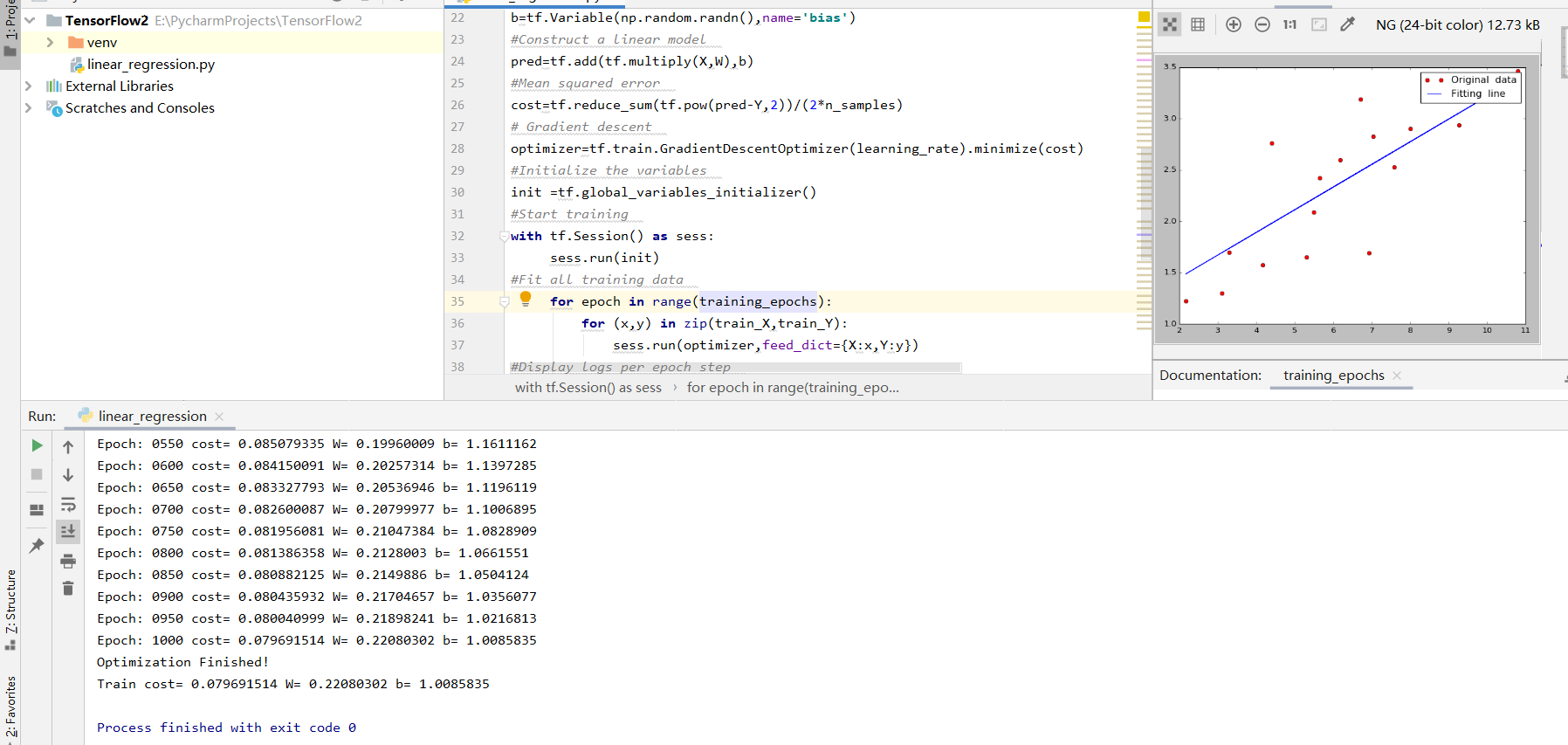

import tensorflow.compat.v1 as tf import numpy as np import matplotlib.pyplot as plt import os tf.disable_eager_execution() #保证sess.run()能够正常运行 os.environ["CUDA_VISIBLE_DEVICES"]="0" #Parameters learning_rate=0.01 training_epochs=1000 display_step=50 #training Data train_X=np.asarray([3.3,4.4,5.5,6.71,6.93,4.168,9.779,6.182,7.59,2.167, 7.042,10.791,5.313,7.997,5.654,9.27,3.1]) train_Y=np.asarray([1.7,2.76,2.09,3.19,1.694,1.573,3.366,2.596,2.53,1.221, 2.827,3.465,1.65,2.904,2.42,2.94,1.3]) n_samples=train_X.shape[0] #tf Graph Input X=tf.placeholder("float") Y=tf.placeholder("float") #Set model weights W=tf.Variable(np.random.randn(),name="weight") b=tf.Variable(np.random.randn(),name='bias') #Construct a linear model pred=tf.add(tf.multiply(X,W),b) #Mean squared error cost=tf.reduce_sum(tf.pow(pred-Y,2))/(2*n_samples) # Gradient descent optimizer=tf.train.GradientDescentOptimizer(learning_rate).minimize(cost) #Initialize the variables init =tf.global_variables_initializer() #Start training with tf.Session() as sess: sess.run(init) #Fit all training data for epoch in range(training_epochs): for (x,y) in zip(train_X,train_Y): sess.run(optimizer,feed_dict={X:x,Y:y}) #Display logs per epoch step if (epoch+1) % display_step==0: c=sess.run(cost,feed_dict={X:train_X,Y:train_Y}) print("Epoch:" ,'%04d' %(epoch+1),"cost=","{:.9f}".format(c),"W=",sess.run(W),"b=",sess.run(b)) print("Optimization Finished!") training_cost=sess.run(cost,feed_dict={X:train_X,Y:train_Y}) print("Train cost=",training_cost,"W=",sess.run(W),"b=",sess.run(b)) #Graphic display plt.plot(train_X,train_Y,'ro',label='Original data') plt.plot(train_X,sess.run(W)*train_X+sess.run(b),label="Fitting line") plt.legend() plt.show()