Connecting to Hive with a client tool:

https://blog.csdn.net/weixin_44508906/article/details/91348665

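Configure hive-site.xml

Add the following properties to hive-site.xml, inside its `<configuration>` element. Change the IP address to the address of your own machine: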

```xml
<property>
  <name>hive.server2.thrift.port</name>
  <value>10000</value>
</property>
<property>
  <name>hive.server2.thrift.bind.host</name>
  <value>192.168.58.128</value>
</property>
```
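Before restarting any services, you may want to check what a *-site.xml actually contains. The short Java sketch below does this by reading the `<property>` name/value pairs with the JDK's built-in DOM parser. `SiteXml` and `parse` are hypothetical names and are not part of Hadoop or Hive. To check a real file, pass in the file's contents (or call `DocumentBuilder.parse(File)`) instead of the string literal used here.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: reads the <property><name>/<value> pairs of a *-site.xml document into a Map.
class SiteXml {
    static Map<String, String> parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> props = new LinkedHashMap<>();
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element p = (Element) properties.item(i);
            String name = text(p, "name");
            if (name != null) {
                props.put(name, text(p, "value"));
            }
        }
        return props;
    }

    // Returns the trimmed text of the first child element with the given tag, or null if it is absent.
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        String xml = "<configuration>"
                + "<property><name>hive.server2.thrift.port</name><value>10000</value></property>"
                + "<property><name>hive.server2.thrift.bind.host</name><value>192.168.58.128</value></property>"
                + "</configuration>";
        Map<String, String> props = parse(xml);
        System.out.println(props.get("hive.server2.thrift.port"));      // prints 10000
        System.out.println(props.get("hive.server2.thrift.bind.host")); // prints 192.168.58.128
    }
}
```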

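Configure core-site.xml

Add the following properties to core-site.xml. The `hadoop` in each property name is the user that starts HiveServer2. If you run it as a different user, replace `hadoop` with that user name. The value `*` lets that user act on behalf of clients from any host and any group: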

```xml
<property>
  <name>hadoop.proxyuser.hadoop.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hadoop.groups</name>
  <value>*</value>
</property>
```
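The proxy-user property names embed the user that runs HiveServer2, which is `hadoop` in the example above. The hypothetical helper below only illustrates that naming pattern: it generates both entries for an arbitrary user. It is a sketch, not a Hadoop API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: builds the two proxy-user properties for the OS user that runs HiveServer2.
class ProxyUserConfig {
    static Map<String, String> forUser(String user) {
        Map<String, String> props = new LinkedHashMap<>();
        // "*" allows impersonation from any host and for any group: fine on a test VM, too open for production.
        props.put("hadoop.proxyuser." + user + ".hosts", "*");
        props.put("hadoop.proxyuser." + user + ".groups", "*");
        return props;
    }

    // Renders the properties in the same <property> layout used by core-site.xml.
    static String toXml(Map<String, String> props) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : props.entrySet()) {
            sb.append("<property>\n")
              .append("  <name>").append(e.getKey()).append("</name>\n")
              .append("  <value>").append(e.getValue()).append("</value>\n")
              .append("</property>\n");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(toXml(forUser("hadoop")));
    }
}
```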

Start Hadoop and HiveServer2

Start the Hadoop cluster:

```shell
start-all.sh
```

Start HiveServer2:

```shell
cd /usr/local/hive
./bin/hiveserver2
```
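HiveServer2 can take a while before it starts listening on port 10000, and beeline fails with a connection error until then. The minimal Java sketch below waits for the port by attempting plain TCP connections. `PortWait` is a hypothetical name. The 1-second connect timeout, the 2-second retry interval and the 2-minute limit are arbitrary values chosen for illustration.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether something accepts TCP connections on host:port.
class PortWait {
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // connection refused, host unreachable or timed out
        }
    }

    // Retries every 2 seconds until the port opens or maxWaitMillis has elapsed.
    static boolean waitFor(String host, int port, long maxWaitMillis) throws InterruptedException {
        long deadline = System.currentTimeMillis() + maxWaitMillis;
        while (System.currentTimeMillis() < deadline) {
            if (isOpen(host, port, 1000)) {
                return true;
            }
            Thread.sleep(2000);
        }
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        // Host and port from hive-site.xml above; the 2-minute limit is an assumption.
        boolean up = waitFor("192.168.58.128", 10000, 120_000);
        System.out.println(up ? "HiveServer2 is listening" : "port 10000 is still closed");
    }
}
```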

这里打开一个进程会停住,另开一个终端,

输入

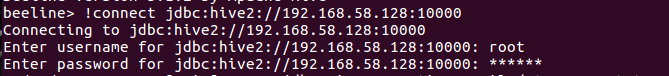

beeline

Then connect to Hive with:

```
!connect jdbc:hive2://192.168.58.128:10000
```

When prompted, enter the username and password configured in hive-site.xml.
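The URL passed to `!connect` has the form `jdbc:hive2://<host>:<port>/<database>`, and the JDBC code later in this post uses the same form. The small hypothetical helper below assembles the URL from the values in hive-site.xml. Falling back to Hive's `default` database when none is given is a choice made for this sketch.

```java
// Hypothetical helper: builds a HiveServer2 JDBC URL from the host/port configured in hive-site.xml.
class HiveUrl {
    static String build(String host, int port, String database) {
        // No database given: use Hive's built-in "default" database.
        String db = (database == null || database.isEmpty()) ? "default" : database;
        return "jdbc:hive2://" + host + ":" + port + "/" + db;
    }

    public static void main(String[] args) {
        System.out.println(build("192.168.58.128", 10000, null));     // prints jdbc:hive2://192.168.58.128:10000/default
        System.out.println(build("192.168.58.128", 10000, "myhive")); // prints jdbc:hive2://192.168.58.128:10000/myhive
    }
}
```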

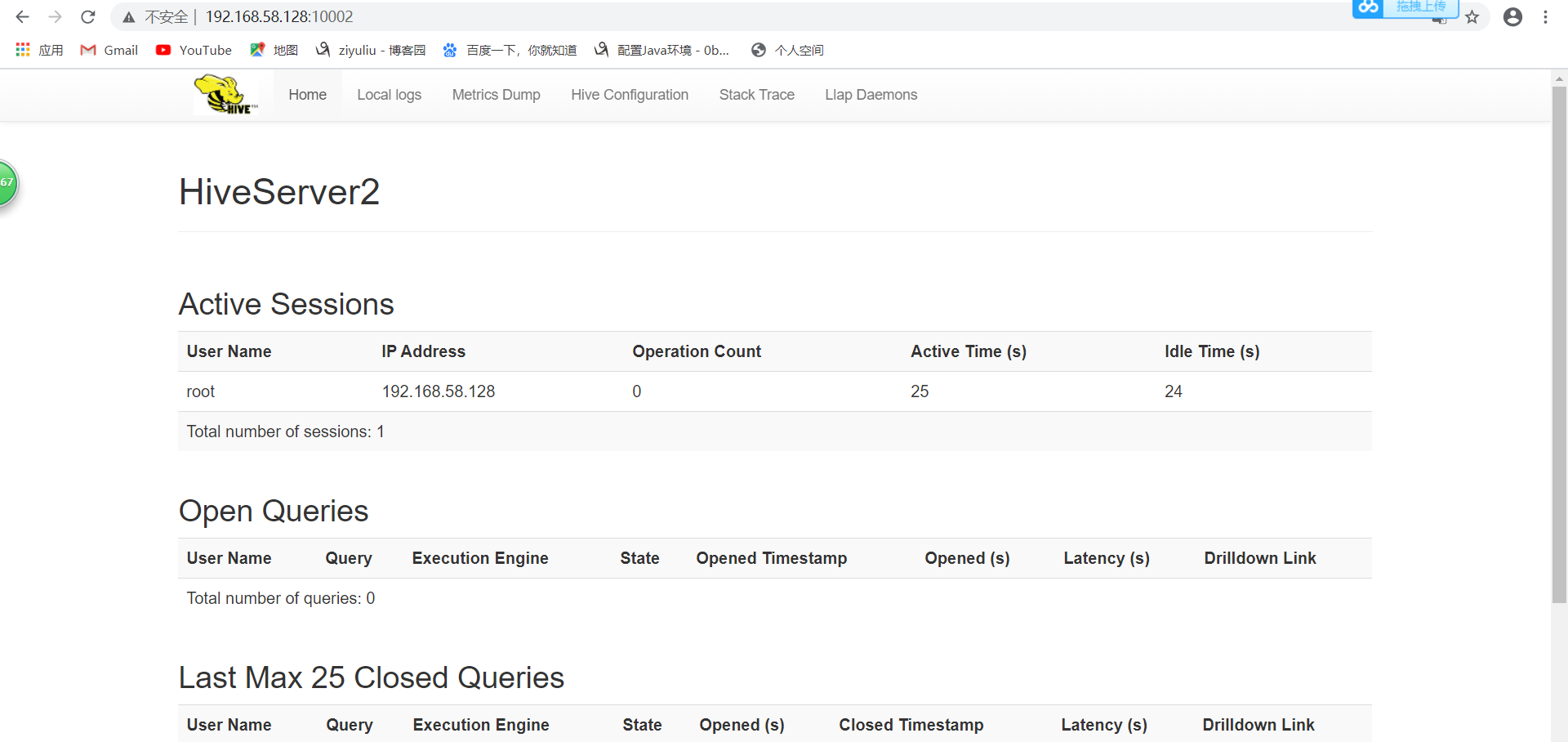

Then open http://192.168.58.128:10002 (the HiveServer2 web UI) in a browser to check the connection status.
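You can also script this check. The minimal sketch below uses the JDK 11+ `java.net.http.HttpClient` to fetch the page and report only the HTTP status code, where 200 means the web UI is reachable. `WebUiCheck` is a hypothetical name and the timeouts are arbitrary. The sketch does not parse the page contents.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper: sends a GET request and returns the HTTP status code, ignoring the body.
class WebUiCheck {
    static int status(String url) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
        HttpResponse<Void> response = client.send(request, HttpResponse.BodyHandlers.discarding());
        return response.statusCode();
    }

    public static void main(String[] args) throws Exception {
        // Web UI address from this post; replace the IP with your own host.
        System.out.println(status("http://192.168.58.128:10002/"));
    }
}
```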

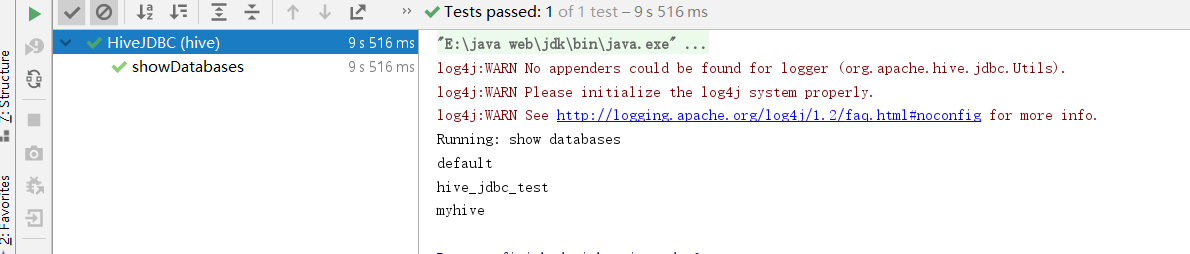

Connecting from code:

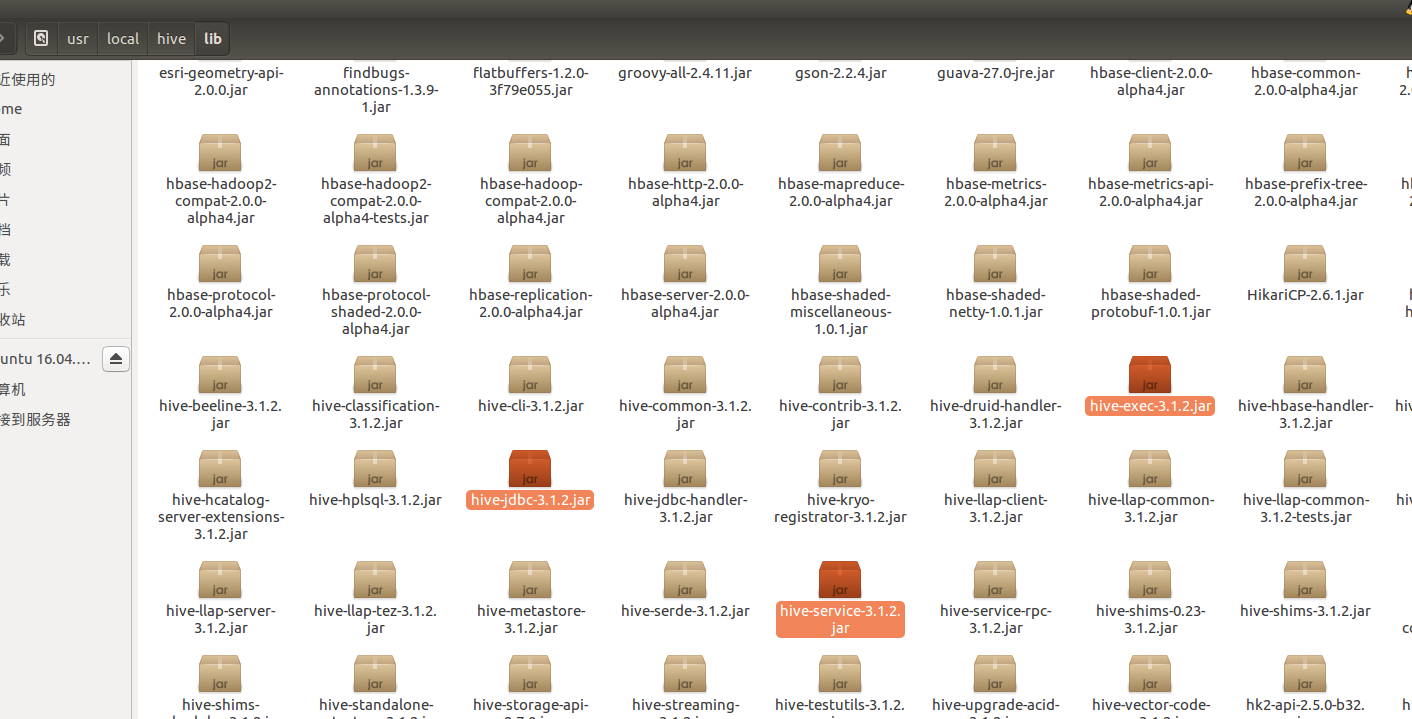

First, locate the following jar files on the virtual machine:

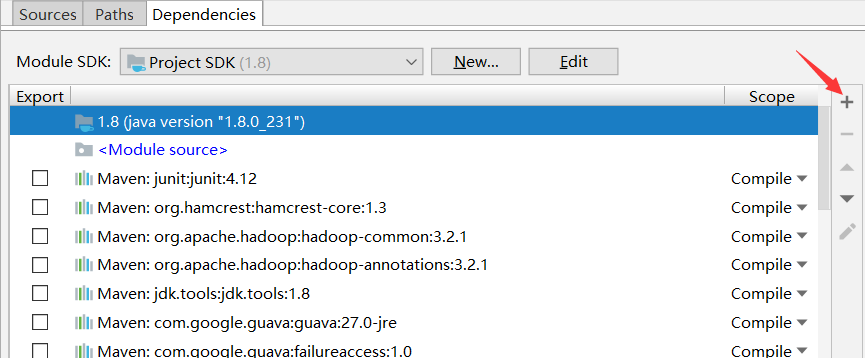

Add these three jar files to the project under File -> Project Structure -> Modules -> Dependencies (IntelliJ IDEA), then click OK.
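Before running the JDBC code, you can confirm that the Hive driver actually made it onto the classpath. The minimal sketch below tries to load the driver class `org.apache.hive.jdbc.HiveDriver` used in the JDBC example. `ClasspathCheck` is a hypothetical name.

```java
// Hypothetical helper: reports whether a class can be loaded from the current classpath.
class ClasspathCheck {
    static boolean isPresent(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // ClassNotFoundException: the jar is missing.
            // LinkageError: the class was found, but it or a class it needs could not be linked or initialized.
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = "org.apache.hive.jdbc.HiveDriver";
        System.out.println(driver + (isPresent(driver) ? " found" : " NOT found, check the module dependencies"));
    }
}
```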

Code:

```java
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.sql.*;

/**
 * JDBC operations on Hive (note: HiveServer2 must be running before Hive can be accessed over JDBC)
 */
public class HiveJDBC {

    private static String driverName = "org.apache.hive.jdbc.HiveDriver";
    private static String url = "jdbc:hive2://192.168.58.128:10000/myhive";
    private static String user = "root";
    private static String password = "123456";

    private static Connection conn = null;
    private static Statement stmt = null;
    private static ResultSet rs = null;

    // Load the driver and open the connection
    @Before
    public void init() throws Exception {
        Class.forName(driverName);
        conn = DriverManager.getConnection(url, user, password);
        stmt = conn.createStatement();
    }

    // Create a database
    @Test
    public void createDatabase() throws Exception {
        String sql = "create database hive_jdbc_test";
        System.out.println("Running: " + sql);
        stmt.execute(sql);
    }

    // List all databases
    @Test
    public void showDatabases() throws Exception {
        String sql = "show databases";
        System.out.println("Running: " + sql);
        rs = stmt.executeQuery(sql);
        while (rs.next()) {
            System.out.println(rs.getString(1));
        }
    }

    // Create a table
    @Test
    public void createTable() throws Exception {
        String sql = "create table emp( " +
                "empno int, " +
                "ename string, " +
                "job string, " +
                "mgr int, " +
                "hiredate string, " +
                "sal double, " +
                "comm double, " +
                "deptno int " +
                ") " +
                "row format delimited fields terminated by '\t'";
        System.out.println("Running: " + sql);
        stmt.execute(sql);
    }

    // List all tables
    @Test
    public void showTables() throws Exception {
        String sql = "show tables";
        System.out.println("Running: " + sql);
        rs = stmt.executeQuery(sql);
        while (rs.next()) {
            System.out.println(rs.getString(1));
        }
    }

    // Show the table schema (column name and type)
    @Test
    public void descTable() throws Exception {
        String sql = "desc emp";
        System.out.println("Running: " + sql);
        rs = stmt.executeQuery(sql);
        while (rs.next()) {
            System.out.println(rs.getString(1) + " " + rs.getString(2));
        }
    }

    // Load data; with "local", the path is on the machine running HiveServer2, not the IDE machine
    @Test
    public void loadData() throws Exception {
        String filePath = "/home/hadoop/data/emp.txt";
        String sql = "load data local inpath '" + filePath + "' overwrite into table emp";
        System.out.println("Running: " + sql);
        stmt.execute(sql);
    }

    // Query data
    @Test
    public void selectData() throws Exception {
        String sql = "select * from emp";
        System.out.println("Running: " + sql);
        rs = stmt.executeQuery(sql);
        System.out.println("Emp No" + " " + "Name" + " " + "Job");
        while (rs.next()) {
            System.out.println(rs.getString("empno") + " " + rs.getString("ename") + " " + rs.getString("job"));
        }
    }

    // Aggregate query (runs a MapReduce job)
    @Test
    public void countData() throws Exception {
        String sql = "select count(1) from emp";
        System.out.println("Running: " + sql);
        rs = stmt.executeQuery(sql);
        while (rs.next()) {
            System.out.println(rs.getInt(1));
        }
    }

    // Drop the database
    @Test
    public void dropDatabase() throws Exception {
        String sql = "drop database if exists hive_jdbc_test";
        System.out.println("Running: " + sql);
        stmt.execute(sql);
    }

    // Drop the table
    @Test
    public void dropTable() throws Exception {
        String sql = "drop table if exists emp";
        System.out.println("Running: " + sql);
        stmt.execute(sql);
    }

    // Release resources; reset the static fields so the next test never closes an already-closed object
    @After
    public void destroy() throws Exception {
        if (rs != null) {
            rs.close();
            rs = null;
        }
        if (stmt != null) {
            stmt.close();
            stmt = null;
        }
        if (conn != null) {
            conn.close();
            conn = null;
        }
    }
}
```
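`loadData()` expects `/home/hadoop/data/emp.txt` to hold one row per line with 8 tab-separated fields in the column order of `emp`. Hive does not check row contents when loading a text table. Missing fields, and values that cannot be parsed as the column type, simply read back as NULL. The hypothetical helper below sketches two things: a pre-check on the file's lines, and a formatter that writes `\N` (Hive's default NULL marker for text tables) for missing values. The sample row is illustrative only.

```java
import java.util.StringJoiner;

// Hypothetical helper for preparing emp.txt: one row per line, 8 tab-separated fields in table column order.
class EmpLine {
    static final String[] COLUMNS = {"empno", "ename", "job", "mgr", "hiredate", "sal", "comm", "deptno"};

    // Splits a line on tabs; limit -1 keeps trailing empty fields, so the field count is exact.
    static String[] parse(String line) {
        String[] fields = line.split("\t", -1);
        if (fields.length != COLUMNS.length) {
            throw new IllegalArgumentException(
                    "expected " + COLUMNS.length + " fields, got " + fields.length + ": " + line);
        }
        return fields;
    }

    // Builds a line in column order; null becomes \N, Hive's default NULL marker for text tables.
    static String format(Object... values) {
        if (values.length != COLUMNS.length) {
            throw new IllegalArgumentException("expected " + COLUMNS.length + " values, got " + values.length);
        }
        StringJoiner line = new StringJoiner("\t");
        for (Object v : values) {
            line.add(v == null ? "\\N" : String.valueOf(v));
        }
        return line.toString();
    }

    public static void main(String[] args) {
        // Illustrative sample row.
        String line = format(7369, "SMITH", "CLERK", 7902, "1980-12-17", 800.0, null, 20);
        String[] fields = parse(line);
        for (int i = 0; i < COLUMNS.length; i++) {
            System.out.println(COLUMNS[i] + " = " + fields[i]);
        }
    }
}
```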

Exit beeline:

```
!quit
```

Stop HiveServer2: press Ctrl+C in the terminal where it is running.