```python
import re
from urllib.parse import urljoin

import jieba.analyse
import matplotlib.pyplot as plt
import numpy as np
import requests
from bs4 import BeautifulSoup
from PIL import Image
from wordcloud import WordCloud, ImageColorGenerator


# Append one resource's tag text to the file (one entry per line,
# so tags from different resources don't run together)
def writeNewsDetail(tag):
    with open('dongman.txt', 'a', encoding='utf-8') as f:
        f.write(tag + '\n')


# Get the total number of listing pages
def getPage(PageUrl):
    res = requests.get(PageUrl)
    res.encoding = "utf-8"
    soup = BeautifulSoup(res.text, "html.parser")
    # The second-to-last pager link is taken as the last page number
    n = soup.select(".main .pages a")[-2].text
    print(n)
    return int(n)


# Build the URL of every listing page and crawl each one
def getgain(PageNumber):
    for i in range(1, PageNumber + 1):
        NeedUrl = 'http://www.kisssub.org/sort-1-{}.html'.format(i)
        print(NeedUrl)
        # Collect all resource links on this listing page
        getList(NeedUrl)


# Collect all resource links on a single listing page
def getList(Url):
    res = requests.get(Url)
    res.encoding = "utf-8"
    soup = BeautifulSoup(res.text, "html.parser")
    # Every resource link on the page
    page_url = soup.select('td[style="text-align:left;"] a')
    links = []
    # Print each resource link, then scrape that resource's detail page
    for i in page_url:
        href = i.get('href')
        if not href:
            continue
        # urljoin resolves relative links and avoids doubled slashes
        listurl = urljoin(Url, href)
        print(listurl)
        getinformation(listurl)
        links.append(listurl)
    return links


# Scrape the details of one resource page
def getinformation(url):
    res = requests.get(url)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    # Title: the last breadcrumb link
    title = soup.select(".location a")[-1].text
    print("Title:", title)
    info = soup.select(".basic_info p")
    # Publish time; removeprefix (Python 3.9+) drops the exact label,
    # whereas lstrip would strip any leading characters in the label's set
    time = info[3].text.strip().removeprefix('发布时间:').strip()[:20]
    print("Time:", time)
    # Publisher
    publish = info[0].text.strip().removeprefix('发布代号:').strip()[:20]
    print("Publisher:", publish)
    # Download count; guard against lines the regex doesn't match
    downloads = info[4].text.strip()
    a = re.match(".*?下载(.*),", downloads)
    download = a.group(1).strip() if a else ''
    print("Downloads:", download)
    # Tags, with spaces removed
    tag = soup.select(".box .intro")[1].text.replace(" ", "")
    print("Tags:", tag)
    writeNewsDetail(tag)


# Generate the word cloud
def getWord():
    # Open with an explicit encoding to avoid decode errors and read the
    # whole file at once (iterating and calling read() skipped the first line)
    with open('dongman.txt', 'r', encoding='utf-8') as f:
        lyric = f.read()
    # Extract the top 50 keywords and their weights with TextRank
    result = jieba.analyse.textrank(lyric, topK=50, withWeight=True)
    keywords = dict(result)
    print(keywords)
    # Template (mask) image that gives the word cloud its shape
    image = Image.open('tim.jpg')
    graph = np.array(image)
    # Word cloud settings; a Chinese font is needed to render Chinese words
    wc = WordCloud(font_path='./fonts/simhei.ttf', background_color='white',
                   max_words=50, mask=graph)
    wc.generate_from_frequencies(keywords)
    # Recolor the words with the colors of the template image
    image_color = ImageColorGenerator(graph)
    plt.imshow(wc.recolor(color_func=image_color))
    plt.axis("off")
    plt.show()
    wc.to_file('dream.png')


if __name__ == '__main__':
    PageUrl = 'http://www.kisssub.org/sort-1-1.html'
    PageNumber = getPage(PageUrl)
    getgain(PageNumber)
    getWord()
```

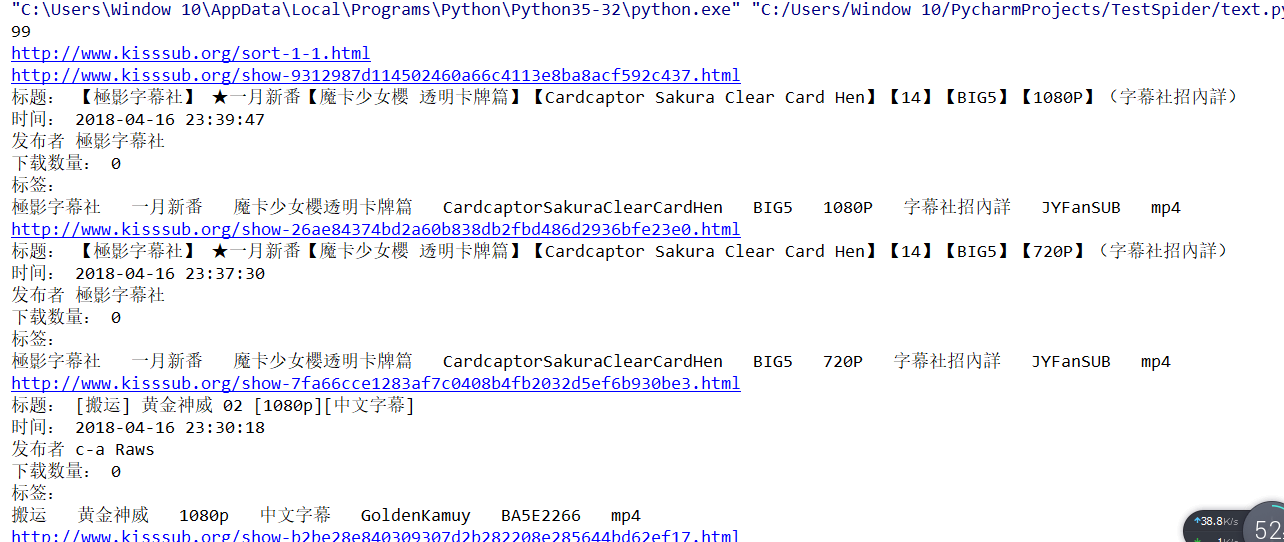

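First, I set up the main functions: one gets the total number of pages, one builds the links to every listing page, one displays a resource page's details, and one collects all resource links on a single page, four in all. I ran into a problem while writing the function that collects every link on a single page; it eventually turned out that the `soup` had not been set up correctly.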
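The first two steps, reading the page count and building one URL per listing page, can be sketched offline with the standard library. This is a minimal sketch, assuming the pager's link texts have already been extracted into a list and that the second-to-last link holds the highest page number (the same assumption behind the `[-2]` index in `getPage`). `page_count_from_pager` and `build_page_urls` are hypothetical helper names, and the sample link texts are made up.

```python
def page_count_from_pager(link_texts):
    """Return the total page count from the pager's link texts.

    Assumes the last link is a "next page" arrow, so the second-to-last
    link carries the highest page number (same idea as [-2] in getPage).
    """
    return int(link_texts[-2].strip())


def build_page_urls(page_count, template="http://www.kisssub.org/sort-1-{}.html"):
    """Build one listing-page URL per page, numbered from 1."""
    return [template.format(i) for i in range(1, page_count + 1)]


if __name__ == "__main__":
    # Hypothetical pager link texts, not captured from the real site.
    links = ["1", "2", "3", "...", "25", "Next"]
    count = page_count_from_pager(links)
    print(count)
    print(build_page_urls(count)[:2])
```

Keeping the count and the URL building separate means the pager parsing can be checked without any network access.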

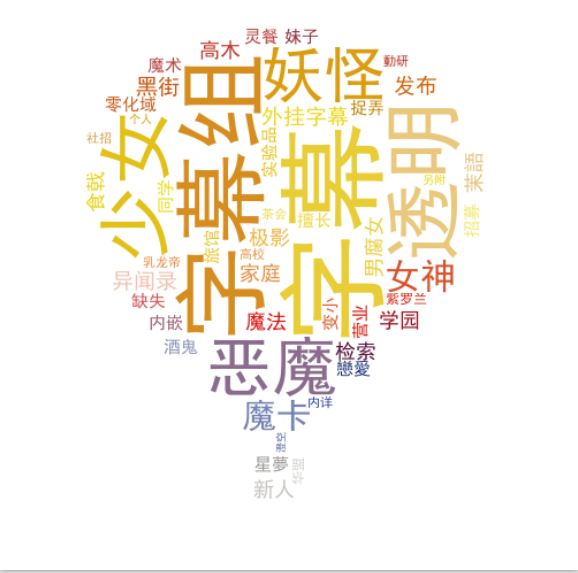

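Next comes the word cloud. For convenience, I saved the collected tag text to a TXT file, then ran the keyword analysis on it and generated the word cloud.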
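Saving the tags to a text file and reading them back for analysis can be sketched with the standard library alone. This is a minimal sketch, assuming one tag string per line. It writes to a temporary file rather than `dongman.txt`, reads the whole file with a single `read()` call, and turns `(word, weight)` pairs, the shape `jieba.analyse.textrank(..., withWeight=True)` returns, into the `{word: weight}` dict that `WordCloud.generate_from_frequencies` accepts. `save_tags`, `load_text`, and `to_frequencies` are hypothetical helpers, and the tags and weights are made-up sample values.

```python
import os
import tempfile


def save_tags(path, tags):
    """Append each tag string on its own line so entries don't run together."""
    with open(path, "a", encoding="utf-8") as f:
        for tag in tags:
            f.write(tag + "\n")


def load_text(path):
    """Read the whole file in one call; no per-line loop is needed."""
    with open(path, "r", encoding="utf-8") as f:
        return f.read()


def to_frequencies(pairs):
    """Turn (word, weight) pairs into a {word: weight} dict."""
    return {word: weight for word, weight in pairs}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "tags.txt")
        save_tags(path, ["anime fansub", "movie"])
        print(repr(load_text(path)))
    # Made-up (word, weight) pairs standing in for TextRank output.
    print(to_frequencies([("anime", 1.0), ("movie", 0.5)]))
```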

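The site scraped this time is an anime resource site. I scraped the title, publish time, publisher, download count, and tags of every resource, and the word cloud is generated from the tags.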
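Pulling the time, publisher, and download count out of the `.basic_info` lines is plain string work, with one pitfall: `str.lstrip('发布代号: ')` removes any leading characters from that *set*, not the exact prefix, so a publisher name that starts with 发 or 布 loses characters. This is a minimal sketch, assuming field text shaped like the made-up samples below (not copied from the site). `strip_label` and `parse_downloads` are hypothetical helpers, and the `下载\D*(\d+)` pattern is an assumed, stricter alternative to the original regex that captures only the digits.

```python
import re


def strip_label(text, label):
    """Remove `label` from the start of `text` only if it is an exact prefix."""
    text = text.strip()
    if text.startswith(label):
        text = text[len(label):]
    return text.strip()


def parse_downloads(text):
    """Return the first integer after 下载 ("download"), or None if absent."""
    match = re.search(r"下载\D*(\d+)", text)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    # Made-up sample lines, not scraped from the real site.
    publisher_line = "发布代号: 发布组A"
    print(publisher_line.lstrip("发布代号: "))        # character-set strip
    print(strip_label(publisher_line, "发布代号:"))   # exact-prefix strip
    print(strip_label("发布时间: 2019/05/20 12:00", "发布时间:"))
    print(parse_downloads("下载: 123,"))
```

With the sample publisher line, `lstrip` leaves `组A`, while `strip_label` keeps the full name `发布组A`.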