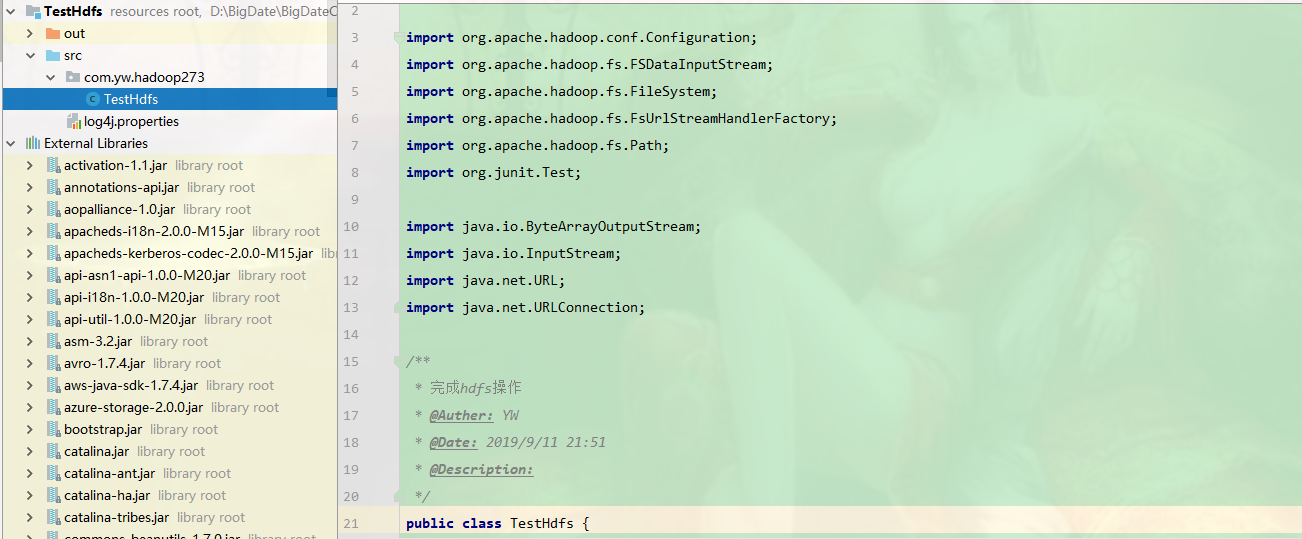

Create a Java project

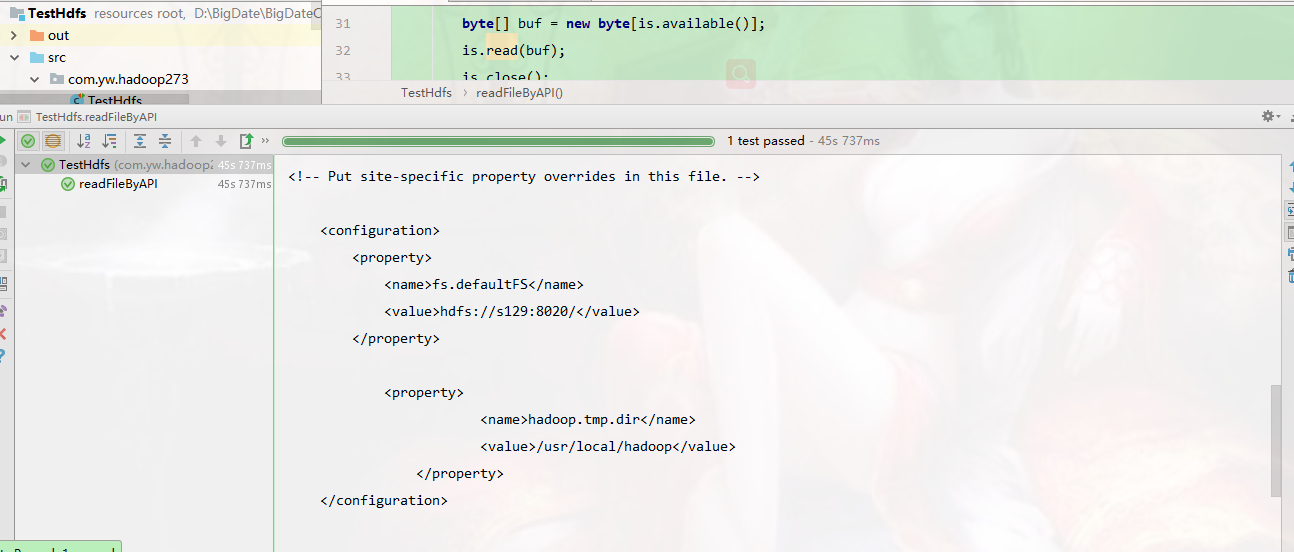

    package com.yw.hadoop273;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import org.apache.hadoop.io.IOUtils;
    import org.junit.Test;

    import java.io.ByteArrayOutputStream;
    import java.io.InputStream;
    import java.net.URL;
    import java.net.URLConnection;

    /**
     * Basic HDFS operations.
     *
     * @author YW
     * @Date: 2019/9/11 21:51
     */
    public class TestHdfs {

        /**
         * Read an HDFS file through java.net.URL.
         */
        @Test
        public void readFile() throws Exception {
            // Register the URL stream handler factory so java.net.URL understands hdfs://
            URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
            // The scheme is hdfs:// only; the original "hdfs://http://..." is not a valid URL
            URL url = new URL("hdfs://192.168.248.129:8020/usr/local/hadoop/core-site.xml");
            URLConnection conn = url.openConnection();
            InputStream is = conn.getInputStream();
            ByteArrayOutputStream baos = new ByteArrayOutputStream();
            // available() is only an estimate, so copy until end of stream instead;
            // the final "true" closes both streams when the copy finishes
            IOUtils.copyBytes(is, baos, 1024, true);
            System.out.println(new String(baos.toByteArray()));
        }

        /**
         * Read a file through the Hadoop API with a manual read loop.
         */
        @Test
        public void readFileByAPI() throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.248.129:8020/");
            FileSystem fs = FileSystem.get(conf);
            Path p = new Path("/usr/local/hadoop/core-site.xml");
            FSDataInputStream fis = fs.open(p);
            byte[] buf = new byte[1024];
            int len = -1;
            ByteArrayOutputStream baos = new ByteArrayOutputStream();
            // read() returns -1 at end of stream
            while ((len = fis.read(buf)) != -1) {
                baos.write(buf, 0, len);
            }
            fis.close();
            baos.close();
            System.out.println(new String(baos.toByteArray()));
        }

        /**
         * Read a file through the Hadoop API, letting IOUtils do the copy.
         */
        @Test
        public void readFileByAPI2() throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.248.129:8020/");
            FileSystem fs = FileSystem.get(conf);
            ByteArrayOutputStream baos = new ByteArrayOutputStream();
            Path p = new Path("/usr/local/hadoop/core-site.xml");
            FSDataInputStream fis = fs.open(p);
            // The final "true" closes both streams when the copy finishes
            IOUtils.copyBytes(fis, baos, 1024, true);
            System.out.println(new String(baos.toByteArray()));
        }

        /**
         * mkdir: create a directory.
         */
        @Test
        public void mkdir() throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.248.129:8020/");
            FileSystem fs = FileSystem.get(conf);
            fs.mkdirs(new Path("/usr/local/hadoop/myhadoop"));
        }

        /**
         * putFile: write a file.
         */
        @Test
        public void putFile() throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.248.129:8020/");
            FileSystem fs = FileSystem.get(conf);
            FSDataOutputStream out = fs.create(new Path("/usr/local/hadoop/myhadoop/a.txt"));
            out.write("helloworld".getBytes());
            out.close();
        }

        /**
         * removeFile: delete a directory (mind the permissions).
         */
        @Test
        public void removeFile() throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.248.129:8020/");
            FileSystem fs = FileSystem.get(conf);
            Path p = new Path("/usr/local/hadoop/myhadoop");
            // true = delete recursively
            fs.delete(p, true);
        }
    }

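Mind the permissions: the tests that create, write, or delete may fail with a permission error unless the client user has write access to the target directory. One way to grant it:

    hdfs dfs -chmod 777 /usr/local/hadoop/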
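
As a rough illustration of what the mode in the chmod command above grants, the sketch below decodes an octal mode into the familiar `rwx` notation. HDFS permissions follow a POSIX-like owner/group/others model, so the same reading applies. `ModeDemo` and `toSymbolic` are hypothetical names made up for this example; they are not part of Hadoop.

```java
// Hypothetical helper, not part of Hadoop: renders an octal mode as rwx text.
class ModeDemo {
    static String toSymbolic(int mode) {
        char[] flags = {'r', 'w', 'x'};
        StringBuilder sb = new StringBuilder();
        // Walk the 9 permission bits from the owner's read bit down to others' execute bit.
        for (int bit = 8; bit >= 0; bit--) {
            boolean set = ((mode >> bit) & 1) == 1;
            sb.append(set ? flags[(8 - bit) % 3] : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        int mode = Integer.parseInt("777", 8); // the mode passed to chmod above
        System.out.println(toSymbolic(mode));                       // rwxrwxrwx
        System.out.println(toSymbolic(Integer.parseInt("755", 8))); // rwxr-xr-x
    }
}
```

Mode 777 lets every user read, write, and traverse the directory, which is convenient on a test cluster but very broad. With 755, only the owner can write.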

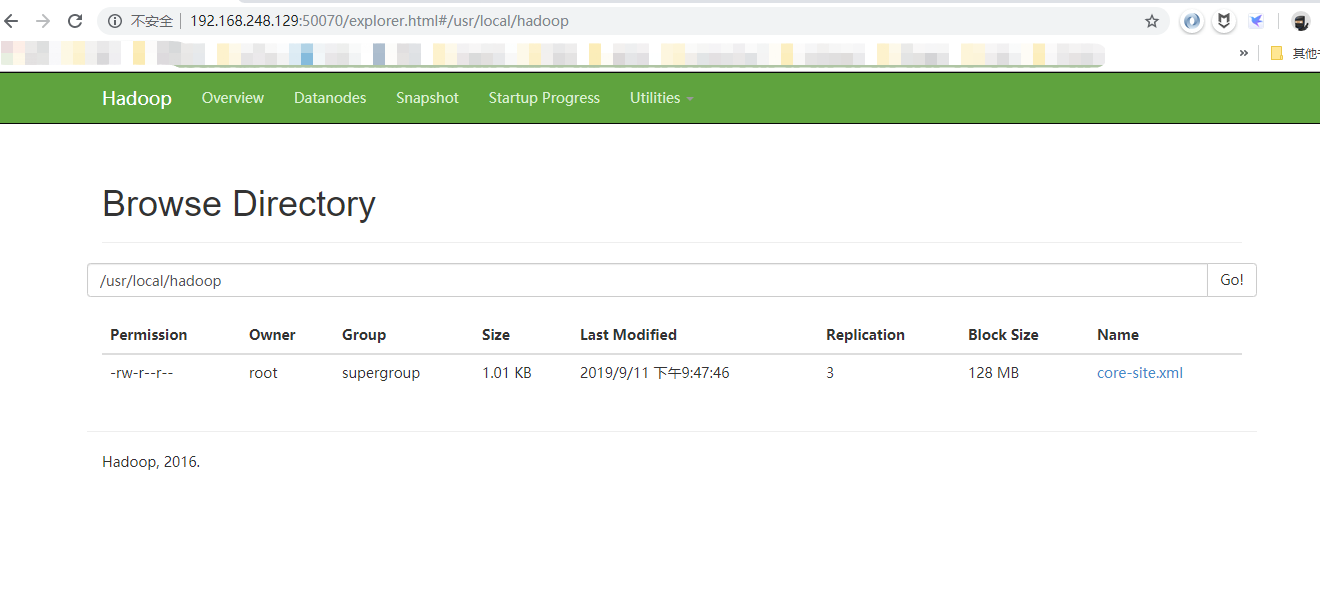

The content that was read

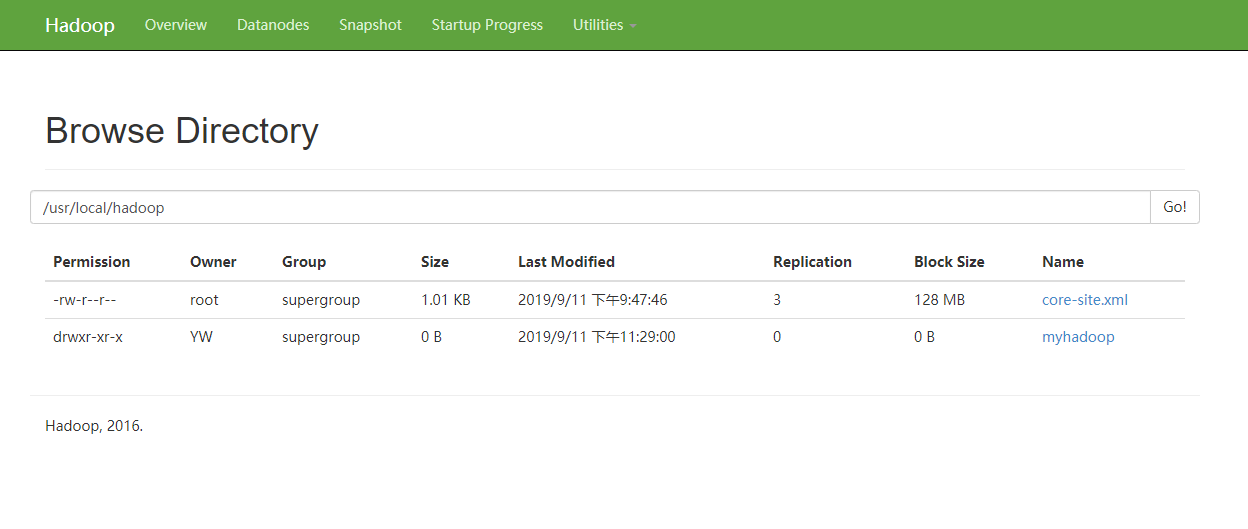

The created directory and file

Done.