注:安装hive之前,请先搭建hadoop环境。http://www.cnblogs.com/YellowstonePark/p/7750213.html

1、安装Hive

下载hive

这里使用的是apache-hive-2.1.1-bin.tar.gz,下载地址:链接:http://pan.baidu.com/s/1jIKNw82 密码:fd0w

将下载的hive 上传到node01服务器的/opt目录下

[root@node01 ~]# cd /opt/ [root@node01 opt]# ll total 727676 -rw-r--r--. 1 root root 149756462 Oct 23 12:24 apache-hive-2.1.1-bin.tar.gz -rw-r--r--. 1 root root 266688029 Oct 23 12:24 hadoop-2.7.4.tar.gz -rw-r--r--. 1 root root 104659474 Oct 23 12:24 hbase-1.2.6-bin.tar.gz -rw-r--r--. 1 root root 185515842 Oct 23 12:26 jdk-8u144-linux-x64.tar.gz -rw-r--r--. 1 root root 3465760 Oct 23 12:24 mysql-connector-java-5.1.44.tar.gz -rw-r--r-- 1 root root 35042811 Oct 23 13:37 zookeeper-3.4.10.tar.gz

切换路径到/usr/app目录下

[root@node01 app]# cd /usr/app/

解压hive

[root@node01 app]# tar zxvf /opt/apache-hive-2.1.1-bin.tar.gz

创建hive软连接

[root@node01 app]# ln -s apache-hive-2.1.1-bin/ hive

添加hive环境变量

[root@node01 app]# vi /etc/profile

export JAVA_HOME=/usr/app/java export CLASS_PATH=$JAVA_HOME/lib:$JAVA_HOME/jre/lib export ZOOKEEPER_HOME=/usr/app/zookeeper export HADOOP_HOME=/usr/app/hadoop export HIVE_HOME=/usr/app/hive export PATH=$PATH:$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin

使环境变量生效

[root@node01 app]# source /etc/profile

切换目录到conf下

[root@node01 app]# cd hive/conf/

创建hive-env.sh、hive-site.xml、hive-log4j2.properties、hive-exec-log4j2.properties

[root@node01 app]# cp hive-env.sh.template hive-env.sh [root@node01 app]# cp hive-default.xml.template hive-site.xml [root@node01 app]# cp hive-log4j2.properties.template hive-log4j2.properties [root@node01 app]# cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties

编辑hive-env.sh,在文件开始添加以下变量

[root@node01 app]# vi hive-env.sh

export JAVA_HOME=/usr/app/java export HADOOP_HOME=/usr/app/hadoop export HIVE_HOME=/usr/app/hive export HIVE_CONF_DIR=/usr/app/hive/conf

创建以下hdfs目录并更改权限

[root@node01 conf]# hdfs dfs -mkdir -p /user/hive/warehouse [root@node01 conf]# hdfs dfs -mkdir -p /user/hive/tmp [root@node01 conf]# hdfs dfs -mkdir -p /user/hive/log [root@node01 conf]# hdfs dfs -chmod 777 /user/hive/warehouse [root@node01 conf]# hdfs dfs -chmod 777 /user/hive/tmp [root@node01 conf]# hdfs dfs -chmod 777 /user/hive/log

修改hive-site.xml 配置

[root@node01 conf]# vi hive-site.xml

<property>

<name>hive.exec.scratchdir</name>

<value>/user/hive/tmp</value>

<description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/<username> is created, with ${hive.scratch.dir.permission}.</description>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/user/hive/log</value>

<description>Location of Hive run time structured log file</description>

</property>

注:Hive Metastore 默认情况下, Hive 的元数据保存在内嵌的 Derby 数据库里, 但一般情况下生产环境会使用 MySQL 来存放 Hive 元数据。

2、安装MySQL

[root@node01 opt]# yum install mysql [root@node01 opt]# yum install mysql-server [root@node01 opt]# yum install mysql-devel [root@node01 opt]# service mysqld status [root@node01 opt]# service mysqld start [root@node01 opt]# service mysqld status [root@node01 opt]# chkconfig mysqld on

创建数据库和用户

[root@node01 conf]# mysql -u root -p

mysql> CREATE DATABASE hive;

mysql> USE hive;

mysql> CREATE USER 'hive'@'localhost' IDENTIFIED BY 'hive';

mysql> GRANT ALL ON hive.* TO 'hive'@'localhost' IDENTIFIED BY 'hive';

mysql> GRANT ALL ON hive.* TO 'hive'@'%' IDENTIFIED BY 'hive';

mysql> FLUSH PRIVILEGES;

mysql> quit;

修改hive-site.xml 需要在 hive-site.xml 文件中配置 MySQL 数据库连接信息

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

</property>

在运行hive命令前,确保以下两点

1、HDFS 已经启动。可以使用 start-dfs.sh 脚本来启动 HDFS。

2、mysql-connector-java-5.1.39.jar 已经加到 $HIVE_HOME/lib 目录下。

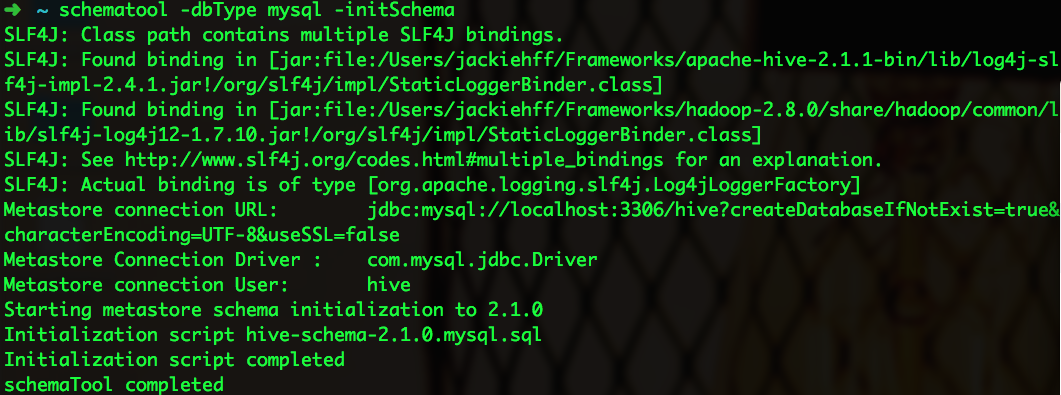

从 Hive 2.1 版本开始, 我们需要先运行 schematool 命令来执行初始化操作。

[root@node01 conf]# schematool schematool -dbType mysql -initSchema

启动hive

[root@node01 conf]# hive which: no hbase in (/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/app/java/bin:/usr/app/zookeeper/bin:/usr/app/hadoop/bin:/usr/app/hadoop/sbin:/usr/app/hive/bin:/root/bin) SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/app/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/app/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory] Logging initialized using configuration in file:/usr/app/apache-hive-2.1.1-bin/conf/hive-log4j2.properties Async: true Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. hive>

问题汇总

hive 启动时,提示以下信息:

Sat May 21 10:25:12 CST 2016 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

解决方法:在 hive-site.xml 配置文件中 javax.jdo.option.ConnectionURL 配置项的值上加上 useSSL=false。即最后的配置如下:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

initSchema问题,提示以下信息:

Exception in thread "main" java.lang.RuntimeException: Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql)

解决方法:执行 schematool -initSchema -dbType mysql。即安装 Hive 后,在首次运行 hive 之前要执行以上命令。

相对路径问题,提示以下信息:

Exception in thread "main"java.lang.RuntimeException: java.lang.IllegalArgumentException:java.net.URISyntaxException: Relative path in absolute URI:${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

atorg.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:444)

atorg.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:672)

atorg.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:616)

atsun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

atsun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

atsun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

atjava.lang.reflect.Method.invoke(Method.java:606)

atorg.apache.hadoop.util.RunJar.main(RunJar.java:160)

Caused by: java.lang.IllegalArgumentException:java.net.URISyntaxException: Relative path in absolute URI:${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

atorg.apache.hadoop.fs.Path.initialize(Path.java:148)

atorg.apache.hadoop.fs.Path.<init>(Path.java:126)

atorg.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:487)

atorg.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:430)

... 7more

解决方法:将 hive-site.xml 中的 ${system:java.io.tmpdir} 和 ${system:user.name} 分别替换成 /tmp 和 ${user.name}

执行 schematool -dbType mysql -initSchema 时,提示以下信息:

The reference to entity "characterEncoding" must end with the ';' delimiter

解决办法:"&"在xml中这个是读不出的,正确写法将"&"转义"&"

有时候在使用xml作为配置文件的时候,应该要使用xml的编码规则来进行适当的设置。

下面给出xml中一些特殊符号的编码转换:

< < 小于号

> > 大于号

& & and字符

' ' 单引号

" " 双引号

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.fs.Path.initialize(Path.java:205)

at org.apache.hadoop.fs.Path.<init>(Path.java:171)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:644)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:563)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:531)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at java.net.URI.checkPath(URI.java:1823)

at java.net.URI.<init>(URI.java:745)

at org.apache.hadoop.fs.Path.initialize(Path.java:202)

... 12 more

问题解决:将hive-site.xml配置文件件中的"system:java.io.tmpdir"全部替换为"/tmp"

Exception in thread "main" java.lang.RuntimeException: The root scratch dir: /user/hive/tmp on HDFS should be writable. Current permissions are: rwxrwxr-x at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:705) at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:635) at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:563) at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:531) at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705) at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.util.RunJar.run(RunJar.java:221) at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

问题解决: hdfs dfs -chmod 777 /user/hive/tmp 将指定路径权限更改为777