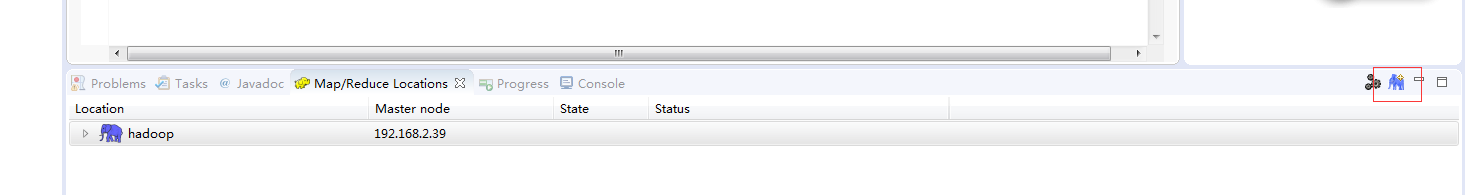

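First, download the Eclipse Hadoop plugin and configure the Hadoop installation directory. Then make sure the Map/Reduce host and port set in the plugin match the ones in mapred-site.xml: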

<property>
  <name>mapred.job.tracker</name>
  <value>192.168.2.39:9001</value>
</property>

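Likewise, the DFS host and port must match the node configured in core-site.xml:

<property>
  <name>fs.default.name</name>
  <value>hdfs://192.168.2.39:9000</value>
</property>

These values must match; otherwise an error occurs.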
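The consistency requirement above can be checked mechanically. The following is a minimal sketch using only the Java standard library, not part of the plugin or of Hadoop: the class `EndpointCheck` and its methods `readProperty` and `hostPort` are hypothetical names introduced for illustration, and the XML strings simply wrap the two properties shown above in a `<configuration>` root. The plugin values in `main` are assumed to be the ones typed into the plugin's settings.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

// Hypothetical helper: compares plugin settings with Hadoop configuration values.
class EndpointCheck {

    // Returns the <value> of the <property> whose <name> equals key, or null if absent.
    static String readProperty(String xml, String key) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList props = doc.getElementsByTagName("property");
            for (int i = 0; i < props.getLength(); i++) {
                Element p = (Element) props.item(i);
                if (key.equals(text(p, "name"))) {
                    return text(p, "value");
                }
            }
            return null;
        } catch (Exception e) {
            throw new IllegalArgumentException("not a well-formed configuration file", e);
        }
    }

    // Trimmed text of the first child element with the given tag, or null if missing.
    private static String text(Element parent, String tag) {
        Node n = parent.getElementsByTagName(tag).item(0);
        return n == null ? null : n.getTextContent().trim();
    }

    // Reduces "hdfs://host:port/path" or "host:port" to plain "host:port".
    static String hostPort(String value) {
        int scheme = value.indexOf("://");
        String rest = scheme >= 0 ? value.substring(scheme + 3) : value;
        int slash = rest.indexOf('/');
        return slash >= 0 ? rest.substring(0, slash) : rest;
    }

    public static void main(String[] args) {
        String coreSite = "<configuration><property><name>fs.default.name</name>"
                + "<value>hdfs://192.168.2.39:9000</value></property></configuration>";
        String mapredSite = "<configuration><property><name>mapred.job.tracker</name>"
                + "<value>192.168.2.39:9001</value></property></configuration>";

        // Assumed plugin settings, written as host:port.
        String dfsMaster = "192.168.2.39:9000";
        String mapReduceMaster = "192.168.2.39:9001";

        System.out.println("DFS master matches: "
                + dfsMaster.equals(hostPort(readProperty(coreSite, "fs.default.name"))));
        System.out.println("Map/Reduce master matches: "
                + mapReduceMaster.equals(hostPort(readProperty(mapredSite, "mapred.job.tracker"))));
    }
}
```

In practice the XML would be read from the cluster's actual configuration files rather than from inline strings.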

File upload code

package upload;

import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class UploadFile {

    public static void main(String[] args) {
        try {
            // "in" reads the source file from the local file system.
            // Backslashes in a Java string literal must be escaped as \\.
            InputStream in = new BufferedInputStream(
                    new FileInputStream("C:\\Users\\ASUS\\Desktop\\sql EF.txt"));
            Configuration conf = new Configuration();
            // Obtain a connection to the Hadoop file system.
            FileSystem fs = FileSystem.get(
                    URI.create("hdfs://192.168.2.39:9000/hdfsdata/sqlEF.txt"), conf);
            // "out" writes to the destination file in the Hadoop file system.
            OutputStream out = fs.create(
                    new Path("hdfs://192.168.2.39:9000/hdfsdata/sqlEF.txt"));
            // Copy with a 4096-byte (4 KB) buffer; true closes both streams when done.
            IOUtils.copyBytes(in, out, 4096, true);
            System.out.println("success");
        } catch (Exception e) {
            System.out.println(e.toString());
        }
    }
}
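To make the role of the buffer size and the final `true` argument concrete, here is a rough sketch of what a call like `IOUtils.copyBytes(in, out, 4096, true)` amounts to, written with the Java standard library only. It is an illustration under stated assumptions, not Hadoop's actual implementation: the class `BufferedCopy` and its `copy` method are hypothetical names, and in-memory streams stand in for the local file and the HDFS output stream.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in for a buffered stream copy with an optional close.
class BufferedCopy {

    // Copies everything from in to out in chunks of at most bufferSize bytes.
    // When close is true, both streams are closed afterwards, even on failure.
    static void copy(InputStream in, OutputStream out, int bufferSize, boolean close)
            throws IOException {
        byte[] buffer = new byte[bufferSize];
        try {
            int read;
            // read() returns -1 at end of stream.
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read);
            }
            out.flush();
        } finally {
            if (close) {
                try {
                    out.close();
                } finally {
                    in.close();
                }
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // In-memory streams replace the local file and the HDFS file for this sketch.
        InputStream in = new ByteArrayInputStream(
                "sample file content".getBytes(StandardCharsets.UTF_8));
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        copy(in, out, 4096, true);
        System.out.println(out.size() + " bytes copied");
    }
}
```

A larger buffer means fewer read and write calls per file; 4096 bytes is the value used in the upload code above.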