时间序列的相似性分析的理论和原理:

可按照以下几篇博客进行学习和查看分析即可

https://www.jianshu.com/p/e8e02cdc43d5?from=groupmessage

https://zhuanlan.zhihu.com/p/39450321

https://wenku.baidu.com/view/58dfefbc2b160b4e777fcf77.html

https://github.com/wannesm/dtaidistance

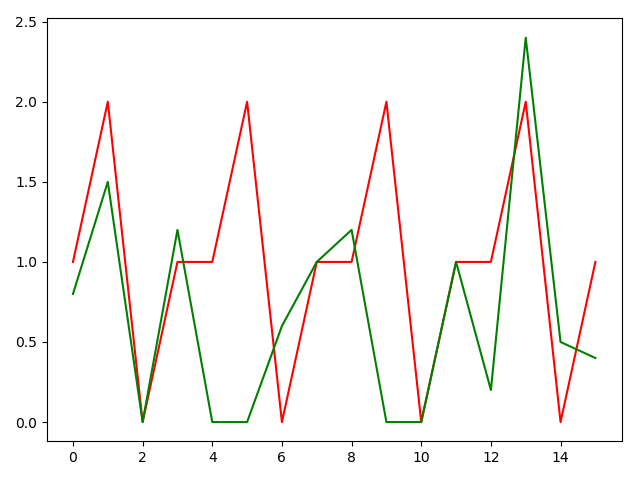

时间序列分析不同算法的实现:主要是进行相同时间段的数据的时序形状对比输出展示

#欧氏距离公式计算时间序列的相似度

import numpy as np

import matplotlib.pyplot as plt

def Oushidistance(s1,s2):

m=len(s1)

n=len(s2)

plt.plot(s1, "r", s2, "g")

plt.show()

s1 = (s1 - np.mean(s1)) / np.std(s1)

s2 = (s2 - np.mean(s2)) / np.std(s2)

distance=0

for i in range(m):

distance+=(s1[i]-s2[i])**2

return np.sqrt(distance)

if __name__ == '__main__':

s1 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1])

s2 = np.array([0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2])

s3 = np.array([0.8, 1.5, 0, 1.2, 0, 0, 0.6, 1, 1.2, 0, 0, 1, 0.2, 2.4, 0.5, 0.4])

s4 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1.5])

s5 = np.array([x+1 for x in s1])

print(Oushidistance(s1,s2))

print(Oushidistance(s1,s3))

print(Oushidistance(s1,s4))

print(Oushidistance(s1,s5))

#DWT算法的实现和对比分析展示

import numpy as np

import matplotlib.pyplot as plt

float_formatter = lambda x: "%.2f" % x

np.set_printoptions(formatter={'float_kind': float_formatter})

def TimeSeriesSimilarity(s1, s2):

l1 = len(s1)

l2 = len(s2)

plt.plot(s1, "r", s2, "g")

plt.show()

s1 = (s1 - np.mean(s1)) / np.std(s1)

s2 = (s2 - np.mean(s2)) / np.std(s2)

paths = np.full((l1 + 1, l2 + 1), np.inf) # 全部赋予无穷大

paths[0, 0] = 0

for i in range(l1):

for j in range(l2):

d = s1[i] - s2[j]

cost = d ** 2

paths[i + 1, j + 1] = cost + min(paths[i, j + 1], paths[i + 1, j], paths[i, j])

paths = np.sqrt(paths)

s = paths[l1, l2]

return s, paths.T

if __name__ == '__main__':

s1 = [1, 2, 0, 1, 1, 2]

s2 = [1, 0, 1]

s2 = [3,5,1,3,3,5]

plt.plot(s1,"r",s2,"g")

plt.show()

print(s1,s2)

distance, paths = TimeSeriesSimilarity(s1, s2)

print(distance)

s1 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1])

s2 = np.array([0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2])

s3 = np.array([0.8, 1.5, 0, 1.2, 0, 0, 0.6, 1, 1.2, 0, 0, 1, 0.2, 2.4, 0.5, 0.4])

s4 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1.5])

s5 = np.array([x + 1 for x in s1])

distance, paths = TimeSeriesSimilarity(s1, s2)

print(distance)

distance, paths = TimeSeriesSimilarity(s1, s3)

print(distance)

distance, paths = TimeSeriesSimilarity(s1, s4)

print(distance)

distance, paths = TimeSeriesSimilarity(s1, s5)

print(distance)

#基于形态相似度计算的时间序列相似度计算

import numpy as np

import matplotlib.pyplot as plt

def Oushidistance(s1,s2):

m=len(s1)

n=len(s2)

plt.plot(s1, "r", s2, "g")

plt.show()

s1 = (s1 - np.mean(s1)) / np.std(s1)

s2 = (s2 - np.mean(s2)) / np.std(s2)

distance=np.sqrt(np.sum((s1-s2)**2))

ASD=abs(np.sum(s1-s2))

SAD=np.sum(abs(s1-s2))

if SAD==0:

return 0

else:

distance1=distance*(2-ASD/SAD)

return distance1

if __name__ == '__main__':

s1 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1])

s2 = np.array([0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2])

s3 = np.array([0.8, 1.5, 0, 1.2, 0, 0, 0.6, 1, 1.2, 0, 0, 1, 0.2, 2.4, 0.5, 0.4])

s4 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1.5])

s5 = np.array([x + 1 for x in s1])

print(Oushidistance(s1,s2))

print(Oushidistance(s1,s3))

print(Oushidistance(s1,s4))

print(Oushidistance(s1,s5))

#改进版本的DWT算法1实现展示

import numpy as np

import math

def get_common_seq(best_path, threshold=1):

com_ls = []

pre = best_path[0]

length = 1

for i, element in enumerate(best_path):

if i == 0:

continue

cur = best_path[i]

if cur[0] == pre[0] + 1 and cur[1] == pre[1] + 1:

length = length + 1

else:

com_ls.append(length)

length = 1

pre = cur

com_ls.append(length)

return list(filter(lambda num: True if threshold < num else False, com_ls))

def calculate_attenuate_weight(seqLen, com_ls):

weight = 0

for comlen in com_ls:

weight = weight + (comlen * comlen) / (seqLen * seqLen)

return 1 - math.sqrt(weight)

def best_path(paths):

"""Compute the optimal path from the nxm warping paths matrix."""

i, j = int(paths.shape[0] - 1), int(paths.shape[1] - 1)

p = []

if paths[i, j] != -1:

p.append((i - 1, j - 1))

while i > 0 and j > 0:

c = np.argmin([paths[i - 1, j - 1], paths[i - 1, j], paths[i, j - 1]])

if c == 0:

i, j = i - 1, j - 1

elif c == 1:

i = i - 1

elif c == 2:

j = j - 1

if paths[i, j] != -1:

p.append((i - 1, j - 1))

p.pop()

p.reverse()

return p

def TimeSeriesSimilarity(s1, s2):

l1 = len(s1)

l2 = len(s2)

paths = np.full((l1 + 1, l2 + 1), np.inf) # 全部赋予无穷大

paths[0, 0] = 0

s1 = (s1 - np.mean(s1)) / np.std(s1)

s2 = (s2 - np.mean(s2)) / np.std(s2)

for i in range(l1):

for j in range(l2):

d = s1[i] - s2[j]

cost = d ** 2

paths[i + 1, j + 1] = cost + min(paths[i, j + 1], paths[i + 1, j], paths[i, j])

paths = np.sqrt(paths)

s = paths[l1, l2]

return s, paths.T

if __name__ == '__main__':

# 测试数据

s1 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1])

s2 = np.array([0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2])

s3 = np.array([0.8, 1.5, 0, 1.2, 0, 0, 0.6, 1, 1.2, 0, 0, 1, 0.2, 2.4, 0.5, 0.4])

# 原始算法

distance12, paths12 = TimeSeriesSimilarity(s1, s2)

distance13, paths13 = TimeSeriesSimilarity(s1, s3)

print("更新前s1和s2距离:" + str(distance12))

print("更新前s1和s3距离:" + str(distance13))

best_path12 = best_path(paths12)

best_path13 = best_path(paths13)

# 衰减系数

com_ls1 = get_common_seq(best_path12)

com_ls2 = get_common_seq(best_path13)

# print(len(best_path12), com_ls1)

# print(len(best_path13), com_ls2)

weight12 = calculate_attenuate_weight(len(best_path12), com_ls1)

weight13 = calculate_attenuate_weight(len(best_path13), com_ls2)

# 更新距离

print("更新后s1和s2距离:" + str(distance12 * weight12))

print("更新后s1和s3距离:" + str(distance13 * weight13))

#改进版本的DWT算法实现第二种方式

import numpy as np

float_formatter = lambda x: "%.2f" % x

np.set_printoptions(formatter={'float_kind': float_formatter})

def TimeSeriesSimilarityImprove(s1, s2):

# 取较大的标准差

sdt = np.std(s1, ddof=1) if np.std(s1, ddof=1) > np.std(s2, ddof=1) else np.std(s2, ddof=1)

# print("两个序列最大标准差:" + str(sdt))

l1 = len(s1)

l2 = len(s2)

paths = np.full((l1 + 1, l2 + 1), np.inf) # 全部赋予无穷大

sub_matrix = np.full((l1, l2), 0) # 全部赋予0

max_sub_len = 0

s1 = (s1 - np.mean(s1)) / np.std(s1)

s2 = (s2 - np.mean(s2)) / np.std(s2)

paths[0, 0] = 0

for i in range(l1):

for j in range(l2):

d = s1[i] - s2[j]

cost = d ** 2

paths[i + 1, j + 1] = cost + min(paths[i, j + 1], paths[i + 1, j], paths[i, j])

if np.abs(s1[i] - s2[j]) < sdt:

if i == 0 or j == 0:

sub_matrix[i][j] = 1

else:

sub_matrix[i][j] = sub_matrix[i - 1][j - 1] + 1

max_sub_len = sub_matrix[i][j] if sub_matrix[i][j] > max_sub_len else max_sub_len

paths = np.sqrt(paths)

s = paths[l1, l2]

#return s, paths.T, [max_sub_len]

weight = 0

for comlen in [max_sub_len]:

weight = weight + comlen / len(s1) * comlen / len(s2)

a=1 - weight

distance=s*a

return distance

if __name__ == '__main__':

# 测试数据

s1 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1])

s2 = np.array([0, 1, 1, 2, 0, 1, 1.7, 2, 0, 1, 1, 2, 0, 1, 1, 2])

s3 = np.array([0.8, 1.5, 0, 1.2, 0, 0, 0.6, 1, 1.2, 0, 0, 1, 0.2, 2.4, 0.5, 0.4])

s4 = np.array([1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 1, 1, 2, 0, 2])

s5 = np.array([x + 1 for x in s1])

print(TimeSeriesSimilarityImprove(s1, s2))

print(TimeSeriesSimilarityImprove(s1, s3))

print(TimeSeriesSimilarityImprove(s1, s4))

print(TimeSeriesSimilarityImprove(s1, s5))

时间序列分析对比展示图像