Getting Started with the Scrapy Framework

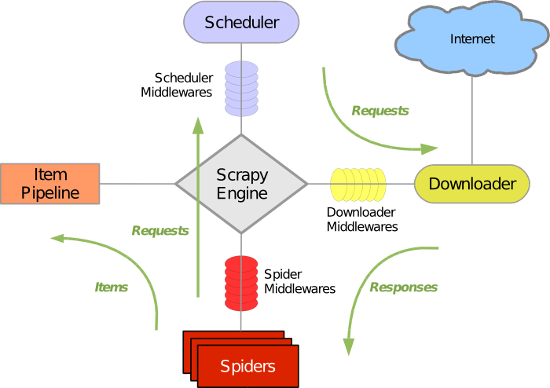

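I. How Scrapy Works

Scrapy is an open-source, collaborative framework. It was originally designed for page scraping (more precisely, web scraping), and it lets you extract the data you need from websites in a fast, simple, and extensible way.

Today Scrapy is used far more widely: in data mining, monitoring, and automated testing, for retrieving data returned by APIs (such as Amazon Associates Web Services), or as a general-purpose web crawler.

Scrapy is built on Twisted, a popular event-driven Python networking framework, so it uses non-blocking (that is, asynchronous) code to achieve concurrency. The overall architecture is roughly as follows.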

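Scrapy consists mainly of the following components:

- Engine (Scrapy): handles the data flow of the whole system and triggers events (the core of the framework).

- Scheduler: accepts requests sent by the engine, pushes them into a queue, and returns them when the engine asks again. Think of it as a priority queue of URLs (the addresses of the pages to crawl): it decides which URL to fetch next and removes duplicate URLs.

- Downloader: downloads page content and returns it to the spiders (the Scrapy downloader is built on Twisted's efficient asynchronous model).

- Spiders: do the main work, extracting the information you need from specific pages, the so-called items. You can also extract links from a page so that Scrapy goes on to crawl the next page.

- Item Pipeline: processes the items the spiders extract from pages. Its main jobs are persisting items, validating them, and removing unwanted information. After a spider parses a page, the items are sent to the pipeline and processed by several stages in a specific order.

- Downloader Middlewares: a layer between the Scrapy engine and the downloader that mainly processes the requests and responses passing between them.

- Spider Middlewares: a layer between the Scrapy engine and the spiders whose main job is to process the spiders' response input and request output.

- Scheduler Middlewares: middleware between the Scrapy engine and the scheduler, handling the requests and responses sent from the engine to the scheduler.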

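Scrapy's run loop is roughly:

- The engine takes a link (URL) from the scheduler to crawl next.

- The engine wraps the URL in a Request and passes it to the downloader.

- The downloader fetches the resource and wraps it in a Response.

- The spider parses the Response.

- If it extracts an item, the item is handed to the item pipeline for further processing.

- If it extracts a link (URL), the URL is handed to the scheduler to wait its turn to be crawled.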
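To make the loop above concrete, here is a toy, single-threaded model of it in plain Python. It is only a sketch under stated assumptions: `FAKE_SITE`, `download`, `parse`, and `run` are hypothetical stand-ins rather than Scrapy APIs, and Scrapy's real engine, scheduler, and downloader are asynchronous and considerably more involved.

```python
from collections import deque

# Hypothetical in-memory "website": URL -> (items on the page, links on the page).
FAKE_SITE = {
    "http://example.com/": (["item-a"], ["http://example.com/p2", "http://example.com/p3"]),
    "http://example.com/p2": (["item-b"], ["http://example.com/"]),
    "http://example.com/p3": (["item-c"], ["http://example.com/p2"]),
}


def download(url):
    """Stand-in for the downloader: turns a URL into a 'response' dict."""
    items, links = FAKE_SITE.get(url, ([], []))
    return {"url": url, "items": items, "links": links}


def parse(response):
    """Stand-in for a spider callback: yields items and follow-up URLs."""
    for item in response["items"]:
        yield ("item", item)
    for link in response["links"]:
        yield ("url", link)


def run(start_urls):
    queue = deque()   # the scheduler's queue of pending URLs
    seen = set()      # the scheduler's duplicate filter
    collected = []    # what the item pipeline receives

    def schedule(url):
        if url not in seen:
            seen.add(url)
            queue.append(url)

    for url in start_urls:
        schedule(url)
    while queue:
        url = queue.popleft()                  # engine takes a URL from the scheduler
        response = download(url)               # downloader produces a Response
        for kind, value in parse(response):    # spider parses the Response
            if kind == "item":
                collected.append(value)        # items go to the pipeline
            else:
                schedule(value)                # URLs go back to the scheduler
    return collected


print(run(["http://example.com/"]))
```

Note how the `seen` set stops the loop from revisiting pages that link back to each other; Scrapy's scheduler plays the same role with its duplicate filter.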

Installing Scrapy

```
# Windows
1. pip3 install wheel   # lets pip install packages from .whl files; wheel downloads: https://www.lfd.uci.edu/~gohlke/pythonlibs
2. pip3 install lxml
3. pip3 install pyopenssl
4. Download and install pywin32: https://sourceforge.net/projects/pywin32/files/pywin32/
   (or: pip3 install pywin32)
5. Download the Twisted wheel: http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted
   (pick the file matching your Python; cp36 / win_amd64 means CPython 3.6, 64-bit)
6. pip3 install <download directory>\Twisted-17.9.0-cp36-cp36m-win_amd64.whl
7. pip3 install scrapy

# Linux
1. pip3 install scrapy
```

II. Basic Usage

Basic commands

```
1. scrapy startproject <project name>
   - creates a project in the current directory (similar to Django)
2. scrapy genspider [-t template] <name> <domain>
   - creates a spider, e.g.:
     scrapy genspider -t basic oldboy oldboy.com
     scrapy genspider -t xmlfeed autohome autohome.com.cn
   - PS: list the available templates: scrapy genspider -l
         show a template:              scrapy genspider -d <template name>
3. scrapy list
   - lists the spiders in the project
4. scrapy crawl <spider name>
   - runs a single spider
```

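The command-line tool in detail

```
# 1. Help
scrapy -h
scrapy <command> -h

# 2. There are two kinds of commands: project-only commands must be run inside
#    the project folder, global commands need not be.
Global commands:
    startproject  # create a project
    genspider     # create a spider
    settings      # inside a project directory, shows that project's settings
    runspider     # run a standalone Python file as a spider, no project needed
    shell         # scrapy shell <url>: interactive debugging, e.g. checking whether selector rules are right
    fetch         # fetch a single page outside any project; can also show the headers
    view          # open the downloaded page in a browser, which shows which data is loaded by Ajax
    version       # scrapy version: Scrapy's version; scrapy version -v: versions of its dependencies

Project-only commands:
    crawl   # run a spider; requires a project (the author also sets ROBOTSTXT_OBEY = False in settings.py)
    check   # run the spiders' contract checks to detect errors
    list    # list the spiders in the project
    edit    # open a spider in an editor; rarely used
    parse   # scrapy parse <url> --callback <callback>: check that a callback works
    bench   # scrapy bench: quick benchmark (stress test)

# 3. Official documentation
https://docs.scrapy.org/en/latest/topics/commands.html
```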

```
# Global commands work in any folder without a project; the others must be run inside the project folder.
1. scrapy startproject Myproject   # create a project
   cd Myproject                    # change into the project folder
2. scrapy genspider baidu www.baidu.com   # create a spider; "baidu" is the spider name used to locate it
                                          # once the domain is given, a start URL is generated by default
3. scrapy settings --get BOT_NAME   # read a setting
# global:
4. scrapy runspider budui.py
5. scrapy runspider AMAZON\spiders\amazon.py   # run a spider file
   # inside the project:
   scrapy crawl amazon   # run a spider by its name
   # robots.txt: a file the target site publishes, stating what may and may not be crawled.
   # Whether ignoring it is legal differs from country to country.
   # The generated settings.py has ROBOTSTXT_OBEY = True;
   # change it to ROBOTSTXT_OBEY = False to stop obeying robots.txt.
6. scrapy shell https://www.baidu.com   # send a request straight to the target site, then inspect:
   response
   response.status
   response.body
   view(response)
7. scrapy view https://www.taobao.com   # if the page looks incomplete, the missing parts are loaded by Ajax; a quick way to locate the problem
8. scrapy version      # show the Scrapy version
9. scrapy version -v   # show the versions of Scrapy's dependencies
10. scrapy fetch --nolog http://www.logou.com             # fetch the response body
11. scrapy fetch --nolog --headers http://www.logou.com   # show the request and response headers
    > Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
    > Accept-Language: en
    > User-Agent: Scrapy/1.5.0 (+https://scrapy.org)
    > Accept-Encoding: gzip,deflate
    >
    < Content-Type: text/html; charset=UTF-8
    < Date: Tue, 23 Jan 2018 15:51:32 GMT
    < Server: Apache
    # ">" marks the request, "<" the response
12. scrapy shell http://www.logou.com   # send a request straight to the target site
13. scrapy check   # check the spiders for errors (runs contract checks)
14. scrapy list    # list all spider names
15. scrapy parse http://quotes.toscrape.com/ --callback parse   # check that the callback runs correctly
16. scrapy bench   # benchmark (stress test)
```
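The `ROBOTSTXT_OBEY` discussion above boils down to one check per URL: may this user agent fetch this path? Python's standard library can perform the same kind of check, which makes the rules easy to experiment with. This is a sketch only: the robots.txt content and the `allowed` helper are hypothetical, and Scrapy performs the check with its own parser backend rather than `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: everything except /private/ may be crawled.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Allow: /
""".splitlines()

parser = RobotFileParser()
parser.parse(ROBOTS_TXT)  # parse lines directly instead of downloading the file


def allowed(url, agent="Scrapy"):
    """Return True if robots.txt permits `agent` to fetch `url`."""
    return parser.can_fetch(agent, url)


print(allowed("https://example.com/index.html"))    # True
print(allowed("https://example.com/private/data"))  # False
```

Rules are matched in order, so the `Disallow: /private/` line wins for paths under `/private/` before the catch-all `Allow: /` is reached.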

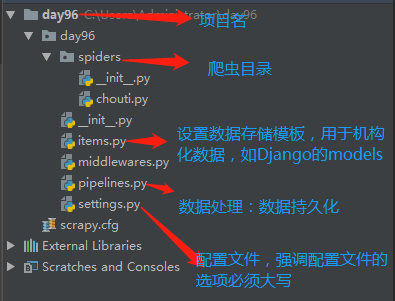

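III. Project Structure and a Brief Introduction to Spiders

File overview:

- scrapy.cfg: the project's main configuration (the crawler-related settings actually live in settings.py)

- items.py: templates for storing data, used to structure it, like a Django Model

- pipelines.py: data-processing behavior, typically persisting the structured data

- settings.py: configuration, e.g. recursion depth, concurrency, download delay

- spiders: the spider directory, where you create spider files and write crawling rules

Note: spider files are usually named after the target site's domain.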

day96 project, spider file chouti.py:

```python
# -*- coding: utf-8 -*-
import io
import sys

import scrapy
from scrapy.selector import Selector

# Work around console encoding problems on Windows
sys.stdout = io.TextIOWrapper(sys.stdout.buffer, encoding='gb18030')


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'                    # spider name
    allowed_domains = ['chouti.com']   # allowed domains
    start_urls = [
        'http://dig.chouti.com/',      # the real start URL
    ]

    def parse(self, response):
        hxs = Selector(response=response).xpath('//a')
        for i in hxs:
            print(i)
```

A first try

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.http import Request
from scrapy.http.cookies import CookieJar
from scrapy.selector import Selector


class ChoutiSpider(scrapy.Spider):
    name = "chouti"
    allowed_domains = ["chouti.com"]
    start_urls = ['http://dig.chouti.com/']
    cookie_dict = None

    def parse(self, response):
        # Collect the cookies set by the home page into a plain {name: value} dict,
        # which is the form Request(cookies=...) expects
        cookie_jar = CookieJar()
        cookie_jar.extract_cookies(response, response.request)
        self.cookie_dict = {cookie.name: cookie.value for cookie in cookie_jar}

        # Log in with phone number + password + the cookies
        yield Request(
            url="http://dig.chouti.com/login",
            method='POST',
            body="phone=8615990000000&password=xxxxxx&oneMonth=1",
            headers={'Content-Type': "application/x-www-form-urlencoded; charset=UTF-8"},
            cookies=self.cookie_dict,
            callback=self.check_login,
        )

    def check_login(self, response):
        print(response, '=== login response')
        yield Request(url="http://dig.chouti.com/", callback=self.good)

    def good(self, response):
        # Each news entry carries its id in the share-linkid attribute
        id_list = Selector(response=response).xpath('//div[@share-linkid]/@share-linkid').extract()
        for nid in id_list:
            url = "http://dig.chouti.com/link/vote?linksId=%s" % nid
            print(nid, url)
            yield Request(url=url, method="POST", cookies=self.cookie_dict, callback=self.show)

        # Follow the pagination links to upvote on every page:
        # page_urls = Selector(response=response).xpath('//div[@id="dig_lcpage"]//a/@href').extract()
        # for page in page_urls:
        #     url = "http://dig.chouti.com%s" % page
        #     yield Request(url=url, callback=self.good)

    def show(self, response):
        print("upvote response --->", response)
```
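The login body in the spider is assembled by hand, which breaks as soon as a value contains `&`, `=`, or non-ASCII characters. A safer sketch lets the standard library do the URL-encoding; the phone number and password are the same placeholders used in the spider.

```python
from urllib.parse import urlencode

# Placeholder credentials; the field names match the login body in the spider.
form = {"phone": "8615990000000", "password": "xxxxxx", "oneMonth": 1}

body = urlencode(form)  # escapes special characters and joins the pairs with "&"
print(body)  # phone=8615990000000&password=xxxxxx&oneMonth=1
```

Inside Scrapy, `scrapy.FormRequest(url, formdata={...}, callback=...)` performs this encoding and sets the form Content-Type header itself; its `formdata` values should be strings.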

To run this spider, open a cmd terminal in the project directory and run:

```
scrapy crawl chouti          # prints all log output
scrapy crawl chouti --nolog  # suppresses the log output
```

An HTML selector: XPath

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
from scrapy.http import HtmlResponse
from scrapy.selector import Selector

html = """<!DOCTYPE html>
<html>
    <head lang="en">
        <meta charset="UTF-8">
        <title></title>
    </head>
    <body>
        <ul>
            <li class="item-"><a id='i1' href="link.html">first item</a></li>
            <li class="item-0"><a id='i2' href="llink.html">first item</a></li>
            <li class="item-1"><a href="llink2.html">second item<span>vv</span></a></li>
        </ul>
        <div><a href="llink2.html">second item</a></div>
    </body>
</html>
"""
response = HtmlResponse(url='http://example.com', body=html, encoding='utf-8')
sel = Selector(response=response)

print(sel.xpath('//a'))                                         # all a tags
print(sel.xpath('//a[2]'))                                      # a tags that are the 2nd a child of their parent (none here)
print(sel.xpath('//a[@id]'))                                    # a tags with an id attribute
print(sel.xpath('//a[@id="i1"]'))                               # a tags whose id is i1
print(sel.xpath('//a[@href="link.html"][@id="i1"]'))            # both conditions at once
print(sel.xpath('//a[contains(@href, "link")]'))                # href contains "link"
print(sel.xpath('//a[starts-with(@href, "link")]'))             # href starts with "link"
print(sel.xpath(r'//a[re:test(@id, "i\d+")]'))                  # id matches the regex i\d+
print(sel.xpath(r'//a[re:test(@id, "i\d+")]/text()').extract())  # their text
print(sel.xpath(r'//a[re:test(@id, "i\d+")]/@href').extract())   # their href values
print(sel.xpath('/html/body/ul/li/a/@href').extract())          # absolute path
print(sel.xpath('//body/ul/li/a/@href').extract_first())        # first match only

for item in sel.xpath('//body/ul/li'):
    v = item.xpath('./a/span')  # relative to the current li; 'a/span' is equivalent
    print(v)
```
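If Scrapy is not installed, Python's built-in `xml.etree.ElementTree` can try out the simpler path and attribute predicates from the demo above. This is a hedged sketch: ElementTree is an XML parser, so it needs a well-formed variant of the sample markup (assumed below), and it supports only a small XPath subset, with no `contains()`, `starts-with()`, `re:test()`, `/text()`, or `/@href`. Scrapy's selectors run full XPath 1.0 through lxml, plus the EXSLT `re:` functions.

```python
import xml.etree.ElementTree as ET

# A well-formed (XHTML-style) variant of the sample markup.
DOC = """<html><body>
<ul>
  <li class="item-"><a id="i1" href="link.html">first item</a></li>
  <li class="item-0"><a id="i2" href="llink.html">first item</a></li>
  <li class="item-1"><a href="llink2.html">second item<span>vv</span></a></li>
</ul>
<div><a href="llink2.html">second item</a></div>
</body></html>"""

root = ET.fromstring(DOC)  # root is the <html> element

all_links = root.findall(".//a")            # like //a
with_id = root.findall(".//a[@id]")         # like //a[@id]
i1 = root.findall(".//a[@id='i1']")         # like //a[@id="i1"]
in_list = root.findall("./body/ul/li/a")    # like /html/body/ul/li/a
spans = root.findall(".//li/a/span")        # like //li/a/span

print(len(all_links), len(with_id), i1[0].get("href"))  # 4 2 link.html
print([a.get("href") for a in in_list])      # attribute values via .get()
print([s.text for s in spans])               # text via .text
```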

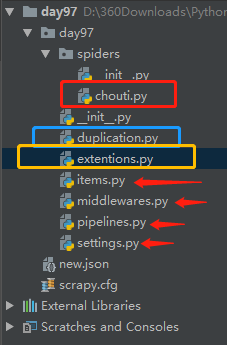

day97: Scrapy framework recap

Command line:

```
scrapy startproject [project name]                     # create the project folder
cd [project name]                                      # change into it
scrapy genspider [spider name] [www.spidername.com]    # create a spider
```

Writing the code:

```python
import scrapy


class ChoutiSpider(scrapy.Spider):
    name = 'chouti'
    allowed_domains = ['dig.chouti.com']
    start_urls = ['https://dig.chouti.com/']
```

a. `name` cannot be omitted.
b. `allowed_domains = ['dig.chouti.com']` lists the allowed domains, so only the Chouti site is crawled; several domains may be listed.
c. `start_urls` holds the URLs visited first.
d. You can override `start_requests` to specify the initial requests:

```python
def start_requests(self):
    for url in self.start_urls:
        yield Request(url, callback=self.parse)
```

e. The response object: `response.url`, `response.text`, `response.body`, and `response.meta`, which carries e.g. `response.meta['depth']`, the current depth (bounded by `DEPTH_LIMIT`).
f. Extracting data:

```python
Selector(response=response).xpath('//div')
Selector(response=response).xpath('//div[@id="username"]')
Selector(response=response).xpath('//div[starts-with(@id, "us")]')  # div tags whose id starts with "us"
Selector(response=response).xpath('//div[re:test(@id, "^us")]')     # the same, with a regular expression
Selector(response=response).xpath('//a[@id]')        # all a tags that have an id attribute
Selector(response=response).xpath('//a/@id')         # the id attribute of every a tag
Selector(response=response).xpath('//a[@href="link.html"][@id="i1"]')  # both conditions: href is link.html and id is i1
Selector(response=response).xpath('//a[contains(@href, "link")]')      # a tags whose href contains "link"
Selector(response=response).xpath('//div/a')

li_list = Selector(response=response).xpath('//div//li')
for li in li_list:
    li.xpath('./a/@href').extract()           # search starting from the current li
    li.xpath('.//a/text()').extract()         # returns a list
    li.xpath('.//a/text()').extract_first()   # first entry, or None if there is none
    li.xpath('.//a/text()').extract()[0]      # first entry (IndexError if the list is empty)
```

g. Follow-up requests; import Request first with `from scrapy.http.request import Request`. The callback must be a callable:

```python
yield Request(url='...', callback=self.parse)
```

h. Yield items, e.g. `yield ChoutiItem(href='...', title='...')`; every keyword must be a field declared in items.py.
i. Items then go to the pipelines:

```python
class Day96Pipeline(object):
    def process_item(self, item, spider):
        if spider.name == "chouti":
            # When the spider is chouti, persist the data to a JSON file
            tpl = "%s %s\n" % (item["href"], item["title"])
            with open("new.json", "a+", encoding="utf8") as f:
                f.write(tpl)
        return item  # hand the item on to the next pipeline
```

Remember to register it in settings.py:

```python
ITEM_PIPELINES = {
    'day96.pipelines.Day96Pipeline': 300,
    # 'day96.pipelines.Day95Pipeline': 400,  # lower values run first (usual range 0-1000)
    # 'day96.pipelines.Day97Pipeline': 200,
}
```

Today's content:

1. URL deduplication

1) In settings.py set `DUPEFILTER_CLASS = "day97.duplication.RepeatFilter"`.
2) Use the `RFPDupeFilter` imported in chouti.py (`from scrapy.dupefilters import RFPDupeFilter`) as the model for a custom deduplication class.
3) Write the `RepeatFilter` class in duplication.py:

```python
class RepeatFilter(object):
    def __init__(self):
        """2. Initialize the object."""
        self.visited_set = set()  # could be swapped for a cache, a database, etc.

    @classmethod
    def from_settings(cls, settings):
        """1. Create the object: Scrapy calls RepeatFilter.from_settings(), which runs __init__()."""
        return cls()

    def request_seen(self, request):
        """4. Check whether the URL has already been visited."""
        if request.url in self.visited_set:
            return True
        self.visited_set.add(request.url)
        return False

    def open(self):  # can return a deferred
        """3. Crawling starts."""
        print('open>>>')

    def close(self, reason):  # can return a deferred
        """5. Crawling stops."""
        print('close<<<')

    def log(self, request, spider):  # log that a request has been filtered
        pass
```

4) Configure settings.py:

```python
DUPEFILTER_CLASS = "day97.duplication.RepeatFilter"
# DUPEFILTER_CLASS = "scrapy.dupefilters.RFPDupeFilter"  # the default
# from scrapy.dupefilters import RFPDupeFilter  # open RFPDupeFilter's source to see the default implementation
```

2. More on pipelines

```python
from scrapy.exceptions import DropItem


class Day97Pipeline(object):
    def __init__(self, v):
        self.value = v

    @classmethod
    def from_crawler(cls, crawler):
        """Called at initialization to create the pipeline object."""
        val = crawler.settings.getint('MMMM')  # read MMMM from settings.py (0 if it is missing)
        return cls(val)

    def open_spider(self, spider):
        """Called when the spider starts."""
        print("spider started >>>")
        self.f = open("new.json", "a+", encoding="utf8")  # could also connect to a database here

    def process_item(self, item, spider):
        if spider.name == "chouti":
            # chouti: persist the data to a JSON file
            tpl = "%s %s\n" % (item["href"], item["title"])
            self.f.write(tpl)
        elif spider.name == "taobao":
            # taobao: persist the data to a database
            pass
        elif spider.name == "cnblogs":
            # cnblogs: render the data into an HTML page
            pass
        # Returning the item hands it to the next pipeline;
        # raise DropItem() instead to discard it
        return item

    def close_spider(self, spider):
        """Called when the spider closes."""
        print("spider stopped <<<")
        self.f.close()
```

Notes:
- Setting names in settings.py must be uppercase; otherwise `crawler.settings.getint('MMMM')` will not find them.
- If `process_item()` raises `DropItem`, the item is discarded; otherwise the returned item continues to the next pipeline.
- The `spider` argument tells you which spider produced the item.

3. The cookies issue

4. Extensions

settings.py:

```python
EXTENSIONS = {
    # 'scrapy.extensions.telnet.TelnetConsole': None,
    'day97.extentions.Myextend': 300,
}
```

extentions.py:

```python
from scrapy import signals


class Myextend:
    def __init__(self, crawler):
        self.crawler = crawler
        # "Hang things on the hooks"; formally: register handlers for the given signals
        crawler.signals.connect(self.start, signals.engine_started)
        crawler.signals.connect(self.close, signals.spider_closed)

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler)

    def start(self):
        print("signals.engine_started...")

    def close(self):
        print("signals.spider_closed...")
```

5. The configuration file

Case study: upvoting on Chouti (no longer works; Chouti has since upgraded its anti-scraping measures)

The spider file chouti.py is identical to the login-and-upvote spider shown earlier, apart from the real phone number and password used to log in.

items.py:

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class ChoutiItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
    href = scrapy.Field()
```

pipelines.py: the Day97Pipeline class from the day97 recap above (the file also imports DropItem from scrapy.exceptions and keeps a commented-out copy of the older Day96Pipeline).

settings.py:

```python
# -*- coding: utf-8 -*-

# Scrapy settings for day97 project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'day97'

SPIDER_MODULES = ['day97.spiders']
NEWSPIDER_MODULE = 'day97.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# (here: pose as a browser by sending a browser User-Agent)
USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3472.3 Safari/537.36"

# Obey robots.txt rules
ROBOTSTXT_OBEY = False  # do not obey robots.txt

# Configure maximum concurrent requests performed by Scrapy (default: 16)
# CONCURRENT_REQUESTS = 32  # number of concurrent requests

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
# DOWNLOAD_DELAY = 2  # wait 2 seconds between downloads
# The download delay setting will honor only one of:
# CONCURRENT_REQUESTS_PER_DOMAIN = 16  # at most 16 concurrent requests per domain
# CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
# COOKIES_ENABLED = True  # keep cookies from responses and send them back (default: True)
# COOKIES_DEBUG = False   # log the cookies sent and received (default: False)

# Disable Telnet Console (enabled by default)
# TELNETCONSOLE_ENABLED = True

# Override the default request headers
# (headers can also be passed per request: yield Request(..., headers=...)):
# DEFAULT_REQUEST_HEADERS = {
#     'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#     'Accept-Language': 'en',
# }

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
# SPIDER_MIDDLEWARES = {
#     'day97.middlewares.Day97SpiderMiddleware': 543,
# }

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
# DOWNLOADER_MIDDLEWARES = {
#     'day97.middlewares.Day97DownloaderMiddleware': 543,
# }

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
EXTENSIONS = {
    # 'scrapy.extensions.telnet.TelnetConsole': None,
    'day97.extentions.Myextend': 300,
}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'day97.pipelines.Day97Pipeline': 300,
    # 'day97.pipelines.Day96Pipeline': 200,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# Adaptive download delay: a fixed delay on every request is easy for a site to spot as a crawler
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
# HTTPCACHE_ENABLED = True
# HTTPCACHE_EXPIRATION_SECS = 0
# HTTPCACHE_DIR = 'httpcache'
# HTTPCACHE_IGNORE_HTTP_CODES = []
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

DEPTH_LIMIT = 1  # maximum crawl depth (0 means no limit)
# DEPTH_PRIORITY = 0  # default 0; positive values favor breadth-first order, negative values depth-first
#                     # (true breadth-first crawling also needs FIFO scheduler queues)
DUPEFILTER_CLASS = "day97.duplication.RepeatFilter"
```
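The AutoThrottle block in settings.py only names the knobs. The Scrapy documentation describes the adjustment roughly as: target delay = response latency / `AUTOTHROTTLE_TARGET_CONCURRENCY`; the next delay is the average of the previous delay and the target; non-200 responses may not lower the delay; and the result stays between `DOWNLOAD_DELAY` and `AUTOTHROTTLE_MAX_DELAY`. The function below is a hypothetical re-statement of those documented rules for experimentation, not the extension's source code, which may differ in details.

```python
def next_delay(prev_delay, latency, status, target_concurrency=1.0,
               min_delay=0.0, max_delay=60.0):
    """Return the next download delay under the documented AutoThrottle rules.

    min_delay stands in for DOWNLOAD_DELAY, max_delay for AUTOTHROTTLE_MAX_DELAY,
    target_concurrency for AUTOTHROTTLE_TARGET_CONCURRENCY.
    """
    target = latency / target_concurrency      # delay that keeps N requests in flight
    new_delay = (prev_delay + target) / 2.0     # move halfway toward the target
    if status != 200 and new_delay < prev_delay:
        new_delay = prev_delay                  # error responses may not speed the crawl up
    return max(min_delay, min(max_delay, new_delay))


delay = 5.0  # AUTOTHROTTLE_START_DELAY
for latency, status in [(1.0, 200), (1.0, 200), (0.5, 503)]:
    delay = next_delay(delay, latency, status)
    print(delay)  # 3.0, then 2.0, then 2.0 (the 503 cannot lower it)
```

Averaging rather than jumping straight to the target keeps the delay from swinging wildly on a single slow or fast response.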

duplication.py: the RepeatFilter class from the day97 recap above.
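RepeatFilter keys on the raw `request.url`, so `?a=1&b=2` and `?b=2&a=1` count as different pages, while two POST requests to the same URL with different bodies count as the same. Scrapy's default `RFPDupeFilter` instead uses a request fingerprint built from the method, a canonicalized URL, and the body. The sketch below imitates that idea with the standard library; `canonicalize`, `fingerprint`, and `FingerprintFilter` are hypothetical names, and it takes plain arguments instead of a Scrapy Request so it runs without Scrapy.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonicalize(url):
    """Sort query parameters and drop the fragment so equivalent URLs compare equal."""
    parts = urlsplit(url)
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", query, ""))


def fingerprint(method, url, body=b""):
    """Hash method + canonical URL + body into a fixed-length key."""
    h = hashlib.sha1()
    h.update(method.upper().encode())
    h.update(canonicalize(url).encode())
    h.update(body)
    return h.hexdigest()


class FingerprintFilter:
    """Same idea as RepeatFilter.request_seen(), but keyed on fingerprints."""

    def __init__(self):
        self.seen = set()

    def request_seen(self, method, url, body=b""):
        fp = fingerprint(method, url, body)
        if fp in self.seen:
            return True
        self.seen.add(fp)
        return False


dupefilter = FingerprintFilter()
print(dupefilter.request_seen("GET", "http://example.com/a?x=1&y=2"))      # False: first visit
print(dupefilter.request_seen("GET", "http://example.com/a?y=2&x=1#top"))  # True: same page
```

Storing a fixed-length hash instead of the URL also keeps the `seen` set compact when it is moved into a cache or database, as the RepeatFilter comment suggests.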

extentions.py: the Myextend extension from the day97 recap above.
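The Myextend extension works by registering handlers on named signals, which the crawler later fires. A toy registry shows the mechanism without Scrapy. Everything here is a hypothetical stand-in: `SignalManager`, `ENGINE_STARTED`, and `SPIDER_CLOSED` only mimic `crawler.signals`, `signals.engine_started`, and `signals.spider_closed`. One difference: Scrapy's dispatcher passes a handler only the arguments it declares (which is why `close(self)` works there), whereas this toy passes every keyword argument.

```python
class SignalManager:
    """Toy signal registry: connect() stores handlers, send() calls them."""

    def __init__(self):
        self._handlers = {}

    def connect(self, handler, signal):
        self._handlers.setdefault(signal, []).append(handler)

    def send(self, signal, **kwargs):
        return [handler(**kwargs) for handler in self._handlers.get(signal, [])]


# Unique sentinel objects standing in for Scrapy's signal objects.
ENGINE_STARTED = object()
SPIDER_CLOSED = object()


class MyExtension:
    def __init__(self, signals):
        self.events = []
        signals.connect(self.start, ENGINE_STARTED)   # register handlers on signals
        signals.connect(self.close, SPIDER_CLOSED)

    def start(self):
        self.events.append("engine_started")

    def close(self, spider, reason):
        self.events.append("spider_closed:%s:%s" % (spider, reason))


signals = SignalManager()
ext = MyExtension(signals)
signals.send(ENGINE_STARTED)                               # what the engine would do on start
signals.send(SPIDER_CLOSED, spider="chouti", reason="finished")
print(ext.events)  # ['engine_started', 'spider_closed:chouti:finished']
```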

Note: setting DEPTH_LIMIT = 1 in settings.py limits how many levels deep the "recursive" crawl goes.
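A small model of the depth limit: start pages have depth 0, each followed link is one level deeper (Scrapy records this as `response.meta['depth']`), and requests deeper than the limit are dropped, with 0 meaning no limit. The link graph, `LINKS`, and `crawl` are hypothetical, and the sketch visits pages breadth-first for readability, which is not Scrapy's default order.

```python
from collections import deque

# Hypothetical link graph: page -> pages it links to.
LINKS = {
    "start": ["a", "b"],
    "a": ["c"],
    "b": ["d"],
    "c": ["e"],
    "d": [],
    "e": [],
}


def crawl(start, depth_limit):
    """Visit pages, dropping any request deeper than depth_limit (0 = no limit)."""
    visited = []
    queue = deque([(start, 0)])  # (page, depth), like response.meta['depth']
    seen = {start}
    while queue:
        page, depth = queue.popleft()
        visited.append(page)
        for link in LINKS.get(page, []):
            if depth_limit and depth + 1 > depth_limit:
                continue  # too deep: drop the request
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


print(crawl("start", 1))  # ['start', 'a', 'b']
print(crawl("start", 2))  # ['start', 'a', 'b', 'c', 'd']
print(crawl("start", 0))  # every page
```

So with `DEPTH_LIMIT = 1`, the start pages and the pages they link to directly are crawled, and nothing further.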

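Structured data handling

For simple persistence, you can handle the data directly in the spider's parse method. For more involved processing, use Scrapy Items to structure the data and hand it to pipelines to be processed in one place.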

```python
import hashlib

import scrapy
from scrapy.http.request import Request

from ..items import XiaoHuarItem


class XiaoHuarSpider(scrapy.Spider):
    # Spider name; used on the command line to start the crawl
    name = "xiaohuar"
    # Allowed domains
    allowed_domains = ["xiaohuar.com"]
    start_urls = [
        "http://www.xiaohuar.com/list-1-1.html",
    ]
    # custom_settings = {
    #     'ITEM_PIPELINES': {
    #         'spider1.pipelines.JsonPipeline': 100,
    #     }
    # }
    has_request_set = {}

    def parse(self, response):
        # Analyze the page: find the content matching our rules (the photos) and save it,
        # then find all the a tags and visit them, going down level by level
        items = response.xpath('//div[@class="item_list infinite_scroll"]/div')
        for item in items:
            src = item.xpath('.//div[@class="img"]/a/img/@src').extract_first()
            name = item.xpath('.//div[@class="img"]/span/text()').extract_first()
            school = item.xpath('.//div[@class="img"]/div[@class="btns"]/a/text()').extract_first()
            url = "http://www.xiaohuar.com%s" % src
            yield XiaoHuarItem(name=name, school=school, url=url)

        # .extract() turns the selectors into plain URL strings
        urls = response.xpath(
            r'//a[re:test(@href, "http://www.xiaohuar.com/list-1-\d+.html")]/@href'
        ).extract()
        for url in urls:
            key = self.md5(url)
            if key not in self.has_request_set:
                self.has_request_set[key] = url
                yield Request(url=url, method='GET', callback=self.parse)

    @staticmethod
    def md5(val):
        ha = hashlib.md5()
        ha.update(bytes(val, encoding='utf-8'))
        return ha.hexdigest()
```

```python
import scrapy


class XiaoHuarItem(scrapy.Item):
    name = scrapy.Field()
    school = scrapy.Field()
    url = scrapy.Field()
```

```python
import json
import os

import requests


class JsonPipeline(object):
    def __init__(self):
        self.file = open('xiaohua.txt', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        v = json.dumps(dict(item), ensure_ascii=False)
        self.file.write(v)
        self.file.write('\n')  # one JSON object per line
        self.file.flush()
        return item

    def close_spider(self, spider):
        self.file.close()


class FilePipeline(object):
    def __init__(self):
        if not os.path.exists('imgs'):
            os.makedirs('imgs')

    def process_item(self, item, spider):
        # requests is blocking: Scrapy's event loop stalls while each image downloads
        response = requests.get(item['url'], stream=True)
        file_name = '%s_%s.jpg' % (item['name'], item['school'])
        with open(os.path.join('imgs', file_name), mode='wb') as f:
            f.write(response.content)
        return item
```

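```python
ITEM_PIPELINES = {
    'spider1.pipelines.JsonPipeline': 100,
    'spider1.pipelines.FilePipeline': 300,
}
# The integer after each entry sets the order they run in: items pass through the
# pipelines from the lowest value to the highest. These values are usually kept in the 0-1000 range.
```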
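The ordering rule can be modeled in a few lines: sort the pipelines by their number, feed each item through them in turn, and stop early when one raises `DropItem`. This is a toy sketch, not Scrapy's item processor; `DropItem` here is a local stand-in for `scrapy.exceptions.DropItem`, and the three pipeline classes and `run_pipelines` are hypothetical.

```python
class DropItem(Exception):
    """Local stand-in for scrapy.exceptions.DropItem so the sketch runs without Scrapy."""


class StripPipeline:
    def process_item(self, item, spider):
        item["name"] = item["name"].strip()
        return item


class RequireSchoolPipeline:
    def process_item(self, item, spider):
        if not item.get("school"):
            raise DropItem("missing school")  # later pipelines never see this item
        return item


class CollectPipeline:
    def __init__(self):
        self.saved = []

    def process_item(self, item, spider):
        self.saved.append(dict(item))
        return item


def run_pipelines(items, pipelines_with_order, spider=None):
    """Pass each item through the pipelines in ascending order value; return the drop count."""
    ordered = [p for _, p in sorted(pipelines_with_order, key=lambda pair: pair[0])]
    dropped = 0
    for item in items:
        try:
            for pipeline in ordered:
                item = pipeline.process_item(item, spider)
        except DropItem:
            dropped += 1
    return dropped


collector = CollectPipeline()
dropped = run_pipelines(
    [{"name": " Alice ", "school": "X"}, {"name": "Bob", "school": ""}],
    [(300, collector), (100, StripPipeline()), (200, RequireSchoolPipeline())],
)
print(collector.saved, dropped)  # [{'name': 'Alice', 'school': 'X'}] 1
```

Listing the pipelines out of order in the call is deliberate: the numbers alone decide that stripping (100) runs before validation (200) and saving (300).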

A custom pipeline with start and end hooks:

```python
from scrapy.exceptions import DropItem


class CustomPipeline(object):
    def __init__(self, v):
        self.value = v

    def process_item(self, item, spider):
        # Process and persist the item here.
        # Returning the item lets later pipelines keep processing it
        return item
        # Raising DropItem discards the item; later pipelines never see it
        # raise DropItem()

    @classmethod
    def from_crawler(cls, crawler):
        """Called at initialization to create the pipeline object."""
        val = crawler.settings.getint('MMMM')
        return cls(val)

    def open_spider(self, spider):
        """Called when the spider starts."""
        print('000000')

    def close_spider(self, spider):
        """Called when the spider closes."""
        print('111111')
```

You can store the data differently depending on spider.name.
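One way to act on `spider.name`, combined with the open/close hooks, is to keep a separate output file per spider. The sketch below is hypothetical (`RoutingPipeline`, `FakeSpider`, and the `.jl` file naming are illustrative assumptions) and uses only the standard library, so the spider is faked with an object that has nothing but a `name`.

```python
import json
import os
import tempfile


class RoutingPipeline:
    """Write each spider's items to its own JSON Lines file, chosen by spider.name."""

    def __init__(self, out_dir):
        self.out_dir = out_dir
        self.files = {}

    def open_spider(self, spider):
        path = os.path.join(self.out_dir, "%s.jl" % spider.name)
        self.files[spider.name] = open(path, "a", encoding="utf-8")

    def process_item(self, item, spider):
        f = self.files[spider.name]
        f.write(json.dumps(dict(item), ensure_ascii=False) + "\n")  # one item per line
        return item

    def close_spider(self, spider):
        self.files.pop(spider.name).close()


class FakeSpider:
    """Minimal stand-in for a Scrapy spider: only .name is used here."""

    def __init__(self, name):
        self.name = name


out_dir = tempfile.mkdtemp()
pipeline = RoutingPipeline(out_dir)
for name, item in [("chouti", {"title": "t1"}), ("cnblogs", {"title": "t2"})]:
    spider = FakeSpider(name)
    pipeline.open_spider(spider)
    pipeline.process_item(item, spider)
    pipeline.close_spider(spider)

print(sorted(os.listdir(out_dir)))  # ['chouti.jl', 'cnblogs.jl']
```

JSON Lines (one object per line) can be appended to safely across runs, unlike a single JSON array that would need rewriting.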