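1. Get the news details from a news URL: a dictionary, anews

2. Get the news URLs from a list-page URL: list.append(dict), alist

3. Generate the URLs of all list pages and collect all the news: list.extend(list), allnews

*Each student crawls 10 list pages, starting from the page number given by the last digits of their student ID.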
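The data flow of steps 1 to 3 can be sketched offline as follows. This is only an illustration under stated assumptions: `FAKE_SITE`, `get_news_detail`, `get_list_page_news`, and `list_page_urls` are hypothetical names, the relative news paths are made up, and real HTTP requests are replaced by a dictionary lookup so that the dict / append / extend pattern is visible without network access. The list-page URL pattern follows the script later in this post.

```python
# Offline sketch of steps 1-3; FAKE_SITE stands in for real HTTP requests.
START_URL = "http://news.gzcc.cn/html/xiaoyuanxinwen/"

# Hypothetical data: list-page URL -> news URLs found on that page.
FAKE_SITE = {
    START_URL + "67.html": ["news/a.html", "news/b.html"],
    START_URL + "68.html": ["news/c.html"],
}

def get_news_detail(news_url):
    """Step 1: one news URL -> one dict (anews)."""
    return {"url": news_url, "title": "title of " + news_url}

def get_list_page_news(list_url):
    """Step 2: one list page -> a list of dicts (alist), built with append."""
    alist = []
    for news_url in FAKE_SITE.get(list_url, []):
        alist.append(get_news_detail(news_url))
    return alist

def list_page_urls(first_page, count=10):
    """Step 3a: URLs of `count` consecutive list pages from first_page on."""
    return [START_URL + str(i) + ".html"
            for i in range(first_page, first_page + count)]

# Step 3b: merge the per-page lists into one flat list with extend.
allnews = []
for list_url in list_page_urls(67, count=10):
    allnews.extend(get_list_page_news(list_url))

print(len(allnews))
```

Using `extend` here keeps `allnews` a flat list of dictionaries; `append` would instead nest one list per page, which pandas would not turn into one row per news item.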

4. Set a reasonable crawl interval

import time
import random

time.sleep(random.random()*3)
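The call above pauses for a random time between 0 and 3 seconds. One way to reuse it between requests is a small helper such as the sketch below. The name `polite_sleep` and its `sleep` parameter are hypothetical; the parameter exists only so the demonstration can swap in a stand-in for `time.sleep` and finish instantly.

```python
import random
import time

def polite_sleep(max_seconds=3.0, sleep=time.sleep):
    """Pause for a random time below max_seconds and return the delay used."""
    delay = random.random() * max_seconds
    sleep(delay)
    return delay

# Demonstration with a stand-in for time.sleep so nothing actually blocks:
# each "sleep" just records the delay it was asked for.
recorded = []
for _ in range(5):
    polite_sleep(3.0, sleep=recorded.append)

print(all(0 <= d <= 3.0 for d in recorded))
```

In a crawler, `polite_sleep()` would be called with its defaults once per fetched page, for example at the end of each loop iteration.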

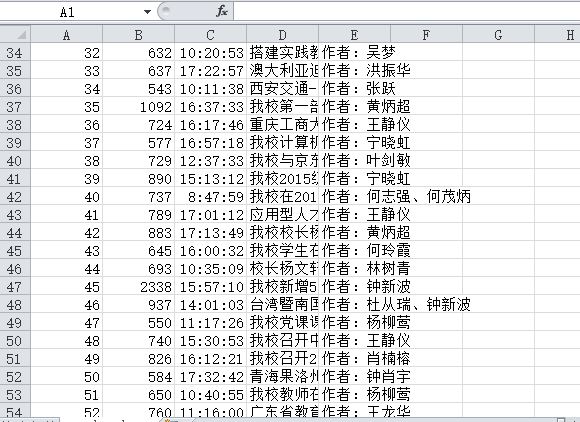

5. Use pandas for simple data processing and save the results

Save to a CSV or Excel file

newsdf.to_csv(r'F:duym爬虫gzccnews.csv')

Save to a database

import sqlite3

with sqlite3.connect('gzccnewsdb.sqlite') as db:
    newsdf.to_sql('gzccnewsdb',db)
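To check what was written, the table can be read back with `pandas.read_sql`. The sketch below is an assumption-laden illustration: it uses an in-memory database and two made-up rows instead of the real `newsdf`, and it passes `index=False` and `if_exists="replace"`, which the original call does not. Note that `with sqlite3.connect(...)` commits or rolls back the transaction but does not close the connection, so `contextlib.closing` is used here to close it explicitly.

```python
import sqlite3
from contextlib import closing

import pandas as pd

# Made-up rows standing in for the crawled news data.
newsdf = pd.DataFrame([
    {"title": "Sample A", "click": 10},
    {"title": "Sample B", "click": 25},
])

# ":memory:" keeps the example self-contained; a file name works the same way.
with closing(sqlite3.connect(":memory:")) as db:
    # if_exists="replace" lets the script be rerun without a "table exists" error.
    newsdf.to_sql("gzccnewsdb", db, index=False, if_exists="replace")
    # Read the table back, most-clicked news first.
    loaded = pd.read_sql("SELECT * FROM gzccnewsdb ORDER BY click DESC", db)

print(loaded)
```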

# -*- coding: utf-8 -*-
import requests
from bs4 import BeautifulSoup
import re
import pandas

# Download a page and parse it into a BeautifulSoup object.
def resToSoup(url):
    res=requests.get(url)
    res.encoding = 'utf-8'
    demo=res.text
    return BeautifulSoup(demo,'lxml')

# Collect the news URLs found on one list page into aUrl.
def getLiUrl(url,aUrl):
    soup=resToSoup(url)
    aTags=soup.select('ul[class="news-list"] a')
    for x in aTags:
        aUrl.append(x.get("href"))

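# Look up the click count of one news item through the count API.
def click(url):
    # The news ID is the last run of digits in the URL.
    news_id = re.findall(r'(\d{1,5})',url)[-1]
    clickUrl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(news_id)
    res = requests.get(clickUrl)
    # Keep the number inside the last .html('...') call of the response.
    count = int(res.text.split('.html')[-1].lstrip("('").rstrip("');"))
    return count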

# Read title, time, writer and click count of every news URL into infoList.
def readInfo(aUrl,infoList):
    for url in aUrl:
        soup=resToSoup(url)
        info = {'title': '', 'time': '', 'writer': '','click':''}
        info['title']=soup.select('div[class="show-title"]')[0].text
        # The info line is split on whitespace: field 1 is the time, field 2 the writer.
        fields=soup.select('div[class="show-info"]')[0].text.split()
        info['time']=fields[1]
        info['writer']=fields[2]
        info['click']=click(url)
        infoList.append(info)

startUrl="http://news.gzcc.cn/html/xiaoyuanxinwen/"
aUrl=[]
infoList=[]

# range(67,78) covers list pages 67 through 77.
for i in range(67,78):
    url=startUrl+str(i)+".html"
    getLiUrl(url,aUrl)

# Fetch the details once, after all news URLs have been collected.
readInfo(aUrl,infoList)
print(infoList)

pandas_date = pandas.DataFrame(infoList)
pandas_date.to_csv(".pandas_date.csv", encoding="utf-8_sig")
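The string handling in `click` can be tried out without any network access. The sketch below is a regex-based alternative, not the script's own method: `news_id_from_url` and `parse_click` are hypothetical helpers, and both the sample news URL and the sample API response are assumptions, shaped like what the script above appears to expect (a numeric ID before `.html`, and a response ending in a `.html('N');` call).

```python
import re

def news_id_from_url(news_url):
    """Return the numeric ID that precedes '.html' at the end of the URL."""
    match = re.search(r"/(\d+)\.html$", news_url)
    if match is None:
        raise ValueError("no numeric ID in " + news_url)
    return match.group(1)

def parse_click(response_text):
    """Return the number inside the last .html('N') call of the response."""
    counts = re.findall(r"\.html\('(\d+)'\)", response_text)
    if not counts:
        raise ValueError("no click count in response")
    return int(counts[-1])

# Hypothetical inputs; the real URL and response formats may differ.
sample_url = "http://news.gzcc.cn/html/2018/xiaoyuanxinwen_0404/9183.html"
sample_response = "$('#todaydowns').html('5');$('#hits').html('123');"

print(news_id_from_url(sample_url), parse_click(sample_response))
```

Raising `ValueError` on unexpected input makes a format change on the site visible immediately, whereas chained `split` and `strip` calls may fail later with a less direct error.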