I. Environment

OS: CentOS 6.5

JDK: 1.8

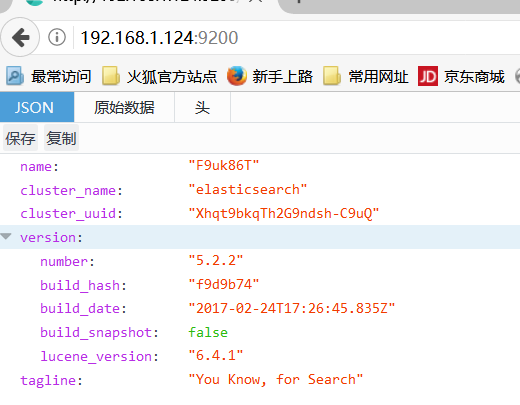

Elasticsearch-5.2.2

Logstash-5.2.2

kibana-5.2.2

II. Installation

1. Install the JDK

Download the JDK: http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

This environment uses the 64-bit tar.gz package. Copy the package to /usr/local on the target server.

[root@server ~]# cd /usr/local/

[root@localhost local]# tar -xzvf jdk-8u111-linux-x64.tar.gz

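Configure the environment variables

[root@localhost local]# vim /etc/profile

Append the following to the end of the file. (If the server needs several JDK versions, you can instead add these variables to the ELK startup scripts later, so that ELK does not affect other systems.)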

JAVA_HOME=/usr/local/jdk1.8.0_111

JRE_HOME=/usr/local/jdk1.8.0_111/jre

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$PATH:$JAVA_HOME/bin

export JAVA_HOME

export JRE_HOME

export CLASSPATH

export PATH

ulimit -u 4096
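If this step may be re-run (for example when the installation is scripted), appending the lines blindly would duplicate them in /etc/profile. A minimal sketch of an idempotent append, assuming bash and GNU grep; append_once is a hypothetical helper name, not part of any tool:

```shell
# append_once FILE LINE: append LINE to FILE only if an identical line is not already there.
# Hypothetical helper; makes the /etc/profile step safe to repeat.
append_once() {
  local file=$1 line=$2
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet; a missing file counts as "not found"
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Usage (as root). Single quotes keep $PATH and $JAVA_HOME unexpanded so they are written literally:
#   append_once /etc/profile 'export JAVA_HOME=/usr/local/jdk1.8.0_111'
#   append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
```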

[root@server local]# source /etc/profile
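After sourcing the profile it is worth confirming that the java found on PATH is the new JDK. A small sketch that extracts the version string from java -version output; java_version is a hypothetical helper name, and it assumes the usual first line format (java version "..." or openjdk version "..."):

```shell
# java_version: read `java -version` output on stdin and print the quoted version string.
# Elasticsearch and Logstash 5.x require Java 8, i.e. a version starting with 1.8.
java_version() {
  # First line looks like: java version "1.8.0_111"  (OpenJDK prints: openjdk version "...")
  sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Usage: java -version writes to stderr, so redirect it into the pipe:
#   java -version 2>&1 | java_version      # expected here: 1.8.0_111
#   echo "$JAVA_HOME"                      # expected here: /usr/local/jdk1.8.0_111
```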

Configure the resource limits

[root@server local]# vim /etc/security/limits.conf

Add the following:

* soft nproc 65536

* hard nproc 65536

* soft nofile 65536

* hard nofile 65536
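The limits.conf entries only take effect for new login sessions. A sketch for checking the effective values as the elasticsearch user after logging in again; check_limit is a hypothetical helper. The thresholds (65536 open files, 2048 processes/threads) are the minimums that the Elasticsearch 5.x bootstrap checks enforce when it binds to a non-loopback address such as 192.168.1.124. On CentOS 6 a file under /etc/security/limits.d/ (usually 90-nproc.conf) can lower the soft nproc limit again, which a check like this would reveal.

```shell
# check_limit NAME CURRENT REQUIRED: report whether a resource limit meets a minimum.
# Returns non-zero when the limit is too low; "unlimited" always passes.
check_limit() {
  local name=$1 current=$2 required=$3
  if [ "$current" = "unlimited" ] || [ "$current" -ge "$required" ]; then
    echo "OK  $name=$current (>= $required)"
  else
    echo "LOW $name=$current (< $required)"
    return 1
  fi
}

# Usage, in a fresh login shell of the elasticsearch user:
#   check_limit nofile "$(ulimit -n)" 65536
#   check_limit nproc  "$(ulimit -u)" 2048
```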

[root@server local]# vim /etc/sysctl.conf

Add:

vm.max_map_count=262144

[root@server local]# sysctl -p
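Editing /etc/sysctl.conf by hand can leave duplicate or stale vm.max_map_count lines. A sketch of an update-or-append helper, assuming GNU sed (sed -i) as shipped with CentOS; set_kv is a hypothetical name. For reference, sysctl -w vm.max_map_count=262144 applies the value immediately without reading the file.

```shell
# set_kv FILE KEY VALUE: make FILE contain "KEY=VALUE", replacing an existing KEY line
# (with or without spaces around "=") or appending one if the key is absent.
set_kv() {
  local file=$1 key=$2 value=$3
  if grep -q "^[[:space:]]*${key}[[:space:]]*=" "$file" 2>/dev/null; then
    # Use "|" as the sed delimiter so values containing "/" are safe.
    sed -i "s|^[[:space:]]*${key}[[:space:]]*=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Usage (as root):
#   set_kv /etc/sysctl.conf vm.max_map_count 262144 && sysctl -p
#   sysctl -n vm.max_map_count          # should print 262144
```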

Create the user that runs ELK

[root@server local]# groupadd elasticsearch

[root@server local]# useradd -g elasticsearch elasticsearch

[root@server local]# mkdir /opt/elk

[root@server local]# chown elasticsearch. /opt/elk -R

2. Install ELK

All of the following steps are performed as the elasticsearch user.

Log in to the server as the elasticsearch user:

[root@server ~]# su - elasticsearch

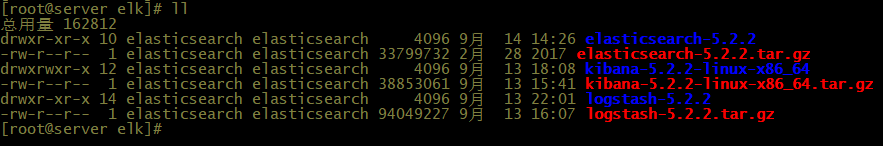

Download the ELK packages from https://www.elastic.co/downloads, upload them to /opt/elk on the server and extract them with: tar -xzvf <package name>

Kibana 5.2.2 download URL: https://artifacts.elastic.co/downloads/kibana/kibana-5.2.2-linux-x86_64.tar.gz

Elasticsearch download URL: https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.2.2.tar.gz

Logstash download URL: https://artifacts.elastic.co/downloads/logstash/logstash-5.2.2.tar.gz
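The three URLs above follow one naming pattern, so the downloads can be scripted. A sketch, assuming the same artifacts.elastic.co naming holds for the version you pass (it is only taken from the 5.2.2 URLs listed here) and that wget is installed; elk_urls is a hypothetical helper:

```shell
# elk_urls VERSION: print the Elasticsearch, Logstash and Kibana tarball URLs for VERSION,
# following the 5.2.2 naming pattern (Kibana's file name carries the platform suffix).
elk_urls() {
  local v=$1 base=https://artifacts.elastic.co/downloads
  echo "$base/elasticsearch/elasticsearch-$v.tar.gz"
  echo "$base/logstash/logstash-$v.tar.gz"
  echo "$base/kibana/kibana-$v-linux-x86_64.tar.gz"
}

# Usage, as the elasticsearch user:
#   cd /opt/elk
#   for url in $(elk_urls 5.2.2); do wget "$url" && tar -xzf "${url##*/}"; done
```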

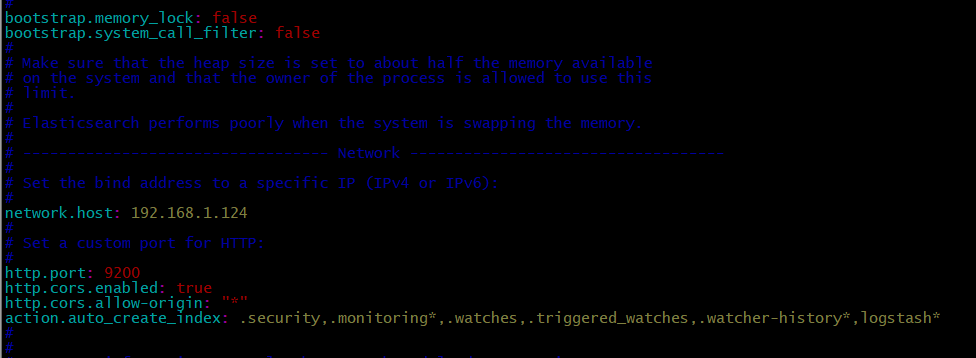

Configure Elasticsearch (single node)

[root@server elk]# cd elasticsearch-5.2.2

[root@server elasticsearch-5.2.2]# vim config/elasticsearch.yml

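Add the following (bootstrap.system_call_filter: false is needed on CentOS 6, whose kernel does not support the system call filter that Elasticsearch 5.x tries to install at startup):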

network.host: 192.168.1.124

http.port: 9200

bootstrap.system_call_filter: false

http.cors.enabled: true

http.cors.allow-origin: "*"

action.auto_create_index: .security,.monitoring*,.watches,.triggered_watches,.watcher-history*,logstash*

By default Elasticsearch starts with a 2 GB heap. For a test environment, lower it in the JVM options file:

[root@server elasticsearch-5.2.2]# vim config/jvm.options

Change -Xms2g to -Xms256m

Change -Xmx2g to -Xmx256m
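The same edit can be made non-interactively. A sketch assuming GNU sed; set_es_heap is a hypothetical helper. Both values should stay equal: because network.host is a non-loopback address, Elasticsearch 5.x enforces its bootstrap checks, and one of them refuses to start when the initial and maximum heap sizes differ.

```shell
# set_es_heap FILE SIZE: set both -Xms and -Xmx in a jvm.options file to SIZE (e.g. 256m).
# Only lines that start with -Xms/-Xmx are changed; commented lines are left alone.
set_es_heap() {
  local file=$1 size=$2
  sed -i -e "s/^-Xms.*/-Xms${size}/" -e "s/^-Xmx.*/-Xmx${size}/" "$file"
}

# Usage, from /opt/elk/elasticsearch-5.2.2:
#   set_es_heap config/jvm.options 256m
```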

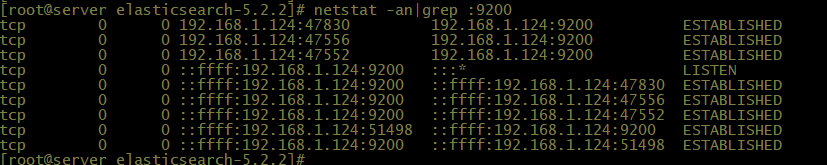

Start Elasticsearch (-d runs it in the background as a daemon):

[root@server elasticsearch-5.2.2]# ./bin/elasticsearch -d
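Because -d returns immediately, a script that continues with the next steps has to wait until the HTTP port answers. A sketch using curl; wait_for_http is a hypothetical helper, and the address is the network.host/http.port configured above. If it times out, the log under logs/ in the Elasticsearch directory usually explains why (for example a failed bootstrap check).

```shell
# wait_for_http URL [TIMEOUT_SECONDS]: poll URL about once per second until it answers,
# giving up after roughly TIMEOUT_SECONDS (default 60). Any HTTP response counts as "up";
# only connection failures keep it waiting.
wait_for_http() {
  local url=$1 timeout=${2:-60} waited=0
  until curl -s -o /dev/null --max-time 2 "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "no response from $url after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$url is responding"
}

# Usage:
#   wait_for_http http://192.168.1.124:9200 60 && curl 'http://192.168.1.124:9200/_cluster/health?pretty'
```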

Note: to browse the cluster in a web UI after startup, you can install the head plugin by following this article:

http://www.cnblogs.com/valor-xh/p/6293485.html?utm_source=itdadao&utm_medium=referral

Install Logstash

[root@server logstash-5.2.2]# pwd

/opt/elk/logstash-5.2.2

[root@server logstash-5.2.2]# mkdir config.d

[root@server logstash-5.2.2]# cd config.d

[root@server config.d]# vim nginx_accss.conf

input {

file {

path => [ "/usr/local/nginx/logs/access.log" ]

start_position => "beginning"

ignore_older => 0

type => "nginx-access"

}

}

filter {

if [type] == "nginx-access" {

grok {

match => [

"message","%{IPORHOST:clientip} %{NGUSER:ident} %{NGUSER:auth} \[%{HTTPDATE:timestamp}\] \"%{WORD:verb} %{URIPATHPARAM:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent} %{NOTSPACE:http_x_forwarded_for}"

]

}

urldecode {

all_fields => true

}

date {

locale => "en"

match => ["timestamp" , "dd/MMM/YYYY:HH:mm:ss Z"]

}

geoip {

source => "clientip"

target => "geoip"

database => "/opt/elk/logstash-5.2.2/data/GeoLite2-City.mmdb"

add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

}

mutate {

convert => [ "[geoip][coordinates]", "float" ]

convert => [ "response","integer" ]

convert => [ "bytes","integer" ]

replace => { "type" => "nginx_access" }

remove_field => "message"

}

}

}

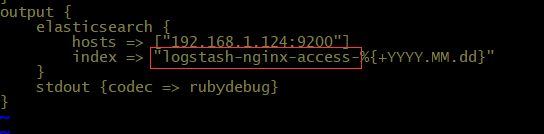

output {

elasticsearch {

hosts => ["192.168.1.124:9200"]

index => "logstash-nginx-access-%{+YYYY.MM.dd}"

}

stdout {codec => rubydebug}

}

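Brief explanation:

A Logstash configuration file is organized into three sections (input and output are required; filter is optional):

input{}: collects the logs. It can read from files, from Redis/Kafka, or open a port so that the applications producing the logs write to Logstash directly.

filter{}: parses and filters the collected events and defines which fields they carry after filtering.

output{}: sends the filtered events to Elasticsearch, or to files, Redis and so on.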
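To see the three sections in isolation before running the nginx pipeline, a minimal pipeline that reads lines from standard input, adds one field and prints each event with rubydebug can be generated and tested. A sketch; minimal_pipeline, the added "source" field and /tmp/minimal.conf are hypothetical names chosen for illustration:

```shell
# minimal_pipeline: print a minimal three-section Logstash pipeline (stdin -> mutate -> stdout).
minimal_pipeline() {
  cat <<'EOF'
input {
  stdin { }
}
filter {
  mutate { add_field => { "source" => "manual-test" } }
}
output {
  stdout { codec => rubydebug }
}
EOF
}

# Usage:
#   minimal_pipeline > /tmp/minimal.conf
#   /opt/elk/logstash-5.2.2/bin/logstash -t -f /tmp/minimal.conf   # syntax check only
#   /opt/elk/logstash-5.2.2/bin/logstash -f /tmp/minimal.conf       # type a line, Ctrl+C to stop
```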

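For more on the Logstash configuration, see the article "ELK系统分析Nginx日志并对数据进行可视化展示" (Analyzing Nginx logs with ELK and visualizing the data).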

Test the configuration file for errors:

/opt/elk/logstash-5.2.2/bin/logstash -t -f /opt/elk/logstash-5.2.2/config.d/nginx_accss.conf

Start Logstash:

nohup /opt/elk/logstash-5.2.2/bin/logstash -f /opt/elk/logstash-5.2.2/config.d/nginx_accss.conf &

Check whether it started successfully:

tail -f nohup.out
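Instead of watching tail -f by eye, a script can wait for a startup message. A sketch; wait_for_log is a hypothetical helper, and the pattern used below is an assumption: Logstash 5.x normally logs a line containing "Successfully started Logstash API endpoint" once it is up, but compare with the output in the screenshot for your version.

```shell
# wait_for_log FILE PATTERN [TIMEOUT_SECONDS]: wait until a line matching PATTERN
# (grep basic regex) appears in FILE, then print the last matching line.
wait_for_log() {
  local file=$1 pattern=$2 timeout=${3:-120} waited=0
  until grep -q -- "$pattern" "$file" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "'$pattern' not found in $file after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  grep -- "$pattern" "$file" | tail -n 1
}

# Usage, in the directory where nohup was started:
#   wait_for_log nohup.out 'Successfully started Logstash API endpoint' 120
#   curl -s 'http://localhost:9600/?pretty'    # Logstash API endpoint, localhost:9600 by default
```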

Output like the following means Logstash started successfully:

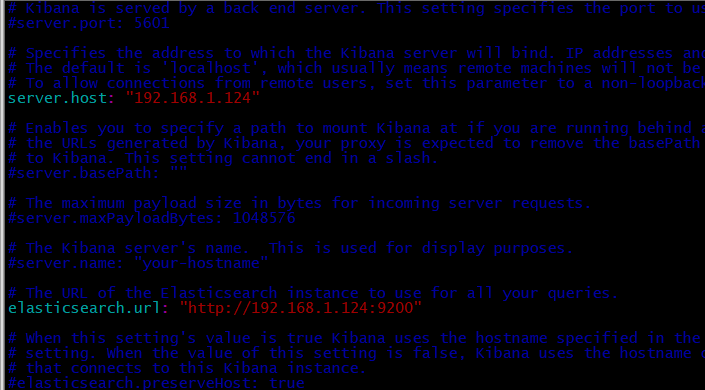

Install Kibana

Edit config/kibana.yml in the Kibana directory, then save and exit.
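A common minimal edit for this setup, offered here as an assumption rather than the author's exact configuration, is to make Kibana listen on the server address and point it at the Elasticsearch instance configured above, using the Kibana 5.x settings server.host and elasticsearch.url. A sketch of a helper that sets a key in kibana.yml, replacing the commented-out default line if present; set_yml_value is hypothetical and assumes GNU sed. Kibana listens on port 5601 by default, so the UI would then be at http://192.168.1.124:5601.

```shell
# set_yml_value FILE KEY VALUE: set "KEY: VALUE" in a flat YAML file such as kibana.yml.
# An existing line for KEY, commented out ("#KEY:") or not, is replaced; otherwise one is appended.
set_yml_value() {
  local file=$1 key=$2 value=$3
  if grep -q "^#\{0,1\}[[:space:]]*${key}:" "$file" 2>/dev/null; then
    # "|" as the sed delimiter keeps URLs containing "/" safe.
    sed -i "s|^#\{0,1\}[[:space:]]*${key}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage, from the Kibana directory (values assume the addresses used in this guide):
#   set_yml_value config/kibana.yml server.host '"192.168.1.124"'
#   set_yml_value config/kibana.yml elasticsearch.url '"http://192.168.1.124:9200"'
```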

Start Kibana:

nohup ./bin/kibana &

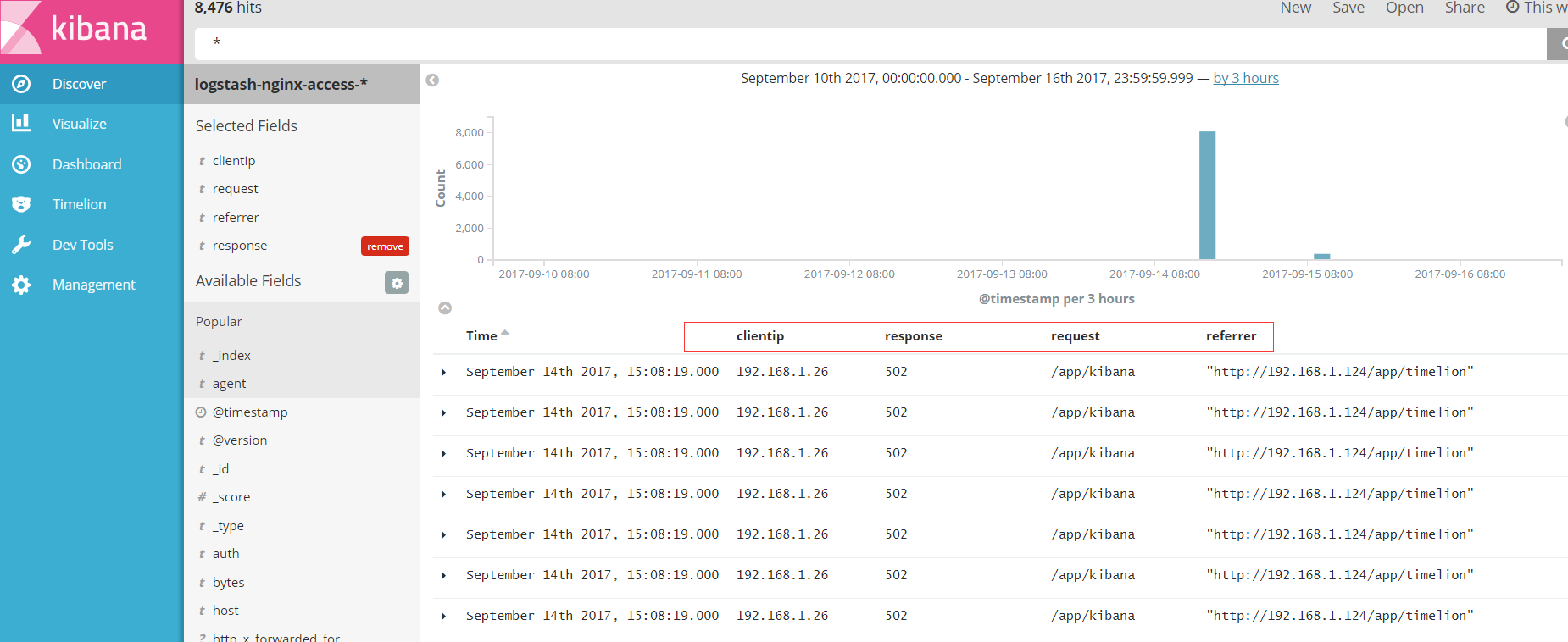

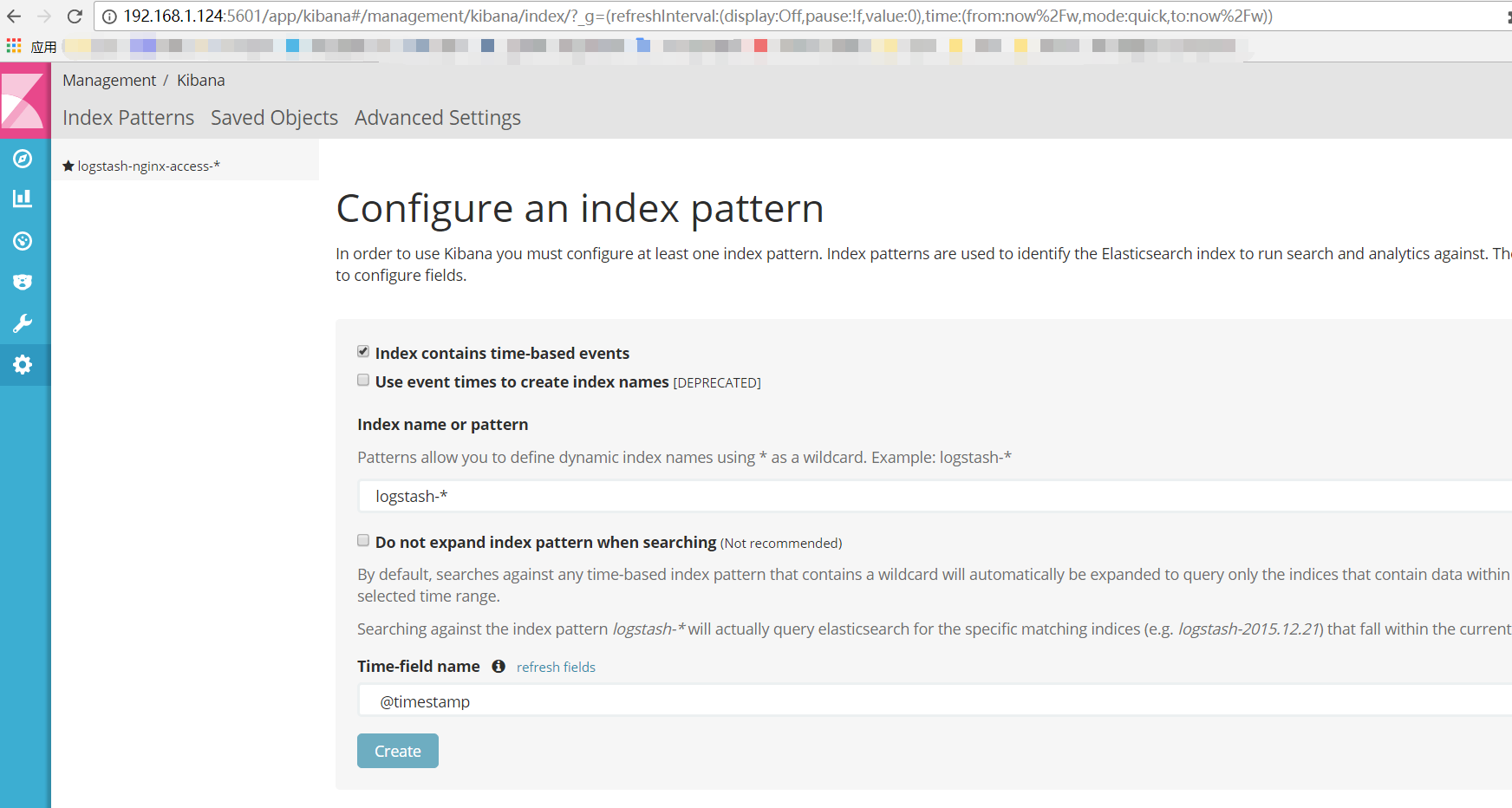

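Here, logstash-nginx-access- comes from the index setting (index => "logstash-nginx-access-%{+YYYY.MM.dd}") in the output section of the Logstash configuration.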

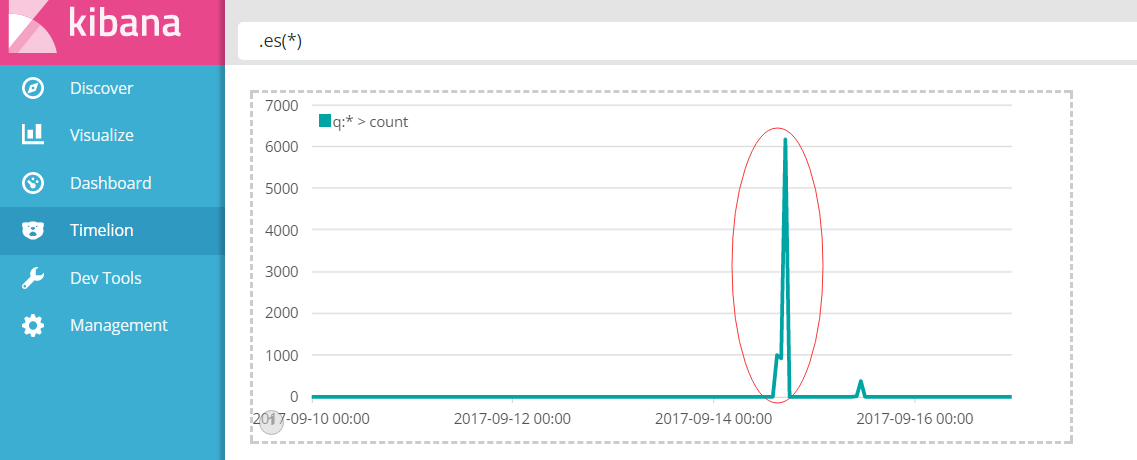

This shows the number of log entries collected in real time.
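To cross-check this count in Elasticsearch itself, the daily index name produced by index => "logstash-nginx-access-%{+YYYY.MM.dd}" can be computed and queried with the _count API. Logstash formats that date from the event's @timestamp, which is in UTC, so in UTC+8 (China Standard Time) a new daily index starts at 08:00 local time. A sketch assuming GNU date; daily_index is a hypothetical helper:

```shell
# daily_index [YYYY-MM-DD]: print the index name that "logstash-nginx-access-%{+YYYY.MM.dd}"
# yields for the given UTC day (default: the current UTC day).
daily_index() {
  if [ -n "$1" ]; then
    date -u -d "$1" +logstash-nginx-access-%Y.%m.%d
  else
    date -u +logstash-nginx-access-%Y.%m.%d
  fi
}

# Usage:
#   curl "http://192.168.1.124:9200/$(daily_index)/_count?pretty"
#   curl 'http://192.168.1.124:9200/_cat/indices/logstash-nginx-access-*?v'
```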