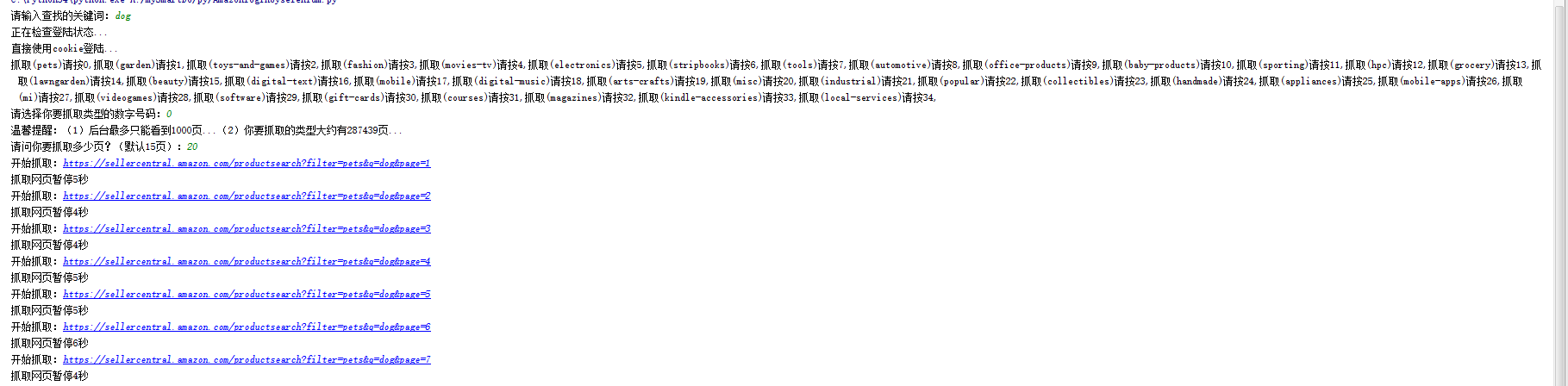

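This article uses the selenium library on Python 3.4 to open a browser, log in, and save the browser's login cookie to a local file. Later runs send that saved cookie with requests, so they can skip the login step: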

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-

import os
import random
import re
import sys
import time

import requests
import xlsxwriter
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.common.by import By


# List files in a folder whose names end with `prefix` (default .xml); optionally recurse
def listfiles(rootdir, prefix='.xml', iscur=False):
    file = []
    for parent, dirnames, filenames in os.walk(rootdir):
        if parent == rootdir:
            for filename in filenames:
                if filename.endswith(prefix):
                    file.append(filename)
            if not iscur:
                return file
        elif iscur:
            for filename in filenames:
                if filename.endswith(prefix):
                    file.append(filename)
    return file


# Extract the ASIN that follows /dp/ in a product URL,
# stopping at the next '/', '?' or '&' so trailing ref/query parts are dropped
def getdp(string):
    return re.search(r'/dp/([^/?&]+)', string).group(1)


# Extract the filter (category) parameter from a search URL, e.g.
# https://sellercentral.amazon.com/productsearch?filter=grocery&q=fish -> "grocery"
def getfilter(string):
    return re.search(r'filter=([^&]+)', string).group(1)


# Extract the product count shown in parentheses after a category name
# (assumed format like "Grocery (1,234)"); commas are removed
def getpagenum(string):
    return re.search(r'\(([\d,]+)\)', string).group(1).replace(",", "")


# Create a folder (including parent folders) if it does not exist yet
def createjia(path):
    os.makedirs(path, exist_ok=True)


# Format a duration in seconds as days/hours/minutes/seconds
def timetochina(longtime, formats='{} days {} hours {} minutes {} seconds'):
    if longtime < 0:
        raise ValueError('Invalid duration')
    minute, second = divmod(longtime, 60)
    hour, minute = divmod(minute, 60)
    day, hour = divmod(hour, 24)
    return formats.format(int(day), int(hour), int(minute), round(second, 2))


# Local file that stores the cookie for the chosen marketplace
def cookiepath(url):
    if "jp" in url:
        return "../data/Japcookie.txt"
    return "../data/Amecookie.txt"


# Fill in the login form and click "Sign in"
def enter_credentials(browser):
    # Inputs with id="ap_email" and id="ap_password"; clear them first
    email_box = browser.find_element(By.ID, "ap_email")
    password_box = browser.find_element(By.ID, "ap_password")
    email_box.clear()
    password_box.clear()
    email_box.send_keys(input("Enter account: "))
    password_box.send_keys(input("Enter password: "))
    # Sign-in button, id="signInSubmit"
    browser.find_element(By.ID, "signInSubmit").click()


# Open a browser, log in, and save the login cookie to a local file
def openbrowser(url):
    # Open Chrome; webdriver.Firefox() is the Firefox equivalent
    browser = webdriver.Chrome()
    # Older Selenium releases also accepted an explicit driver path:
    # browser = webdriver.Chrome(executable_path='C:/Python34/chromedriver.exe')
    browser.get(url)
    # Optionally give the browser time to open:
    # time.sleep(10)

    enter_credentials(browser)
    print("Waiting for the page to finish loading...")

    select = input("Check the browser: are you logged in? (y/n): ")
    while True:
        if select in ("y", "Y"):
            print("Login succeeded!")
            # Serialize cookies as name:value pairs joined by ';'
            cookie = [item["name"] + ":" + item["value"] for item in browser.get_cookies()]
            cookiestr = ';'.join(cookie)
            print("Copying the page cookie...")

            # Write the cookie to a local txt file
            path = cookiepath(url)
            createjia(os.path.dirname(path))
            with open(path, "w", encoding="utf-8") as filecookie:
                filecookie.write(cookiestr)

            time.sleep(1)
            print("Closing the browser...")
            browser.quit()
            return cookiestr

        elif select in ("n", "N"):
            selectno = input("Press 0 if the account/password was wrong, 1 if a captcha appeared: ")
            if selectno == "0":
                # Wrong credentials: enter them again
                enter_credentials(browser)
            elif selectno == "1":
                # The captcha box has id="ap_captcha_guess"
                input("Enter the captcha in the browser and log in, then press Enter...")
            # Ask again after retrying
            select = input("Check the browser: are you logged in? (y/n): ")

        else:
            print('Please enter "y" or "n"!')
            select = input("Check the browser: are you logged in? (y/n): ")


# Fetch a page with requests, sending the saved cookie
def gethtml(url):
    # Read the cookie file into a dict
    try:
        with open(cookiepath(url), "r", encoding="utf-8") as filecookie:
            text = filecookie.read()
    except OSError:
        text = ""
    mycookie = {}
    for items in text.split(";"):
        if items:
            # Split at the first ':' only, since cookie values may contain ':'
            name, _, value = items.partition(":")
            mycookie[name] = value
    if not mycookie:
        print("Cookie is empty...")

    if "jp" in url:
        referer = "https://sellercentral.amazon.co.jp/"
    else:
        referer = "https://sellercentral.amazon.com/"

    # Request headers. Host is left for requests to derive from the URL, and "br"
    # is omitted because requests only decodes Brotli when a Brotli package is installed.
    header = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64; rv:49.0) Gecko/20100101 Firefox/49.0',
        'Referer': referer,
        'Accept-Language': 'zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3',
        'Connection': 'keep-alive',
        'Upgrade-Insecure-Requests': '1',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Encoding': 'gzip, deflate'
    }

    htmlget = requests.get(url=url, headers=header, cookies=mycookie, timeout=60)
    return htmlget.content.decode("UTF-8", "ignore")


# Parse one search-results page and collect product rows
def getinfo(html, Loginurl):
    soups = BeautifulSoup(html, "html.parser")
    # Product boxes
    sellyours = soups.find_all("div", attrs={"class": "a-box product"})
    information = []
    for item in sellyours:
        # First filter: products that have a "Sell yours" button
        temp = item.find("a", attrs={"class": "a-button-text"})
        if temp is None or "sellYoursClick" not in temp.get("data-csm", ""):
            continue
        # Second filter: products without an offer count (no number, no new offers)
        if item.find("span", attrs={"class": "offerCountDetails"}) is not None:
            continue
        temp = item.find("div", attrs={"class": "a-fixed-right-grid-col description a-col-left"})
        if temp is None or temp.find('a') is None:
            continue

        # Detail-page URL
        hrefurl = temp.find('a').get('href')
        # All text in this block: title, UPC, EAN, Rank
        spans = temp.get_text()
        temparr = spans.strip().split(" ")
        # Extract the ASIN with a regex
        temparr.append(getdp(hrefurl))
        temparr.append(hrefurl)

        # Append a copy to a txt file so nothing is lost if the program stops
        txtcontent = ' '.join(temparr)
        filename = time.strftime('%Y%m%d', time.localtime())
        path = "../xls/" + filename
        createjia(path)
        with open(path + "/" + filename + ".txt", "a", encoding="utf-8") as file:
            file.write(" " + txtcontent)

        # Open the detail page; if it has no price block, record the star rating
        # and review count, then store the row for the Excel file
        htmldetail = gethtml(hrefurl)
        if 'id="words"' in htmldetail or 'ap_email' in htmldetail or "Amazon.com Page Not Found" in htmldetail:
            print("Crawling too fast! Need to log in again...")
            openbrowser(Loginurl)
            htmldetail = gethtml(hrefurl)

        detail = BeautifulSoup(htmldetail, "html.parser")
        # The centerCol block of the detail page
        centerCols = detail.find_all('div', attrs={'id': "centerCol"})
        if centerCols:
            for col in centerCols:
                if col.find("td", attrs={"id": "priceblock_ourprice_lbl"}) is None:
                    # Star rating and number of reviews (empty string if missing)
                    star = col.find("a", attrs={"id": "reviewStarsLinkedCustomerReviews"})
                    reviews = col.find("span", attrs={"id": "acrCustomerReviewText"})
                    temparr.append(star.get_text().strip().replace(" out of 5 stars", "") if star else "")
                    temparr.append(reviews.get_text().strip().replace(" customer reviews", "") if reviews else "")
                    information.append(temparr)
                    print(information)
        else:
            temparr.append("")
            temparr.append("")
            information.append(temparr)
            print(information)
    return information


def begin():
    taoyanbai = '''
    -----------------------------------------
    |    Welcome to the backend crawler     |
    |    Date: October 21, 2016             |
    |    By: Technical Department           |
    -----------------------------------------
    '''
    print(taoyanbai)


if __name__ == "__main__":
    begin()
    # time.clock() was removed in Python 3.8; perf_counter() measures elapsed time
    a = time.perf_counter()

    # Choose the marketplace
    while True:
        try:
            LoginWhere = int(input("Press 0 to crawl the US site, 1 for Japan: "))
        except ValueError:
            LoginWhere = -1
        if LoginWhere == 0:
            Loginurl = "https://sellercentral.amazon.com/"
            break
        elif LoginWhere == 1:
            Loginurl = "https://sellercentral.amazon.co.jp/"
            break
        print("Please enter 0 or 1!!")

    keywords = input("Enter the search keyword: ")
    keyword = keywords.replace(" ", "+")

    print("Checking login status...")
    seekurl = Loginurl + "productsearch?q=" + keyword

    try:
        htmlpage = gethtml(seekurl)
    except Exception as err:
        print(err)
        input("The network seems to have a problem...")
        sys.exit()

    # A login form or a 404 page means the saved cookie has expired
    while 'ap_email' in htmlpage or "Amazon.com Page Not Found" in htmlpage or "<title>404" in htmlpage:
        print("Cookie has expired, need to log in again...")
        print("Waiting for the page to open...")
        openbrowser(Loginurl)
        htmlpage = gethtml(seekurl)
    print("Logging in directly with the cookie...")

    soups = BeautifulSoup(htmlpage, "html.parser")
    # Category links and their product counts
    categorys = soups.find_all('ul', attrs={'class': "a-nostyle a-vertical"})
    categoryurl = []
    categoryname = ""
    pagenum = []
    filtername = []

    for item in categorys:
        for temp in item.find_all("a"):
            categoryurl.append(temp.get('href'))
        for temp in item.find_all("span", attrs={"class": "a-color-tertiary"}):
            pagenum.append(getpagenum(temp.get_text()))
    for i in range(len(categoryurl)):
        name = getfilter(categoryurl[i])
        filtername.append(name)
        categoryname = categoryname + "press " + str(i) + " for (" + str(name) + "); "

    # Choose the category to crawl
    while True:
        print(categoryname)
        try:
            selectcategory = int(input("Enter the number of the category to crawl: "))
        except ValueError:
            selectcategory = -1
        if 0 <= selectcategory < len(filtername):
            break
        print("Please enter one of the numbers above!!!")

    category_filter = filtername[selectcategory]
    mustpage = int(pagenum[selectcategory]) // 10

    try:
        print("Note: (1) the backend shows at most 1000 pages; (2) this category has about " + str(mustpage) + " pages.")
        page = int(input("How many pages do you want to crawl? (default 15): "))
        if page > 1000:
            print("The backend shows at most 1000 pages!!!")
            page = int(input("This category has about " + str(mustpage) + " pages. How many pages? (default 15): "))
    except ValueError:
        page = 15
    page = min(page, 1000)

    # Collected rows
    information = []

    for i in range(page):
        temparr = []  # reset so a failed page does not re-add the previous page's rows
        try:
            # e.g. https://sellercentral.amazon.co.jp/productsearch?filter=sporting&q=空気入れ&page=2
            #      https://sellercentral.amazon.com/productsearch?filter=pets&q=dog
            openurl = (Loginurl + "productsearch?filter=" + category_filter
                       + "&q=" + keyword + "&page=" + str(i + 1))
            print("Start crawling: " + openurl)
            openhtml = gethtml(openurl)

            if 'ap_email' in openhtml or "Amazon.com Page Not Found" in openhtml:
                print("Crawling too fast! Need to log in again...")
                openbrowser(Loginurl)
                openhtml = gethtml(openurl)

            # find_all returns a list (never None); an empty list means we are past the last page
            sellyours = BeautifulSoup(openhtml, "html.parser").find_all('div', attrs={'class': "product"})
            if not sellyours:
                print("Reached the last page...")
                break
            temparr = getinfo(openhtml, Loginurl)
        except Exception as err:
            print(err)
            print("A small error occurred while crawling...")
            print("Pausing 20 seconds to log the bug and try to recover...")
            time.sleep(20)

        # getinfo returns one row per product, so keep all of them
        information.extend(temparr)
        loadtime = random.randint(5, 10)
        print("Pausing " + str(loadtime) + " seconds to avoid anti-crawler limits...")
        time.sleep(loadtime)

    print("Collected rows:")
    print(information)

    # Write to Excel in a dated folder
    filename = time.strftime('%Y%m%d', time.localtime())
    path = "../xls/" + filename
    createjia(path)

    timename = time.strftime('%Y%H%M%S', time.localtime())
    # The with-block closes the workbook automatically
    with xlsxwriter.Workbook(path + "/" + timename + '.xlsx') as workbook:
        worksheet = workbook.add_worksheet()

        first = ['title', 'UPC', 'EAN', 'Rank', 'Nothing', 'ASIN', 'DetailUrl', 'Star', 'Reviews']
        # Header row
        for i, title in enumerate(first):
            worksheet.write(0, i, title)
        # Data rows, with label text stripped
        for m, row in enumerate(information):
            for n, value in enumerate(row):
                insert = (str(value).replace("UPC: ", "").replace("EAN: ", "")
                          .replace("Sales Rank:", "").replace("customer reviews", "")
                          .replace("out of 5 stars", ""))
                worksheet.write(m + 1, n, insert)

    b = time.perf_counter()
    print('Run time: ' + timetochina(b - a))
    input('Press Enter to close the window')  # keeps the window open so the run time stays visible
```
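The cookie reuse in the script boils down to a serialize/parse round trip: `openbrowser` turns Selenium's `get_cookies()` output (a list of dicts with `name` and `value` keys) into a `name:value;...` string, and `gethtml` parses that string back into a dict for requests. The sketch below isolates that round trip; the function names and sample cookies are hypothetical. It also shows a JSON variant, an alternative rather than what the script does, which avoids problems if a value ever contains the `;` delimiter.

```python
import json
from typing import Dict, List


def cookies_to_string(cookies: List[dict]) -> str:
    """Serialize cookies as the script does: name:value pairs joined by ';'."""
    return ";".join(c["name"] + ":" + c["value"] for c in cookies)


def string_to_cookies(text: str) -> Dict[str, str]:
    """Parse the 'name:value;...' string back into a dict usable as requests' cookies=."""
    result = {}
    for pair in text.split(";"):
        if not pair:
            continue  # skip empty segments, e.g. from an empty file
        # partition splits at the first ':' only, so ':' inside a value survives
        name, _, value = pair.partition(":")
        result[name] = value
    return result


def save_cookies_json(cookies: List[dict], path: str) -> None:
    """Alternative format: store the name/value pairs as a JSON object."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({c["name"]: c["value"] for c in cookies}, f)


def load_cookies_json(path: str) -> Dict[str, str]:
    """Load the JSON object written by save_cookies_json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

For example, `cookies_to_string([{"name": "token", "value": "a:b"}])` gives `"token:a:b"`, and `string_to_cookies` turns that back into `{"token": "a:b"}`.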

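Selenium controls the browser through a separate driver program. For Chrome, which this article uses, download the driver and put it in the C:\Python34 directory (this works as long as that directory is on the system PATH).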

The driver is chromedriver.exe; it is easy to find online. Download the version that matches your installed Chrome.
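If `webdriver.Chrome()` fails to start, the usual cause is that chromedriver is not where Selenium looks for it; older Selenium releases search the system PATH when no path is given. The helper below, a hypothetical `find_chromedriver` written only with the standard library, is a quick way to check: it tries PATH first, then any extra folders you pass in, such as C:\Python34.

```python
import os
import shutil
from typing import Iterable, Optional


def find_chromedriver(extra_dirs: Iterable[str] = ()) -> Optional[str]:
    """Return the full path of chromedriver if found, else None.

    Checks the system PATH first, then each folder in extra_dirs.
    """
    # On Windows, shutil.which also tries the PATHEXT extensions, so this matches chromedriver.exe
    found = shutil.which("chromedriver")
    if found:
        return found
    for folder in extra_dirs:
        for name in ("chromedriver.exe", "chromedriver"):
            candidate = os.path.join(folder, name)
            if os.path.isfile(candidate):
                return candidate
    return None
```

For example, `find_chromedriver([r"C:\Python34"])` returns the driver's path or `None`. With Selenium 4, a returned path can be passed explicitly as `webdriver.Chrome(service=Service(path))`, where `Service` comes from `selenium.webdriver.chrome.service`.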