Paper-Writing Notes

Once a draft is finished, check it against these items one by one.

Steer the Reader's Thinking

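No paper is perfect: there are always questions it has to answer, so answer the ones readers are most likely to ask. Nor can a paper cover everything, so some points deserve more emphasis than others. Most importantly, the writing itself should steer where the reader's thinking goes, that is, it should set the narrative.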

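Example: on a first read of Path-Restore: Learning Network Path Selection for Image Restoration, an expert may vaguely feel that it resembles SkipNet, since both learn a decision-making policy with reinforcement learning (RL). This is a serious potential concern, and we should clear it up ourselves rather than leave it to the review stage. The authors therefore wrote in the introduction: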

The proposed method differs significantly from several works that explore ways to process an input image dynamically. Yu et al. [39] propose RL-Restore that dynamically selects a sequence of small CNNs to restore a distorted image. Wu et al. [37] and Wang et al. [33] propose to dynamically skip some blocks in ResNet [14] for each image, achieving fast inference while maintaining good performance for the image classification task. These existing dynamic networks treat all image regions equally, and thus cannot satisfy our demand to select different paths for different regions. We provide more discussions in the related work section.

Formulas Make Explanations Simpler

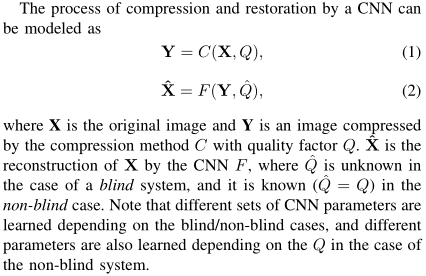

有些时候,我们会遇到比较难解释的问题。比如,我们想解释在图像增强问题中,我们需要对每一个量化参数(QP)的样本单独训练一个模型。这句话用公式解释就简单多了,我们看A Pseudo-Blind Convolutional Neural Network for the Reduction of Compression Artifacts怎么做:

With just two formulas, this passage makes several points clear:

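- The role the quantization parameter Q plays in image compression and restoration.

- The relationship between Q and blind/non-blind restoration.

- In non-blind training, each Q has its own set of parameters, i.e., the models for different Q are trained independently.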
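Since the excerpt itself is an image, here is a generic sketch of what such formulas can look like. The notation is assumed for illustration (compression operator $C$, restoration network $f$ with parameters $\theta$, loss $\mathcal{L}$, training set $\mathcal{D}_Q$ compressed at quantization parameter $Q$) and is not copied from the paper:

```latex
\begin{align}
  Y &= C(X; Q)
    && \text{compression of image } X \text{ at QP } Q \\
  \hat{X} &= f(Y; \theta)
    && \text{blind: one model, } Q \text{ unknown} \\
  \hat{X} &= f(Y; \theta_Q), \quad
  \theta_Q = \arg\min_{\theta} \sum_{(X, Y) \in \mathcal{D}_Q}
    \mathcal{L}\big(f(Y; \theta), X\big)
    && \text{non-blind: one model per } Q
\end{align}
```

Because $\theta_Q$ is fit only on $\mathcal{D}_Q$, every QP gets its own independently trained model, which is exactly the third point in the list above.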

Conventions

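- Most conference papers require double-blind review. You may cite your own work, but refer to it in the third person; do not use words such as my or our.

- Put a tilde (non-breaking space) before citations, label references, and the like:

  `MFQE~\cite{yang2018}`

- Do not start figure or table captions with "the".

- There is no need to write acknowledgments in the first draft.

- Do not type et al., e.g., and similar abbreviations by hand; use the `\etal`, `\eg`, etc. macros provided by the conference LaTeX template. They are typeset differently (for example, the spacing after the period).

- Distinguish correctly between upright text font, italic lowercase (math), italic uppercase, bold upright uppercase, calligraphic font, and so on. For example, if `S_{in}` denotes the input sample, the subscript "in" should be marked as text with `\text{in}`, giving `S_{\text{in}}`. Variables and scalars are italic lowercase (simply put them in math mode; no extra markup is needed). Matrices (such as feature maps) are bold upright uppercase, `\mathbf{F}`. Loss functions can use a calligraphic letter, `\mathcal{L}`.

- Keep the precision of each metric consistent throughout the paper; different metrics may use different precisions.

- Give the full name at the first occurrence of an abbreviation such as PSNR (do not assume every reviewer shares your background knowledge). The full name is generally not capitalized.

- Convert British spellings to American ones, e.g., drop the u in neighbouring (neighboring).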
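A minimal sketch that puts several of the LaTeX rules above into one fragment. It assumes a conference template such as the CVPR style, which defines `\etal` and `\eg`, and that amsmath is loaded for `\text`. The author name Smith, the citation key `smith2020`, the label `fig:arch`, and the symbols are hypothetical placeholders; only `yang2018` comes from the notes above:

```latex
% Tilde (~) is a non-breaking space: the citation or reference number
% is never pushed onto a new line by itself.
MFQE~\cite{yang2018} is compared against the proposed method.
The overall pipeline is shown in Fig.~\ref{fig:arch}.

% Own prior work cited in the third person (hypothetical author and key).
% \etal and \eg come from the template (assumed CVPR-style); they add the
% period and avoid the wider inter-sentence space a hand-typed "et al."
% can receive.
Smith~\etal~\cite{smith2020} study related tasks, \eg, denoising.

% Abbreviation spelled out, in lowercase, at its first occurrence.
Quality is measured by peak signal-to-noise ratio (PSNR).

% Notation: upright text subscript (\text needs amsmath or amstext),
% bold upright matrix, calligraphic loss.
Given an input sample $S_{\text{in}}$ with feature map $\mathbf{F}$,
the network is trained to minimize the loss $\mathcal{L}$.
```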

Grammar

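- Proper nouns usually take no "the". However, since ResNet is in essence a (residual) network, adding "the" is also defensible. Either way, be consistent throughout the paper.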

Things to Watch

-

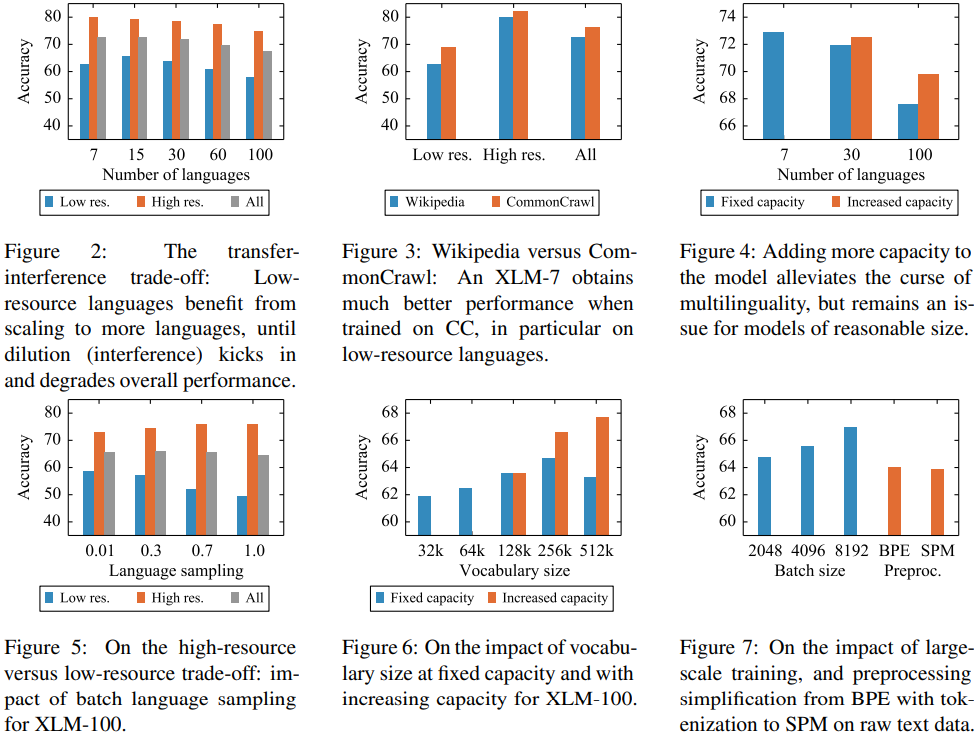

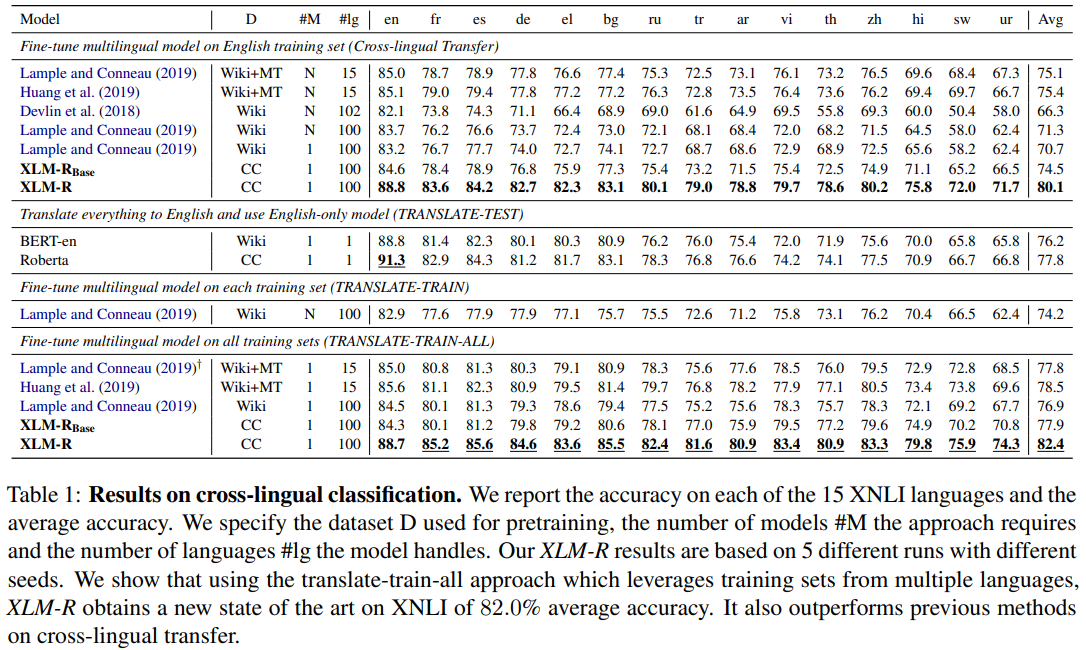

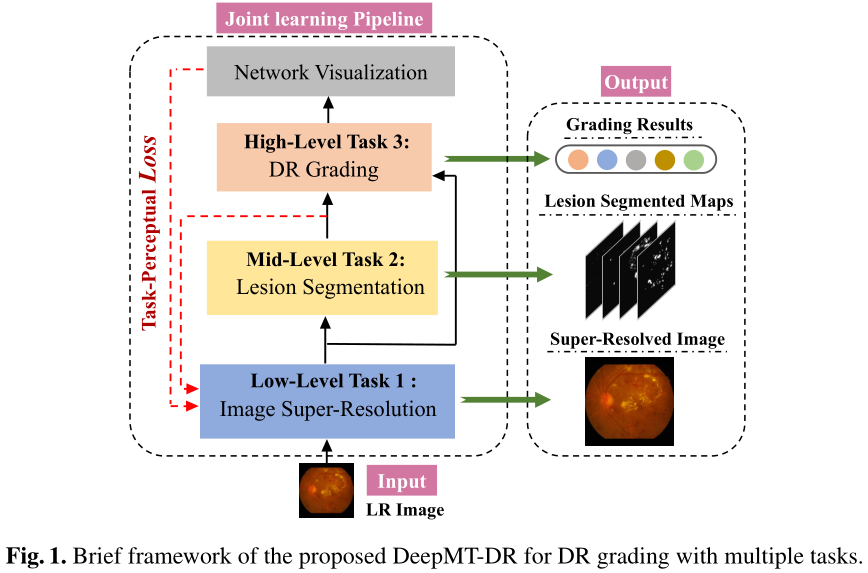

会议和期刊是很不一样的。会议审稿时间短(如CVPR建议审稿人审一篇论文的时间为2小时),第一感觉很重要,因此Figure1、图表的连贯性很重要,每一个图表里的legend、风格最好是连贯、一致的。通常实验比方法更容易被质疑。

- Some Visio figures show misaligned lines or similar glitches once exported to PDF; zoom in to check.

Good Figures and Tables

Basic color schemes, frames, and legends:

Large tables:

When there is a lot of text, a colored background box helps:

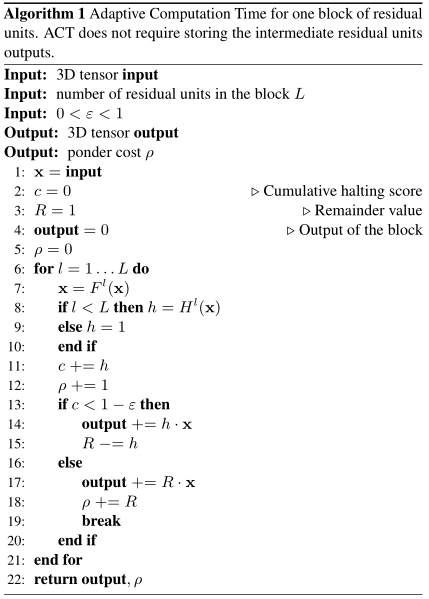

Standard algorithm table:
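A standard algorithm table is commonly typeset with the `algorithm` float and the `algpseudocode` package from the algorithmicx bundle. The steps below are a hypothetical placeholder (per-QP training, with assumed symbols $f$, $\mathcal{L}$, $\mathcal{D}_Q$, and a made-up label `alg:per-qp`), not an algorithm from any paper mentioned in these notes:

```latex
\documentclass{article}
\usepackage{algorithm}      % floating "Algorithm" environment with caption
\usepackage{algpseudocode}  % \State, \For, \Require, ... (algorithmicx)
\begin{document}

\begin{algorithm}
  \caption{Training one model per quantization parameter (placeholder)}
  \label{alg:per-qp}
  \begin{algorithmic}[1]  % [1]: number every line
    \Require QP set $\mathcal{Q}$, training sets $\{\mathcal{D}_Q\}_{Q \in \mathcal{Q}}$
    \Ensure Parameters $\{\theta_Q\}_{Q \in \mathcal{Q}}$
    \For{each $Q \in \mathcal{Q}$}
      \State Initialize $\theta_Q$
      \For{each mini-batch $(X, Y)$ drawn from $\mathcal{D}_Q$}
        \State Take a gradient step on $\mathcal{L}(f(Y; \theta_Q), X)$ w.r.t.\ $\theta_Q$
      \EndFor
    \EndFor
    \State \Return $\{\theta_Q\}_{Q \in \mathcal{Q}}$
  \end{algorithmic}
\end{algorithm}

\end{document}
```

Keeping `\Require`/`\Ensure` for inputs and outputs and numbering every line makes the table easy to reference from the text.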

Good Expressions

Both words and phrases.

- amenable (readily lends itself to): These feedforward models are amenable to parallelized implementations.

- at par with (comparable to; the more common idiom is on par with): The new IQA quality index performs better than the popular PSNR index and delivers performance at par with top performing NR IQA approaches.

- come at the expense of (at the cost of): These very deep networks come at the expense of increased prediction cost and latency.

- defer (postpone): We defer a discussion of the relationship between these and other methods to section 5.

- dominate (outperform): In our experiments, we find that the recurrent gate dominates the feed-forward gates in both prediction accuracy and computation cost.

- drown in (be overwhelmed by): Consumers are drowning in digital visual content.

- eschew (avoid): We propose the Transformer, a model eschewing recurrence and instead relying entirely on an attention mechanism.

- first of a kind (the first of its kind): Our contribution in this direction is the development of a NSS-based modeling framework for OU-DU NR IQA design, resulting in a first of a kind NSS-driven blind OU-DU NR IQA model.

- halves (the left/right half of a figure or table; plural of the noun half, not the verb halve): The Transformer uses stacked self-attention for both the encoder and decoder, shown in the left and right halves of Figure 1.

- necessitate (make necessary): The PointNet, however, still has to respect the fact that a point cloud is just a set of points and therefore invariant to permutations of its members, necessitating certain symmetrizations in the net computation.

- obscure (make something difficult to see, hear, or understand): It introduces quantization artifacts that can obscure natural invariances of the data.

- render (make, cause to become): This data representation transformation renders the resulting data unnecessarily voluminous.

- seminal (pioneering, highly influential): RL-Restore is the seminal work that applies deep reinforcement learning in solving image restoration problems.

- stem from (arise from, be attributable to): PyTorch's success stems from weaving previous ideas into a design that balances speed and ease of use.

- spanning (covering, ranging over): We explore a range of gating network designs, spanning feed-forward convolutional architectures to recurrent networks with varying degrees of parameter sharing.

  A 2D $k \times k$ kernel can be inflated as a 3D $t \times k \times k$ kernel that spans $t$ frames.

- weave (incorporate, blend, integrate): PyTorch's success stems from weaving previous ideas into a design that balances speed and ease of use.

- without any bells and whistles (without any fancy tricks): Using RGB only and without any bells and whistles, our method achieves results on par with or better than the latest competitions winners.