总体来说代码还不是太完美

实现了js渲染网页的解析的一种思路

主要是这个下拉操作,不能一下拉到底,数据是在中间加载进来的,

具体过程都有写注释

1 from selenium import webdriver 2 import time 3 from selenium.webdriver.support.ui import WebDriverWait 4 from selenium.webdriver.support import expected_conditions as EC 5 from selenium.webdriver.common.by import By 6 from lxml import etree 7 import re 8 import pymongo 9 10 client = pymongo.MongoClient('127.0.0.1', port=27017) 11 db = client.taobao_mark 12 collection = db.informations 13 14 15 def search(url): 16 17 # 设置无头浏览器 18 opt = webdriver.ChromeOptions() 19 opt.set_headless() 20 driver = webdriver.Chrome(options=opt) 21 driver.get(url) 22 23 # 使用显示等待加载输入文本框,设置搜索关键字,以'轻薄本'为例 24 input_key = WebDriverWait(driver, 10).until( 25 EC.presence_of_element_located((By.ID, 'q'))) 26 input_key.send_keys('轻薄本') 27 28 # 显示等待加载搜索按钮,然后执行点击操作 29 search_btn = WebDriverWait(driver, 10).until( 30 EC.presence_of_element_located((By.CLASS_NAME, 'btn-search'))) 31 search_btn.click() 32 33 # 搜索出来的网页内容只有一行与关键字相关,剩下的都是广告 34 # 此时发现更多相关内容在//span[@class="see-all"]这个标签的链接中, 35 # 通过xpath获取链接,进行跳转 36 element = etree.HTML(driver.page_source) 37 list_page_url = element.xpath('//span[@class="see-all"]/a/@href')[0] 38 list_page_url = 'https://s.taobao.com/' + list_page_url 39 driver.close() 40 return list_page_url 41 42 43 def scroll(driver): 44 # 如果没有进行动态加载,只能获取四分之一的内容,调用js实现下拉网页进行动态加载 45 for y in range(7): 46 js='window.scrollBy(0,600)' 47 driver.execute_script(js) 48 time.sleep(0.5) 49 50 51 def get_detail_url(url): 52 53 # 用来存储列表页中商品的的链接 54 detail_urls = [] 55 56 opt = webdriver.ChromeOptions() 57 opt.set_headless() 58 driver = webdriver.Chrome(options=opt) 59 # driver = webdriver.Chrome() 60 driver.get(url) 61 62 scroll(driver) 63 64 # 通过简单处理,获取当前最大页数 65 max_page = WebDriverWait(driver, 10).until( 66 EC.presence_of_element_located((By.XPATH, '//div[@class="total"]')) 67 ) 68 text = max_page.get_attribute('textContent').strip() 69 max_page = re.findall('d+', text, re.S)[0] 70 # max_page = int(max_page) 71 max_page = 1 72 # 翻页操作 73 for i in range(1, max_page+1): 74 print('正在爬取第%s页' % i) 75 # 使用显示等待获取页面跳转页数文本框 76 next_page_btn = WebDriverWait(driver, 10).until( 77 EC.presence_of_element_located((By.XPATH, '//input[@class="input J_Input"]')) 78 ) 79 80 # 获取确定按钮,点击后可进行翻页 81 submit = WebDriverWait(driver, 10).until( 82 EC.element_to_be_clickable((By.XPATH, '//span[@class="btn J_Submit"]')) 83 ) 84 85 next_page_btn.clear() 86 next_page_btn.send_keys(i) 87 # 这里直接点击会报错'提示不可点击',百度说有一个蒙层, 88 # 沉睡两秒等待蒙层消失即可点击 89 time.sleep(2) 90 submit.click() 91 scroll(driver) 92 93 urls = WebDriverWait(driver, 10).until( 94 EC.presence_of_all_elements_located((By.XPATH, '//a[@class="img-a"]')) 95 ) 96 for url in urls: 97 detail_urls.append(url.get_attribute('href')) 98 driver.close() 99 # 返回所有商品链接列表 100 return detail_urls 101 102 def parse_detail(detail_urls): 103 parameters = [] 104 opt = webdriver.ChromeOptions() 105 opt.set_headless() 106 driver = webdriver.Chrome(options=opt) 107 for url in detail_urls: 108 parameter = {} 109 110 print('正在解析网址%s' % url) 111 driver.get(url) 112 html = driver.page_source 113 element = etree.HTML(html) 114 115 # 名字name 116 name = element.xpath('//div[@class="spu-title"]/text()') 117 name = name[0].strip() 118 parameter['name'] = name 119 120 # 价格price 121 price = element.xpath('//span[@class="price g_price g_price-highlight"]/strong/text()')[0] 122 price = str(price) 123 parameter['price'] = price 124 125 # 特点specials 126 b = element.xpath('//div[@class="item col"]/text()') 127 specials = [] 128 for i in b: 129 if re.match('w', i, re.S): 130 specials.append(i) 131 parameter['specials'] = specials 132 133 param = element.xpath('//span[@class="param"]/text()') 134 # 尺寸 135 size = param[0] 136 parameter['size'] = size 137 # 笔记本CPU 138 cpu = param[1] 139 parameter['cpu'] = cpu 140 # 显卡 141 graphics_card = param[2] 142 parameter['graphics_card'] = graphics_card 143 # 硬盘容量 144 hard_disk = param[3] 145 parameter['hard_disk'] = hard_disk 146 # 处理器主频 147 processor = param[4] 148 parameter['processor'] = processor 149 # 内存容量 150 memory = param[5] 151 parameter['memory'] = memory 152 parameter['url'] = url 153 print(parameter) 154 print('='*50) 155 save_to_mongo(parameter) 156 parameters.append(parameter) 157 return parameters 158 159 160 def save_to_mongo(result): 161 try: 162 if collection.insert(result): 163 print('success save to mongodb', result) 164 except Exception: 165 print('error to mongo') 166 167 168 def main(): 169 url = 'https://www.taobao.com/' 170 list_page_url = search(url) 171 detail_urls = get_detail_url(list_page_url) 172 parameters = parse_detail(detail_urls) 173 174 if __name__ == '__main__': 175 main()

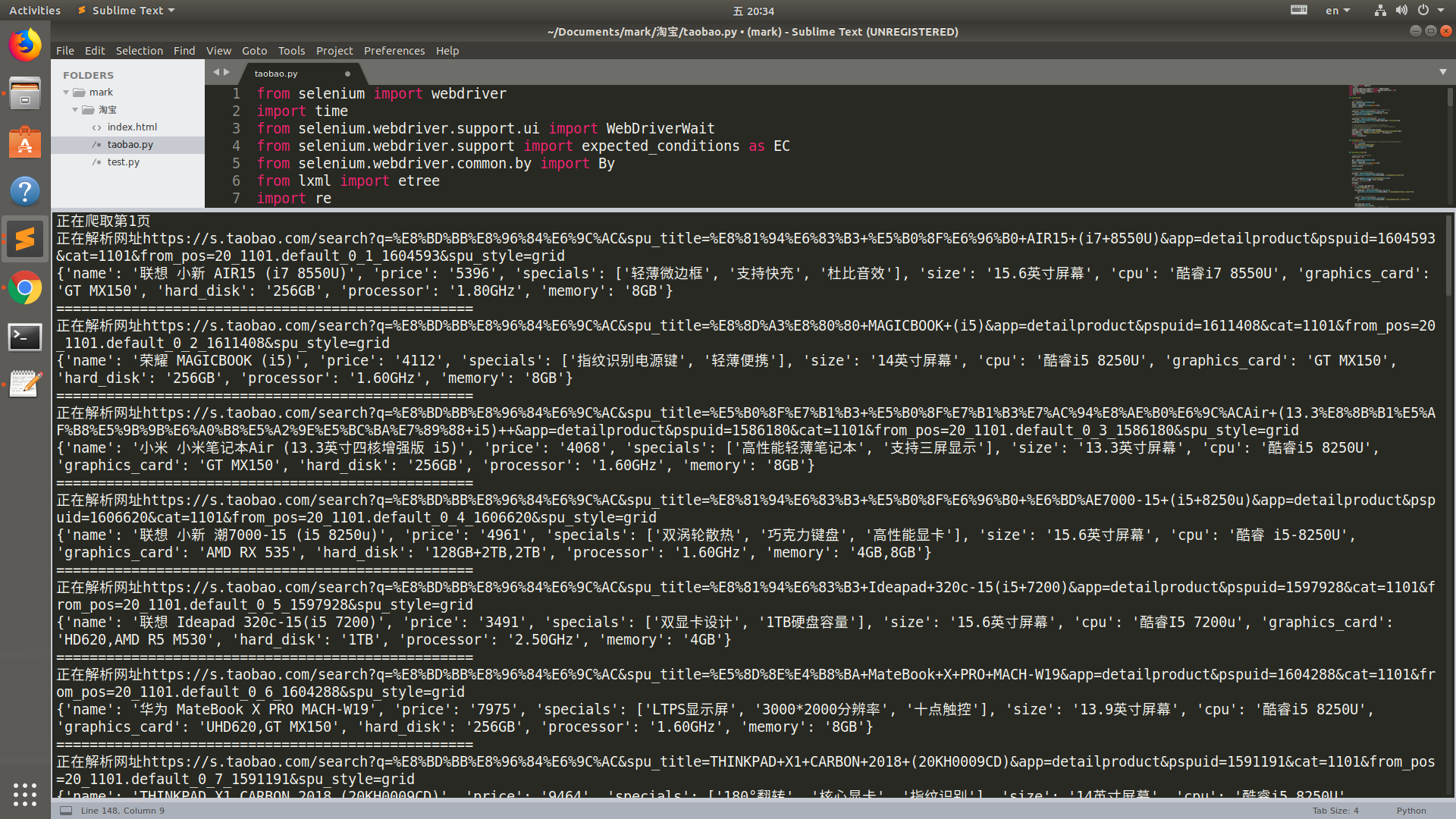

运行结果

数据库