# Global initialization
tf.global_variables_initializer().run()  # requires a default session, e.g. tf.InteractiveSession()
# Equivalent to:
init = tf.global_variables_initializer()
self.sess = tf.Session()
self.sess.run(init)

# Ways of setting up the optimizer

optimizer = tf.train.AdamOptimizer()

self.sess = tf.Session()
self.cost = 0.5 * tf.reduce_sum(tf.pow(tf.subtract(self.reconstruction, self.x), 2.0))
self.optimizer = optimizer.minimize(self.cost)
# Equivalent to: self.optimizer = tf.train.AdamOptimizer().minimize(self.cost)

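def partial_fit(self, X):
    # Run one training step and fetch the cost in the same call.
    # self.optimizer is the op returned by tf.train.AdamOptimizer().minimize(self.cost);
    # running that op alone would update the weights but return no cost value.
    cost, opt = self.sess.run((self.cost, self.optimizer),
                              feed_dict={self.x: X, self.scale: self.training_scale})
    return cost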

# Second form

train_step = tf.train.GradientDescentOptimizer(0.2).minimize(cross_entropy)

train_step.run({x: batch_xs, y_: batch_ys})

# Using the adaptive optimizer Adagrad
train_step = tf.train.AdagradOptimizer(0.3).minimize(cross_entropy)

# The simple form

self.optimizer = tf.train.AdamOptimizer(learning_rate = learning_rate).minimize(self.loss)
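To make the difference between a fixed-step optimizer and an adaptive one concrete, here is a minimal NumPy sketch of the two update rules on a toy loss. The function names, the toy loss `0.5 * sum(w ** 2)`, and the `eps` term are illustrative assumptions; this is not how the TensorFlow optimizers are implemented internally.

```python
import numpy as np

def grad(w):
    """Gradient of the toy loss 0.5 * sum(w ** 2)."""
    return w

def loss(w):
    return 0.5 * np.sum(w ** 2)

def sgd_step(w, lr):
    # Plain gradient descent: the same step size for every coordinate.
    return w - lr * grad(w)

def adagrad_step(w, accum, lr, eps=1e-8):
    # Adagrad: each coordinate is scaled by the root of its accumulated squared gradients.
    g = grad(w)
    accum = accum + g ** 2
    return w - lr * g / (np.sqrt(accum) + eps), accum

w_sgd = np.array([1.0, -2.0])
w_ada = w_sgd.copy()
accum = np.zeros_like(w_ada)
initial_loss = loss(w_sgd)

for _ in range(50):
    w_sgd = sgd_step(w_sgd, lr=0.2)
    w_ada, accum = adagrad_step(w_ada, accum, lr=0.3)

print(initial_loss, loss(w_sgd), loss(w_ada))
```

Because the accumulator only grows, the effective Adagrad step shrinks over time, which is why it is usually given a larger base learning rate than plain gradient descent.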

# Writing summaries to a log directory
self.summary_writer = tf.summary.FileWriter(logs_path, graph=tf.get_default_graph())

# Encoder and decoder
def encoder(self, x, weights):
    shapes = []
    # Encoder hidden layer #1 with ReLU activation
    shapes.append(x.get_shape().as_list())
    layer1 = tf.nn.bias_add(tf.nn.conv2d(x, weights['enc_w0'], strides=[1,2,2,1], padding='SAME'), weights['enc_b0'])
    layer1 = tf.nn.relu(layer1)  # activation function
    # Encoder hidden layer #2
    shapes.append(layer1.get_shape().as_list())
    layer2 = tf.nn.bias_add(tf.nn.conv2d(layer1, weights['enc_w1'], strides=[1,2,2,1], padding='SAME'), weights['enc_b1'])
    layer2 = tf.nn.relu(layer2)
    # Encoder hidden layer #3
    shapes.append(layer2.get_shape().as_list())
    layer3 = tf.nn.bias_add(tf.nn.conv2d(layer2, weights['enc_w2'], strides=[1,2,2,1], padding='SAME'), weights['enc_b2'])
    layer3 = tf.nn.relu(layer3)
    # shapes holds the input shape of every layer so the decoder can mirror them
    return layer3, shapes

# Building the decoder
def decoder(self, z, weights, shapes):
    # Decoder hidden layer #1 with ReLU activation; restores the input shape of encoder layer 3
    shape_de1 = shapes[2]
    layer1 = tf.add(tf.nn.conv2d_transpose(z, weights['dec_w0'], tf.stack([tf.shape(self.x)[0], shape_de1[1], shape_de1[2], shape_de1[3]]),
                    strides=[1,2,2,1], padding='SAME'), weights['dec_b0'])
    layer1 = tf.nn.relu(layer1)
    # Decoder hidden layer #2; restores the input shape of encoder layer 2
    shape_de2 = shapes[1]
    layer2 = tf.add(tf.nn.conv2d_transpose(layer1, weights['dec_w1'], tf.stack([tf.shape(self.x)[0], shape_de2[1], shape_de2[2], shape_de2[3]]),
                    strides=[1,2,2,1], padding='SAME'), weights['dec_b1'])
    layer2 = tf.nn.relu(layer2)
    # Decoder hidden layer #3; restores the shape of the original input
    shape_de3 = shapes[0]
    layer3 = tf.add(tf.nn.conv2d_transpose(layer2, weights['dec_w2'], tf.stack([tf.shape(self.x)[0], shape_de3[1], shape_de3[2], shape_de3[3]]),
                    strides=[1,2,2,1], padding='SAME'), weights['dec_b2'])
    layer3 = tf.nn.relu(layer3)
    return layer3
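The encoder records every layer's input shape because a stride-2 transposed convolution cannot infer its output size on its own. The pure-Python sketch below shows why, assuming a 28-pixel input side (the real input size is not stated in these notes) and using hypothetical helper names. With `padding='SAME'`, a strided convolution produces `ceil(size / stride)` outputs, so two different input sizes can map to the same output size.

```python
import math

def same_out(size, stride):
    """Spatial output size of a convolution with padding='SAME'."""
    return int(math.ceil(size / float(stride)))

def transpose_candidates(size, stride):
    """All input sizes that a SAME convolution with this stride maps to `size`."""
    return [n for n in range(1, size * stride + 1) if same_out(n, stride) == size]

# Three stride-2 encoder layers applied to an assumed 28-pixel side.
sizes = [28]
for _ in range(3):
    sizes.append(same_out(sizes[-1], 2))

print(sizes)                       # [28, 14, 7, 4]
print(transpose_candidates(4, 2))  # [7, 8]
```

Going back from 4 could legitimately give 7 or 8, so the decoder passes the recorded shape to `conv2d_transpose` explicitly.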

# Batch selection
def next_batch(data, _index_in_epoch, batch_size, _epochs_completed):
    _num_examples = data.shape[0]
    start = _index_in_epoch
    _index_in_epoch += batch_size
    if _index_in_epoch > _num_examples:
        # Finished epoch
        _epochs_completed += 1
        # Shuffle the data
        perm = np.arange(_num_examples)  # the indices 0 .. _num_examples - 1
        np.random.shuffle(perm)  # shuffle the indices in place
        data = data[perm]  # rebinds the local name only; the caller's array keeps its order
        # label = label[perm]
        # Start next epoch
        start = 0
        _index_in_epoch = batch_size
        assert batch_size <= _num_examples  # a batch cannot be larger than the dataset
    end = _index_in_epoch
    return data[start:end], _index_in_epoch, _epochs_completed
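The same epoch-and-shuffle logic can also be written as a generator, which keeps the index bookkeeping out of the training loop. This is an alternative sketch, not the function above: the name `iterate_minibatches`, the `seed` parameter, and the choice to drop the incomplete last batch are all assumptions made for the example.

```python
import numpy as np

def iterate_minibatches(data, batch_size, num_epochs, seed=0):
    """Yield (epoch, batch) pairs, reshuffling the rows at the start of every epoch."""
    assert batch_size <= data.shape[0]
    rng = np.random.RandomState(seed)
    for epoch in range(num_epochs):
        shuffled = data[rng.permutation(data.shape[0])]
        # Drop the incomplete tail so every batch has exactly batch_size rows.
        for start in range(0, data.shape[0] - batch_size + 1, batch_size):
            yield epoch, shuffled[start:start + batch_size]

data = np.arange(10).reshape(10, 1)
batches = list(iterate_minibatches(data, batch_size=4, num_epochs=2))
print(len(batches), batches[0][1].shape)
```

With 10 rows and a batch size of 4, each epoch yields two full batches and skips the remaining two rows.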

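How do you read back the parameters of a layer in TensorFlow?

def getWeights(self):  # fetch the hidden-layer weights
    return self.sess.run(self.weights['w1'])
# self.weights['w1'] must have been defined earlier

def print_activations(t):
    # Print the op name and the static shape of a tensor
    print(t.op.name, ' ', t.get_shape().as_list())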

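Data preprocessing code:

# Standardize the data to zero mean and unit variance per feature
import sklearn.preprocessing as prep

def standard_scale(X_train, X_test):
    # Fit on the training set only, then apply the same transform to both sets
    preprocessor = prep.StandardScaler().fit(X_train)
    X_train = preprocessor.transform(X_train)
    X_test = preprocessor.transform(X_test)
    return X_train, X_test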
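What the scaler computes can be sketched in plain NumPy. The function name `standard_scale_np` and the random test data are made up for illustration. The key point is that the mean and standard deviation come from the training set only and are reused for the test set.

```python
import numpy as np

def standard_scale_np(X_train, X_test):
    """Standardize both sets with the per-feature mean and std of the training set."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0.0] = 1.0  # leave constant features unscaled instead of dividing by zero
    return (X_train - mean) / std, (X_test - mean) / std

rng = np.random.RandomState(0)
X_train = rng.normal(loc=5.0, scale=2.0, size=(200, 3))
X_test = rng.normal(loc=5.0, scale=2.0, size=(50, 3))
X_train_s, X_test_s = standard_scale_np(X_train, X_test)

print(X_train_s.mean(axis=0), X_train_s.std(axis=0))
```

The training set ends up with exactly zero mean and unit variance, while the test set is only approximately standardized, which is the intended behaviour.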

Selecting a batch of samples

# Pick a random contiguous batch
def get_random_block_from_data(data, batch_size):
    start_index = np.random.randint(0, len(data) - batch_size)
    return data[start_index:(start_index + batch_size)]
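One detail worth knowing about the function above: `np.random.randint` excludes its upper bound, so the last valid offset is never drawn, and the call raises an error when `batch_size` equals `len(data)`. A possible variant that covers both cases is sketched below. The name `random_block` and the explicit `rng` argument are assumptions for the example.

```python
import numpy as np

def random_block(data, batch_size, rng):
    """Return batch_size consecutive rows starting at a uniformly random offset."""
    if batch_size > len(data):
        raise ValueError("batch_size exceeds the number of samples")
    # randint excludes the upper bound, so + 1 makes the last valid offset reachable.
    start = rng.randint(0, len(data) - batch_size + 1)
    return data[start:start + batch_size]

rng = np.random.RandomState(0)
data = np.arange(10)
block = random_block(data, 4, rng)
whole = random_block(data, 10, rng)
print(block, whole)
```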

# Ways to initialize parameters: np.random.normal; np.random.randint; tf.zeros; tf.random_uniform
def _initialize_weights(self):
    all_weights = dict()
    all_weights['w1'] = tf.Variable(xavier_init(self.n_input, self.n_hidden))
    all_weights['b1'] = tf.Variable(tf.zeros([self.n_hidden], dtype = tf.float32))
    all_weights['w2'] = tf.Variable(tf.zeros([self.n_hidden, self.n_input], dtype = tf.float32))
    all_weights['b2'] = tf.Variable(tf.zeros([self.n_input], dtype = tf.float32))
    return all_weights

hidden = np.random.normal(size = self.n_hidden)  # random noise shaped like b1; size must be a shape, not the variable itself

start_index = np.random.randint(0, len(data) - batch_size)

def xavier_init(fan_in, fan_out, constant = 1):  # Xavier initialization with a uniform distribution
    low = -constant * np.sqrt(6.0 / (fan_in + fan_out))
    high = constant * np.sqrt(6.0 / (fan_in + fan_out))
    return tf.random_uniform((fan_in, fan_out),
                             minval = low, maxval = high,
                             dtype = tf.float32)

W1 = tf.Variable(tf.truncated_normal([in_units, h1_units], stddev=0.1))  # truncated normal distribution with standard deviation 0.1
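The bound `sqrt(6 / (fan_in + fan_out))` in `xavier_init` is chosen so that the weights have variance `2 / (fan_in + fan_out)`, since a uniform distribution on `[-a, a]` has variance `a**2 / 3`. A quick NumPy check of that claim follows, with an assumed layer size of 784 by 200 and a hypothetical helper name.

```python
import numpy as np

def xavier_uniform_np(fan_in, fan_out, constant=1.0, seed=0):
    """NumPy counterpart of xavier_init: uniform samples in [-limit, limit]."""
    limit = constant * np.sqrt(6.0 / (fan_in + fan_out))
    rng = np.random.RandomState(seed)
    return rng.uniform(-limit, limit, size=(fan_in, fan_out)), limit

fan_in, fan_out = 784, 200  # assumed sizes, for illustration only
W, limit = xavier_uniform_np(fan_in, fan_out)
target_var = 2.0 / (fan_in + fan_out)

print(limit, W.var(), target_var)
```

The sample variance should land close to `target_var`, and every sample stays inside the bound.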

Activation functions:

tf.nn.softplus

tf.nn.relu

Convolution in TensorFlow

weight2 = variable_with_weight_loss(shape=[5, 5, 64, 64], stddev=5e-2, wl=0.0)  # weights of the second convolution layer

def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],
                          strides=[1, 2, 2, 1], padding='SAME')

net = slim.conv2d(inputs, 32, [3, 3], stride=2, scope='Conv2d_1a_3x3')  # defining a convolution with slim

Reshaping tensors in TensorFlow

x_image = tf.reshape(x, [-1,28,28,1])  # turn each 1x784 vector into a 28x28 single-channel image
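The reshape above follows the same row-major rule as NumPy, so its effect can be checked without a session. A small sketch with a made-up batch of two flattened images:

```python
import numpy as np

# Two fake flattened images; the values are just running indices.
batch = np.arange(2 * 784, dtype=np.float32).reshape(2, 784)

# -1 lets the batch dimension be inferred from the total number of elements.
images = batch.reshape(-1, 28, 28, 1)

print(images.shape)  # (2, 28, 28, 1)
# Row-major layout: pixel (r, c) of image n is element r * 28 + c of row n.
print(images[1, 3, 5, 0] == batch[1, 3 * 28 + 5])
```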

# Defining a convolution layer
W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)

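Getting the dimensions of the data after convolution and pooling:

shape = h_pool2.get_shape()[1].value  # size of the second dimension (index 1)

def print_activations(t):
    # Print the op name and the full static shape as a list
    print(t.op.name, ' ', t.get_shape().as_list())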

Naming convolution layers

# Variables created inside the scope are automatically named under conv1
with tf.name_scope('conv1') as scope:
    kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 64], dtype=tf.float32, stddev=1e-1), name='weights')
    conv = tf.nn.conv2d(images, kernel, [1, 4, 4, 1], padding='SAME')
    biases = tf.Variable(tf.constant(0.0, shape=[64], dtype=tf.float32), trainable=True, name='biases')
    bias = tf.nn.bias_add(conv, biases)
    conv1 = tf.nn.relu(bias, name=scope)
    print_activations(conv1)  # print the name and output shape of convolution layer 1
    parameters += [kernel, biases]

Running the computation

config = tf.ConfigProto()
config.gpu_options.allocator_type = 'BFC'
sess = tf.Session(config=config)
# Interchangeable with the plain form: sess = tf.Session()

Block('block1', bottleneck, [(256, 64, 1)] * 2 + [(256, 64, 2)])

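# Here 'block1' is the name and bottleneck is the ResNet residual learning unit. In (256, 64, 1), the third layer has an output depth of 256 channels, the first two layers have 64 channels, and the middle layer has stride 1, giving the structure [(1x1/s1, 64), (3x3/s1, 64), (1x1/s1, 256)].

# This Block contains 3 bottleneck residual units in total. They are identical except that the last one changes the middle-layer stride from 1 to 2.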
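To see how the argument list expands, the sketch below unpacks each `(depth, depth_bottleneck, stride)` tuple into its three convolution layers. The `Block` namedtuple here is a hypothetical stand-in with the same three-field layout as the call above, and `describe_block` is a helper invented for this example. `None` takes the place of the real `bottleneck` function.

```python
import collections

# Hypothetical stand-in mirroring the (name, unit function, args) layout of the call above.
Block = collections.namedtuple('Block', ['scope', 'unit_fn', 'args'])

def describe_block(block):
    """Expand each (depth, depth_bottleneck, stride) tuple into (kernel, stride, channels) layers."""
    units = []
    for depth, depth_bottleneck, stride in block.args:
        units.append([
            ('1x1', 1, depth_bottleneck),
            ('3x3', stride, depth_bottleneck),  # only the middle layer carries the unit's stride
            ('1x1', 1, depth),
        ])
    return units

block1 = Block('block1', None, [(256, 64, 1)] * 2 + [(256, 64, 2)])
units = describe_block(block1)
for i, unit in enumerate(units, start=1):
    print(i, unit)
```

The first two units print a stride of 1 for the 3x3 layer and the third prints 2, matching the description above.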

Collecting items with append generally gives a Python list; np.array can then convert it into a 3-D array.

I = []
Label = []
for i in range(img.shape[2]):  # img.shape[2] = 38 classes
    for j in range(img.shape[1]):  # img.shape[1] = 64 samples per class
        temp = np.reshape(img[:,j,i],[42,48])  # reshape the vector into a matrix
        Label.append(i)  # class label, kept in a list
        I.append(temp)

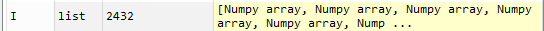

I as built with I.append

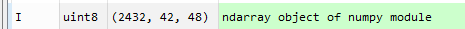

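The array obtained by converting it with np.array

Next, Img = np.transpose(I,[0,2,1]) swaps the last two axes of the data.

Then Img = np.expand_dims(Img,3) appends a trailing channel axis.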
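The whole list-to-tensor pipeline can be traced by its shapes alone. The sketch below uses a zero-filled `img` and a small number of classes and samples (3 and 4 instead of 38 and 64) so that it runs quickly. Those sizes are placeholders, not the real dataset.

```python
import numpy as np

n_classes, n_per_class = 3, 4  # small stand-ins for 38 classes and 64 samples per class
img = np.zeros((42 * 48, n_per_class, n_classes), dtype=np.float32)

I, Label = [], []
for i in range(img.shape[2]):
    for j in range(img.shape[1]):
        I.append(np.reshape(img[:, j, i], [42, 48]))
        Label.append(i)

arr = np.array(I)                    # list of 12 matrices -> array of shape (12, 42, 48)
Img = np.transpose(arr, [0, 2, 1])   # swap the last two axes -> (12, 48, 42)
Img = np.expand_dims(Img, 3)         # add a channel axis -> (12, 48, 42, 1)
Label = np.array(Label)

print(type(I).__name__, arr.shape, Img.shape, Label.shape)
```

The final 4-D layout (samples, height, width, channels) is what `tf.nn.conv2d` expects by default.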

perm = np.arange(_num_examples)  # the integers 0 .. _num_examples - 1, e.g. [0,1,2,3,..10]
np.random.shuffle(perm)  # shuffle the array in place, e.g. [0,2,6,7,..]

# Saving the model
def save_model(self):
    save_path = self.saver.save(self.sess, self.model_path)
    print("model saved in file: %s" % save_path)

# Restoring the model
def restore(self):
    self.saver.restore(self.sess, self.restore_path)
    print("model restored")