Chinese Sentiment Recognition 2

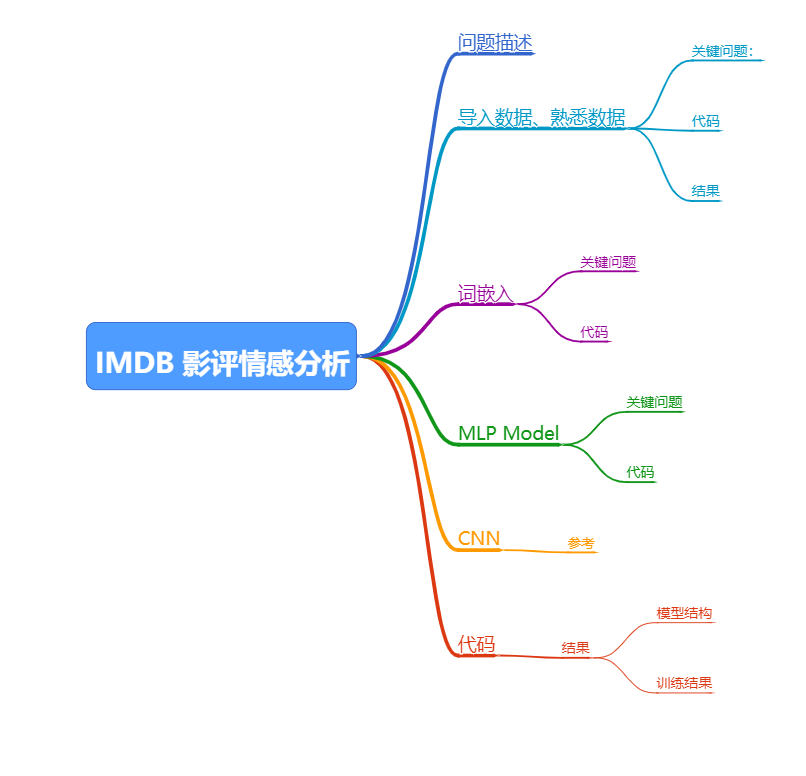

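IMDB movie review sentiment analysis

These notes follow:

- Wei Zhenyuan, 深度学习:基于 Keras 的 Python 实践 (Deep Learning: Python Practice with Keras). Beijing: Publishing House of Electronics Industry, May 2018.

Problem description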

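Here we use the review text in the dataset provided by IMDB to judge whether a movie is rated well or badly. The dataset comes from IMDB (http://www.imdb.com/interfaces/). The copy bundled with Keras contains 50,000 movie reviews, split into 25,000 for training and 25,000 for testing.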

Loading and exploring the data

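Key points:

- np.concatenate

Array concatenation function: joins a sequence of arrays along an existing axis.

Reference: https://blog.csdn.net/summer2day/article/details/79935058

- np.unique

Removes duplicate values from an array and returns the remaining values in sorted order.

Reference: https://blog.csdn.net/u012193416/article/details/79672729

Reference: https://blog.csdn.net/a2224998/article/details/45499881

- np.hstack

Stacks arrays along the horizontal direction.

Reference: https://blog.csdn.net/u012609509/article/details/70319293

- plt.boxplot

Box plot.

Reference: https://jingyan.baidu.com/article/9f63fb917e47eac8400f0eac.html

- plt.hist

Histogram.

Reference: https://www.jianshu.com/p/f2f75754d4b3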
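The three NumPy functions listed above can be tried on toy data before running them on the full dataset. This is a minimal sketch; the arrays `a`, `b` and the two short `reviews` are made-up values, not taken from IMDB.

```python
import numpy as np

# Two small arrays standing in for the training and test splits.
a = np.array([3, 1, 2])
b = np.array([2, 5])

# np.concatenate joins the arrays end to end along axis 0.
joined = np.concatenate((a, b), axis=0)
print(joined.tolist())

# np.unique drops duplicates and returns the values sorted.
unique_values = np.unique(joined)
print(unique_values.tolist())

# np.hstack flattens a list of variable-length sequences into one 1-D array,
# which is how the vocabulary size is counted in the notes.
reviews = [[1, 14, 22], [1, 22, 9, 8]]
flat = np.hstack(reviews)
print(flat.tolist())
print(len(np.unique(flat)))
```

Counting `len(np.unique(np.hstack(x)))` in this way gives the number of distinct word indices across all reviews.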

Code

```python
"""
Sentiment analysis example: IMDB movie review sentiment analysis
"""

# %%
from keras.datasets import imdb
import numpy as np
from matplotlib import pyplot as plt

# %% Load the data
(x_train, y_train), (x_test, y_test) = imdb.load_data()

# Merge the training set and the evaluation (test) set
x = np.concatenate((x_train, x_test), axis=0)
y = np.concatenate((y_train, y_test), axis=0)

print('x shape is %s, y shape is %s' % (x.shape, y.shape))
print('Classes: %s' % np.unique(y))
print('Total words: %s' % len(np.unique(np.hstack(x))))

# Number of words in each review
result = [len(review) for review in x]
print('Mean: %.2f words (STD: %.2f)' % (np.mean(result), np.std(result)))

# Plot the distribution of review lengths
plt.subplot(121)
plt.boxplot(result)
plt.subplot(122)
plt.hist(result)
plt.show()
```

Results

Output

Review length distribution

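Word embeddings

Word embeddings originate from Bengio's paper "A Neural Probabilistic Language Model". They are a way of representing words as vectors and a recent breakthrough in natural language processing. The idea is that each word is encoded as a real-valued vector in a high-dimensional space, where similarity between words corresponds to closeness in the vector space. Discrete words are thus mapped to vectors of continuous numbers.

Keras converts the positive-integer representation of words into word embeddings through an embedding layer (Embedding). The layer needs the expected maximum vocabulary size and the dimension of each output word vector.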

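To process the IMDB dataset with word embeddings, assume we only care about the 5000 most frequent words in the dataset, so the vocabulary size is 5000. Each word is represented by a 32-dimensional vector, which is the output of the embedding layer. Review length is also fixed at 500 words: longer reviews are truncated, and shorter reviews are padded with 0 values.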
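Conceptually, an embedding layer is a lookup table: each integer word index selects one row of a weight matrix. The NumPy sketch below illustrates this with the sizes used in these notes (5000 words, 32 dimensions, 500 words per review). The random matrix and the random review are stand-ins for illustration only; in Keras the matrix is learned during training.

```python
import numpy as np

vocab_size = 5000   # number of distinct word indices kept
embed_dim = 32      # length of each word vector
seq_len = 500       # words per padded review

rng = np.random.default_rng(7)

# Stand-in for the trainable weights of an embedding layer:
# one row of embed_dim values per word index.
embedding_matrix = rng.normal(size=(vocab_size, embed_dim))

# A made-up padded review: 500 integer word indices in [0, vocab_size).
review = rng.integers(0, vocab_size, size=seq_len)

# The lookup is plain row indexing: every index picks out one row.
embedded = embedding_matrix[review]

print(embedded.shape)         # one 32-dimensional vector per word
print(embedding_matrix.size)  # number of weights in the lookup table
```

The table holds 5000 × 32 = 160000 weights, and one review becomes a 500 × 32 array.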

Key points

- imdb.load_data(num_words=5000)

num_words: integer or None. Top most frequent words to consider. Any less frequent word will appear as oov_char value in the sequence data. In other words, only the 5000 most frequent words are kept for study.

- sequence.pad_sequences

Sequence padding function: reviews differ in length, and after padding every sequence has the same length.
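The two preprocessing steps above can be illustrated in plain Python. This is a simplified sketch rather than the Keras implementation. The helper names `replace_rare_words` and `pad_pre` are hypothetical, and the sketch assumes the Keras defaults as I understand them: `oov_char=2`, zeros added at the front, and long sequences cut from the front.

```python
def replace_rare_words(seq, num_words, oov_char=2):
    """Replace every word index >= num_words with oov_char (hypothetical helper)."""
    return [w if w < num_words else oov_char for w in seq]


def pad_pre(seqs, maxlen, value=0):
    """Bring every sequence to length maxlen (hypothetical helper, maxlen > 0).

    Long sequences keep their last maxlen items; short ones get `value`
    added at the front.
    """
    out = []
    for seq in seqs:
        seq = list(seq)[-maxlen:]
        out.append([value] * (maxlen - len(seq)) + seq)
    return out


# Indices 5000 and 7231 fall outside the 5000 most frequent words.
print(replace_rare_words([1, 14, 4999, 5000, 7231], num_words=5000))

# One short and one long toy review, both brought to length 4.
print(pad_pre([[5, 6, 7], [1, 2, 3, 4, 5, 6]], maxlen=4))
```

After padding, all reviews can be stacked into one rectangular array, which is what the embedding layer expects as input.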

Code

```python
"""
Sentiment analysis example: IMDB movie review sentiment analysis
"""

from keras.datasets import imdb
from keras.preprocessing import sequence
from keras.layers import Embedding

# Keep only the 5000 most frequent words
(x_train, y_train), (x_validation, y_validation) = imdb.load_data(num_words=5000)

# Pad or truncate every review to 500 words
x_train = sequence.pad_sequences(x_train, maxlen=500)
x_validation = sequence.pad_sequences(x_validation, maxlen=500)

# Build the embedding layer
embedding_layer = Embedding(5000, 32, input_length=500)
```

MLP Model

Key points

- model.summary()

Prints a summary representation of your model.

- Warning message: "Converting sparse IndexedSlices to a dense Tensor of unknown shape. This may consume a large amount of memory."

Code

```python
"""
Sentiment analysis example: IMDB movie review sentiment analysis
"""

import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow info and warning logs

from keras.datasets import imdb
import numpy as np
from keras.preprocessing import sequence
from keras.layers import Dense, Embedding, Flatten
from keras.models import Sequential

seed = 7
top_words = 5000      # vocabulary size
max_words = 500       # words per review after padding
out_dimension = 32    # embedding dimension
batch_size = 128
epochs = 2


def create_model():
    model = Sequential()
    # Build the embedding layer
    model.add(Embedding(top_words, out_dimension, input_length=max_words))
    model.add(Flatten())
    model.add(Dense(250, activation='relu'))
    model.add(Dense(1, activation='sigmoid'))
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    model.summary()
    return model


np.random.seed(seed)

# Load the data
(x_train, y_train), (x_validation, y_validation) = imdb.load_data(num_words=top_words)

# Limit the length of each review
x_train = sequence.pad_sequences(x_train, maxlen=max_words)
x_validation = sequence.pad_sequences(x_validation, maxlen=max_words)

model = create_model()
model.fit(x_train, y_train, validation_data=(x_validation, y_validation),
          batch_size=batch_size, epochs=epochs, verbose=2)
```

CNN

Reference

- http://frankchen.xyz/2017/12/18/How-to-Use-Word-Embedding-Layers-for-Deep-Learning-with-Keras/

This article is well written and explains word embeddings thoroughly.

Code

```python
"""
Sentiment analysis example: IMDB movie review sentiment analysis
CNN
"""

import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow info and warning logs

from keras.datasets import imdb
import numpy as np
from keras.preprocessing import sequence
from keras.layers import Conv1D, Dense, Embedding, Flatten, MaxPooling1D
from keras.models import Sequential

seed = 7
top_words = 5000      # vocabulary size
max_words = 500       # words per review after padding
out_dimension = 32    # embedding dimension
batch_size = 128
epochs = 2


def create_model():
    model = Sequential()
    model.add(Embedding(top_words, output_dim=out_dimension, input_length=max_words))
    model.add(Conv1D(filters=32, kernel_size=3, padding='same', activation='relu'))
    model.add(MaxPooling1D(pool_size=2))
    model.add(Flatten())
    model.add(Dense(250, activation='relu'))
    model.add(Dense(1, activation='sigmoid'))
    model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
    model.summary()
    return model


np.random.seed(seed=seed)

# Load the data and fix the length of each review
(x_train, y_train), (x_validation, y_validation) = imdb.load_data(num_words=top_words)
x_train = sequence.pad_sequences(x_train, maxlen=max_words)
x_validation = sequence.pad_sequences(x_validation, maxlen=max_words)

# Build the model
model = create_model()
model.fit(x_train, y_train, validation_data=(x_validation, y_validation),
          batch_size=batch_size, epochs=epochs, verbose=2)
```

Results

Model structure

```
Model: "sequential_3"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
embedding_3 (Embedding)      (None, 500, 32)           160000
_________________________________________________________________
conv1d_1 (Conv1D)            (None, 500, 32)           3104
_________________________________________________________________
max_pooling1d_1 (MaxPooling1 (None, 250, 32)           0
_________________________________________________________________
flatten_2 (Flatten)          (None, 8000)              0
_________________________________________________________________
dense_3 (Dense)              (None, 250)               2000250
_________________________________________________________________
dense_4 (Dense)              (None, 1)                 251
=================================================================
Total params: 2,163,605
Trainable params: 2,163,605
Non-trainable params: 0
```

Training results

```
Train on 25000 samples, validate on 25000 samples
Epoch 1/2
 - 34s - loss: 0.4359 - accuracy: 0.7736 - val_loss: 0.2756 - val_accuracy: 0.8862
Epoch 2/2
 - 33s - loss: 0.2091 - accuracy: 0.9178 - val_loss: 0.3009 - val_accuracy: 0.8742
```
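The output shapes and parameter counts reported in the CNN model structure can be reproduced by hand from the hyperparameters in the code. The sketch below is plain arithmetic, not a Keras call. It assumes stride 1 for the convolution, so that `padding='same'` keeps the sequence length, and one bias per filter or unit.

```python
top_words, out_dimension, max_words = 5000, 32, 500
filters, kernel_size, pool_size = 32, 3, 2

# Embedding: one 32-dimensional vector per word index.
embedding_params = top_words * out_dimension

# Conv1D: each filter spans kernel_size steps over all input channels, plus a bias.
conv_params = kernel_size * out_dimension * filters + filters
conv_len = max_words                  # 'same' padding keeps 500 time steps

# MaxPooling1D halves the sequence length and has no weights.
pooled_len = conv_len // pool_size

# Flatten: 250 steps x 32 channels.
flat_units = pooled_len * filters

# Dense layers: inputs x units weights, plus one bias per unit.
dense1_params = flat_units * 250 + 250
dense2_params = 250 * 1 + 1

total = embedding_params + conv_params + dense1_params + dense2_params

print(embedding_params, conv_params, flat_units, dense1_params, dense2_params)
print(total)
```

Almost all of the weights sit in the first dense layer, because it connects the 8000 flattened values to 250 units.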