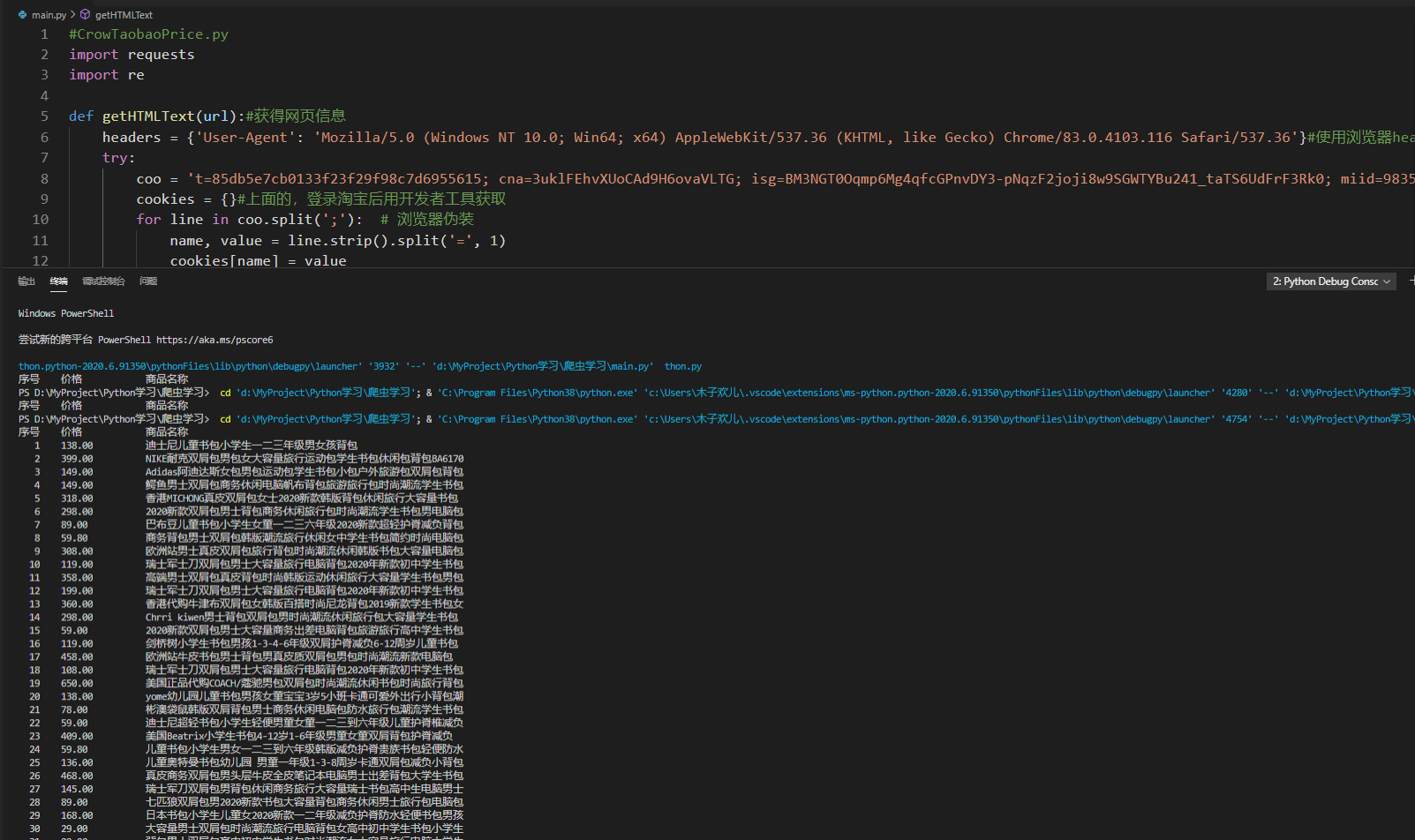

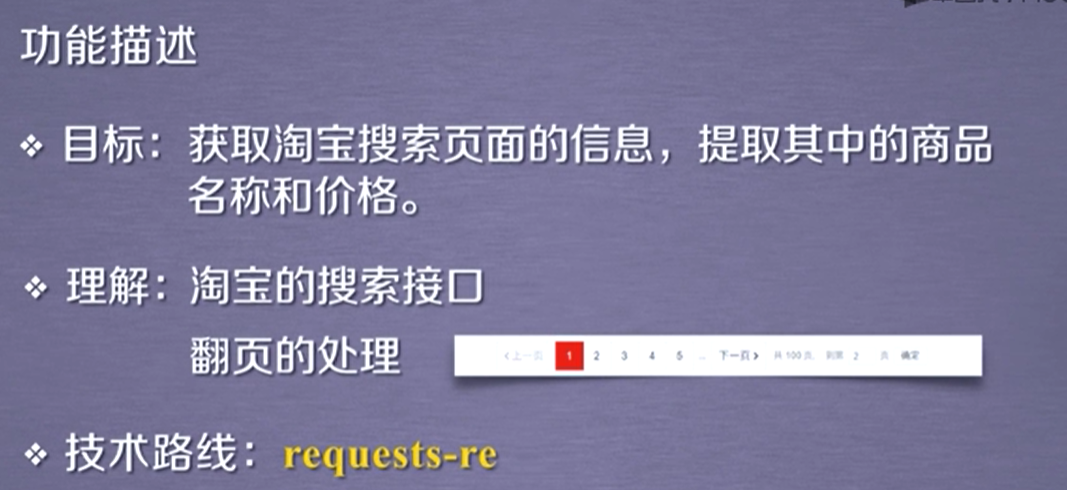

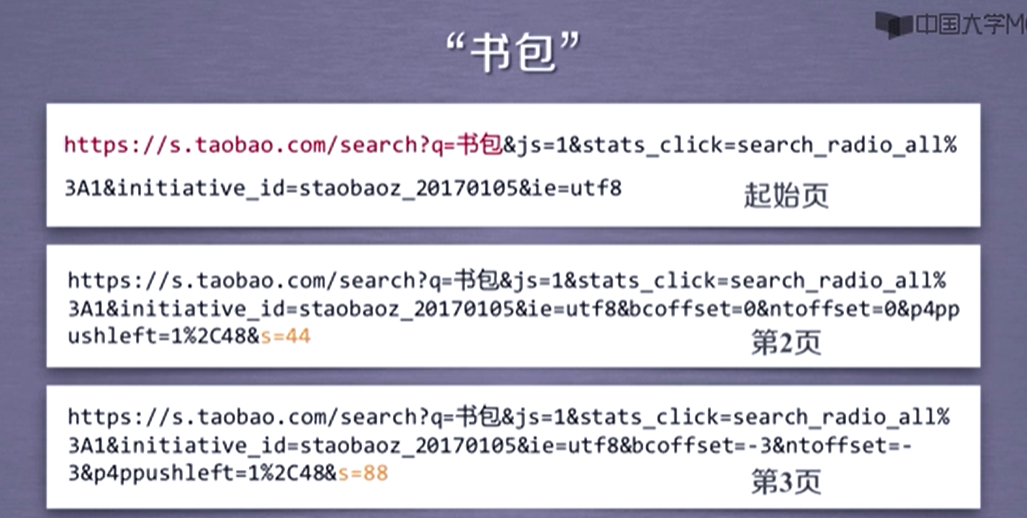

    # CrowTaobaoPrice.py
    import requests
    import re


    def getHTMLText(url):
        """Fetch the page at url; return its text, or "" on any failure."""
        # Send a browser-like User-Agent so the request looks like a normal visit.
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36'}
        try:
            # Cookie string copied from the browser's developer tools after logging in to Taobao.
            coo = 't=85db5e7cb0133f23f29f98c7d6955615; cna=3uklFEhvXUoCAd9H6ovaVLTG; isg=BM3NGT0Oqmp6Mg4qfcGPnvDY3-pNqzF2joji8w9SGWTYBu241_taTS6UdFrF3Rk0; miid=983575671563913813; thw=cn; um=535523100CBE37C36EEFF761CFAC96BC4CD04CD48E6631C3112393F438E181DF6B34171FDA66B2C2CD43AD3E795C914C34A100CE538767508DAD6914FD9E61CE; _cc_=W5iHLLyFfA%3D%3D; tg=0; enc=oRI1V9aX5p%2BnPbULesXvnR%2BUwIh9CHIuErw0qljnmbKe0Ecu1Gxwa4C4%2FzONeGVH9StU4Isw64KTx9EHQEhI2g%3D%3D; hng=CN%7Czh-CN%7CCNY%7C156; mt=ci=0_0; hibext_instdsigdipv2=1; JSESSIONID=EC33B48CDDBA7F11577AA9FEB44F0DF3'
            cookies = {}
            # Split the cookie string into name/value pairs for requests.
            for line in coo.split(';'):
                name, value = line.strip().split('=', 1)
                cookies[name] = value
            r = requests.get(url, cookies=cookies, headers=headers, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except Exception:
            return ""


    def parsePage(ilt, html):
        """Parse the page and append [price, title] pairs to ilt."""
        try:
            plt = re.findall(r'"view_price":"[\d.]*"', html)
            # .*? is a non-greedy match; the matched text is the product name.
            tlt = re.findall(r'"raw_title":".*?"', html)
            for i in range(len(plt)):
                # Keep only the part after the first ':'; eval strips the double quotes.
                price = eval(plt[i].split(':', 1)[1])
                title = eval(tlt[i].split(':', 1)[1])
                ilt.append([price, title])
        except Exception:
            print("")


    def printGoodsList(ilt):
        """Print the collected Taobao product information to the screen."""
        tplt = "{:4} {:8} {:16}"  # output format
        print(tplt.format("No.", "Price", "Title"))
        count = 0
        for g in ilt:
            count = count + 1
            print(tplt.format(count, g[0], g[1]))


    def main():
        goods = '书包'  # search keyword ("school bag")
        depth = 3  # number of result pages to crawl
        start_url = 'https://s.taobao.com/search?q=' + goods
        infoList = []
        for i in range(depth):
            try:
                # Each results page holds 44 items, so the offset s advances by 44.
                url = start_url + '&s=' + str(44 * i)
                html = getHTMLText(url)
                parsePage(infoList, html)
            except Exception:
                continue
        printGoodsList(infoList)


    main()
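
The parsing step can be tried offline, without a network request or a login cookie. Below is a minimal sketch, assuming the page embeds fields shaped like `"view_price":"..."` and `"raw_title":"..."`, as the script above expects. The helper name `extract_goods` and the sample string are hypothetical and not part of the original script. It uses regex capture groups instead of `eval` to drop the quotes.

```python
import re

# Hypothetical patterns: the capture group keeps only the text inside the quotes.
PRICE_RE = re.compile(r'"view_price":"([\d.]*)"')
TITLE_RE = re.compile(r'"raw_title":"(.*?)"')


def extract_goods(html):
    """Return a list of [price, title] pairs found in html (hypothetical helper)."""
    prices = PRICE_RE.findall(html)
    titles = TITLE_RE.findall(html)
    # zip stops at the shorter list, so a missing field cannot raise IndexError.
    return [[price, title] for price, title in zip(prices, titles)]


# Made-up sample data in the assumed shape, not real Taobao output.
sample = (
    '{"raw_title":"Canvas backpack","view_price":"59.00"},'
    '{"raw_title":"Kids school bag","view_price":"128.50"}'
)

for price, title in extract_goods(sample):
    print(price, title)
```

Because the capture groups return plain strings, no scraped text is ever evaluated as Python code, and a title containing a colon is handled without special casing.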

Result