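1. Environment: Spark 2.1.0 + Hadoop + JDK

Set up the environment

Configure the environment variables, then activate them

vim ~/.bashrc   # edit the shell configuration file

Save the file, then reload it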

source ~/.bashrc

Start the services

Hadoop

start-all.sh

Spark

spark-shell

Create the input text file

gedit word.txt

/**

*Spark is good

*Hadoop is good

*Spark is fast

*/

Upload the file to HDFS

hadoop fs -mkdir -p /user/hadoop

hadoop fs -put word.txt /user/hadoop

hadoop fs -cat /user/hadoop/word.txt

Read word.txt on HDFS from the Spark shell

val lines = sc.textFile("hdfs://localhost:9000/user/hadoop/word.txt")

lines.foreach(v =>println(v))

Count the lines in word.txt that contain "Spark"

filter: keeps only the elements for which the given function returns true

val lines = sc.textFile("hdfs://localhost:9000/user/hadoop/word.txt")

val linesWithSpark = lines.filter(line => line.contains("Spark")) // contains is case-sensitive; word.txt spells it "Spark"

println("Lines with Spark: " + linesWithSpark.count())
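The filter step depends on how the search string is capitalised, because String.contains is case-sensitive. The following is a minimal sketch of that behaviour using a plain Scala List in place of the RDD, so it runs without Spark or HDFS. The object name FilterSketch and its field names are made up for illustration, and the three strings are the contents of word.txt shown earlier.

```scala
object FilterSketch {
  // Same three lines as word.txt, held in a local List instead of an RDD.
  val lines: List[String] = List("Spark is good", "Hadoop is good", "Spark is fast")

  // contains is case-sensitive, so the lower-case term matches nothing here.
  val lowerCaseMatches: Int = lines.count(line => line.contains("spark"))

  // Matching the capitalised word finds the two lines that mention Spark.
  val exactMatches: Int = lines.count(line => line.contains("Spark"))

  // Lower-casing each line first makes the match case-insensitive.
  val caseInsensitiveMatches: Int =
    lines.count(line => line.toLowerCase.contains("spark"))
}
```

The same predicates can be passed unchanged to lines.filter(...) on the RDD, since filter only needs a function from String to Boolean.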

Operations on collections such as List and Array

val data = Array(1,2,3,4,5)

val rdd1 = sc.parallelize(data)

val rdd2 = rdd1.map(x => x+10)

rdd2.foreach(v =>println(v))

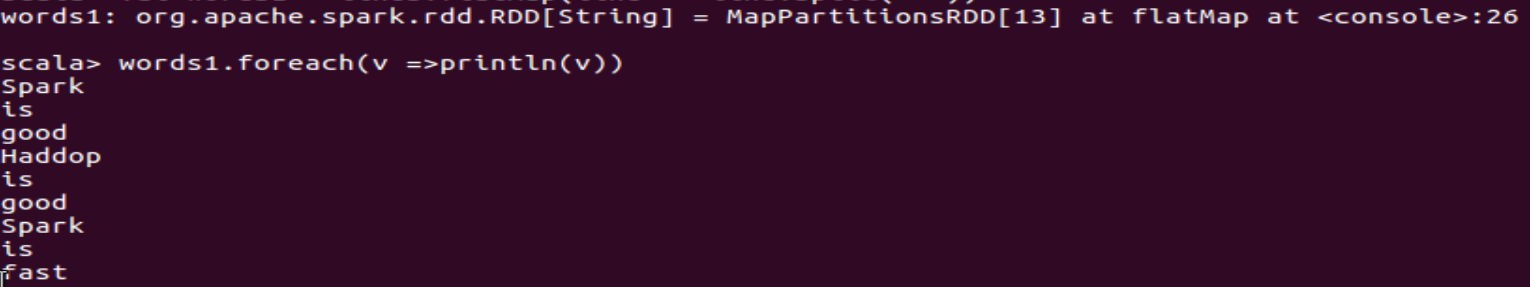

Split the text on spaces (" ")

val lines = sc.textFile("hdfs://localhost:9000/user/hadoop/word.txt")

lines.foreach(v=>println(v))

val words = lines.map(line => line.split(" "))

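words.foreach(v => println(v)) // each element is an Array[String], so this prints array references, not the words

words.foreach(v => v.foreach(t => println(t))) // print every word inside each array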

val words1 = lines.flatMap(line => line.split(" "))

words1.foreach(v =>println(v))

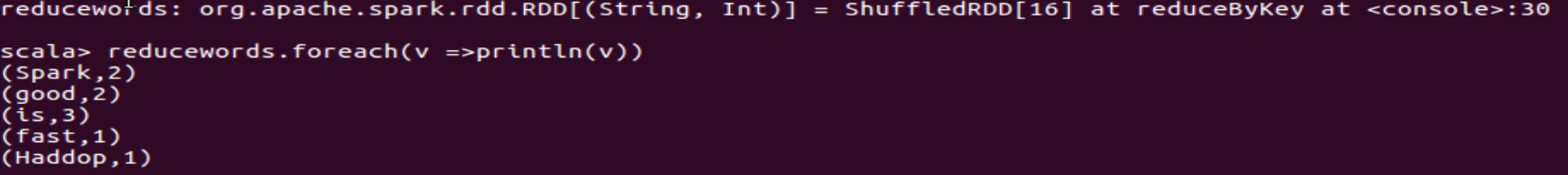
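The difference between map and flatMap in the step above can be reproduced on a local collection. This is a small sketch under the assumption that plain Scala Lists stand in for the RDD; SplitSketch and its field names are hypothetical, and the per-line arrays are converted to List so the results print readably.

```scala
object SplitSketch {
  // Contents of word.txt as a local List.
  val lines: List[String] = List("Spark is good", "Hadoop is good", "Spark is fast")

  // map keeps one element per input line: three lists of three words each.
  val nested: List[List[String]] = lines.map(line => line.split(" ").toList)

  // flatMap concatenates the per-line results into a single list of words.
  val flat: List[String] = lines.flatMap(line => line.split(" ").toList)
}
```

With map the result still has three elements (one per line), while flatMap yields nine separate words, which is the shape needed for counting word frequencies.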

Count word frequencies

val wordPairsRdd = words1.map(word => (word,1))

wordPairsRdd.foreach(v => println(v))

val groupwords = wordPairsRdd.groupByKey() // group the values by key

groupwords.foreach(v => println(v))

val reducewords = wordPairsRdd.reduceByKey((a,b) => a+b)

reducewords.foreach(v =>println(v))
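To see what the grouping and reducing steps produce for this input, the same logic can be imitated with local Scala collections. This is only an approximation of groupByKey and reduceByKey, not how Spark executes them; WordCountSketch and its field names are illustrative, and the word list is the flatMap result for word.txt.

```scala
object WordCountSketch {
  // The nine words obtained by splitting word.txt on spaces.
  val words: List[String] =
    List("Spark", "is", "good", "Hadoop", "is", "good", "Spark", "is", "fast")

  // Pair every word with 1, as in wordPairsRdd.
  val pairs: List[(String, Int)] = words.map(word => (word, 1))

  // Comparable to groupByKey: collect all the 1s that belong to each word.
  val grouped: Map[String, List[Int]] =
    pairs.groupBy(_._1).map { case (word, ps) => (word, ps.map(_._2)) }

  // Comparable to reduceByKey((a, b) => a + b): combine each word's values into one count.
  val counts: Map[String, Int] =
    grouped.map { case (word, ones) => (word, ones.reduce((a, b) => a + b)) }
}
```

In Spark, reduceByKey is usually the better choice for counting, because it combines values within each partition before shuffling, whereas groupByKey sends every individual pair across the network first.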

Common RDD actions in Spark

val rdd = sc.parallelize(Array(1,2,3,4,5,6))

rdd.count()

rdd.first()

rdd.take(4)

rdd.reduce((a,b) => a+b)

rdd.collect()
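As a rough check of what these actions return, the sketch below applies the closest local-collection counterparts to the same six numbers. It assumes a plain Scala List instead of an RDD, and ActionsSketch and its field names are invented for this example. Note that on an RDD, count() returns a Long and take(4) and collect() return Arrays.

```scala
object ActionsSketch {
  // Same data as sc.parallelize(Array(1,2,3,4,5,6)), kept as a local List.
  val data: List[Int] = List(1, 2, 3, 4, 5, 6)

  val count: Int = data.length                // counterpart of rdd.count()
  val first: Int = data.head                  // counterpart of rdd.first()
  val firstFour: List[Int] = data.take(4)     // counterpart of rdd.take(4)
  val sum: Int = data.reduce((a, b) => a + b) // counterpart of rdd.reduce((a,b) => a+b)
}
```

collect() has no local counterpart here because the data is already on the driver; on an RDD it brings every element back to the driver, so it is best kept for small results.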