Step 1: Check whether a JDK is already installed on this machine, and remove it if one is

# First, check which JDK packages are already installed
[root@localhost ~]# rpm -qa | grep java
java-1.7.0-openjdk-1.7.0.191-2.6.15.5.el7.x86_64
python-javapackages-3.4.1-11.el7.noarch
java-1.7.0-openjdk-headless-1.7.0.191-2.6.15.5.el7.x86_64
java-1.8.0-openjdk-headless-1.8.0.181-7.b13.el7.x86_64
tzdata-java-2018e-3.el7.noarch
javapackages-tools-3.4.1-11.el7.noarch
java-1.8.0-openjdk-1.8.0.181-7.b13.el7.x86_64
# Remove the installed OpenJDK packages
[root@localhost ~]# rpm -e --nodeps java-1.7.0-openjdk-1.7.0.191-2.6.15.5.el7.x86_64
[root@localhost ~]# rpm -e --nodeps java-1.7.0-openjdk-headless-1.7.0.191-2.6.15.5.el7.x86_64
[root@localhost ~]# rpm -e --nodeps java-1.8.0-openjdk-1.8.0.181-7.b13.el7.x86_64
[root@localhost ~]# rpm -e --nodeps java-1.8.0-openjdk-headless-1.8.0.181-7.b13.el7.x86_64

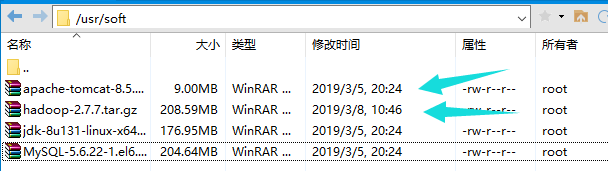
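The removal can also be scripted. This is a minimal sketch that assumes only packages named `java-<version>-openjdk...` should be removed; helper packages such as `tzdata-java` and `javapackages-tools` are kept. `openjdk_packages` and `remove_openjdk` are hypothetical helper names, and with `DRY_RUN=1` (the default here) the `rpm -e` commands are only printed.

```bash
#!/usr/bin/env bash
# Sketch: remove preinstalled OpenJDK packages before installing the Oracle JDK.
# Assumption: only packages named java-<version>-openjdk* are removed.

# Read package names on stdin; print only the OpenJDK ones.
openjdk_packages() {
  grep -E '^java-[0-9.]+-openjdk' || true
}

# Remove every installed OpenJDK package.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
remove_openjdk() {
  local pkg
  rpm -qa | openjdk_packages | while read -r pkg; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "rpm -e --nodeps $pkg"
    else
      rpm -e --nodeps "$pkg"
    fi
  done
}
```

Run `remove_openjdk` first to review the list, then `DRY_RUN=0 remove_openjdk` as root.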

Step 2: Put the required installation packages into a soft folder (create the soft folder under /usr first)
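This step amounts to creating /usr/soft and copying the archives into it. A small sketch of a check for that: `check_staging` is a hypothetical helper, and the file names are assumed to be the two archives used later in this guide (the JDK 8u131 and Hadoop 2.7.7 tarballs).

```bash
#!/usr/bin/env bash
# Sketch: create the staging folder and report any missing installer.
# Assumption: the file names match the versions used in this guide.
REQUIRED_FILES=(jdk-8u131-linux-x64.tar.gz hadoop-2.7.7.tar.gz)

check_staging() {
  local dir="${1:-/usr/soft}" missing=0 f
  mkdir -p "$dir"                 # create /usr/soft if it does not exist yet
  for f in "${REQUIRED_FILES[@]}"; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      missing=1
    fi
  done
  return "$missing"               # 0 only when every archive is present
}
```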

Step 3: Extract the JDK and Hadoop

// Extract the JDK and configure its environment variables

# Extract the JDK
[root@localhost ~]# cd /usr/soft
[root@localhost soft]# ls
apache-tomcat-8.5.20.tar.gz  MySQL-5.6.22-1.el6.i686.rpm-bundle.tar  jdk-8u131-linux-x64.tar.gz
[root@localhost soft]# tar -xvf jdk-8u131-linux-x64.tar.gz -C /usr/local
# Configure the environment variables (open the file and append the following at the end)
[root@localhost ~]# vim /etc/profile

#set java environment
JAVA_HOME=/usr/local/jdk1.8.0_131
CLASSPATH=.:$JAVA_HOME/lib/tools.jar
PATH=$JAVA_HOME/bin:$PATH
export JAVA_HOME CLASSPATH PATH
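Appending to /etc/profile by hand is easy to do twice by accident. A minimal sketch that appends the block above only once, assuming the `#set java environment` line is used as a marker for earlier runs; `append_java_env` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: append the Java environment block to a profile file exactly once.
# The "#set java environment" line doubles as a marker for earlier runs.
append_java_env() {
  local profile="$1" java_home="$2"
  local marker="#set java environment"
  if grep -qxF "$marker" "$profile" 2>/dev/null; then
    return 0   # already configured: leave the file unchanged
  fi
  # $marker and $java_home expand now; \$JAVA_HOME and \$PATH stay literal.
  cat >>"$profile" <<EOF
$marker
JAVA_HOME=$java_home
CLASSPATH=.:\$JAVA_HOME/lib/tools.jar
PATH=\$JAVA_HOME/bin:\$PATH
export JAVA_HOME CLASSPATH PATH
EOF
}
```

Usage: `append_java_env /etc/profile /usr/local/jdk1.8.0_131 && source /etc/profile`.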

// Extract Hadoop and configure its environment variables (initial configuration)

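# Copy the Hadoop tarball into /usr/soft
# Extract Hadoop into /usr/local/hadoop (tar -C requires the target directory to exist, so create it first)
[root@localhost soft]# mkdir -p /usr/local/hadoop
[root@localhost soft]# tar -xvf hadoop-2.7.7.tar.gz -C /usr/local/hadoop
# Configure the environment variables
[root@localhost ~]# vi /etc/profile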

# hadoop
export HADOOP_HOME=/usr/local/hadoop/hadoop-2.7.7
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
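The extraction and the HADOOP_HOME value can be tied together. A minimal sketch, assuming the archive's top-level directory has the same name as the file without `.tar.gz` (the case for the Apache hadoop-2.7.7.tar.gz release); `install_hadoop` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: extract a Hadoop release tarball and print the matching HADOOP_HOME.
# Assumption: the archive's top-level directory is named like the file
# without .tar.gz (hadoop-2.7.7.tar.gz -> hadoop-2.7.7).
install_hadoop() {
  local tarball="$1" prefix="${2:-/usr/local/hadoop}" name
  mkdir -p "$prefix"                       # tar -C fails if the directory is missing
  tar -xzf "$tarball" -C "$prefix" || return 1
  name=$(basename "$tarball" .tar.gz)      # strip the directory and the suffix
  if [ ! -x "$prefix/$name/bin/hadoop" ]; then
    echo "bin/hadoop not found under $prefix/$name" >&2
    return 1
  fi
  echo "$prefix/$name"                     # use this value as HADOOP_HOME
}
```

Usage: `install_hadoop /usr/soft/hadoop-2.7.7.tar.gz` should print `/usr/local/hadoop/hadoop-2.7.7`, the value used for HADOOP_HOME above.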

// After configuring the JDK and Hadoop environment variables, reload the profile

[root@localhost ~]# source /etc/profile

// Check that the environment is configured correctly

########## Check that Java is installed
[root@doaoao /]# java
Usage: java [-options] class [args...]
           (to execute a class)
   or  java [-options] -jar jarfile [args...]
           (to execute a jar file)
where options include:
    -d32          use a 32-bit data model if available
    -d64          use a 64-bit data model if available
    -server       to select the "server" VM
                  The default VM is server.
    -cp <class search path of directories and zip/jar files>
    -classpath <class search path of directories and zip/jar files>
                  A : separated list of directories, JAR archives,
                  and ZIP archives to search for class files.
    -D<name>=<value>
                  set a system property
    -verbose:[class|gc|jni]
                  enable verbose output
    -version      print product version and exit
    -version:<value>
                  Warning: this feature is deprecated and will be removed
                  in a future release.
                  require the specified version to run
    -showversion  print product version and continue
    -jre-restrict-search | -no-jre-restrict-search
                  Warning: this feature is deprecated and will be removed
                  in a future release.
                  include/exclude user private JREs in the version search
    -? -help      print this help message
    -X            print help on non-standard options
    -ea[:<packagename>...|:<classname>]
    -enableassertions[:<packagename>...|:<classname>]
                  enable assertions with specified granularity
    -da[:<packagename>...|:<classname>]
    -disableassertions[:<packagename>...|:<classname>]
                  disable assertions with specified granularity
    -esa | -enablesystemassertions
                  enable system assertions
    -dsa | -disablesystemassertions
                  disable system assertions
    -agentlib:<libname>[=<options>]
                  load native agent library <libname>, e.g. -agentlib:hprof
                  see also, -agentlib:jdwp=help and -agentlib:hprof=help
    -agentpath:<pathname>[=<options>]
                  load native agent library by full pathname
    -javaagent:<jarpath>[=<options>]
                  load Java programming language agent, see java.lang.instrument
    -splash:<imagepath>
                  show splash screen with specified image
See http://www.oracle.com/technetwork/java/javase/documentation/index.html for more details.
[root@doaoao /]# java -version
java version "1.8.0_131"
Java(TM) SE Runtime Environment (build 1.8.0_131-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.131-b11, mixed mode)
######### Check that Hadoop is installed
[root@doaoao /]# hadoop
/usr/local/hadoop/hadoop-2.7.7/etc/hadoop/hadoop-env.sh: line 24: The: command not found
Usage: hadoop [--config confdir] [COMMAND | CLASSNAME]
  CLASSNAME            run the class named CLASSNAME
 or
  where COMMAND is one of:
  fs                   run a generic filesystem user client
  version              print the version
  jar <jar>            run a jar file
                       note: please use "yarn jar" to launch
                             YARN applications, not this command.
  checknative [-a|-h]  check native hadoop and compression libraries availability
  distcp <srcurl> <desturl> copy file or directories recursively
  archive -archiveName NAME -p <parent path> <src>* <dest> create a hadoop archive
  classpath            prints the class path needed to get the
  credential           interact with credential providers
                       Hadoop jar and the required libraries
  daemonlog            get/set the log level for each daemon
  trace                view and modify Hadoop tracing settings
Most commands print help when invoked w/o parameters.

The "line 24: The: command not found" message means the shell is executing line 24 of hadoop-env.sh as a command, which usually happens when a comment line there has lost its leading #. Restoring the # removes the message.
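To repeat this check on every node (for example after the files are distributed in Step 8), a small helper can test both the PATH entry and the home variable. A sketch only: `check_tool` is a hypothetical name, and it checks that the command and directory exist, not which version is installed.

```bash
#!/usr/bin/env bash
# Sketch: check that a command resolves on PATH and that its home variable
# (passed by name, e.g. JAVA_HOME) points at an existing directory.
check_tool() {
  local cmd="$1" home_var="$2" home
  home="${!home_var}"      # indirect expansion: value of the named variable
  if ! command -v "$cmd" >/dev/null 2>&1; then
    echo "FAIL: $cmd not on PATH"
    return 1
  fi
  if [ -z "$home" ] || [ ! -d "$home" ]; then
    echo "FAIL: $home_var is unset or not a directory"
    return 1
  fi
  echo "OK: $cmd -> $(command -v "$cmd") ($home_var=$home)"
}
```

Usage: `source /etc/profile; check_tool java JAVA_HOME && check_tool hadoop HADOOP_HOME`.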

Step 4 (supplement): Change the hostname

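# 1. Check the current hostname (the "Static hostname" field)
[root@doaoao /]# hostnamectl
   Static hostname: doaoao
         Icon name: computer-vm
           Chassis: vm
        Machine ID: 51ba8fcff16947be99cc12250cfd754a
           Boot ID: fe59876c79f64ed8939212b59a3e121a
    Virtualization: vmware
  Operating System: CentOS Linux 7 (Core)
       CPE OS Name: cpe:/o:centos:centos:7
            Kernel: Linux 3.10.0-957.el7.x86_64
      Architecture: x86-64
# 2. Change the hostname (set-hostname writes the static hostname to /etc/hostname, so the change persists across reboots)
[root@doaoao /]# hostnamectl set-hostname doaoao
# 3. Check the pretty, static and transient hostnames
[root@doaoao /]# hostnamectl --pretty      # pretty hostname
[root@doaoao /]# hostnamectl --static      # static hostname
doaoao
[root@doaoao /]# hostnamectl --transient   # transient hostname
doaoao
# 4. Edit the hosts file
[root@doaoao /]# vim /etc/hosts
# 127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
127.0.0.1   doaoao
# ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
::1         doaoao
# 5. Reboot the virtual machine for the change to take effect
[root@doaoao /]# reboot

Note: mapping the hostname to 127.0.0.1 as above only suits a single machine. In the cluster, doaoao must resolve to its LAN address (see the supplementary hosts file below); otherwise the NameNode, whose address comes from fs.defaultFS, listens only on the loopback interface and the slave nodes cannot reach it.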
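Whether a hostname ends up on a loopback address can be checked with `getent hosts`. A sketch only: `is_loopback` and `check_hostname_ip` are hypothetical helper names, and the check looks only at the first address getent returns.

```bash
#!/usr/bin/env bash
# Sketch: warn if a hostname resolves to a loopback address.

# Return 0 if the argument is an IPv4 loopback (127.x.x.x) or IPv6 ::1.
is_loopback() {
  case "$1" in
    127.*|::1) return 0 ;;
    *) return 1 ;;
  esac
}

# Resolve a hostname (default: this machine's) and report the first address.
check_hostname_ip() {
  local host="${1:-$(hostname)}" ip
  ip=$(getent hosts "$host" | awk '{ print $1; exit }')
  if [ -z "$ip" ]; then
    echo "WARN: $host does not resolve"
    return 1
  fi
  if is_loopback "$ip"; then
    echo "WARN: $host resolves to loopback address $ip"
    return 1
  fi
  echo "OK: $host -> $ip"
}
```

Usage: `check_hostname_ip doaoao` on each node.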

Supplement: the hosts file

# This file maps each node's hostname to its IP address, so that when HDFS is started with start-dfs.sh the master can reach and start every DataNode by name (use the same entries on every node)
[root@localhost html]# vim /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.37.138 doaoao
192.168.37.139 OLDTWO
192.168.37.140 OLDTHREE
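Since every node needs the same entries, adding them with a script avoids duplicates when it is run more than once. A minimal sketch: `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and the addresses are the ones used in this guide.

```bash
#!/usr/bin/env bash
# Sketch: add "IP hostname" lines to a hosts file, skipping names that an
# uncommented line already maps.

add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Compare the name against whole fields 2..NF of non-comment lines,
  # so "doaoao" does not match "doaoao2".
  if awk -v n="$name" '
      !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >>"$file"
}

# The cluster nodes from this guide.
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry "$file" 192.168.37.138 doaoao
  add_host_entry "$file" 192.168.37.139 OLDTWO
  add_host_entry "$file" 192.168.37.140 OLDTHREE
}
```

Usage: run `add_cluster_hosts` as root on each node.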

Step 5: Configure each node's hostname

# Master node
[root@doaoao /]# vim /etc/sysconfig/network
# Created by anaconda
NETWORKING=yes
HOSTNAME=doaoao
# Slave node OLDTWO
# Created by anaconda
NETWORKING=yes
HOSTNAME=OLDTWO
# Slave node OLDTHREE
# Created by anaconda
NETWORKING=yes
HOSTNAME=OLDTHREE
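The same edit can be made on each node without an editor. A sketch only: `set_network_hostname` is a hypothetical helper that replaces an existing `HOSTNAME=` line or appends one. On CentOS 7 the running hostname itself is managed by `hostnamectl set-hostname` (Step 4), which should also be run on each slave with that slave's name.

```bash
#!/usr/bin/env bash
# Sketch: set HOSTNAME= in a sysconfig-style network file, replacing an
# existing HOSTNAME line or appending one if none exists.
set_network_hostname() {
  local file="$1" name="$2"
  if grep -q '^HOSTNAME=' "$file" 2>/dev/null; then
    sed -i "s/^HOSTNAME=.*/HOSTNAME=$name/" "$file"
  else
    printf 'HOSTNAME=%s\n' "$name" >>"$file"
  fi
}
```

Usage on OLDTWO, for example: `set_network_hostname /etc/sysconfig/network OLDTWO && hostnamectl set-hostname OLDTWO`.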

Step 6: Configure passwordless SSH access

# Generate a key pair
[root@doaoao /]# cd ~/.ssh
[root@doaoao .ssh]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
/root/.ssh/id_rsa already exists.
Overwrite (y/n)? y
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:fUwvgX6xqOIPhsq2/RifFkiPxZiRuoCag78iPq7dFYc root@doaoao
The key's randomart image is:
(randomart image omitted)
// This creates two files in /root/.ssh/: id_rsa (the private key) and id_rsa.pub (the public key)
# Copy the master's public key into authorized_keys
[root@doaoao .ssh]# cp id_rsa.pub authorized_keys
# Generate a key pair on each slave node in the same way
# Copy each slave's public key to the master
[root@OLDTWO .ssh]# scp ~/.ssh/id_rsa.pub root@doaoao:~/.ssh/id_rsa_OLDTWO.pub
[root@OLDTHREE .ssh]# scp ~/.ssh/id_rsa.pub root@doaoao:~/.ssh/id_rsa_OLDTHREE.pub
# On the master, merge the slaves' public keys into authorized_keys
[root@doaoao .ssh]# cat id_rsa_OLDTWO.pub >> authorized_keys
[root@doaoao .ssh]# cat id_rsa_OLDTHREE.pub >> authorized_keys
# Copy the merged authorized_keys back to every slave so the master can log in to them without a password
[root@doaoao .ssh]# scp authorized_keys root@OLDTWO:~/.ssh/
[root@doaoao .ssh]# scp authorized_keys root@OLDTHREE:~/.ssh/
# Test passwordless login
[root@doaoao .ssh]# ssh OLDTWO
Last login: Wed Mar 13 17:53:13 2019 from 192.168.65.128
[root@OLDTWO ~]# exit
logout
Connection to oldtwo closed.
[root@doaoao .ssh]# ssh OLDTHREE
Last login: Wed Mar 13 17:53:24 2019 from 192.168.65.128
[root@OLDTHREE ~]#
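OpenSSH's `ssh-copy-id` can replace the manual copy-and-append steps: it appends a public key to the remote user's `~/.ssh/authorized_keys`. A sketch, assuming root logins and the three hostnames from /etc/hosts; `distribute_key` is a hypothetical helper, and with `DRY_RUN=1` (the default here) it only prints the commands. Run it on every node if each node must reach every other one.

```bash
#!/usr/bin/env bash
# Sketch: copy this node's public key to every node in the cluster.
# Assumption: root logins, hostnames resolvable via /etc/hosts.
CLUSTER_NODES=(doaoao OLDTWO OLDTHREE)

distribute_key() {
  local key="${1:-$HOME/.ssh/id_rsa.pub}" node
  for node in "${CLUSTER_NODES[@]}"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh-copy-id -i $key root@$node"   # preview only
    else
      ssh-copy-id -i "$key" "root@$node"     # prompts for each node's password once
    fi
  done
}
```

Usage: `DRY_RUN=0 distribute_key`, then check with `ssh OLDTWO`.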

Step 7: Edit the Hadoop configuration files

The configuration files are in hadoop-2.7.7/etc/hadoop (that is, $HADOOP_HOME/etc/hadoop). The files to edit are:

hadoop-env.sh, yarn-env.sh, core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml, slaves

First, change into the directory that holds the Hadoop configuration files:

[root@doaoao /]# cd /usr/local/hadoop/hadoop-2.7.7/etc/hadoop
[root@doaoao hadoop]#

// [root@doaoao hadoop]# vim hadoop-env.sh

# The java implementation to use.
export JAVA_HOME=/usr/local/jdk1.8.0_131

// [root@doaoao hadoop]# vim yarn-env.sh

# some Java parameters
export JAVA_HOME=/usr/local/jdk1.8.0_131
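Setting JAVA_HOME explicitly in these two files matters because the start scripts typically launch daemons on other nodes over SSH, in shells that may not read /etc/profile. A minimal sketch that makes the edit without an editor; `set_java_home_in` is a hypothetical helper that replaces an uncommented `export JAVA_HOME=` line or appends one if the file only has a commented-out default.

```bash
#!/usr/bin/env bash
# Sketch: point hadoop-env.sh / yarn-env.sh at the JDK without opening an editor.
set_java_home_in() {
  local file="$1" java_home="$2"
  if grep -q '^export JAVA_HOME=' "$file"; then
    # Replace the existing uncommented line (| as sed delimiter: paths contain /).
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$file"
  else
    # Only a commented-out default exists: append a new line.
    printf 'export JAVA_HOME=%s\n' "$java_home" >>"$file"
  fi
}
```

Usage: `for f in hadoop-env.sh yarn-env.sh; do set_java_home_in "$f" /usr/local/jdk1.8.0_131; done`.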

// [root@doaoao hadoop]# vim core-site.xml

# Add the following properties, replacing doaoao with your master node's hostname
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://doaoao:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/temp</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>
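Once `$HADOOP_HOME/bin` is on PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually resolves. For a quick look before that, a rough text-level check works on files laid out like the one above. A sketch only: `get_prop` is a hypothetical helper, not an XML parser, and it assumes each `<name>` is directly followed by its `<value>`.

```bash
#!/usr/bin/env bash
# Sketch: print the <value> of a property from a Hadoop *-site.xml file.
# Text-based, not an XML parser: it joins the file into one line and
# expects <name>KEY</name> to be followed directly by <value>...</value>.
get_prop() {
  local file="$1" key="$2"
  tr -d '\n' <"$file" |
    sed -n "s|.*<name>$key</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}
```

Usage: `get_prop core-site.xml fs.defaultFS` should print `hdfs://doaoao:9000`.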

// [root@doaoao hadoop]# vim hdfs-site.xml

# Replace doaoao with your master node's hostname
<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>doaoao:9001</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.web.ugi</name>
    <value>supergroup</value>
  </property>
</configuration>

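// Hadoop only reads mapred-site.xml, so copy the template first and edit the copy
// [root@doaoao hadoop]# cp mapred-site.xml.template mapred-site.xml
// [root@doaoao hadoop]# vim mapred-site.xml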

# Replace doaoao with your master node's hostname
<configuration>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>doaoao:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>doaoao:19888</value>
  </property>
</configuration>
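The copy-and-edit can also be done in one go. A sketch only: `write_mapred_site` is a hypothetical helper that overwrites mapred-site.xml with the two job-history properties above, plus `mapreduce.framework.name=yarn`, which is an assumption added here. Hadoop's default for that key is `local`, so without it MapReduce jobs do not run on YARN.

```bash
#!/usr/bin/env bash
# Sketch: write mapred-site.xml for a given master hostname.
# Assumption: mapreduce.framework.name=yarn is wanted (Hadoop defaults to "local").
write_mapred_site() {
  local conf_dir="$1" master="$2"
  cat >"$conf_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>$master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>$master:19888</value>
  </property>
</configuration>
EOF
}
```

Usage: `write_mapred_site /usr/local/hadoop/hadoop-2.7.7/etc/hadoop doaoao`.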

// [root@doaoao hadoop]# vim yarn-site.xml

# Replace doaoao with your master node's hostname
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>doaoao:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>doaoao:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>doaoao:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>doaoao:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>doaoao:8088</value>
  </property>
</configuration>

// [root@doaoao hadoop]# vim slaves

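# Add the master and slave hostnames, one per line (delete the original localhost line)
doaoao
OLDTWO
OLDTHREE

Here doaoao is the master (listing it here also runs a DataNode on it), and OLDTWO and OLDTHREE are the slaves.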
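The slaves file also bounds the useful `dfs.replication` set in hdfs-site.xml: HDFS stores each replica of a block on a different DataNode, so a replication factor larger than the number of DataNodes leaves blocks under-replicated. A sketch of a check; `check_replication` is a hypothetical helper that counts non-blank lines in the slaves file.

```bash
#!/usr/bin/env bash
# Sketch: compare dfs.replication with the number of hosts in the slaves file.
check_replication() {
  local slaves="$1" replication="$2" nodes
  nodes=$(grep -c '[^[:space:]]' "$slaves")   # count non-blank lines
  if [ "$replication" -gt "$nodes" ]; then
    echo "WARN: dfs.replication=$replication but only $nodes DataNode(s) in $slaves"
    return 1
  fi
  echo "OK: $nodes DataNode(s), dfs.replication=$replication"
}
```

Usage: `check_replication slaves 2` in the configuration directory.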

Step 8: Distribute the files to the slave nodes (install them on the slaves)

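## Distribute Hadoop to the slave nodes (into /usr/local/hadoop, to match HADOOP_HOME in /etc/profile)
# Slave OLDTWO
[root@doaoao .ssh]# ssh root@OLDTWO mkdir -p /usr/local/hadoop
[root@doaoao .ssh]# scp -r /usr/local/hadoop/hadoop-2.7.7 root@OLDTWO:/usr/local/hadoop/
# Slave OLDTHREE
[root@doaoao .ssh]# ssh root@OLDTHREE mkdir -p /usr/local/hadoop
[root@doaoao .ssh]# scp -r /usr/local/hadoop/hadoop-2.7.7 root@OLDTHREE:/usr/local/hadoop/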

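## Send the profile to the slave nodes
# Slave OLDTWO
[root@doaoao .ssh]# scp /etc/profile root@OLDTWO:/etc/
profile                                  100% 2083   989.5KB/s   00:00
# Slave OLDTHREE
[root@doaoao .ssh]# scp /etc/profile root@OLDTHREE:/etc/
profile                                  100% 2083     1.7MB/s   00:00
# Run source /etc/profile on each slave (or log in again) for the variables to take effect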

## Send the JDK to the slave nodes
# Slave OLDTWO
[root@doaoao .ssh]# scp -r /usr/local/jdk1.8.0_131 root@OLDTWO:/usr/local
# Slave OLDTHREE
[root@doaoao .ssh]# scp -r /usr/local/jdk1.8.0_131 root@OLDTHREE:/usr/local
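The three distribution steps can be run in one loop over the slaves. A sketch under the paths used in this guide; `run` and `distribute_to_slaves` are hypothetical helper names, and with `DRY_RUN=1` (the default here) the commands are only printed.

```bash
#!/usr/bin/env bash
# Sketch: push Hadoop, the JDK and /etc/profile to every slave in one loop.
# Paths follow this guide; DRY_RUN=1 (default) only prints the commands.
SLAVES=(OLDTWO OLDTHREE)
HADOOP_DIR=/usr/local/hadoop/hadoop-2.7.7
JDK_DIR=/usr/local/jdk1.8.0_131

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_to_slaves() {
  local node
  for node in "${SLAVES[@]}"; do
    run ssh "root@$node" mkdir -p /usr/local/hadoop   # parent of HADOOP_HOME
    run scp -r "$HADOOP_DIR" "root@$node:/usr/local/hadoop/"
    run scp -r "$JDK_DIR" "root@$node:/usr/local/"
    run scp /etc/profile "root@$node:/etc/"
  done
}
```

Usage: review `distribute_to_slaves`, then run `DRY_RUN=0 distribute_to_slaves` on the master.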

To be added: configuring a static IP