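1. Download Hadoop

(Prerequisite: install a Java environment first.)

Download from http://hadoop.apache.org/releases.html (choose the binary package; the source package contains only the source code).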

2. Extract and configure

Extract the archive, confirm JAVA_HOME, and set it in hadoop-env.sh:

    tar xzvf hadoop-2.10.0.tar.gz
    [root@192-168-22-220 hadoop-2.10.0]# echo $JAVA_HOME
    /usr/java/jdk1.8.0_231-amd64
    [root@192-168-22-220 hadoop-2.10.0]# vi /home/hadoop-2.10.0/etc/hadoop/hadoop-env.sh
    export JAVA_HOME=/usr/java/jdk1.8.0_231-amd64

[root@192-168-22-220 hadoop-2.10.0]# vi /home/hadoop-2.10.0/etc/hadoop/yarn-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_231-amd64
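The same JAVA_HOME edit can be made without opening an editor. The following is a minimal sketch, assuming GNU sed (for `-i`); `set_java_home` is a hypothetical helper name, and the demonstration runs on a scratch file rather than the real hadoop-env.sh.

```shell
#!/bin/sh
# set_java_home FILE JAVA_HOME_PATH (hypothetical helper)
# Replaces an existing "export JAVA_HOME=..." line in FILE,
# or appends one if the file has no such line.
set_java_home() {
    file=$1
    java_home=$2
    if grep -q '^export JAVA_HOME=' "$file"; then
        # '|' is used as the sed delimiter because the path contains '/'.
        sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$file"
    else
        printf 'export JAVA_HOME=%s\n' "$java_home" >> "$file"
    fi
}

# Demonstration on a scratch file (sample content, not the real env script).
demo=$(mktemp)
printf '%s\n' '# sample env file' 'export JAVA_HOME=${JAVA_HOME}' > "$demo"
set_java_home "$demo" /usr/java/jdk1.8.0_231-amd64
cat "$demo"
```

To apply it for real, the helper would be called once for etc/hadoop/hadoop-env.sh and once for etc/hadoop/yarn-env.sh.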

Check the hostname, create the temporary directory, and edit core-site.xml:

    [root@192-168-22-220 hadoop-2.10.0]# hostname
    192-168-22-220
    [root@192-168-22-220 ~]# mkdir /home/hadoop-2.10.0/tmp
    [root@192-168-22-220 ~]# vi /home/hadoop-2.10.0/etc/hadoop/core-site.xml
    <configuration>
      <!-- Address of the HDFS master (NameNode) -->
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
      </property>
      <!-- Directory for files Hadoop generates at runtime -->
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/hadoop-2.10.0/tmp</value>
      </property>
    </configuration>
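For a scripted setup, core-site.xml can be written with a here-document instead of vi. This is a sketch only: `write_core_site` is a hypothetical helper, and the demonstration writes into a scratch directory so that it does not touch a real installation.

```shell
#!/bin/sh
# write_core_site CONF_DIR TMP_DIR (hypothetical helper)
# Creates both directories if needed and writes CONF_DIR/core-site.xml
# with the two properties used in this guide.
write_core_site() {
    conf_dir=$1
    tmp_dir=$2
    mkdir -p "$conf_dir" "$tmp_dir"
    cat > "$conf_dir/core-site.xml" <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$tmp_dir</value>
  </property>
</configuration>
EOF
}

# Demonstration in a scratch directory.
scratch=$(mktemp -d)
write_core_site "$scratch/etc/hadoop" "$scratch/tmp"
cat "$scratch/etc/hadoop/core-site.xml"
```

Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` should print the value that Hadoop actually picked up.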

Create the NameNode and DataNode directories and edit hdfs-site.xml:

    [root@192-168-22-220 hadoop-2.10.0]# mkdir /home/hadoop-2.10.0/hdfs
    [root@192-168-22-220 hadoop-2.10.0]# mkdir /home/hadoop-2.10.0/hdfs/name
    [root@192-168-22-220 hadoop-2.10.0]# mkdir /home/hadoop-2.10.0/hdfs/data
    [root@192-168-22-220 hadoop-2.10.0]# vi /home/hadoop-2.10.0/etc/hadoop/hdfs-site.xml
    <configuration>
      <property>
        <name>dfs.name.dir</name>
        <value>/home/hadoop-2.10.0/hdfs/name</value>
        <description>Where the NameNode stores HDFS namespace metadata</description>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>/home/hadoop-2.10.0/hdfs/data</value>
        <description>Physical storage location of data blocks on the DataNode</description>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
        <description>Number of replicas; the default is 3, and it should not exceed the number of DataNode machines</description>
      </property>
    </configuration>

Note: in Hadoop 2.x, dfs.name.dir and dfs.data.dir are deprecated names for dfs.namenode.name.dir and dfs.datanode.data.dir; the old names still work.
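The three mkdir calls can be collapsed into one, because `mkdir -p` creates missing parent directories. A small sketch, with `make_hdfs_dirs` as a hypothetical helper name and a scratch directory standing in for /home/hadoop-2.10.0:

```shell
#!/bin/sh
# make_hdfs_dirs BASE (hypothetical helper)
# Creates BASE/hdfs/name and BASE/hdfs/data; -p also creates BASE/hdfs
# and does not fail if the directories already exist.
make_hdfs_dirs() {
    base=$1
    mkdir -p "$base/hdfs/name" "$base/hdfs/data"
}

# Demonstration in a scratch directory.
scratch=$(mktemp -d)
make_hdfs_dirs "$scratch"
ls "$scratch/hdfs"
```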

Create mapred-site.xml from the template and edit it:

    [root@192-168-22-220 hadoop]# cp mapred-site.xml.template mapred-site.xml
    [root@192-168-22-220 hadoop]# vi /home/hadoop-2.10.0/etc/hadoop/mapred-site.xml
    <configuration>
      <!-- Tell the MapReduce framework to run on YARN -->
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>

Edit yarn-site.xml:

    [root@192-168-22-220 hadoop]# vi /home/hadoop-2.10.0/etc/hadoop/yarn-site.xml
    <configuration>
      <!-- Reducers fetch data via mapreduce_shuffle -->
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>

3. Environment variables

Add the two export lines to /etc/profile, then reload it:

    vi /etc/profile
    export HADOOP_HOME=/home/hadoop-2.10.0
    export PATH=$PATH:$HADOOP_HOME/bin
    source /etc/profile
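If this step is scripted and might run more than once, it helps to append each line only when it is not already present. The sketch below assumes a hypothetical helper `append_once` and works on a scratch file instead of /etc/profile. Adding `$HADOOP_HOME/sbin` to the PATH is an optional extra not in the original steps; it lets the start and stop scripts be called from any directory.

```shell
#!/bin/sh
# append_once LINE FILE (hypothetical helper)
# Appends LINE to FILE unless an identical line is already there.
# grep -x matches whole lines, -F treats LINE as a literal string.
append_once() {
    line=$1
    file=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demonstration on a scratch file standing in for /etc/profile.
profile=$(mktemp)
append_once 'export HADOOP_HOME=/home/hadoop-2.10.0' "$profile"
# Assumption: sbin is added here for convenience only.
append_once 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' "$profile"
# A repeated call leaves the file unchanged.
append_once 'export HADOOP_HOME=/home/hadoop-2.10.0' "$profile"
cat "$profile"
```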

4. Passwordless SSH login

    ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa
    cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
    chmod 0600 ~/.ssh/authorized_keys
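Before starting the daemons, it can be worth confirming that key-based login actually works, because the start scripts connect to each node over SSH. The sketch below uses two hypothetical helpers and assumes GNU `stat`. Note also that OpenSSH 7.0 and later disable DSA keys by default, so on such systems an RSA key (`ssh-keygen -t rsa`) may be needed instead.

```shell
#!/bin/sh
# can_ssh_without_password HOST (hypothetical helper)
# BatchMode=yes makes ssh fail instead of prompting for a password,
# so a zero exit status means key-based login works.
can_ssh_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# key_file_mode_ok FILE (hypothetical helper)
# True when FILE has mode 600, matching the chmod step above.
# Uses GNU stat syntax (-c %a prints the octal permission bits).
key_file_mode_ok() {
    [ "$(stat -c %a "$1")" = "600" ]
}

# Demonstration of the permission check on a scratch file.
keys=$(mktemp)
chmod 0600 "$keys"
if key_file_mode_ok "$keys"; then
    echo "permissions ok"
fi
```

On the real machine, the login check would be run as `can_ssh_without_password localhost && echo "passwordless login works"`.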

5. Format HDFS before the first start

[root@192-168-22-220 hadoop-2.10.0]# pwd

/home/hadoop-2.10.0

[root@192-168-22-220 hadoop-2.10.0]# ./bin/hdfs namenode -format # or: hadoop namenode -format
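Formatting is meant to happen only once, since re-running it on an existing name directory discards the stored namespace metadata. A minimal guard, assuming the name directory configured in hdfs-site.xml above, can look for the `current/VERSION` file that a successful format creates. `namenode_formatted` is a hypothetical helper name.

```shell
#!/bin/sh
# namenode_formatted NAME_DIR (hypothetical helper)
# True if NAME_DIR already holds a formatted namespace, detected by
# the current/VERSION file written during formatting.
namenode_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Path taken from the hdfs-site.xml used in this guide.
name_dir=/home/hadoop-2.10.0/hdfs/name
if namenode_formatted "$name_dir"; then
    echo "already formatted: skipping"
else
    echo "not formatted: run ./bin/hdfs namenode -format"
fi
```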

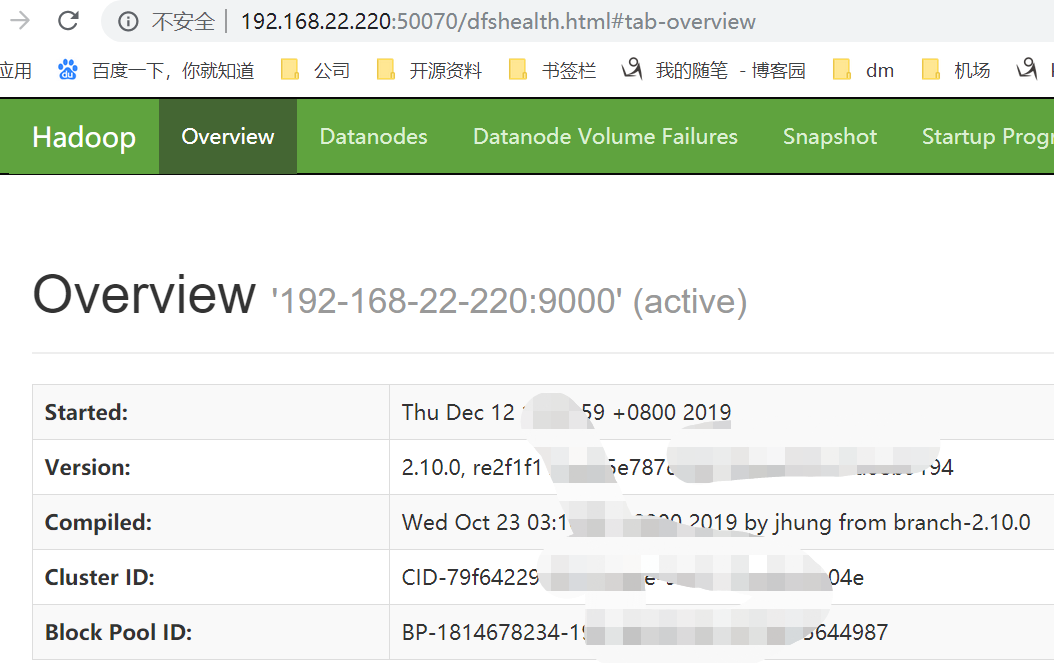

6. Start HDFS

https://blog.csdn.net/lglglgl/article/details/80553828

https://www.cnblogs.com/nihilwater/p/13849396.html

Startup account

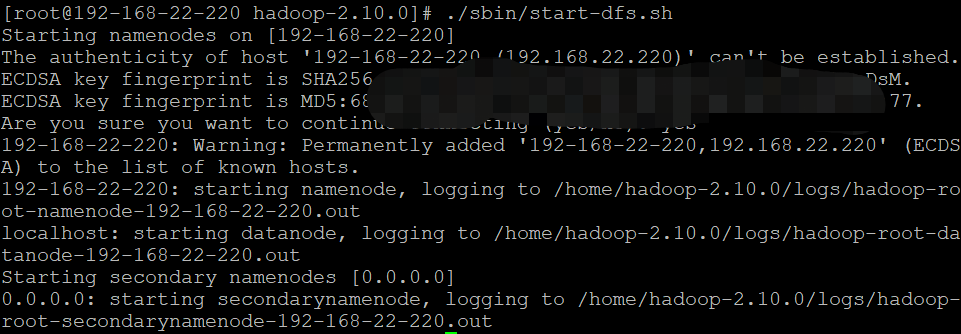

    ./sbin/start-dfs.sh # start
    ./sbin/stop-dfs.sh # stop

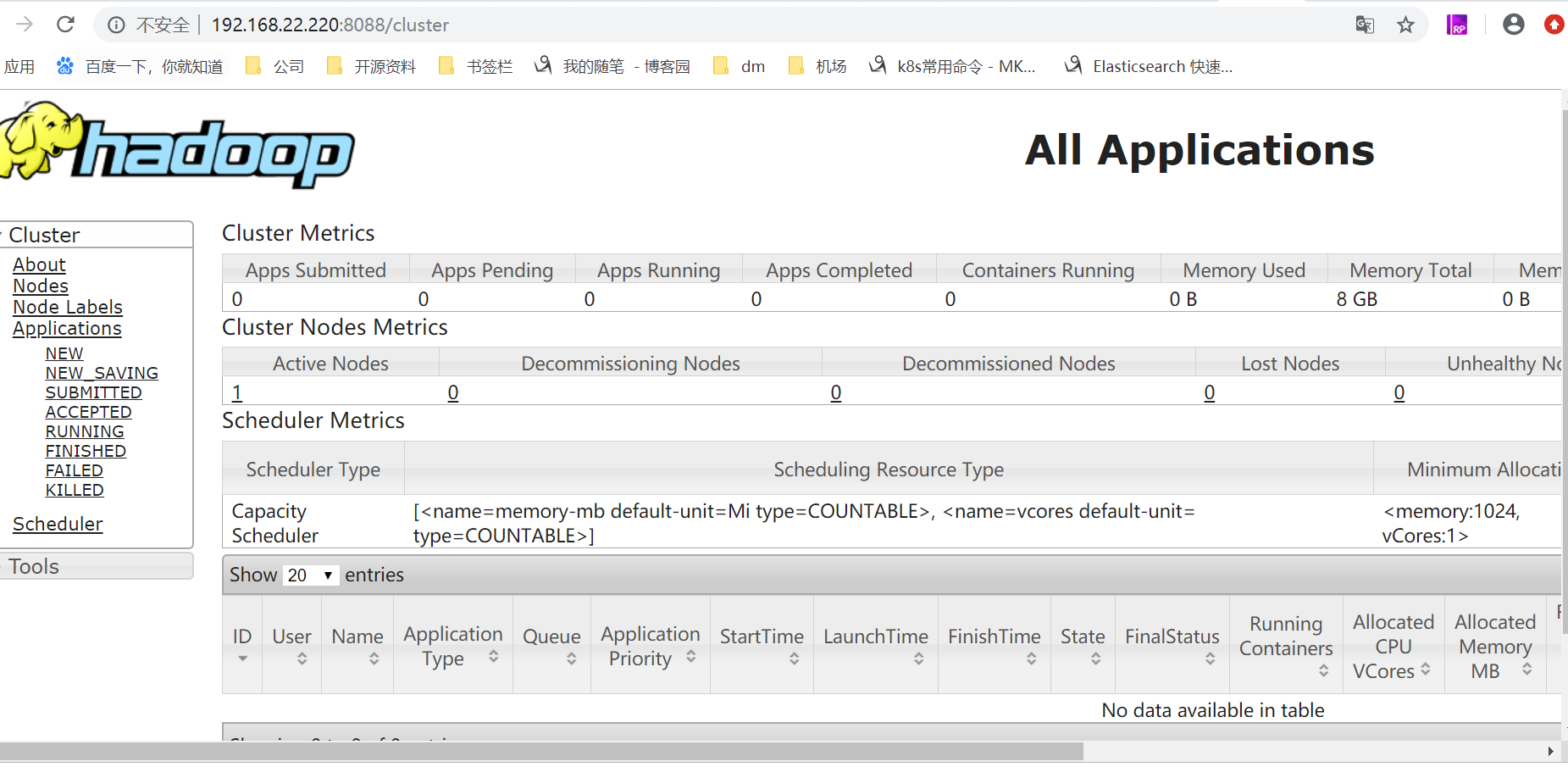

7. Start YARN

[root@192-168-22-220 hadoop-2.10.0]# pwd

/home/hadoop-2.10.0

[root@192-168-22-220 hadoop-2.10.0]# ./sbin/start-yarn.sh # to stop: ./sbin/stop-yarn.sh

starting yarn daemons

resourcemanager running as process 20625. Stop it first.

localhost: starting nodemanager, logging to /home/hadoop-2.10.0/logs/yarn-root-nodemanager-192-168-22-220.out

[root@192-168-22-220 hadoop-2.10.0]# jps

26912 NameNode

20625 ResourceManager

28137 Jps

27020 DataNode

27230 SecondaryNameNode

27999 NodeManager
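The jps listing above can also be checked mechanically. The sketch below reads jps output on standard input and prints every expected daemon that is missing; the daemon list is taken from the listing above, and `missing_daemons` is a hypothetical helper name.

```shell
#!/bin/sh
# missing_daemons (hypothetical helper)
# Reads jps output on stdin and prints each expected daemon that is absent.
missing_daemons() {
    jps_output=$(cat)
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare against the whole second field so that "NameNode"
        # is not satisfied by a "SecondaryNameNode" line.
        printf '%s\n' "$jps_output" \
            | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' \
            || echo "$daemon"
    done
}

# Sample input based on the listing above, with the NodeManager line
# removed on purpose to show a failure. Prints: NodeManager
missing_daemons <<'EOF'
26912 NameNode
20625 ResourceManager
28137 Jps
27020 DataNode
27230 SecondaryNameNode
EOF
```

On a live node the call would be `jps | missing_daemons`; empty output means all five daemons are running.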

8. Start and stop everything at once

    [root@192-168-22-220 hadoop-2.10.0]# ./sbin/stop-all.sh
    This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh
    Stopping namenodes on [192-168-22-220]
    192-168-22-220: stopping namenode
    localhost: stopping datanode
    Stopping secondary namenodes [0.0.0.0]
    0.0.0.0: stopping secondarynamenode
    stopping yarn daemons
    stopping resourcemanager
    localhost: stopping nodemanager
    localhost: nodemanager did not stop gracefully after 5 seconds: killing with kill -9
    no proxyserver to stop
    [root@192-168-22-220 hadoop-2.10.0]# ./sbin/start-all.sh
    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Starting namenodes on [192-168-22-220]
    192-168-22-220: starting namenode, logging to /home/hadoop-2.10.0/logs/hadoop-root-namenode-192-168-22-220.out
    localhost: starting datanode, logging to /home/hadoop-2.10.0/logs/hadoop-root-datanode-192-168-22-220.out
    Starting secondary namenodes [0.0.0.0]
    0.0.0.0: starting secondarynamenode, logging to /home/hadoop-2.10.0/logs/hadoop-root-secondarynamenode-192-168-22-220.out
    starting yarn daemons
    starting resourcemanager, logging to /home/hadoop-2.10.0/logs/yarn-root-resourcemanager-192-168-22-220.out
    localhost: starting nodemanager, logging to /home/hadoop-2.10.0/logs/yarn-root-nodemanager-192-168-22-220.out

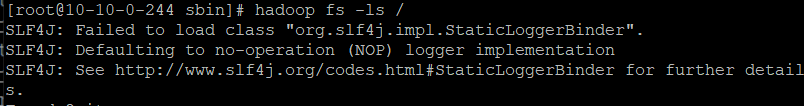

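9. Troubleshooting

Fix: the error is caused by a missing slf4j-nop.jar. Download it and unpack it into Hadoop's common directory:

    wget http://www.java2s.com/Code/JarDownload/slf4j-nop/slf4j-nop-1.7.2.jar.zip
    unzip slf4j-nop-1.7.2.jar.zip -d $HADOOP_HOME/share/hadoop/common/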