Cloud Migration

Key Points

- Lift and Shift is the simple process of moving your application from an on-premises environment to the cloud without making any significant changes to the code. AWS Server Migration Service can assist with this process.

- Lift and Shift migrations don’t have many options for performance improvements other than to allocate additional resources where bottlenecks are observed. This exposes the company to the potential for excessive compute costs.

- Not all applications are good candidates for cloud-native redesign, but a redesign can take advantage of cloud services like optimized instance types and Amazon RDS to improve performance.

- The best candidates for cloud native redesign are lightweight applications whose functions can be driven by events like API calls, file uploads, database updates, and messages being added to a queue.
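To make "driven by events" concrete, here is a minimal Python sketch of how a Lambda function might tell those four trigger types apart. It assumes the standard event shapes AWS delivers: API Gateway proxy events carry `httpMethod` (REST APIs) or `routeKey` (HTTP API payload version 2.0), while S3, SQS, and DynamoDB Streams events arrive as a `Records` list tagged with an `eventSource`. The helper name `trigger_type` is hypothetical, and real applications usually wire each trigger to its own function.

```python
def trigger_type(event):
    """Classify a Lambda event by the kind of trigger that produced it (hypothetical helper)."""
    # API Gateway proxy events: REST APIs use httpMethod, HTTP API payload v2.0 uses routeKey.
    if "httpMethod" in event or "routeKey" in event:
        return "api_call"
    # S3, SQS, and DynamoDB Streams deliver a batch of records tagged with their source.
    records = event.get("Records") or [{}]
    source = records[0].get("eventSource")
    return {
        "aws:s3": "file_upload",
        "aws:dynamodb": "database_update",
        "aws:sqs": "queue_message",
    }.get(source, "unknown")


def lambda_handler(event, context):
    # Lambda calls this entry point once per event; there is no server loop to write or run.
    kind = trigger_type(event)
    print(f"Triggered by: {kind}")
    return {"trigger": kind}
```

The point of the sketch is that the function only exists while it is handling an event, which is what makes lightweight, event-driven workloads a natural fit.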

What obstacles can you expect when rearchitecting an application for the cloud?

The decision to re-architect an application is not made lightly. All of the stakeholders on the project need an advocate, including the application!

Budget estimates, timelines, and testing protocols will often be pain points when you are rebuilding an application. Money is a major factor: for large and complex enterprise applications, developer time and QA testing can run into the millions of dollars.

You can also expect timelines to slip. I'm sorry, but it's true. No matter how far out you project your development schedule, timeline pressure will be an obstacle. You can always hope for the best, but I have never seen a software project ship on or before schedule, and I've been around quite a while.

The third obstacle I would anticipate is “scope creep,” where your original plans for the redesign and development are waylaid by new and updated plans along the way: plans that nobody budgeted for or built into the timeline. The scope of the project can also change along with the vendor's service offerings. If AWS starts offering a brand-new service that can make the application perform better for less money, why not add it to the scope? Well, one good reason not to change the scope is that you want to ship your software on time.

AWS, as a tech company, will always release new and improved services. It's up to you as the architect to decide whether to stick with your plan and stay the course to completion, or to let the scope of the project get away from you by adding new services and features that weren't part of the original plan, and seemingly never get your application out the door. I would vote for locking down the scope and turning a blind eye to the tempting upgrades, but when it comes to your projects, it will be up to you!

Additional Reading

Learn more about Serverless Architecture here:

- Serverless Application Lens

- Servers LOL

- Amazon Server Migration Service

- What is Serverless Architecture? What are its Pros and Cons?

AWS Lambda

Key Points

- AWS Lambda (Lambda for short) is the name of Amazon’s serverless computing service.

- There are no servers to provision; you pay only for the compute time you use.

- Lambda scales automatically because it runs your code whenever it is triggered, and concurrent events are handled by separate instances (execution environments) of your function.

- You are charged for the number of times your code is triggered by an event and for the compute time used. Duration was originally billed in 100 ms increments; since December 2020, AWS bills it per 1 ms.

Making the Case for Serverless

- Applications that are idle most of the time are worth evaluating as candidates for a serverless rebuild. Applications with intense, long-running functions probably won't see the cost savings, because costs are calculated from allocated resources and execution time.

- The server layer is abstracted and inaccessible, so you don’t have to worry about server maintenance like patching and monitoring. On the other hand, you give up control of server resources: you have no access to the server OS or network, because there is no server to manage.

- Some big companies with deep technical expertise are running serverless applications in production and reaping the cost and performance benefits.

- AWS Lambda launched in 2014, so serverless computing is still a relatively young technology. As it matures, we will likely see more companies turning to serverless compute for reduced cost and improved performance.

Additional Reading

Learn more about how to use AWS Lambda here:

- AWS Lambda Developer Guide

- AWS Lambda

- Amazon API Gateway

- AWS DynamoDB

- AWS SQS

- AWS Cognito

- AWS Step Functions

Serverless Costs

Key Points

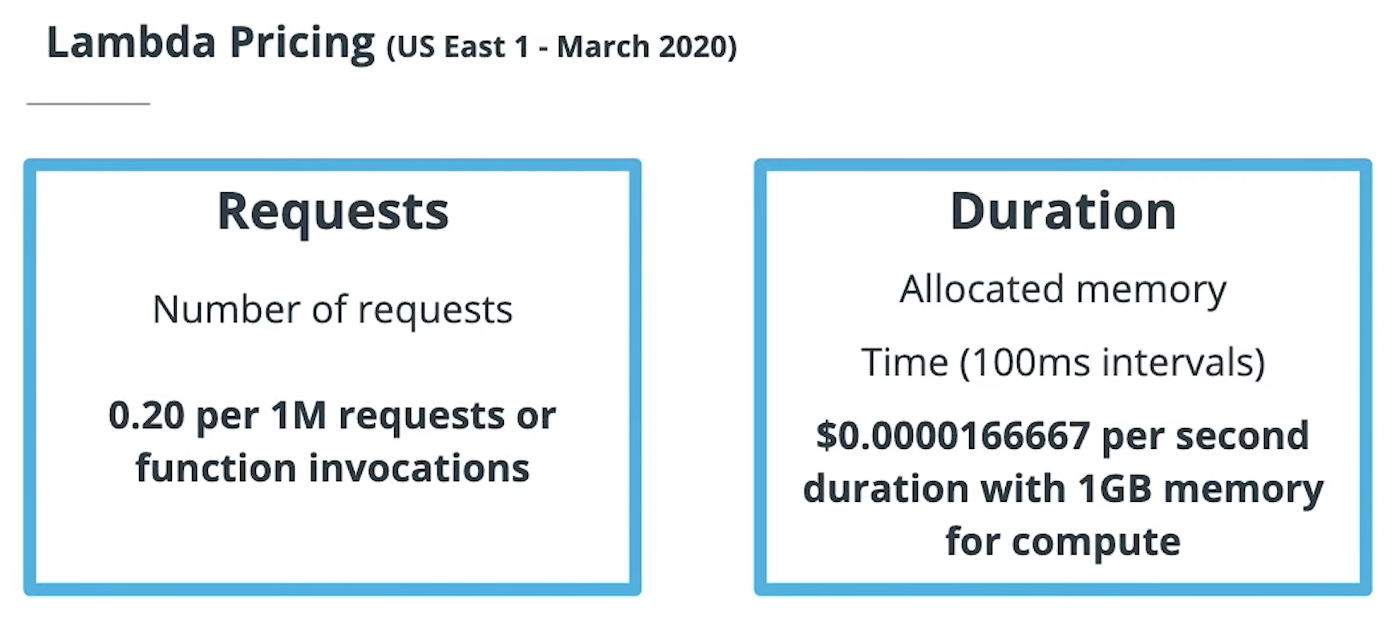

- Lambda usage is billed based on:

  - the number of requests (function invocations)

  - the duration of compute time and the allocated memory

- Duration was originally billed per 100 ms; since December 2020, it is billed per 1 ms
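Putting those billing factors together, a month's Lambda bill can be sketched as the formula below, where N is the number of invocations, t the duration of each invocation in seconds, g the billing granularity in seconds (0.1 s under the 100 ms model, 0.001 s under 1 ms billing), M the allocated memory in MB, p_r the price per request, and p_c the price per GB-second. This is a simplified model that ignores the Free Tier and charges from other services such as data transfer.

```latex
C_{\text{month}} = N \, p_r + N \left\lceil \frac{t}{g} \right\rceil g \cdot \frac{M}{1024} \cdot p_c
```

The rounding term is why very short functions were penalized under 100 ms billing: a 50 ms invocation was billed as if it ran for 100 ms.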

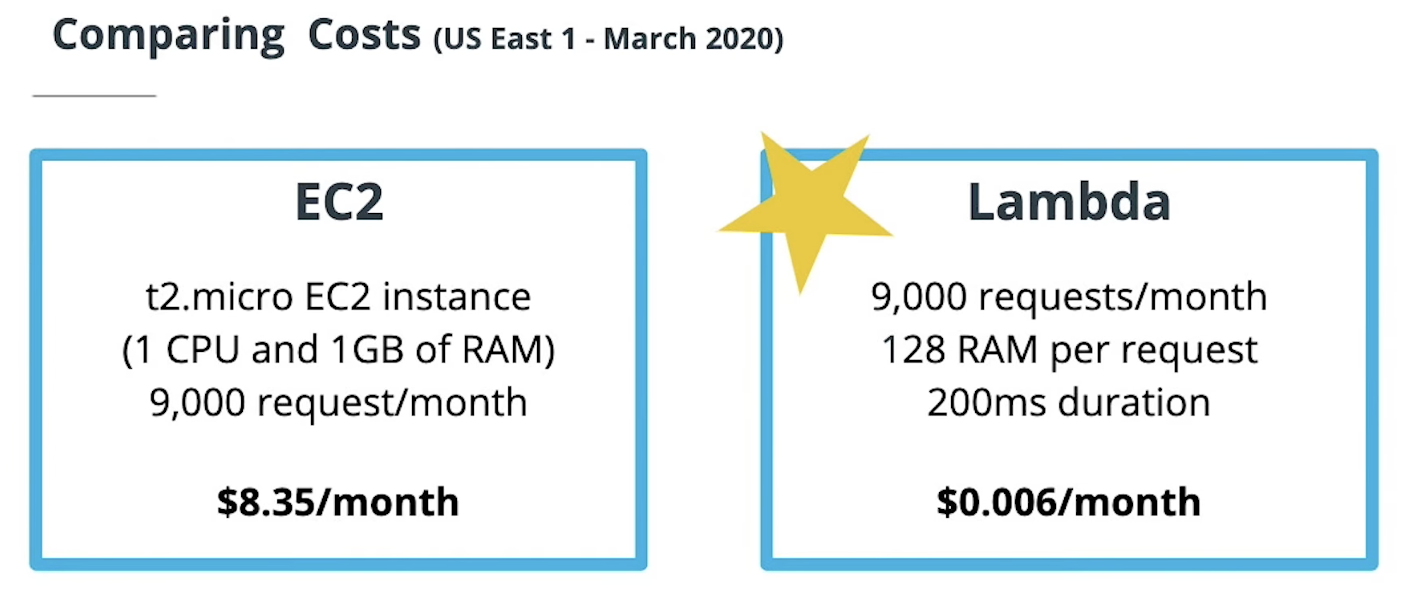

- Lambda works better for small-scale apps

- EC2 works better for long-running functions

- Lambda functions are stateless and run in short-lived containers

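- There is a "cold-start" delay when a function is invoked after a period of inactivity. It can be reduced by pinging the function with a keep-warm service, or by configuring provisioned concurrency, which keeps a set number of execution environments initialized.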

- Serverless can also bring cost savings because fewer people are needed for operations support
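Cold starts come from how Lambda reuses execution environments: module-level code runs once when a new environment starts, and later invocations in the same environment skip it. The Python sketch below shows that behavior plus a common keep-warm pattern, assuming a scheduled Amazon EventBridge rule pings the function (scheduled events arrive with `source` set to `aws.events` and `detail-type` set to `Scheduled Event`). The `_invocations` counter and `is_warmup_ping` helper are illustrative, not part of any AWS API. Keep in mind that one ping keeps only one environment warm, so a burst of concurrent requests can still hit cold starts.

```python
import time

# Module-level code runs once per execution environment, i.e. only on a cold start.
# Expensive setup (SDK clients, config, connection pools) belongs here so warm calls reuse it.
_started_at = time.time()
_invocations = 0  # illustrative counter; it resets whenever Lambda starts a new environment


def is_warmup_ping(event):
    """Detect a scheduled EventBridge event used only to keep the function warm."""
    return event.get("source") == "aws.events" and event.get("detail-type") == "Scheduled Event"


def lambda_handler(event, context):
    global _invocations
    _invocations += 1
    cold_start = _invocations == 1
    if is_warmup_ping(event):
        # Return immediately: the ping exists only to keep this environment alive.
        return {"warmup": True, "cold_start": cold_start}
    return {
        "warmup": False,
        "cold_start": cold_start,
        "environment_age_s": round(time.time() - _started_at, 3),
    }
```

Returning early on the ping keeps the billed duration of each warm-up call as small as possible.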

When to Use Lambda

- AWS Lambda is optimal for applications with irregular usage patterns and lulls between spikes in activity.

- AWS Lambda is not a good choice for applications with regular, consistent, or steady workloads and long-running functions. It might end up costing more than EC2 instances.

- Factor in the substantial cost of re-architecting an application when deciding whether AWS Lambda is a good choice. It might still be!

https://dashbird.io/lambda-cost-calculator/

Additional Reading

Please continue learning about how serverless compute is priced and how it fits into the cloud cost conversation by following the links below:

- AWS Lambda Pricing

- AWS Lambda Pricing Model Explained with Examples

- Serverless Pricing and Costs

- Optimizing the cost of Serverless Web Applications

Lambda costs

- Use the Lambda Pricing Calculator and do NOT include the Free Tier.

- Assume:

  - 20 million function calls per month

  - 512 MB of memory

  - An execution time of 50 ms
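If you want to sanity-check the calculator's answer, the Python sketch below does the same arithmetic for the assumptions above. The prices are assumptions based on the published us-east-1 x86 rates at the time of writing ($0.20 per million requests and $0.0000166667 per GB-second); check the AWS Lambda pricing page before relying on them. It compares 1 ms billing granularity with the older 100 ms granularity, because a 50 ms function is exactly the case where rounding matters.

```python
import math

# Assumed prices (us-east-1, x86, at the time of writing); verify on the AWS Lambda pricing page.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def monthly_lambda_cost(requests, memory_mb, duration_ms, granularity_ms=1):
    """Estimate a month's Lambda bill in USD, ignoring the Free Tier."""
    # Each invocation's duration is rounded up to the billing granularity.
    billed_ms = math.ceil(duration_ms / granularity_ms) * granularity_ms
    gb_seconds = requests * (billed_ms / 1000) * (memory_mb / 1024)
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return request_cost, compute_cost, request_cost + compute_cost


if __name__ == "__main__":
    # Assumptions from the exercise: 20 million calls, 512 MB, 50 ms per call.
    for granularity in (1, 100):
        req, comp, total = monthly_lambda_cost(20_000_000, 512, 50, granularity)
        print(f"{granularity:>3} ms billing: ${req:.2f} requests + ${comp:.2f} compute = ${total:.2f}")
```

With these assumed prices, the requests cost $4.00 either way, while compute comes to about $8.33 at 1 ms granularity and $16.67 at 100 ms granularity, for totals of roughly $12.33 and $20.67.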

EC2 Costs

- Find pricing for on-demand instances at AWS On-Demand Instance Pricing.

- Find pricing for 1-year reserved instances at Reserved Instance Pricing.
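Once you have an instance price, a rough break-even point shows how much monthly traffic it takes before an always-on EC2 instance becomes cheaper than Lambda. In this Python sketch the Lambda prices are assumed us-east-1 list prices ($0.20 per million requests, $0.0000166667 per GB-second), the EC2 hourly rate is a hypothetical placeholder to replace with the on-demand price or the effective hourly rate of a 1-year reserved instance, and a month is taken as 730 hours. It also assumes the instances you price can actually handle the whole load, which you would need to verify.

```python
# Assumed Lambda list prices; verify against the AWS Lambda pricing page.
LAMBDA_PRICE_PER_REQUEST = 0.20 / 1_000_000
LAMBDA_PRICE_PER_GB_SECOND = 0.0000166667
HOURS_PER_MONTH = 730  # 365 days * 24 hours / 12 months


def lambda_cost_per_request(memory_mb, duration_ms):
    """Cost of one invocation, assuming 1 ms billing granularity."""
    gb_seconds = (duration_ms / 1000) * (memory_mb / 1024)
    return LAMBDA_PRICE_PER_REQUEST + gb_seconds * LAMBDA_PRICE_PER_GB_SECOND


def break_even_requests(ec2_hourly_price, memory_mb, duration_ms, instances=1):
    """Monthly request volume at which Lambda costs the same as always-on EC2 instances."""
    ec2_monthly = ec2_hourly_price * HOURS_PER_MONTH * instances
    return ec2_monthly / lambda_cost_per_request(memory_mb, duration_ms)


if __name__ == "__main__":
    HYPOTHETICAL_EC2_HOURLY = 0.0416  # placeholder; substitute a real on-demand or reserved rate
    volume = break_even_requests(HYPOTHETICAL_EC2_HOURLY, memory_mb=512, duration_ms=50)
    print(f"Break-even at about {volume / 1e6:.1f} million requests per month")
```

With the placeholder rate, the break-even lands at roughly 49 million requests per month, so a 20-million-request workload would still favor Lambda under these assumptions; a steady workload well above the break-even is where EC2 starts to win.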

Additional Reading

Learn more about Lambda costs and how they compare to EC2 instance costs by following the links below:

- AWS Lambda Pricing Calculator

- Should your EC2 be a Lambda?

- AWS Lambda vs EC2

- AWS EC2 vs Lambda: Which One Is Right For You?

Lambda Events and Functions

Key Points About Lambda Events and Functions

- Invoking a Lambda function means triggering it, or kicking it off.

- AWS automatically provisions CPU and network resources based on the amount of memory you select to run your functions.

- Lambda functions can be written in a variety of languages: Node.js, Python, Go, Java, C#, and more.

- Serverless code written and configured for one cloud platform is tied to that platform's triggers, event formats, and services, so it generally can't be moved to another provider without rework. Using AWS Lambda to run your applications ties you to AWS.

Key Points about Lambda@Edge

- Lambda@Edge allows you to execute Lambda functions in edge locations that are geographically closer to the user.

- Running code closer to users reduces latency, which improves user satisfaction.

- An example of a function that can be executed in an edge location is rewriting the request URL based on device type, so that a mobile user is served a mobile-optimized page.
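Here is a minimal Python sketch of the device-based rewrite described above. It assumes the function is attached to a CloudFront origin-request trigger and that the distribution's cache or origin request policy includes the `CloudFront-Is-Mobile-Viewer` header, so CloudFront adds it to the request. The `/mobile` path prefix is a hypothetical site layout. CloudFront passes headers as lowercase keys mapping to lists of `{"key", "value"}` objects, and returning the modified request lets it continue to the origin.

```python
def lambda_handler(event, context):
    """CloudFront origin-request handler: route mobile viewers to mobile-optimized pages."""
    request = event["Records"][0]["cf"]["request"]
    headers = request["headers"]
    # Header names are lowercase keys; each value is a list of {"key": ..., "value": ...} dicts.
    mobile_header = headers.get("cloudfront-is-mobile-viewer", [])
    is_mobile = bool(mobile_header) and mobile_header[0]["value"] == "true"
    # "/mobile" is a hypothetical prefix under which the mobile pages are stored at the origin.
    if is_mobile and not request["uri"].startswith("/mobile/"):
        request["uri"] = "/mobile" + request["uri"]
    # Returning the (possibly modified) request tells CloudFront to forward it to the origin.
    return request
```

Because the rewrite happens at the edge, the origin never needs its own device-detection logic.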

Additional Reading

Read more about how to use Lambda functions

API Gateway & DynamoDB

API Gateway

- An API is an interface to your application that exposes parts of your application or data for integration or sharing with another application.

- AWS API Gateway is a managed service that takes the administrative work out of publishing, maintaining, managing, and securing APIs.

- API Gateway integrates with AWS Lambda, Amazon SNS, AWS IAM, and Amazon Cognito identity pools, allowing for fully managed authentication and authorization.

- API Gateway works with Lambda by sitting between the user’s API request and the Lambda function running compute on the back end.
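As an illustration of that request flow, here is a minimal Python handler for an API Gateway Lambda proxy integration. API Gateway passes the HTTP request to Lambda as an event (query parameters arrive in `queryStringParameters`, which is `None` when there is no query string) and expects a dictionary with `statusCode`, `headers`, and a string `body` in return. The `name` parameter and the greeting are hypothetical.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting for a request routed through an API Gateway proxy integration."""
    # queryStringParameters is None when the request has no query string, so default to {}.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # API Gateway expects the body as a string, so serialize the payload.
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

A request with `?name=Ada` would receive `{"message": "Hello, Ada!"}`, while API Gateway handles routing, throttling, and authorization before the function ever runs.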

DynamoDB

- DynamoDB is a fast, high-performing NoSQL database that scales elastically.

- DynamoDB tables have a primary key, but they are not relational, making them an excellent option for varied, unstructured data.

- DynamoDB is tightly integrated with the AWS serverless ecosystem and runs on a fleet of distributed managed servers, which increases database availability and performance and enables automatic scaling.

- Lambda functions can be triggered by updates to a DynamoDB table through DynamoDB Streams, which record a time-ordered log of item-level changes.

- DynamoDB works well for applications that use self-contained data objects, but is usually too pricey for applications with high traffic of large objects.

- DynamoDB read and write capacity can be allocated upfront to keep costs predictable, or DynamoDB autoscaling can modify read/write capacity based on requests, which can result in unpredictable costs.
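To illustrate Lambda being triggered by table updates, here is a minimal Python sketch of a DynamoDB Streams handler that summarizes each change. It assumes the stream uses a view type that includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`). Each record carries an `eventName` of `INSERT`, `MODIFY`, or `REMOVE`, and item attributes arrive in DynamoDB's typed JSON format, such as `{"S": "abc"}` or `{"N": "3"}`. The `to_plain` helper is hypothetical and only converts string, number, and boolean attributes; boto3's `TypeDeserializer` covers the full set of types.

```python
from decimal import Decimal


def to_plain(image):
    """Convert S, N, and BOOL attributes from DynamoDB typed JSON to plain Python values."""
    plain = {}
    for name, typed in image.items():
        (dtype, value), = typed.items()  # each attribute has exactly one type key
        if dtype == "N":
            plain[name] = Decimal(value)  # numbers arrive as strings; Decimal keeps full precision
        elif dtype in ("S", "BOOL"):
            plain[name] = value
        else:
            plain[name] = typed  # other types (lists, maps, sets, binary) are left untouched
    return plain


def lambda_handler(event, context):
    """Summarize item-level changes delivered by a DynamoDB stream."""
    changes = []
    for record in event["Records"]:
        data = record["dynamodb"]
        changes.append({
            "action": record["eventName"],  # "INSERT", "MODIFY", or "REMOVE"
            "keys": to_plain(data["Keys"]),
            # NewImage is absent for REMOVE events and for KEYS_ONLY or OLD_IMAGE streams.
            "new": to_plain(data["NewImage"]) if "NewImage" in data else None,
        })
    return changes
```

Lambda delivers stream records in batches, which is why the handler loops over `Records` rather than assuming a single change.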
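Provisioned-capacity math also explains why large items get expensive. DynamoDB defines one read capacity unit (RCU) as one strongly consistent read per second of an item up to 4 KB (or two eventually consistent reads), and one write capacity unit (WCU) as one write per second of an item up to 1 KB, with larger items consuming additional units. The Python sketch below applies those rules; the function name and example workload are hypothetical, and transactional reads and writes (which consume twice the units) are not modeled.

```python
import math


def required_capacity(reads_per_sec, writes_per_sec, item_size_kb, strongly_consistent=True):
    """Estimate provisioned RCUs and WCUs for a steady workload of same-sized items."""
    # Reads are metered in 4 KB chunks and writes in 1 KB chunks, each rounded up.
    rcu_per_read = math.ceil(item_size_kb / 4)
    wcu_per_write = math.ceil(item_size_kb / 1)
    rcu = reads_per_sec * rcu_per_read
    if not strongly_consistent:
        # One RCU covers two eventually consistent reads per second.
        rcu = math.ceil(rcu / 2)
    wcu = writes_per_sec * wcu_per_write
    return rcu, wcu


if __name__ == "__main__":
    # Hypothetical workload: 100 reads/s and 20 writes/s of 6 KB items.
    print(required_capacity(100, 20, 6))
    print(required_capacity(100, 20, 6, strongly_consistent=False))
```

A 6 KB item costs two RCUs per strongly consistent read and six WCUs per write, so this workload needs 200 RCUs and 120 WCUs (100 RCUs with eventually consistent reads). Doubling the item size roughly doubles the write capacity you pay for.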

Additional Reading

Please follow the links below to read more about AWS API Gateway:

- Amazon API Gateway

- Using AWS Lambda with API Gateway

- Amazon API Gateway- The Ultimate Guide

- How to Build Your First Serverless API with AWS Lambda and API Gateway

And AWS DynamoDB

AWS ECS and EKS

Key Points

Many companies are looking at how they can leverage container technology to accelerate software development, improve operational stability, and save time and money.

A container includes an application, its configuration, runtime, libraries, tools, and dependencies.

Containers are portable, and because of how their resources are packaged, their performance is consistent across platforms.

Amazon offers three categories of container management tools:

- Amazon Elastic Container Registry (ECR)

- Amazon Elastic Container Service (ECS)

- Amazon Elastic Kubernetes Service (EKS)

Additional Reading

To learn more about running containers on AWS, including how to manage and scale them securely, please follow the links below.

Serverless Recap

Key Points

- Serverless allows you to build and run applications without the burden of thinking about servers.

- Serverless in AWS means built-in fault tolerance, maintenance, monitoring, and security.

- Serverless allows the enterprise to reclaim the time and money that would be spent on operational tasks, and reduces the number of infrastructure engineers necessary to maintain the production environment.

Additional Reading

If you would like to learn more about serverless compute on AWS, and how its serverless services integrate to give customers room to innovate, please follow the links below: