Overview

- How to architect our application for testability

- How to run our application locally

- Have multiple envs for our application

- Implement observability

  - Monitoring, logging, distributed tracing

- Improve performance of our application

  - Cold start issue

- Security of our application

Vendor lock-in

- Vendor lock-in is the inability to switch from a selected technology provider to another

- Discussed a lot in the context of serverless

What can cause a vendor lock-in?

- Code is not the root cause of vendor lock-in

- Other third-party services are the cause:

  - Database

  - File storage

  - Messaging systems

How can we defend from a vendor lock-in?

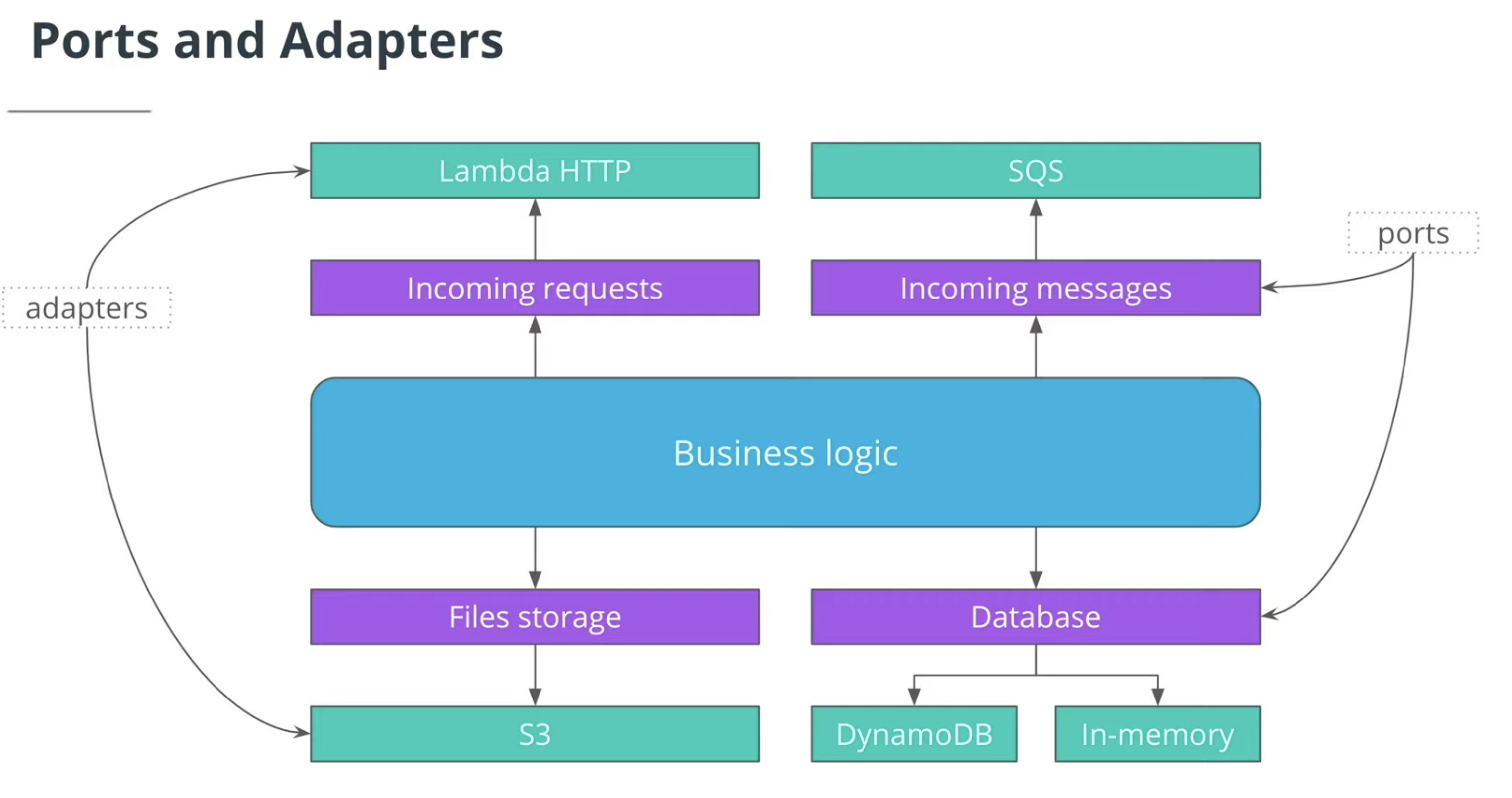

- Use the "Ports and Adapters Architecture", also known as "hexagonal architecture"

Ports and Adapters Architecture

The main idea is to separate the application into two components:

- Business logic, which is independent of external services

- Ports and adapters, which interact with outside services such as a database or a message queue

Ports: You can think of a port as an interface that defines the common actions the business logic needs.

Adapters: You can think of an adapter as the actual implementation behind that interface. For example, a database port might define 'read' and 'write' operations, and different adapters implement them for different databases, such as MySQL, DynamoDB, ...
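To make the port/adapter split concrete, here is a minimal TypeScript sketch. All names (`Group`, `GroupRepository`, `GroupService`, `InMemoryGroupRepository`) are hypothetical and not taken from the course project; the adapter keeps data in memory so the example runs without any AWS dependency.

```typescript
// Hypothetical domain type used by the business logic.
interface Group {
  id: string;
  name: string;
}

// Port: the operations the business logic needs, with no vendor details.
interface GroupRepository {
  read(id: string): Promise<Group | undefined>;
  write(group: Group): Promise<void>;
}

// Business logic: depends only on the port, never on a concrete database.
class GroupService {
  private readonly repo: GroupRepository;

  constructor(repo: GroupRepository) {
    this.repo = repo;
  }

  async createGroup(id: string, name: string): Promise<Group> {
    const trimmed = name.trim();
    if (trimmed === "") {
      throw new Error("Group name must not be empty");
    }
    const group: Group = { id, name: trimmed };
    await this.repo.write(group);
    return group;
  }

  getGroup(id: string): Promise<Group | undefined> {
    return this.repo.read(id);
  }
}

// Adapter: one concrete implementation of the port. This one keeps data in
// memory (handy for tests); a DynamoDB or MySQL adapter would implement the
// same GroupRepository interface using that vendor's client.
class InMemoryGroupRepository implements GroupRepository {
  private readonly items = new Map<string, Group>();

  async read(id: string): Promise<Group | undefined> {
    return this.items.get(id);
  }

  async write(group: Group): Promise<void> {
    this.items.set(group.id, group);
  }
}
```

Switching databases would mean writing another class that implements `GroupRepository` and passing it to `new GroupService(...)`; `GroupService` itself stays unchanged.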

Benefits of the Ports and Adapters Architecture

- Business logic can be easily ported

- The application can be moved to another provider

- All we need to do when switching a third-party vendor is re-implement the adapters; the ports stay the same

See example: Refactoring the code with the Ports and Adapters pattern

Multi-Stage Deployment

For testing, we can deploy the application to multiple stages (environments).

For example:

- CI: for integration testing

- Dev: for developers

- Staging: for testers

- Prod: for customers

Here is how we can deploy a serverless application to different stages:

```bash
# Deploy to production ("prod" stage)
sls deploy -v --stage prod

# Can have additional stages
sls deploy -v --stage staging
```
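Because each stage is deployed as a separate copy of the application, resources are usually suffixed with the stage name (as in `ImagesTopic-${self:provider.stage}` later in these notes). A small TypeScript sketch of that idea, assuming the stage is exposed to the code through a hypothetical `STAGE` environment variable (for example `provider.environment.STAGE: ${self:provider.stage}`); `resolveStage` and `stageResourceName` are illustrative helpers, not part of the Serverless Framework.

```typescript
// The stages listed above.
type Stage = "ci" | "dev" | "staging" | "prod";

const STAGES: readonly Stage[] = ["ci", "dev", "staging", "prod"];

// Resolve the current stage from an environment map (pass process.env in a
// real function). Defaults to "dev", which is also the Serverless
// Framework's default stage when --stage is not given.
function resolveStage(env: Record<string, string | undefined>): Stage {
  const value = env["STAGE"] ?? "dev";
  if (!(STAGES as readonly string[]).includes(value)) {
    throw new Error(`Unknown stage: ${value}`);
  }
  return value as Stage;
}

// Suffix a resource name with the stage so every stage gets its own
// tables, topics, etc. and stages never share data.
function stageResourceName(base: string, stage: Stage): string {
  return `${base}-${stage}`;
}
```

For example, `stageResourceName("ImagesTopic", resolveStage(process.env))` yields `ImagesTopic-staging` in a function deployed with `--stage staging`.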

Run a function locally: `sls invoke local --function <functionName>`

Invoke a function in AWS: `sls invoke --function <functionName>`

serverless-offline plugin

- Emulates API Gateway and Lambda

- Starts local web server that calls Lambda functions locally

- Supports authorizers, Lambda integrations, CORS, Velocity templates, etc.

- Can attach a debugger to a local process

- Works with plugins that emulate other services, such as DynamoDB, Kinesis, SNS, etc.

### Run locally

1. Install the plugins.

2. Define them in `serverless.yml`:

```yaml
plugins:
  - serverless-webpack
  - serverless-reqvalidator-plugin
  - serverless-aws-documentation
  - serverless-dynamodb-local
  - serverless-offline

custom:
  topicName: ImagesTopic-${self:provider.stage}

  serverless-offline:
    httpPort: 3003

  dynamodb:
    stages:
      - dev
    start:
      port: 8000
      inMemory: true
      migrate: true
```

3. Install and start the local DynamoDB, then start serverless-offline:

```bash
sls dynamodb install
sls dynamodb start
sls offline
```
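When running under serverless-offline, the function code still has to be pointed at the local DynamoDB on port 8000 instead of the real service. A common approach, sketched below, is to check the `IS_OFFLINE` environment variable, which serverless-offline sets for functions it runs locally (worth verifying for your plugin version). `dynamoClientOptions` is a hypothetical helper; the returned `region`/`endpoint` pair is what you would pass to `new AWS.DynamoDB.DocumentClient(...)`.

```typescript
// Subset of the options accepted by the AWS SDK v2 DocumentClient constructor.
interface DynamoClientOptions {
  region?: string;
  endpoint?: string;
}

// Returns options for a local DynamoDB when running offline (any non-empty
// IS_OFFLINE value), otherwise an empty object so the SDK uses its defaults.
// localPort should match custom.dynamodb.start.port in serverless.yml.
function dynamoClientOptions(
  env: Record<string, string | undefined>,
  localPort = 8000
): DynamoClientOptions {
  if (env["IS_OFFLINE"]) {
    return { region: "localhost", endpoint: `http://localhost:${localPort}` };
  }
  return {};
}
```

In a handler module this would look like `const docClient = new AWS.DynamoDB.DocumentClient(dynamoClientOptions(process.env));`, so the same code works both locally and in AWS.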

Seeding a database

We can also automatically seed a local DynamoDB database. To do this, we need to set `dynamodb.start.seed` to `true` and provide a seed configuration, like this:

```yaml
custom:
  dynamodb:
    start:
      seed: true
    seed:
      # Categories of data. We can optionally load just some of them
      users:
        sources:
          - table: users
            sources: [./users.json]
          - table: user-roles
            sources: [./userRoles.json]
      posts:
        sources:
          - table: blog-posts
            sources: [./blogPosts.json]
```

You can then seed a database with the following command:

```bash
sls dynamodb seed --seed=users,posts
```

Or you can start a local DynamoDB database and seed it:

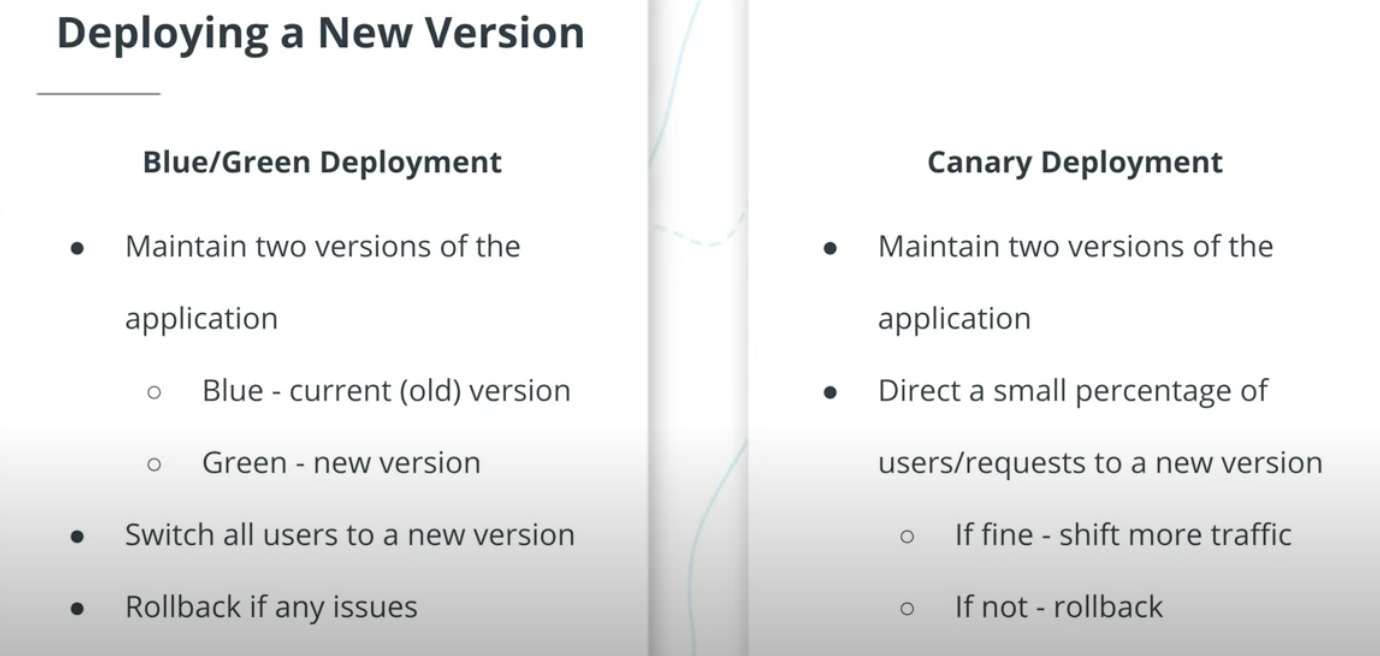

```bash
sls dynamodb start --seed=users,posts
```

Canary Deployment

- Allows releasing a new version gradually

- Exposes only a small percentage of users to potential issues

- Allows a quick rollback if any problems are encountered

Lambda Weighted Alias

https://docs.aws.amazon.com/lambda/latest/dg/configuration-aliases.html
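A weighted alias points at a primary version and sends a fraction of invocations to an additional version (in the Lambda API this is the alias's `RoutingConfig.AdditionalVersionWeights`, with weights between 0 and 1). Lambda performs this routing itself; the TypeScript sketch below only models the decision to show what a weight means. `WeightedAlias` and `routeInvocation` are hypothetical names, and the random source is injected so the behaviour is testable.

```typescript
// Conceptual model of a Lambda weighted alias.
interface WeightedAlias {
  primaryVersion: string;
  additionalVersion: string;
  additionalWeight: number; // e.g. 0.1 = 10% of invocations go to the new version
}

// Pick the version that serves one invocation. `random` returns a value in
// [0, 1); it defaults to Math.random but can be replaced for testing.
function routeInvocation(alias: WeightedAlias, random: () => number = Math.random): string {
  if (alias.additionalWeight < 0 || alias.additionalWeight > 1) {
    throw new Error("additionalWeight must be between 0 and 1");
  }
  return random() < alias.additionalWeight ? alias.additionalVersion : alias.primaryVersion;
}
```

A canary deployment is then just a sequence of weight updates on the alias, with a rollback resetting the weight to 0.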

### Canary Deployment

```yaml
plugins:
  # ...
  - serverless-plugin-canary-deployments

provider:
  iamRoleStatements:
    # ...
    - Effect: Allow
      Action:
        - codedeploy:*
      Resource:
        - "*"

functions:
  CreateGroup:
    handler: src/lambda/http/createGroup.handler
    events:
      - http:
          method: post
          path: groups
          authorizer: RS256Auth
          cors: true
          reqValidatorName: RequestBodyValidator
          request:
            schema:
              application/json: ${file(models/create-group-request.json)}
    deploymentSettings: # add deploymentSettings for canary deployment
      type: Linear10PercentEvery1Minute
      alias: Live # new alias called Live
```
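The `type` setting selects a CodeDeploy traffic-shifting configuration. As a rough illustration, the sketch below lists the percentage of traffic on the new version after each increment: linear types (such as `Linear10PercentEvery1Minute`) move traffic in equal steps, while canary types (such as `Canary10Percent5Minutes`) move a small share first and the rest after a wait. The helper names are hypothetical, and the timing between steps is handled by CodeDeploy, not modelled here.

```typescript
// Traffic (in %) on the new version after each increment of a linear
// deployment that shifts `stepPercent` at a time.
function linearSchedule(stepPercent: number): number[] {
  if (!(stepPercent > 0 && stepPercent <= 100)) {
    throw new Error("stepPercent must be in (0, 100]");
  }
  const schedule: number[] = [];
  for (let percent = stepPercent; percent < 100; percent += stepPercent) {
    schedule.push(percent);
  }
  schedule.push(100); // the final increment always moves all traffic
  return schedule;
}

// A canary deployment has only two increments: a small initial share,
// then all remaining traffic once the wait period has passed.
function canarySchedule(initialPercent: number): number[] {
  return [initialPercent, 100];
}
```

With 10% steps, `linearSchedule(10)` gives ten increments from 10 to 100, so users are exposed to the new version gradually and a failed check can stop the rollout early.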

X-Ray

```yaml
provider:
  tracing:
    apiGateway: true
    lambda: true
```

```typescript
import * as AWS from "aws-sdk";
import {
  APIGatewayProxyEvent,
  APIGatewayProxyHandler,
  APIGatewayProxyResult,
} from "aws-lambda";
import * as AWSXRay from "aws-xray-sdk";

// Wrap the AWS SDK so that calls made through it are traced by X-Ray
const XAWS = AWSXRay.captureAWS(AWS);

const docClient = new XAWS.DynamoDB.DocumentClient();

const imagesTable = process.env.IMAGES_TABLE;
const imageIdIndex = process.env.IMAGE_ID_INDEX;

export const handler: APIGatewayProxyHandler = async (
  event: APIGatewayProxyEvent
): Promise<APIGatewayProxyResult> => {
  const imageId = event.pathParameters.imageId;

  // Look up the image by its ID through the IMAGE_ID_INDEX index
  const result = await docClient
    .query({
      TableName: imagesTable,
      IndexName: imageIdIndex,
      KeyConditionExpression: "imageId = :imageId",
      ExpressionAttributeValues: {
        ":imageId": imageId,
      },
    })
    .promise();

  if (result.Count === 0) {
    return {
      statusCode: 404,
      headers: {
        "Access-Control-Allow-Origin": "*",
      },
      body: "",
    };
  }

  return {
    statusCode: 200,
    headers: {
      "Access-Control-Allow-Origin": "*",
    },
    body: JSON.stringify({
      item: result.Items[0],
    }),
  };
};
```

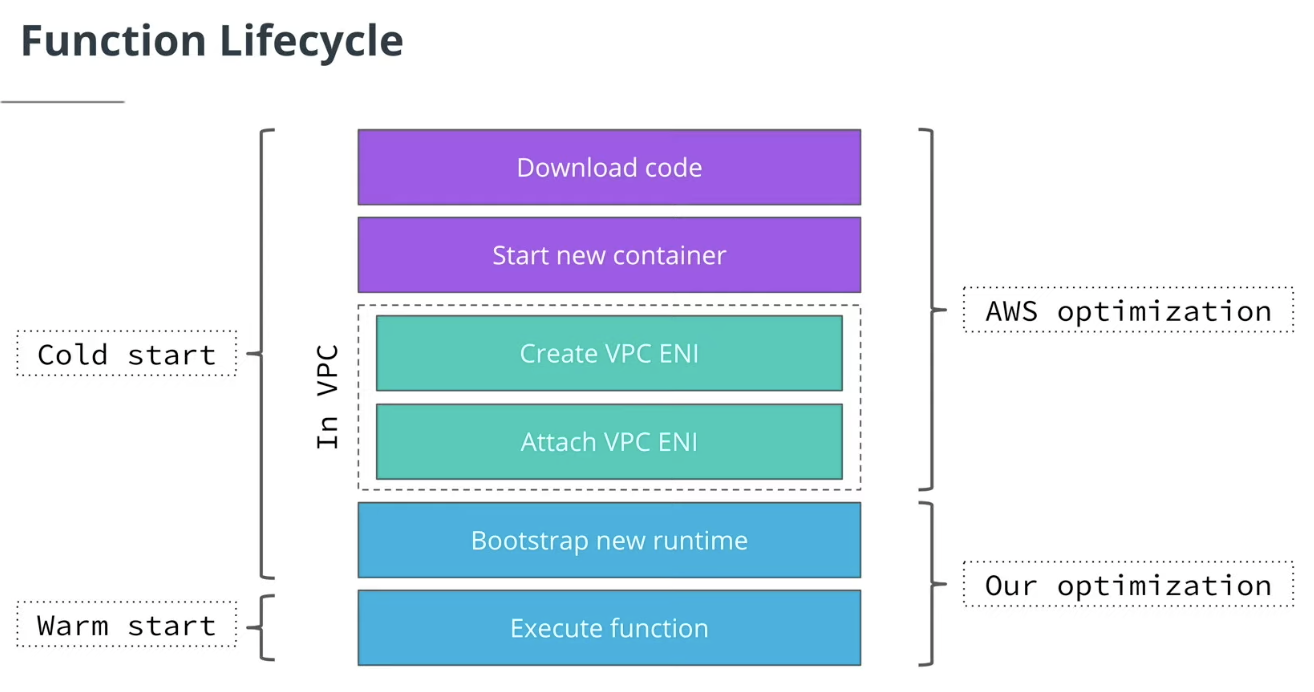

Lambda Cold start

- Common problem for all FaaS solutions

- Functions are provisioned only when they are used

- Instances are removed when not used

- New instances take time to provision

When does cold start happen?

- Only experienced when a new function instance is started:

  - When no instances are running

  - When an additional instance is required

- Mostly a problem for:

  - Processing customer requests

  - Infrequent requests

    - A new function instance for every request

  - A spike of requests

    - Not enough function instances running

- Not a problem if you have a steady stream of requests
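One way to see cold starts from inside a function: code at module scope runs once per function instance (during the cold start), while the handler runs on every invocation. The TypeScript sketch below uses that to flag whether an invocation paid for a cold start; `handler` and `InvocationInfo` are hypothetical names for illustration.

```typescript
// Module-scope code: runs once per function instance, during the cold start.
let isColdStart = true;
const instanceStartedAt = Date.now();

interface InvocationInfo {
  coldStart: boolean;
  instanceAgeMs: number;
}

// Handler: runs on every invocation; later (warm) invocations on the same
// instance reuse the module-scope state above.
async function handler(): Promise<InvocationInfo> {
  const info: InvocationInfo = {
    coldStart: isColdStart,
    instanceAgeMs: Date.now() - instanceStartedAt,
  };
  isColdStart = false; // every later call on this instance is warm
  return info;
}
```

Logging `coldStart` from a handler like this is a cheap way to measure how often your traffic pattern (infrequent requests, spikes) actually triggers new instances.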

Optimize - Code Size

- Smaller functions have a faster cold start

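- The Serverless Framework won't ship devDependencies

- We don't need to ship aws-sdk; the Lambda Node.js runtime has it built in (runtimes up to Node.js 16 include the v2 `aws-sdk`, while Node.js 18 and later include SDK v3 instead)

- Use Serverless plugins to optimize functions

- Split one function into multiple functions

  - One function per task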

Avoid Cold Starts Altogether

- Call your function periodically

  - Send a special payload (a warm-up request)

  - If it is a warm-up request, your function should do nothing

  - This is enough to keep it ready to process incoming requests

- Serverless has a plugin for this: the Serverless WarmUp Plugin

  - Generates a function that periodically calls other functions
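A minimal TypeScript sketch of a handler that recognises a warm-up request and returns immediately. It assumes the WarmUp plugin's default payload `{ "source": "serverless-plugin-warmup" }`; if you configure a custom payload, the check must change accordingly. The event and result types are simplified stand-ins, not the `aws-lambda` types.

```typescript
// Default payload source sent by the Serverless WarmUp Plugin (assumption:
// adjust if the plugin's `payload` option is customised).
const WARMUP_SOURCE = "serverless-plugin-warmup";

interface IncomingEvent {
  source?: string;
  [key: string]: unknown;
}

interface HttpResult {
  statusCode: number;
  body: string;
}

function isWarmupRequest(event: IncomingEvent): boolean {
  return event.source === WARMUP_SOURCE;
}

async function handler(event: IncomingEvent): Promise<HttpResult | string> {
  if (isWarmupRequest(event)) {
    // Do nothing else: the invocation itself keeps this instance warm.
    return "Lambda is warm!";
  }
  // Normal request processing would go here.
  return { statusCode: 200, body: "processed" };
}
```

Returning before any database or network call keeps warm-up invocations short, so they cost very little.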

Optimize - Function Code

- Re-use state between executions

- Avoid unnecessary invocations (for example, if a function should only be called when a new object is added to S3, use S3 event filters so that Lambda is invoked only for object-created events)

- Avoid sleep() calls in Lambda functions

  - You are paying for the time spent waiting

  - Use AWS Step Functions for orchestration

- Send multiple requests in parallel
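Two of these points, re-using state between executions and sending requests in parallel, can be sketched together in TypeScript. `Fetcher` stands in for any hypothetical async call (DynamoDB, S3, HTTP); the cache lives at module scope so warm invocations on the same instance reuse it.

```typescript
// Hypothetical async dependency standing in for a DynamoDB/S3/HTTP call.
type Fetcher = (id: string) => Promise<string>;

// Re-use state between executions: created once per function instance,
// outside the handler, so warm invocations skip already-fetched items.
const cache = new Map<string, string>();

async function getCached(id: string, fetcher: Fetcher): Promise<string> {
  const hit = cache.get(id);
  if (hit !== undefined) return hit;
  const value = await fetcher(id);
  cache.set(id, value);
  return value;
}

// Send independent requests in parallel: the total wait is roughly the
// slowest request rather than the sum of all of them.
async function fetchAll(ids: string[], fetcher: Fetcher): Promise<string[]> {
  return Promise.all(ids.map((id) => getCached(id, fetcher)));
}
```

Only cache data that is safe to share across invocations (configuration, reference data, clients), since the same instance may serve different users.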

Optimize - Avoid Running in VPC

- Only run a function in a VPC if it needs to access a protected endpoint

- Running in a VPC increases cold start time drastically (AWS's 2019 improvements to Lambda VPC networking have since reduced this penalty considerably)

  - Avoid if you can

  - Not needed in most cases

- AWS has a guide for deciding when to put Lambda functions in a VPC

  - Best Practices for Working with AWS Lambda Functions

Optimize - Bootstrapping New Runtime

- Select a different runtime

  - Go, Node.js, and Python have the lowest cold start times

  - Java and .NET have the highest cold start times

- Provision more memory

  - Provides a faster CPU

  - Allows the runtime to bootstrap faster

Optimize - More Memory

- Can save money

  - May seem counterintuitive

  - We pay more per unit of execution time if we provision more memory

- More memory => faster CPU

  - Spend less time running

  - Pay less for execution time
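A worked example of why more memory can be cheaper. Lambda bills compute in GB-seconds, memory (in GB) multiplied by duration (in seconds); the durations below are hypothetical, not measurements.

$$
\text{GB-seconds} = \frac{\text{memory (MB)}}{1024} \times \text{duration (s)}
$$

$$
\frac{128}{1024} \times 2\ \text{s} = 0.25\ \text{GB-s}
\qquad
\frac{512}{1024} \times 0.4\ \text{s} = 0.2\ \text{GB-s}
$$

With 4× the memory, if the function runs 5× faster, compute costs 20% less, and the per-request charge is the same in both cases. If the speed-up were smaller than the memory increase, the larger size would cost more, which is why measuring matters.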

Lambda Power Tuning

- Selecting an optimal memory size can be tricky

- aws-lambda-power-tuning

  - Runs a function with different memory sizes

  - Finds the most cost-efficient memory size
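The selection step that a power-tuning run performs can be sketched as follows: given an average duration measured at each memory size, pick the size with the lowest compute cost. This is a simplified model (GB-seconds only, ignoring the per-request charge and actual prices), and `Measurement`, `gbSeconds`, and `cheapest` are hypothetical names, not the aws-lambda-power-tuning API.

```typescript
// One measurement per memory setting, e.g. the average of several runs.
interface Measurement {
  memoryMb: number;
  avgDurationMs: number;
}

// Compute cost in GB-seconds: memory in GB multiplied by duration in seconds.
function gbSeconds(m: Measurement): number {
  return (m.memoryMb / 1024) * (m.avgDurationMs / 1000);
}

// Pick the memory size with the lowest compute cost per invocation.
function cheapest(measurements: Measurement[]): Measurement {
  if (measurements.length === 0) {
    throw new Error("No measurements");
  }
  return measurements.reduce((best, m) => (gbSeconds(m) < gbSeconds(best) ? m : best));
}
```

With made-up measurements of 2000 ms at 128 MB, 400 ms at 512 MB, and 300 ms at 1024 MB, `cheapest` picks 512 MB (0.2 GB-s versus 0.25 and 0.3).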

Optimize - Function Size

- Package functions individually

  - Supported by Webpack and Serverless

  - Re-build our application

By default, the Serverless Framework packages all the dependencies used by at least one Lambda function into a single zip package, and that same package is used by every Lambda function in the service.

The only difference between the functions is their handler.

```yaml
# serverless.yaml
package:
  individually: true
```

[Notice]: A smaller package reduces cold start time, but we might pay for it with a longer build (compilation) time.

Security

Lambda

- Application security (OWASP Top 10 still applies)

- Configuring IAM permissions

  - Define which function/role can access which resources

  - Use the principle of least privilege

- Data encryption

  - Enable encryption at rest for services like DynamoDB and Kinesis

- Secrets handling

  - Use KMS, AWS Secrets Manager, or SSM Parameter Store

API

- Use throttling

  - Can be configured per stage or per method

  - Throttle individual customers if applicable

- Validate incoming data

  - Do it in API Gateway or in your code

- Use a firewall to limit access to your API

  - AWS Web Application Firewall (WAF)

https://docs.aws.amazon.com/lambda/latest/dg/lambda-security.html
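For the "validate incoming data in your code" option, here is a minimal TypeScript sketch that treats the request body as untrusted input and rejects anything malformed before it reaches the business logic. The request shape (`name`, `description`), the 100-character limit, and `parseCreateGroupRequest` are hypothetical; in the course project the equivalent schema lives in `models/create-group-request.json` and is enforced by API Gateway.

```typescript
// Hypothetical request shape for creating a group.
interface CreateGroupRequest {
  name: string;
  description: string;
}

// Parse and validate an untrusted event.body; throws on invalid input.
function parseCreateGroupRequest(body: string | null): CreateGroupRequest {
  if (body === null) {
    throw new Error("Missing request body");
  }
  let data: unknown;
  try {
    data = JSON.parse(body);
  } catch {
    throw new Error("Body is not valid JSON");
  }
  if (typeof data !== "object" || data === null) {
    throw new Error("Body must be a JSON object");
  }
  const { name, description } = data as Record<string, unknown>;
  if (typeof name !== "string" || name.trim().length === 0 || name.length > 100) {
    throw new Error("name must be a non-empty string of at most 100 characters");
  }
  if (typeof description !== "string") {
    throw new Error("description must be a string");
  }
  return { name: name.trim(), description };
}
```

In a handler, a thrown error would typically be mapped to a `400` response. Validating in API Gateway rejects bad requests before the function is even invoked, so the two approaches complement each other.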

Lambda Minimal Privileges for Serverless

- By default, all our functions share the same set of permissions

- We need to set the minimal required privileges per function

- We will use the serverless-iam-roles-per-function plugin for this

```yaml
GetImages:
  handler: src/lambda/http/getImages.handler
  events:
    - http:
        method: get
        path: group/{groupId}/images
        cors: true
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:Query
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.IMAGES_TABLE}
    - Effect: Allow
      Action:
        - dynamodb:GetItem
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.GROUPS_TABLE}
```