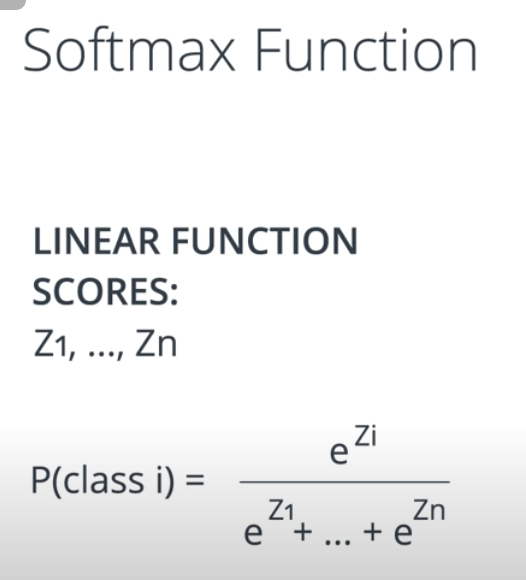

The Softmax Function

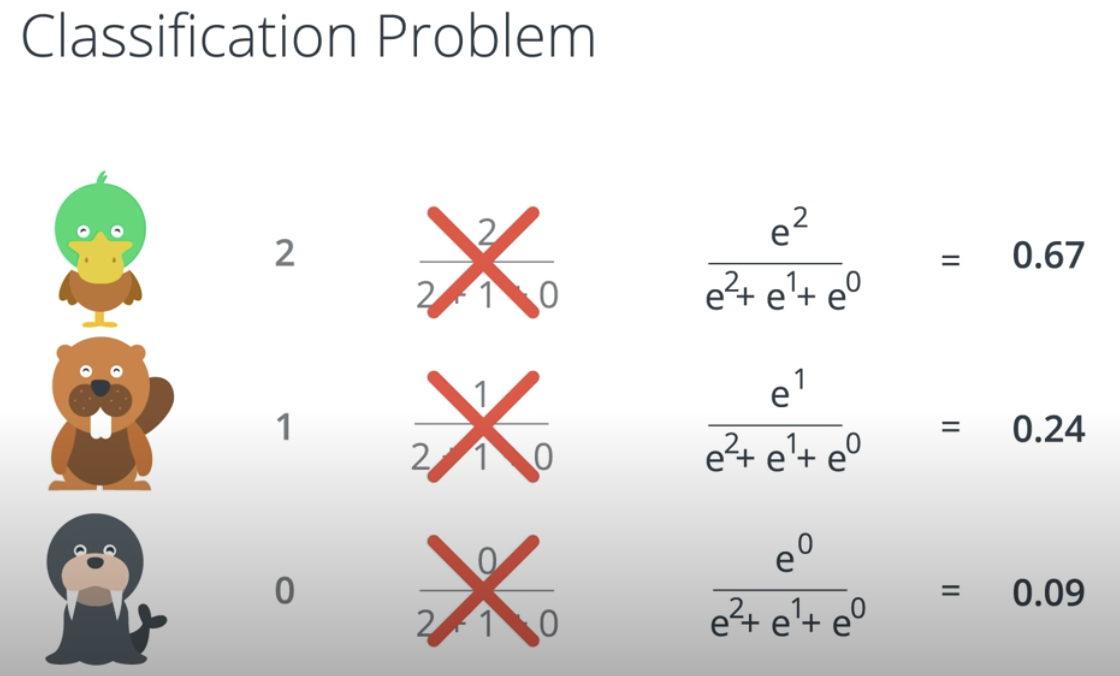

In the next video, we'll learn about the softmax function, which plays the same role as the sigmoid activation function when the problem has three or more classes.
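As a point of reference, the standard definition can be written out as follows. The symbols here are assumptions chosen for illustration and are not taken from the lesson: $Z_1, \dots, Z_n$ are the linear scores for the $n$ classes.

$$
P(\text{class } i) = \frac{e^{Z_i}}{e^{Z_1} + e^{Z_2} + \cdots + e^{Z_n}}
$$

With two classes whose scores are $z$ and $0$, this reduces to the sigmoid, which is the sense in which softmax extends it:

$$
\frac{e^{z}}{e^{z} + e^{0}} = \frac{1}{1 + e^{-z}}
$$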

    import numpy as np

    def softmax(L):
        # Exponentiate each score, then divide by the total so the outputs sum to 1.
        expL = np.exp(L)
        sumExpL = sum(expL)
        result = []
        for i in expL:
            result.append(i * 1.0 / sumExpL)
        return result
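
The loop-based function above can also be written in vectorized form. The following is a minimal sketch rather than the lesson's own solution. The names `softmax_stable` and `sigmoid` are hypothetical, and subtracting the maximum score before exponentiating is a common numerical-stability trick that the lesson itself does not mention. It leaves the result unchanged mathematically but keeps `np.exp` from overflowing on large scores.

```python
import numpy as np

def softmax_stable(scores):
    """Vectorized softmax (hypothetical helper, not from the lesson)."""
    scores = np.asarray(scores, dtype=float)
    # Shifting by the max does not change the ratios, but avoids overflow.
    shifted = scores - np.max(scores)
    exp_scores = np.exp(shifted)
    return exp_scores / np.sum(exp_scores)

def sigmoid(z):
    """Standard logistic sigmoid, used here only for comparison."""
    return 1.0 / (1.0 + np.exp(-z))

# Three classes: the outputs are probabilities that sum to 1.
probs = softmax_stable([2.0, 1.0, 0.0])
print(probs, probs.sum())

# Two classes with scores (z, 0): the first probability matches sigmoid(z).
z = 1.5
print(softmax_stable([z, 0.0])[0], sigmoid(z))
```

The two-class comparison at the end illustrates why softmax is described as the multi-class counterpart of the sigmoid.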