Main contents:

1. Motivation

2. Clustering

3. Python implementation

I. Motivation

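The classifiers we implemented earlier were all trained on data sets that carry labels (classes). This kind of learning is called supervised learning. The labels are usually produced by manual annotation, which is costly.

In practice, however, raw data is usually unlabeled. Without labels, can we still sort such data into groups?

Yes. That is what the unsupervised learning method introduced in this post, clustering, does.

Supervised learning: learn from a data set whose samples carry class labels, and train a classification model that predicts the class of new samples.

Unsupervised learning: learn from a data set without class labels in order to discover which group each training sample belongs to.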

II. Clustering

Two clustering approaches:

1. Hierarchical clustering

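How hierarchical clustering works (the bottom-up, agglomerative variant): the number of clusters is not specified in advance. Initially every sample forms its own cluster; in each iteration the two closest clusters are merged, and this repeats until only one cluster remains.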
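The merge loop can be sketched in a few lines. This is a minimal illustration, assuming 1-D numeric points and single linkage; the function name `agglomerate` is hypothetical, and because it recomputes every pairwise cluster distance on each pass it is only meant for tiny inputs. It returns the merge history as nested 2-tuples, the same tree shape that the dendrogram printer in Section III draws.

```python
from itertools import combinations


def agglomerate(points):
    """Naive bottom-up clustering of 1-D numbers (single linkage).

    Each cluster is kept as (tree, members): `tree` is a nested tuple
    recording the merge history, `members` is the list of raw points.
    """
    clusters = [(p, [p]) for p in points]  # every point starts as its own cluster
    while len(clusters) > 1:
        # pick the pair of clusters whose closest members are nearest
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: min(abs(a - b)
                               for a in clusters[ij[0]][1]
                               for b in clusters[ij[1]][1]),
        )
        (tree_i, members_i), (tree_j, members_j) = clusters[i], clusters[j]
        del clusters[j]  # j > i, so index i is still valid afterwards
        clusters[i] = ((tree_i, tree_j), members_i + members_j)
    return clusters[0][0]


print(agglomerate([1, 2, 10, 11, 30]))  # prints (((1, 2), (10, 11)), 30)
```

The two tight pairs are merged first, then the pairs are joined, and the outlier 30 is attached last, so the root of the tree sits at the largest merge distance.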

The result is a binary tree (a dendrogram), somewhat similar to a Huffman tree, as shown in the figure below:

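Detail: there are three common ways to compute the distance between two clusters: single-linkage (the distance between their closest pair of points), complete-linkage (the distance between their farthest pair of points), and average-linkage (the mean distance over all pairs of points, one taken from each cluster).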
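The three linkage rules can be written as small functions over two clusters, each given as a list of coordinate tuples. This is an illustrative sketch; the function names are hypothetical, and `math.dist` (Python 3.8+) supplies the Euclidean distance between two points.

```python
import math
from itertools import product


def single_linkage(a, b):
    """Distance between the closest pair of points, one from each cluster."""
    return min(math.dist(p, q) for p, q in product(a, b))


def complete_linkage(a, b):
    """Distance between the farthest pair of points, one from each cluster."""
    return max(math.dist(p, q) for p, q in product(a, b))


def average_linkage(a, b):
    """Mean of all pairwise distances between the two clusters."""
    return sum(math.dist(p, q) for p, q in product(a, b)) / (len(a) * len(b))


A = [(0, 0), (1, 0)]
B = [(4, 0), (6, 0)]
print(single_linkage(A, B), complete_linkage(A, B), average_linkage(A, B))
# prints 3.0 6.0 4.5
```

Single linkage tends to chain clusters together along thin bridges of points, while complete linkage favours compact clusters; average linkage sits between the two. The hierarchical listing in Section III keeps the smaller of the two inherited distances when it merges neighbor lists, which corresponds to single linkage.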

For details, see:

http://baike.baidu.com/link?url=OxYi2gA8dsfvyg8EjnxzwNkh3YHpqC8rePFdNeOUDYbnzE1XSKDxAj1-F9P0htnUHnUBx7vBflMLjWZdajcan_

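2. K-means clustering

K-means can be viewed as a typical application of the EM algorithm (a special case with hard cluster assignments), and it is one of the most widely used clustering algorithms.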

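Clustering procedure:

1) Randomly choose k points as the initial cluster centers;

2) For each sample, find the nearest cluster center and assign the sample to that cluster;

3) Recompute the center (the mean point) of each cluster;

4) Repeat steps 2 and 3 until the cluster centers no longer change or change only slightly. (The procedure is not guaranteed to reach the global optimum: like gradient descent, it can settle in a local optimum that depends on the choice of initial centers.)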
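Steps 1) to 4) can be sketched compactly as follows. This is a minimal version of the usual Lloyd iteration, assuming the points are coordinate tuples and using "centers did not move at all" as the stopping test; `kmeans`, `max_iter` and `seed` are hypothetical names, and keeping the previous center when a cluster becomes empty is just one possible rule.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=None):
    """Plain k-means on a list of coordinate tuples; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # step 1: k distinct data points as centers
    for _ in range(max_iter):
        # step 2: label each point with the index of its nearest center
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # step 3: move every center to the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # empty cluster: keep old center
        # step 4: stop once the centers no longer move
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, labels


pts = [(0, 0), (0, 1), (10, 10), (10, 11)]
centroids, labels = kmeans(pts, k=2, seed=0)
print(centroids, labels)
```

Steps 2 and 3 are the E-step and M-step of the EM view mentioned above: the assignment fixes the hidden cluster labels, and the mean update re-estimates the parameters given those labels.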

During the iterations the number of non-empty clusters can drop below k; that is, some clusters may end up empty.
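A small hand-picked example (hypothetical data) shows how this happens: a badly placed starting center attracts no points in the assignment step, so its new mean would be 0/0. Any implementation therefore needs a rule for this case, for example keeping the old center or re-seeding it from the data. The helper name `assign` is illustrative.

```python
import math


def assign(points, centroids):
    """One k-means assignment step: index of the nearest centroid for each point."""
    return [min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points]


points = [(0,), (1,), (10,), (11,)]
centroids = [(0,), (5,), (100,)]  # the center at 100 is deliberately far away
labels = assign(points, centroids)
sizes = [labels.count(c) for c in range(len(centroids))]
print(labels, sizes)  # prints [0, 0, 1, 1] [2, 2, 0]
```

Cluster 2 receives no points, so dividing by its size when recomputing the mean would raise a `ZeroDivisionError`.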

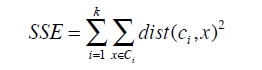

Measuring/evaluating the clustering result:
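One common internal measure, and the one the listings in Section III print as "Final SSE", is the sum of squared errors: SSE = sum over clusters j, sum over points x in cluster C_j, of ||x - mu_j||^2, where mu_j is the centroid of C_j. A lower SSE means tighter clusters, but SSE keeps shrinking as k grows (it reaches 0 when every point is its own cluster), so on its own it cannot choose k. The sketch below assumes points, labels and centroids are plain Python lists; the function name `sse` is illustrative.

```python
import math


def sse(points, labels, centroids):
    """Sum of squared Euclidean distances from each point to its assigned centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


points = [(0, 0), (0, 2), (10, 0)]
labels = [0, 0, 1]
centroids = [(0, 1), (10, 0)]
print(sse(points, labels, centroids))  # prints 2.0
```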

3. k-means++

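k-means++ improves on k-means by changing how the initial cluster centers are chosen. The first center is picked uniformly at random from the data; each further center is picked with probability proportional to D(x)^2, the squared distance from point x to its nearest already-chosen center. As a result the initial centers tend to be spread apart.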

(I don't fully understand this algorithm yet; see the code for details.)
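A standalone sketch of the k-means++ seeding step, assuming the points are coordinate tuples and that there are at least k distinct points (otherwise every weight can become 0 and the weighted draw fails). `kmeanspp_init` and its `rng` parameter are hypothetical names; the weighted draw uses `random.choices` (Python 3.6+) instead of the hand-written roulette wheel in the listing below.

```python
import math
import random


def kmeanspp_init(points, k, rng=random):
    """k-means++ seeding: choose k initial centers from `points`."""
    centers = [rng.choice(points)]  # first center: uniformly at random
    while len(centers) < k:
        # D(x)^2: squared distance from each point to its nearest chosen center
        d2 = [min(math.dist(p, c) for c in centers) ** 2 for p in points]
        # next center drawn with probability proportional to D(x)^2;
        # points that already are centers have weight 0 and cannot be re-picked
        centers.append(rng.choices(points, weights=d2, k=1)[0])
    return centers


pts = [(0, 0), (0, 1), (10, 10), (10, 11)]
print(kmeanspp_init(pts, 2, random.Random(0)))
```

The centers returned here replace step 1) of plain k-means; steps 2) to 4) stay unchanged.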

III. Python implementation

1. Hierarchical clustering

```python
"""
Example code for hierarchical clustering
"""
import math
from queue import PriorityQueue


def getMedian(alist):
    """get median value of list alist"""
    tmp = sorted(alist)
    alen = len(tmp)
    if (alen % 2) == 1:
        return tmp[alen // 2]
    else:
        return (tmp[alen // 2] + tmp[(alen // 2) - 1]) / 2


def normalizeColumn(column):
    """Normalize column using Modified Standard Score"""
    median = getMedian(column)
    asd = sum([abs(x - median) for x in column]) / len(column)
    result = [(x - median) / asd for x in column]
    return result


class hClusterer:
    """ this clusterer assumes that the first column of the data is a label
    not used in the clustering. The other columns contain numeric data"""

    def __init__(self, filename):
        self.counter = 0
        self.queue = PriorityQueue()
        with open(filename) as file:
            # skip blank lines (e.g. a trailing newline at the end of the file)
            lines = [line for line in file if line.strip()]
        header = lines[0].split(',')
        self.cols = len(header)
        self.data = [[] for i in range(len(header))]
        for line in lines[1:]:
            cells = line.strip().split(',')
            # column 0 is the label, the remaining columns are numbers
            self.data[0].append(cells[0])
            for cell in range(1, self.cols):
                self.data[cell].append(float(cells[cell]))
        # now normalize number columns (that is, skip the first column)
        for i in range(1, self.cols):
            self.data[i] = normalizeColumn(self.data[i])

        ###
        ###  I have read in the data and normalized the
        ###  columns. Now for each element i in the data, I am going to
        ###     1. compute the Euclidean Distance from element i to all the
        ###        other elements.  This data will be placed in neighbors,
        ###        which is a Python dictionary. Let's say i = 1, and I am
        ###        computing the distance to the neighbor j and let's say j
        ###        is 2. The neighbors dictionary for i will look like
        ###        {2: ((1,2), 1.23),  3: ((1, 3), 2.3)... }
        ###
        ###     2. find the closest neighbor
        ###
        ###     3. place the element on a priority queue, called simply queue,
        ###        based on the distance to the nearest neighbor (and a counter
        ###        used to break ties).

        # now push distances on queue
        rows = len(self.data[0])
        for i in range(rows):
            minDistance = math.inf
            nearestNeighbor = 0
            neighbors = {}
            for j in range(rows):
                if i != j:
                    dist = self.distance(i, j)
                    if i < j:
                        pair = (i, j)
                    else:
                        pair = (j, i)
                    neighbors[j] = (pair, dist)
                    if dist < minDistance:
                        minDistance = dist
                        nearestNeighbor = j
            # create nearest Pair
            if i < nearestNeighbor:
                nearestPair = (i, nearestNeighbor)
            else:
                nearestPair = (nearestNeighbor, i)
            # put instance on priority queue
            self.queue.put((minDistance, self.counter,
                            [[self.data[0][i]], nearestPair, neighbors]))
            self.counter += 1

    def distance(self, i, j):
        """Euclidean distance between rows i and j (numeric columns only)"""
        sumSquares = 0
        for k in range(1, self.cols):
            sumSquares += (self.data[k][i] - self.data[k][j]) ** 2
        return math.sqrt(sumSquares)

    def cluster(self):
        while True:
            topOne = self.queue.get()
            nearestPair = topOne[2][1]
            if self.queue.empty():
                # nothing left to merge with (only happens with a single row)
                return topOne[2][0]
            nextOne = self.queue.get()
            nearPair = nextOne[2][1]
            tmp = []
            ##
            ##  I have just popped two elements off the queue,
            ##  topOne and nextOne. I need to check whether nextOne
            ##  is topOne's nearest neighbor and vice versa.
            ##  If not, I will pop another element off the queue
            ##  until I find topOne's nearest neighbor. That is what
            ##  this while loop does.
            ##
            while nearPair != nearestPair:
                tmp.append((nextOne[0], self.counter, nextOne[2]))
                self.counter += 1
                nextOne = self.queue.get()
                nearPair = nextOne[2][1]
            ##
            ## this for loop pushes the elements I popped off in the
            ## above while loop.
            ##
            for item in tmp:
                self.queue.put(item)

            if len(topOne[2][0]) == 1:
                item1 = topOne[2][0][0]
            else:
                item1 = topOne[2][0]
            if len(nextOne[2][0]) == 1:
                item2 = nextOne[2][0][0]
            else:
                item2 = nextOne[2][0]
            ##  curCluster is, perhaps obviously, the new cluster
            ##  which combines cluster item1 with cluster item2.
            curCluster = (item1, item2)

            ## Now I am doing two things. First, finding the nearest
            ## neighbor to this new cluster. Second, building a new
            ## neighbors list by merging the neighbors lists of item1
            ## and item2. If the distance between item1 and element 23
            ## is 2 and the distance between item2 and element 23 is 4
            ## the distance between element 23 and the new cluster will
            ## be 2 (i.e., the shortest distance).
            ##
            minDistance = math.inf
            nearestPair = ()
            nearestNeighbor = ''
            merged = {}
            nNeighbors = nextOne[2][2]
            for (key, value) in topOne[2][2].items():
                if key in nNeighbors:
                    if nNeighbors[key][1] < value[1]:
                        dist = nNeighbors[key]
                    else:
                        dist = value
                    if dist[1] < minDistance:
                        minDistance = dist[1]
                        nearestPair = dist[0]
                        nearestNeighbor = key
                    merged[key] = dist

            if merged == {}:
                # no neighbors left: curCluster is the root of the tree
                return curCluster
            else:
                self.queue.put((minDistance, self.counter,
                                [curCluster, nearestPair, merged]))
                self.counter += 1


def printDendrogram(T, sep=3):
    """Print dendrogram of a binary tree.  Each tree node is represented by a
    length-2 tuple. printDendrogram is written and provided by David Eppstein
    2002. Accessed on 14 April 2014:
    http://code.activestate.com/recipes/139422-dendrogram-drawing/ """

    def isPair(T):
        return type(T) == tuple and len(T) == 2

    def maxHeight(T):
        if isPair(T):
            h = max(maxHeight(T[0]), maxHeight(T[1]))
        else:
            h = len(str(T))
        return h + sep

    activeLevels = {}

    def traverse(T, h, isFirst):
        if isPair(T):
            traverse(T[0], h - sep, 1)
            s = [' '] * (h - sep)
            s.append('|')
        else:
            s = list(str(T))
            s.append(' ')

        while len(s) < h:
            s.append('-')

        if (isFirst >= 0):
            s.append('+')
            if isFirst:
                activeLevels[h] = 1
            else:
                del activeLevels[h]

        A = list(activeLevels)
        A.sort()
        for L in A:
            if len(s) < L:
                while len(s) < L:
                    s.append(' ')
                s.append('|')

        print(''.join(s))

        if isPair(T):
            traverse(T[1], h - sep, 0)

    traverse(T, maxHeight(T), -1)


filename = 'dogs.csv'
hg = hClusterer(filename)
cluster = hg.cluster()
printDendrogram(cluster)
```
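All three listings rescale each numeric column with the Modified Standard Score before measuring distances: (x - median) / asd, where asd is the mean absolute deviation from the median. Using the median instead of the mean makes the scaling less sensitive to outliers. The sketch below computes the same quantity as `normalizeColumn`, using `statistics.median` from the standard library in place of the hand-written `getMedian`; the name `modified_standard_score` is illustrative. Note that a column whose values are all equal gives asd = 0 and a division by zero, which the listings do not guard against either.

```python
from statistics import median


def modified_standard_score(column):
    """(x - median) / asd, where asd is the mean absolute deviation from the median."""
    med = median(column)
    asd = sum(abs(x - med) for x in column) / len(column)
    return [(x - med) / asd for x in column]


print(modified_standard_score([1, 2, 3, 4, 10]))
```

Here the median is 3 and asd is (2 + 1 + 0 + 1 + 7) / 5 = 2.2, so the outlier 10 maps to about 3.18 while the other values stay within about 1 of zero.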

2. k-means

```python
"""
Implementation of the K-means algorithm
for the book "A Programmer's Guide to Data Mining"
http://www.guidetodatamining.com
"""
import math
import random


def getMedian(alist):
    """get median of list"""
    tmp = sorted(alist)
    alen = len(tmp)
    if (alen % 2) == 1:
        return tmp[alen // 2]
    else:
        return (tmp[alen // 2] + tmp[(alen // 2) - 1]) / 2


def normalizeColumn(column):
    """normalize the values of a column using Modified Standard Score
    that is (each value - median) / (absolute standard deviation)"""
    median = getMedian(column)
    asd = sum([abs(x - median) for x in column]) / len(column)
    result = [(x - median) / asd for x in column]
    return result


class kClusterer:
    """ Implementation of kMeans Clustering
    This clusterer assumes that the first column of the data is a label
    not used in the clustering. The other columns contain numeric data
    """

    def __init__(self, filename, k):
        """ k is the number of clusters to make
        This init method:
           1. reads the data from the file named filename
           2. stores that data by column in self.data
           3. normalizes the data using Modified Standard Score
           4. randomly selects the initial centroids
           5. assigns points to clusters associated with those centroids
        """
        self.k = k
        self.counter = 0
        self.iterationNumber = 0
        # used to keep track of % of points that change cluster membership
        # in an iteration
        self.pointsChanged = 0
        # Sum of Squared Error
        self.sse = 0
        #
        # read data from file
        #
        with open(filename) as file:
            lines = [line for line in file if line.strip()]
        header = lines[0].split(',')
        self.cols = len(header)
        self.data = [[] for i in range(len(header))]
        # we are storing the data by column.
        # For example, self.data[0] is the data from column 0.
        # self.data[0][10] is the column 0 value of item 10.
        for line in lines[1:]:
            cells = line.strip().split(',')
            self.data[0].append(cells[0])  # column 0: label
            for cell in range(1, self.cols):
                self.data[cell].append(float(cells[cell]))
        self.datasize = len(self.data[1])
        self.memberOf = [-1 for x in range(len(self.data[1]))]
        #
        # now normalize number columns
        #
        for i in range(1, self.cols):
            self.data[i] = normalizeColumn(self.data[i])

        # select random centroids from existing points
        random.seed()
        self.centroids = [[self.data[i][r] for i in range(1, len(self.data))]
                          for r in random.sample(range(len(self.data[0])), self.k)]
        self.assignPointsToCluster()

    def updateCentroids(self):
        """Using the points in the clusters, determine the centroid
        (mean point) of each cluster"""
        members = [self.memberOf.count(i) for i in range(len(self.centroids))]
        newCentroids = []
        for centroid in range(len(self.centroids)):
            if members[centroid] == 0:
                # empty cluster: its mean is undefined, so keep the old centroid
                newCentroids.append(self.centroids[centroid])
                continue
            newCentroids.append(
                [sum([self.data[k][i] for i in range(len(self.data[0]))
                      if self.memberOf[i] == centroid]) / members[centroid]
                 for k in range(1, len(self.data))])
        self.centroids = newCentroids

    def assignPointToCluster(self, i):
        """ assign point to cluster based on distance from centroids"""
        minDist = math.inf
        clusterNum = -1
        for centroid in range(self.k):
            dist = self.euclideanDistance(i, centroid)
            if dist < minDist:
                minDist = dist
                clusterNum = centroid
        # here is where I will keep track of changing points
        if clusterNum != self.memberOf[i]:
            self.pointsChanged += 1
        # add square of distance to running sum of squared error
        self.sse += minDist ** 2
        return clusterNum

    def assignPointsToCluster(self):
        """ assign each data point to a cluster"""
        self.pointsChanged = 0
        self.sse = 0
        self.memberOf = [self.assignPointToCluster(i)
                         for i in range(len(self.data[1]))]

    def euclideanDistance(self, i, j):
        """ compute distance of point i from centroid j"""
        sumSquares = 0
        for k in range(1, self.cols):
            sumSquares += (self.data[k][i] - self.centroids[j][k - 1]) ** 2
        return math.sqrt(sumSquares)

    def kCluster(self):
        """the method that actually performs the clustering
        As you can see this method repeatedly
            updates the centroids by computing the mean point of each cluster
            re-assigns the points to clusters based on these new centroids
        until the number of points that change cluster membership is less than 1%.
        """
        done = False
        while not done:
            self.iterationNumber += 1
            self.updateCentroids()
            self.assignPointsToCluster()
            #
            # we are done if fewer than 1% of the points change clusters
            #
            if float(self.pointsChanged) / len(self.memberOf) < 0.01:
                done = True
        print("Final SSE: %f" % self.sse)

    def showMembers(self):
        """Display the results"""
        for centroid in range(len(self.centroids)):
            print(" Class %i ========" % centroid)
            for name in [self.data[0][i] for i in range(len(self.data[0]))
                         if self.memberOf[i] == centroid]:
                print(name)


##
## RUN THE K-MEANS CLUSTERER ON THE DOG DATA USING K = 3
###
# change the path in the following to match where dogs.csv is on your machine
km = kClusterer('dogs.csv', 3)
km.kCluster()
km.showMembers()
```

3. k-means++

```python
"""
Implementation of the K-means++ algorithm
for the book "A Programmer's Guide to Data Mining"
http://www.guidetodatamining.com
"""
import math
import random


def getMedian(alist):
    """get median of list"""
    tmp = sorted(alist)
    alen = len(tmp)
    if (alen % 2) == 1:
        return tmp[alen // 2]
    else:
        return (tmp[alen // 2] + tmp[(alen // 2) - 1]) / 2


def normalizeColumn(column):
    """normalize the values of a column using Modified Standard Score
    that is (each value - median) / (absolute standard deviation)"""
    median = getMedian(column)
    asd = sum([abs(x - median) for x in column]) / len(column)
    result = [(x - median) / asd for x in column]
    return result


class kClusterer:
    """ Implementation of kMeans Clustering
    This clusterer assumes that the first column of the data is a label
    not used in the clustering. The other columns contain numeric data
    """

    def __init__(self, filename, k):
        """ k is the number of clusters to make
        This init method:
           1. reads the data from the file named filename
           2. stores that data by column in self.data
           3. normalizes the data using Modified Standard Score
           4. selects the initial centroids with the k-means++ method
           5. assigns points to clusters associated with those centroids
        """
        self.k = k
        self.counter = 0
        self.iterationNumber = 0
        # used to keep track of % of points that change cluster membership
        # in an iteration
        self.pointsChanged = 0
        # Sum of Squared Error
        self.sse = 0
        #
        # read data from file
        #
        with open(filename) as file:
            lines = [line for line in file if line.strip()]
        header = lines[0].split(',')
        self.cols = len(header)
        self.data = [[] for i in range(len(header))]
        # we are storing the data by column.
        # For example, self.data[0] is the data from column 0.
        # self.data[0][10] is the column 0 value of item 10.
        for line in lines[1:]:
            cells = line.strip().split(',')
            self.data[0].append(cells[0])  # column 0: label
            for cell in range(1, self.cols):
                self.data[cell].append(float(cells[cell]))
        self.datasize = len(self.data[1])
        self.memberOf = [-1 for x in range(len(self.data[1]))]
        #
        # now normalize number columns
        #
        for i in range(1, self.cols):
            self.data[i] = normalizeColumn(self.data[i])

        # select initial centroids from existing points (k-means++)
        random.seed()
        self.selectInitialCentroids()
        self.assignPointsToCluster()

    def showData(self):
        for i in range(len(self.data[0])):
            print("%20s   %8.4f  %8.4f" %
                  (self.data[0][i], self.data[1][i], self.data[2][i]))

    def distanceToClosestCentroid(self, point, centroidList):
        """distance from data point `point` to the nearest point in centroidList"""
        result = self.eDistance(point, centroidList[0])
        for centroid in centroidList[1:]:
            distance = self.eDistance(point, centroid)
            if distance < result:
                result = distance
        return result

    def selectInitialCentroids(self):
        """implement the k-means++ method of selecting
        the set of initial centroids"""
        centroids = []
        total = 0
        # first step is to select a random first centroid
        current = random.choice(range(len(self.data[0])))
        centroids.append(current)
        # loop to select the rest of the centroids, one at a time
        for i in range(0, self.k - 1):
            # for every point in the data find its squared distance D(x)^2
            # to the closest centroid; k-means++ weights points by D(x)^2
            weights = [self.distanceToClosestCentroid(x, centroids) ** 2
                       for x in range(len(self.data[0]))]
            total = sum(weights)
            # instead of raw distances, convert so sum of weight = 1
            weights = [x / total for x in weights]
            #
            # now roll virtual die
            num = random.random()
            total = 0
            x = -1
            # the roulette wheel simulation; the bound on x stops the loop
            # from running past the end because of floating-point round-off
            while total < num and x < len(weights) - 1:
                x += 1
                total += weights[x]
            centroids.append(x)
        self.centroids = [[self.data[i][r] for i in range(1, len(self.data))]
                          for r in centroids]

    def updateCentroids(self):
        """Using the points in the clusters, determine the centroid
        (mean point) of each cluster"""
        members = [self.memberOf.count(i) for i in range(len(self.centroids))]
        newCentroids = []
        for centroid in range(len(self.centroids)):
            if members[centroid] == 0:
                # empty cluster: its mean is undefined, so keep the old centroid
                newCentroids.append(self.centroids[centroid])
                continue
            newCentroids.append(
                [sum([self.data[k][i] for i in range(len(self.data[0]))
                      if self.memberOf[i] == centroid]) / members[centroid]
                 for k in range(1, len(self.data))])
        self.centroids = newCentroids

    def assignPointToCluster(self, i):
        """ assign point to cluster based on distance from centroids"""
        minDist = math.inf
        clusterNum = -1
        for centroid in range(self.k):
            dist = self.euclideanDistance(i, centroid)
            if dist < minDist:
                minDist = dist
                clusterNum = centroid
        # here is where I will keep track of changing points
        if clusterNum != self.memberOf[i]:
            self.pointsChanged += 1
        # add square of distance to running sum of squared error
        self.sse += minDist ** 2
        return clusterNum

    def assignPointsToCluster(self):
        """ assign each data point to a cluster"""
        self.pointsChanged = 0
        self.sse = 0
        self.memberOf = [self.assignPointToCluster(i)
                         for i in range(len(self.data[1]))]

    def eDistance(self, i, j):
        """ compute distance between data points i and j"""
        sumSquares = 0
        for k in range(1, self.cols):
            sumSquares += (self.data[k][i] - self.data[k][j]) ** 2
        return math.sqrt(sumSquares)

    def euclideanDistance(self, i, j):
        """ compute distance of point i from centroid j"""
        sumSquares = 0
        for k in range(1, self.cols):
            sumSquares += (self.data[k][i] - self.centroids[j][k - 1]) ** 2
        return math.sqrt(sumSquares)

    def kCluster(self):
        """the method that actually performs the clustering
        As you can see this method repeatedly
            updates the centroids by computing the mean point of each cluster
            re-assigns the points to clusters based on these new centroids
        until the number of points that change cluster membership is less than 1%.
        """
        done = False
        while not done:
            self.iterationNumber += 1
            self.updateCentroids()
            self.assignPointsToCluster()
            #
            # we are done if fewer than 1% of the points change clusters
            #
            if float(self.pointsChanged) / len(self.memberOf) < 0.01:
                done = True
        print("Final SSE: %f" % self.sse)

    def showMembers(self):
        """Display the results"""
        for centroid in range(len(self.centroids)):
            print(" Class %i ========" % centroid)
            for name in [self.data[0][i] for i in range(len(self.data[0]))
                         if self.memberOf[i] == centroid]:
                print(name)


##
## RUN THE K-MEANS CLUSTERER ON THE DOG DATA USING K = 3
###
km = kClusterer('dogs.csv', 3)
km.kCluster()
km.showMembers()
```