Let me start with a few questions and answers so that readers understand the purpose of this article.

What is site search, and how does it differ from ordinary search?

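Many websites have a search feature, and many of them implement it with the SQL LIKE operator. LIKE only matches the keyword as one contiguous substring, so it cannot do fuzzy matching: if I search for ".net学习" and the text contains ".net的学习", LIKE will not find it. That clearly fails the requirement, whereas a site search built on a full-text index can find it. LIKE also causes a full table scan, which puts heavy load on the database. Why not use the database's built-in full-text search? It is used just like ordinary SQL, which makes it very simple, but it is not flexible enough.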
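
The difference between substring matching and token matching can be sketched in a few lines. This is only an illustration, not how a database or Lucene.Net works internally: the class name `LikeVsTokenMatch` is made up, and the token lists are written by hand on the assumption that a segmenter would split ".net的学习" into ".net", "的" and "学习".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LikeVsTokenMatch
{
    // Mimics SQL "LIKE '%keyword%'": true only when the keyword
    // appears in the text as one contiguous substring.
    public static bool LikeMatch(string text, string keyword)
    {
        return text.IndexOf(keyword, StringComparison.OrdinalIgnoreCase) >= 0;
    }

    // Token-based match: true when every query token is found
    // among the document tokens, regardless of what sits between them.
    public static bool TokenMatch(IEnumerable<string> docTokens, IEnumerable<string> queryTokens)
    {
        var set = new HashSet<string>(docTokens, StringComparer.OrdinalIgnoreCase);
        return queryTokens.All(t => set.Contains(t));
    }
}
```

With these helpers, `LikeMatch(".net的学习", ".net学习")` fails because "的" breaks the substring, while `TokenMatch` on the hand-written tokens succeeds.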

This article borrows techniques from other experts and fellow bloggers. Thank you!

Technologies used for site search

- Log4Net: logging

- Lucene.Net: a full-text search library; it can only search text

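- Word segmentation (Lucene.Net provides StandardAnalyzer, which does unigram segmentation: the text is split character by character, so each Chinese character becomes one term)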

- PanGu segmentation (盘古分词): dictionary-based word segmentation; the dictionary can be maintained
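
To make the contrast between unigram and dictionary-based segmentation concrete, here is a small sketch. It is not PanGu's algorithm and not StandardAnalyzer's code: `SegmentationSketch` is a hypothetical class, and the dictionary-based method uses plain forward maximum matching as a stand-in technique.

```csharp
using System;
using System.Collections.Generic;

public static class SegmentationSketch
{
    // Unigram segmentation: every character becomes its own token.
    public static List<string> Unigram(string text)
    {
        var tokens = new List<string>();
        foreach (char c in text)
        {
            tokens.Add(c.ToString());
        }
        return tokens;
    }

    // Dictionary-based segmentation via forward maximum matching:
    // at each position take the longest dictionary word, or a single
    // character when no dictionary word starts there.
    public static List<string> DictionaryBased(string text, ISet<string> dictionary, int maxWordLength)
    {
        var tokens = new List<string>();
        int pos = 0;
        while (pos < text.Length)
        {
            int len = Math.Min(maxWordLength, text.Length - pos);
            // Shrink the window until it is a dictionary word or one character long.
            while (len > 1 && !dictionary.Contains(text.Substring(pos, len)))
            {
                len--;
            }
            tokens.Add(text.Substring(pos, len));
            pos += len;
        }
        return tokens;
    }
}
```

For the text "站内搜索" and a dictionary containing "站内" and "搜索", `Unigram` yields four single-character tokens, while `DictionaryBased` yields the two words. Adding a word to the dictionary changes the result, which is the sense in which a dictionary-based segmenter can be maintained.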

First, the new SearchHelper class is made a singleton. It is used from many places, yet we want exactly one object to operate on the index. The code is as follows:

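    #region Singleton
    // Static field that holds the single instance of the class.
    private static SearchHelper uniqueInstance;
    // Lock object used to synchronize threads.
    private static readonly object locker = new object();

    // Private constructor so that outside code cannot create instances.
    private SearchHelper() { }

    /// <summary>
    /// Public method that provides the global access point
    /// (a public property would work equally well).
    /// </summary>
    /// <returns></returns>
    public static SearchHelper GetInstance()
    {
        // The first thread to arrive takes the lock on locker. A second thread
        // that reaches this point sees the lock held and waits until the first
        // thread leaves the lock statement, which releases the lock.
        lock (locker)
        {
            // Create the instance if it does not exist yet; otherwise return it as is.
            if (uniqueInstance == null)
            {
                uniqueInstance = new SearchHelper();
            }
        }
        return uniqueInstance;
    }
    #endregion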
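
As an alternative sketch, the same guarantee (one instance, created safely under concurrent access) can be obtained from `Lazy<T>` in .NET 4 and later, which avoids taking a lock on every call. The class name `LazySearchHelper` is hypothetical and stands in for SearchHelper; this is not the article's implementation.

```csharp
using System;

public sealed class LazySearchHelper
{
    // Lazy<T> is thread-safe by default: the factory runs at most once,
    // even when several threads read Value at the same time.
    private static readonly Lazy<LazySearchHelper> instance =
        new Lazy<LazySearchHelper>(() => new LazySearchHelper());

    // Private constructor so that outside code cannot create instances.
    private LazySearchHelper() { }

    // Global access point, mirroring GetInstance() above.
    public static LazySearchHelper GetInstance()
    {
        return instance.Value;
    }
}
```

Every call to `LazySearchHelper.GetInstance()` returns the same object reference.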

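Next, Lucene.Net requires the searchable content to be indexed and saved to an index before it can be searched. For this we introduce the producer-consumer pattern. The producer side runs whenever an article is added, modified or deleted: the corresponding index operation is queued and handed to another thread, and that thread is our consumer. The pattern is used because the index must be locked before it is written and unlocked once the write is finished, so only one object may operate on the index at a time; otherwise the data could be corrupted.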

Let's look at the producer first:

    // Job queue: holds the jobs created by the producers until the consumer takes them.
    // A Queue is used instead of a List to avoid mix-ups when removing items.
    private Queue<IndexJob> jobs = new Queue<IndexJob>();

    /// <summary>
    /// A job: the article Id and the type of operation.
    /// </summary>
    class IndexJob
    {
        public string Id { get; set; }
        public JobType JobType { get; set; }
        // The article entity class of this project; define your own as needed.
        public HaiMi.Data.Article art { get; set; }
    }

    /// <summary>
    /// Operation type: add or remove.
    /// </summary>
    enum JobType { Add, Remove }

    #region Adding jobs
    public void AddArticle(HaiMi.Data.Article model)
    {
        IndexJob job = new IndexJob();
        job.Id = model.Id;
        job.JobType = JobType.Add;
        job.art = model;
        logger.Debug(model.Id + " added to the job queue");
        // Queue<T> is not thread-safe, so guard the enqueue with a lock.
        lock (jobs) { jobs.Enqueue(job); }
    }

    public void RemoveArticle(HaiMi.Data.Article model)
    {
        IndexJob job = new IndexJob();
        job.JobType = JobType.Remove;
        job.art = model;
        job.Id = model.Id;
        logger.Debug(model.Id + " added to the job queue for removal");
        lock (jobs) { jobs.Enqueue(job); }
    }
    #endregion

Next is the consumer, which processes the jobs on its own dedicated thread:

    #region Index jobs
    /// <summary>
    /// Index job thread
    /// </summary>
    private void IndexOn()
    {
        try
        {
            logger.Debug("Index job thread started");
            while (true)
            {
                // No pending jobs: sleep for 5 seconds, then check again.
                if (jobs.Count == 0)
                {
                    Thread.Sleep(5 * 1000);
                    continue;
                }
                // Create the index directory if it does not exist.
                if (!System.IO.Directory.Exists(IndexDic))
                {
                    System.IO.Directory.CreateDirectory(IndexDic);
                }
                FSDirectory directory = FSDirectory.Open(new DirectoryInfo(IndexDic), new NativeFSLockFactory());
                bool isUpdate = IndexReader.IndexExists(directory);
                logger.Debug("Index exists: " + isUpdate);
                if (isUpdate)
                {
                    // If the index directory is still locked, unlock it.
                    if (IndexWriter.IsLocked(directory))
                    {
                        logger.Debug("Unlocking the index");
                        IndexWriter.Unlock(directory);
                        logger.Debug("Index unlocked");
                    }
                }
                IndexWriter writer = new IndexWriter(directory, new PanGuAnalyzer(), !isUpdate, Lucene.Net.Index.IndexWriter.MaxFieldLength.UNLIMITED);
                ProcessJobs(writer);
                writer.Dispose();
                directory.Dispose();
                //writer.Close();
                //directory.Close();
                logger.Debug("All jobs indexed");
            }
        }
        catch (Exception ex)
        {
            logger.Error(ex.Message, ex);
        }
    }

    private void ProcessJobs(IndexWriter writer)
    {
        while (jobs.Count != 0)
        {
            IndexJob job;
            // Queue<T> is not thread-safe, so guard the dequeue with a lock.
            lock (jobs) { job = jobs.Dequeue(); }
            // Always delete the old document first; an update is a delete followed by an add.
            writer.DeleteDocuments(new Term("number", job.Id.ToString()));
            // Only "add article" jobs add a document afterwards.
            if (job.JobType == JobType.Add)
            {
                // The article data travels with the job.
                HaiMi.Data.Article art = job.art;
                if (art == null)
                {
                    continue;
                }
                string channel_id = art.Categoryid.ToString();
                string title = art.Title;
                string content = HtmlHelper.DropHTML(art.Content.ToString());
                string Addtime = art.Createtime.ToString("yyyy-MM-dd");
                Document document = new Document();
                // Only fields that need full-text search are ANALYZED.
                document.Add(new Field("number", job.Id.ToString(), Field.Store.YES, Field.Index.NOT_ANALYZED));
                document.Add(new Field("title", title, Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
                document.Add(new Field("channel_id", channel_id, Field.Store.YES, Field.Index.NOT_ANALYZED));
                document.Add(new Field("Addtime", Addtime, Field.Store.YES, Field.Index.NOT_ANALYZED));
                document.Add(new Field("content", content, Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
                writer.AddDocument(document);
                logger.Debug("Indexed " + job.Id);
            }
        }
    }
    #endregion
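
The producer-consumer hand-off itself can be sketched without Lucene.Net. The version below swaps the polled `Queue<T>` for `BlockingCollection<T>` from .NET 4 and later, which is thread-safe and lets the consumer block until a job arrives instead of sleeping. All names here (`IndexJobQueueSketch`, `Processed`) are hypothetical, and adding the Id to a list stands in for the real index update.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public sealed class IndexJobQueueSketch
{
    // Thread-safe FIFO queue shared by the producers and the single consumer.
    private readonly BlockingCollection<string> jobs = new BlockingCollection<string>();
    // Ids handled so far; written by the consumer only.
    private readonly List<string> processed = new List<string>();

    public IReadOnlyList<string> Processed { get { return processed; } }

    // Producer side: may be called from any thread.
    public void Enqueue(string articleId)
    {
        jobs.Add(articleId);
    }

    // Signals that no more jobs will arrive, so the consumer loop can end.
    public void CompleteAdding()
    {
        jobs.CompleteAdding();
    }

    // Consumer side: a single task drains the queue, so only one
    // worker ever touches the (simulated) index.
    public Task StartConsumer()
    {
        return Task.Run(() =>
        {
            // Blocks while the queue is empty; ends after CompleteAdding().
            foreach (string id in jobs.GetConsumingEnumerable())
            {
                processed.Add(id); // stand-in for the index update
            }
        });
    }
}
```

A caller would start the consumer once, call `Enqueue` from the request threads, and call `CompleteAdding` only at shutdown. In a long-running web application the consumer would simply keep waiting for jobs.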

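That completes building the index. Next comes searching, which involves segmenting the search keywords and then highlighting them in the results. The search code is as follows: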

    #region Searching the index
    public List<Model.article> SearchIndex(string Words, int PageSize, int PageIndex, out int _totalcount)
    {
        _totalcount = 0;
        Dictionary<string, string> dic = new Dictionary<string, string>();
        BooleanQuery bQuery = new BooleanQuery();

        // Search the title field.
        string title = GetKeyWordsSplitBySpace(Words);
        QueryParser parse = new QueryParser(Lucene.Net.Util.Version.LUCENE_29, "title", new PanGuAnalyzer());
        // The default operator only takes effect if it is set before Parse is called.
        parse.DefaultOperator = QueryParser.Operator.AND;
        Query query = parse.Parse(title);
        bQuery.Add(query, Occur.SHOULD);
        dic.Add("title", Words);

        // Search the content field.
        string content = GetKeyWordsSplitBySpace(Words);
        QueryParser parseC = new QueryParser(Lucene.Net.Util.Version.LUCENE_29, "content", new PanGuAnalyzer());
        parseC.DefaultOperator = QueryParser.Operator.AND;
        Query queryC = parseC.Parse(content);
        bQuery.Add(queryC, Occur.SHOULD);
        dic.Add("content", Words);

        if (bQuery != null && bQuery.GetClauses().Length > 0)
        {
            return GetSearchResult(bQuery, dic, PageSize, PageIndex, out _totalcount);
        }
        return null;
    }

    /// <summary>
    /// Runs the query and returns the requested page of results.
    /// </summary>
    /// <returns></returns>
    private List<Model.article> GetSearchResult(BooleanQuery bQuery, Dictionary<string, string> dicKeywords, int PageSize, int PageIndex, out int totalCount)
    {
        List<Model.article> list = new List<Model.article>();
        FSDirectory directory = FSDirectory.Open(new DirectoryInfo(IndexDic), new NoLockFactory());
        IndexReader reader = IndexReader.Open(directory, true);
        IndexSearcher searcher = new IndexSearcher(reader);
        TopScoreDocCollector collector = TopScoreDocCollector.Create(1000, true);
        // Sort by the "Addtime" string (yyyy-MM-dd), newest first. SortField.DOC would
        // ignore the field name and sort by document number instead.
        Sort sort = new Sort(new SortField("Addtime", SortField.STRING, true));
        searcher.Search(bQuery, null, collector);
        totalCount = collector.TotalHits; // total number of hits
        TopDocs docs = searcher.Search(bQuery, (Filter)null, PageSize * PageIndex, sort);
        if (docs != null && docs.TotalHits > 0)
        {
            // Only the hits that fall on the requested page are materialized.
            int start = (PageIndex - 1) * PageSize;
            int end = Math.Min(PageIndex * PageSize, docs.ScoreDocs.Length);
            for (int i = start; i < end; i++)
            {
                Document doc = searcher.Doc(docs.ScoreDocs[i].Doc);
                Model.article model = new Model.article()
                {
                    id = doc.Get("number").ToString(),
                    title = doc.Get("title").ToString(),
                    content = doc.Get("content").ToString(),
                    add_time = DateTime.Parse(doc.Get("Addtime").ToString()),
                    channel_id = doc.Get("channel_id").ToString()
                };
                list.Add(SetHighlighter(dicKeywords, model));
            }
        }
        return list;
    }

    /// <summary>
    /// Highlights the keywords.
    /// </summary>
    /// <param name="dicKeywords">Keywords per field</param>
    /// <param name="model">The data model to return</param>
    /// <returns></returns>
    private Model.article SetHighlighter(Dictionary<string, string> dicKeywords, Model.article model)
    {
        // The inner quotes must be escaped inside the C# string literal.
        SimpleHTMLFormatter simpleHTMLFormatter = new PanGu.HighLight.SimpleHTMLFormatter("<font color=\"red\">", "</font>");
        Highlighter highlighter = new PanGu.HighLight.Highlighter(simpleHTMLFormatter, new Segment());
        highlighter.FragmentSize = 250;
        string strTitle = string.Empty;
        string strContent = string.Empty;
        dicKeywords.TryGetValue("title", out strTitle);
        dicKeywords.TryGetValue("content", out strContent);
        if (!string.IsNullOrEmpty(strTitle))
        {
            string title = model.title;
            model.title = highlighter.GetBestFragment(strTitle, model.title);
            // Fall back to the original text when nothing was highlighted.
            if (string.IsNullOrEmpty(model.title))
            {
                model.title = title;
            }
        }
        if (!string.IsNullOrEmpty(strContent))
        {
            string content = model.content;
            model.content = highlighter.GetBestFragment(strContent, model.content);
            if (string.IsNullOrEmpty(model.content))
            {
                model.content = content;
            }
        }
        return model;
    }

    /// <summary>
    /// Converts the keywords into query format: segmented words separated by spaces, each with a boost.
    /// </summary>
    /// <param name="keywords"></param>
    /// <returns></returns>
    private string GetKeyWordsSplitBySpace(string keywords)
    {
        PanGuTokenizer ktTokenizer = new PanGuTokenizer();
        StringBuilder result = new StringBuilder();
        ICollection<WordInfo> words = ktTokenizer.SegmentToWordInfos(keywords);
        foreach (WordInfo word in words)
        {
            if (word == null)
            {
                continue;
            }
            // word^boost, where the boost is 3 raised to the word's rank.
            result.AppendFormat("{0}^{1}.0 ", word.Word, (int)Math.Pow(3, word.Rank));
        }
        return result.ToString().Trim();
    }
    #endregion
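
To see what string GetKeyWordsSplitBySpace hands to the query parser, the formatting step can be isolated from PanGu. In this sketch the segmented words and their ranks are passed in by hand; `BoostedQuerySketch` is a hypothetical name and the rank values used below are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class BoostedQuerySketch
{
    // Builds "word^boost.0 word^boost.0 ..." where boost = 3^rank,
    // the same formatting as in GetKeyWordsSplitBySpace.
    public static string Build(IEnumerable<KeyValuePair<string, int>> wordsWithRank)
    {
        var result = new StringBuilder();
        foreach (KeyValuePair<string, int> pair in wordsWithRank)
        {
            int boost = (int)Math.Pow(3, pair.Value);
            result.AppendFormat("{0}^{1}.0 ", pair.Key, boost);
        }
        return result.ToString().Trim();
    }
}
```

For the words "搜索" with rank 2 and "学习" with rank 1, `Build` returns `搜索^9.0 学习^3.0`, so a higher-ranked word weighs three times more per rank step. Words that contain query-syntax characters would need escaping before parsing; that step is left out here.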

That completes the SearchHelper class for site search. Now for how to use it. The class exposes the following methods:

Start the consumer thread in Global:

    /// <summary>
    /// Starts the consumer thread
    /// </summary>
    public void CustomerStart()
    {
        log4net.Config.XmlConfigurator.Configure();
        PanGu.Segment.Init(PanGuPath);
        Thread threadIndex = new Thread(IndexOn);
        threadIndex.IsBackground = true;
        threadIndex.Start();
    }

    protected void Application_Start(object sender, EventArgs e)
    {
        // Start the thread that scans the job queue and updates the index (the consumer).
        SearchHelper.GetInstance().CustomerStart();
    }

Wherever searchable content is added or modified, call the following method (model is the article entity class of your project):

SearchHelper.GetInstance().AddArticle(model);

Wherever searchable content is deleted, call the following method:

SearchHelper.GetInstance().RemoveArticle(model);

To search, use the following:

    List<Model.article> list = new List<Model.article>();
    try
    {
        int _totalcount = 0;
        int PageSize = 10;
        int PageIndex = 1;
        list = SearchHelper.GetInstance().SearchIndex(words, PageSize, PageIndex, out _totalcount);
    }
    catch (Exception)
    {
        // Rethrow unchanged; add logging here if needed.
        throw;
    }
    return View(list);
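
PageIndex is 1-based in this call, and the page window follows the same arithmetic as the check in GetSearchResult. The sketch below isolates that calculation; `PagingSketch` and `GetWindow` are hypothetical names, and `availableHits` stands for the number of hits actually returned by the search.

```csharp
using System;

public static class PagingSketch
{
    // Computes the half-open range [start, end) of hit positions
    // that belong to a 1-based page, clamped to the hits available.
    public static void GetWindow(int pageIndex, int pageSize, int availableHits, out int start, out int end)
    {
        int page = Math.Max(pageIndex, 1); // treat anything below 1 as the first page
        start = Math.Min((page - 1) * pageSize, availableHits);
        end = Math.Min(page * pageSize, availableHits);
    }
}
```

With 25 hits and a page size of 10, page 2 covers positions 10 to 19, page 3 covers the remaining five, and page 4 is empty because start and end are both 25.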

Finally, configure the index directory and the path of the PanGu configuration file:

    /// <summary>
    /// Index directory
    /// </summary>
    protected string IndexDic
    {
        get
        {
            //return Utils.GetXmlMapPath(DTKeys.FILE_INDEXDICPATH_XML_CONFING);
            // Path.Combine avoids the invalid "\l" escape of the plain string "\lucenedir".
            return System.IO.Path.Combine(System.AppDomain.CurrentDomain.BaseDirectory, "lucenedir");
        }
    }

    /// <summary>
    /// PanGu configuration file
    /// </summary>
    protected string PanGuPath
    {
        get
        {
            //return Utils.GetXmlMapPath(DTKeys.FILE_PANGU_XML_CONFING);
            return System.IO.Path.Combine(System.AppDomain.CurrentDomain.BaseDirectory, "PanGu", "PanGu.xml");
        }
    }

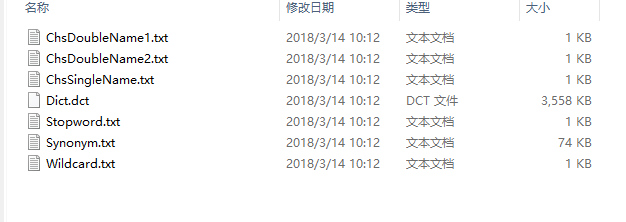

The dictionary files can be downloaded from the internet. Then set DictionaryPath in PanGu.xml to the directory where you store them:

<DictionaryPath>..Dictionaries</DictionaryPath>

That is all the code for the site search. The SearchHelper class can be downloaded from: http://files.cnblogs.com/beimeng/SearchHelper.rar