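1. Pick a topic or website that interests you. (No two students may choose the same one.)

2. Write a crawler in Python and scrape data on that topic from the web.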

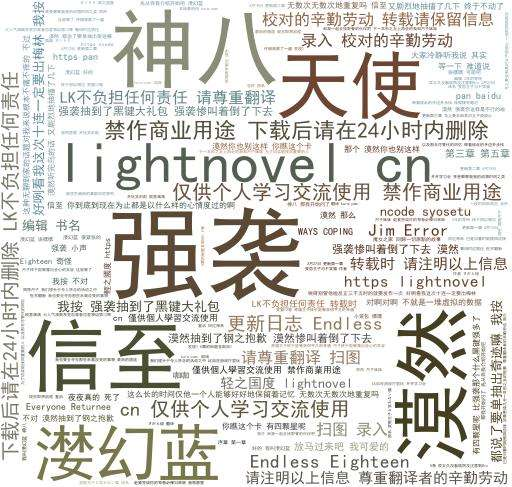

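3. Run text analysis on the scraped data and generate a word cloud.

4. Explain and interpret the results of the text analysis.

5. Write a complete blog post describing the implementation process, the problems encountered and how they were solved, the thinking behind the data analysis, and the conclusions.

6. Finally, submit all scraped data along with the source code for the crawler and the data analysis.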

import re
import requests
import numpy as np
import jieba.analyse
import matplotlib.pyplot as plt
from bs4 import BeautifulSoup
from PIL import Image
from wordcloud import WordCloud, ImageColorGenerator

# Append the text of a post to the output file
def writeNewsDetail(tag):
    with open('dog.txt', 'a', encoding='utf-8') as f:
        f.write(tag)

'''
def getPage(PageUrl):
    res = requests.get(PageUrl)
    res.encoding = "utf-8"
    soup = BeautifulSoup(res.text, "html.parser")
    n = soup.select("span a")[0].text
    print(n)
    return int(n)
'''

# Build the URL of each listing page (pages 1 to 9) and process it
def getgain():
    for i in range(1, 10):
        NeedUrl = 'https://www.lightnovel.cn/forum.php?mod=forumdisplay&fid=173&typeid=368&typeid=368&filter=typeid&page={}'.format(i)
        print(NeedUrl)
        getList(NeedUrl)

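# Fetch one thread page, print its title, time, and content, and save the content
def getinformation(url):
    res = requests.get(url)
    res.encoding = 'utf-8'
    soup = BeautifulSoup(res.text, 'html.parser')
    # Get the title
    title = soup.select("#thread_subject")[0].text
    print("Title:", title)
    # Get the posting time
    times = soup.select("div.pti div.authi em")[0].text
    # time = times.lstrip('发表于').rstrip('</em')
    # The escapes \d and \s are required; without them the pattern matches literal letters
    a = re.findall(r"\d+-\d+-\d+\s\d+:\d+", times, re.S)
    print("Time:", a)
    # Get the post content (first .t_f element)
    sss = soup.select(".t_f")[0].text
    print("Content:", sss)
    writeNewsDetail(sss)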
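
The timestamp pattern in `getinformation` only works when its `\d` and `\s` escapes are intact; without the backslashes it looks for the literal letters `d` and `s` and finds nothing. Here is a minimal sketch for checking the pattern in isolation. The sample string and the helper name `extract_timestamps` are assumptions made for illustration, not taken from the site or the original script.

```python
import re

# Matches timestamps such as "2019-4-1 12:30": date, whitespace, hour:minute.
TIMESTAMP_RE = re.compile(r"\d+-\d+-\d+\s\d+:\d+")


def extract_timestamps(text):
    """Return every date-time substring found in text (hypothetical helper)."""
    return TIMESTAMP_RE.findall(text)


# Assumed header format, for illustration only.
sample = "发表于 2019-4-1 12:30"
print(extract_timestamps(sample))

# The same pattern without backslashes matches nothing in the sample.
print(re.findall("d+-d+-d+sd+:d+", sample))
```

Using a raw string (`r"..."`) keeps Python from interpreting the backslashes before the regex engine sees them.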

# Collect every thread link on one listing page
def getList(Url):
    res = requests.get(Url)
    res.encoding = "utf-8"
    soup = BeautifulSoup(res.text, "html.parser")
    # Select all thread links on the page
    page_url = soup.select("a.s.xst")
    # Print each thread link, then fetch that thread's page
    listurl = None
    for i in page_url:
        listurl = 'https://www.lightnovel.cn/' + i.get('href')
        print(listurl)
        getinformation(listurl)
    # Return the last link processed (None if the page had no links)
    return listurl
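
`getList` builds each thread URL by string concatenation, which assumes every `href` is relative and has no leading slash. One hedged alternative is `urllib.parse.urljoin` from the standard library, which also copes with root-relative and absolute links. The helper name `to_absolute` and the sample paths below are made up for illustration.

```python
from urllib.parse import urljoin

BASE_URL = 'https://www.lightnovel.cn/'


def to_absolute(href, base=BASE_URL):
    """Resolve href against base (hypothetical helper, not in the original script)."""
    return urljoin(base, href)


# Relative, root-relative, and absolute inputs (sample paths are invented).
print(to_absolute('thread-1-1-1.html'))
print(to_absolute('/thread-1-1-1.html'))
print(to_absolute('https://www.lightnovel.cn/thread-1-1-1.html'))
```

All three calls resolve to the same absolute URL, so the crawler does not need to know which form the page uses.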

# Extract keywords from the saved text and draw the word cloud
def getWord():
    # Read the whole file in one call so that no line is skipped
    with open('dog.txt', 'r', encoding='utf-8') as f:
        lyric = f.read()
    # Extract the top 50 keywords and their weights with TextRank
    result = jieba.analyse.textrank(lyric, topK=50, withWeight=True)
    keywords = dict()
    for i in result:
        keywords[i[0]] = i[1]
    print(keywords)
    # Load the template image used as the word cloud mask
    image = Image.open('chaowei.jpg')
    graph = np.array(image)
    # Configure the word cloud
    wc = WordCloud(font_path='./fonts/simhei.ttf', background_color='White', max_words=50, mask=graph)
    wc.generate_from_frequencies(keywords)
    # Recolor the words using the colors of the template image
    image_color = ImageColorGenerator(graph)
    plt.imshow(wc.recolor(color_func=image_color))
    plt.axis("off")
    plt.show()
    wc.to_file('dream.png')

PageUrl = 'https://www.lightnovel.cn/forum-173-1.html'

getgain()

getWord()
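
When loading the scraped text for analysis, mixing line iteration with `f.read()` can drop the line consumed by the loop, so reading the file in a single call is the safer pattern. The sketch below uses a temporary file in place of `dog.txt`, and `read_all` is a hypothetical helper. It also shows that a list of `(word, weight)` pairs, the shape the script indexes as `i[0]` and `i[1]`, converts to the dict that `generate_from_frequencies` expects with a plain `dict()` call. The sample pairs are invented.

```python
import os
import tempfile


def read_all(path):
    """Return the full contents of a UTF-8 text file (hypothetical helper)."""
    with open(path, 'r', encoding='utf-8') as f:
        return f.read()


# A temporary file stands in for dog.txt.
with tempfile.NamedTemporaryFile('w', encoding='utf-8', suffix='.txt', delete=False) as tmp:
    tmp.write('first line\nsecond line\n')
    tmp_path = tmp.name

text = read_all(tmp_path)
os.remove(tmp_path)
print(text.splitlines())

# Invented (word, weight) pairs standing in for keyword extraction output.
pairs = [('小说', 1.0), ('翻译', 0.5)]
keywords = dict(pairs)
print(keywords)
```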