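kubeadm is the official deployment tool from the Kubernetes project. It aims to lower the barrier to using Kubernetes and to make deploying a cluster more convenient. More and more of the official documentation also assumes that Kubernetes itself runs in containers, so containerized deployment of Kubernetes has become the trend.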

I. Environment preparation

1. Base environment

| Hostname | OS | Container runtime | Kubernetes version | IP address | Role | CPU cores |
| --- | --- | --- | --- | --- | --- | --- |
| k8s-master | CentOS Linux 7 | Docker 1.13.1 | v1.13.2 | 192.168.10.5 | master | 4 |
| k8s-node-1 | CentOS Linux 7 | Docker 1.13.1 | v1.13.2 | 192.168.10.8 | node | 8 |
| k8s-node-2 | CentOS Linux 7 | Docker 1.13.1 | v1.13.2 | 192.168.10.9 | node | 8 |

2. Initialize the environment (all nodes):

2.1 Disable the firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld && setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

2.2 Disable swap

swapoff -a && sysctl -w vm.swappiness=0
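swapoff -a only turns swap off until the next reboot, and kubelet refuses to start by default while swap is enabled, so the swap entries in /etc/fstab should be disabled as well. A minimal sketch; `comment_out_swap` is a hypothetical helper that edits any fstab-format file it is given and keeps a `.bak` copy next to it:

```shell
# comment_out_swap FILE
# Prefixes every active (non-comment) line whose filesystem type (field 3) is
# "swap" with '#', so swap stays off after a reboot. Keeps FILE.bak as a backup.
comment_out_swap() {
    local fstab="$1"
    cp -p "$fstab" "$fstab.bak" || return 1
    # Leave comment lines untouched; comment out swap entries; copy the rest.
    awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab.bak" > "$fstab"
}
# Usage on every node:
#   swapoff -a && comment_out_swap /etc/fstab
```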

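2.3 Set the hostname and edit /etc/hosts

Run the matching command on each host:

hostnamectl --static set-hostname k8s-master    # on 192.168.10.5
hostnamectl --static set-hostname k8s-node-1    # on 192.168.10.8
hostnamectl --static set-hostname k8s-node-2    # on 192.168.10.9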

On every node, add the following entries to /etc/hosts:

vim /etc/hosts

192.168.10.5 k8s-master
192.168.10.8 k8s-node-1
192.168.10.9 k8s-node-2
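Editing /etc/hosts by hand on three machines is easy to get wrong. A sketch that appends the entries from the table above only when the hostname is not already mapped, so it can be re-run safely; `add_host_entry` and `add_cluster_hosts` are hypothetical helper names:

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless a non-comment line already lists NAME.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # awk exits 0 when NAME appears among the hostnames (fields 2..NF) of any line.
    if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# add_cluster_hosts FILE: the three hosts from the environment table.
add_cluster_hosts() {
    add_host_entry "$1" 192.168.10.5 k8s-master
    add_host_entry "$1" 192.168.10.8 k8s-node-1
    add_host_entry "$1" 192.168.10.9 k8s-node-2
}
# Usage on every node: add_cluster_hosts /etc/hosts
```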

2.4 Install Docker (all nodes)

yum -y install docker && systemctl enable docker && systemctl start docker

2.5 Kubernetes yum repository (all nodes)

cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
EOF

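2.6 Install kubelet, kubeadm and kubectl (all nodes)

yum install -y kubelet kubeadm kubectl
systemctl enable kubelet

Without explicit versions, yum installs the newest packages in the repository (v1.13.2 at the time of writing, as the output below shows). To reproduce this setup exactly, pin the versions instead: yum install -y kubelet-1.13.2 kubeadm-1.13.2 kubectl-1.13.2. Enabling kubelet here avoids the Service-Kubelet warning that kubeadm init and kubeadm join print later.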

[root@k8s-master ~]# kubectl version
Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:35:51Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}
The connection to the server localhost:8080 was refused - did you specify the right host or port?

The connection error is expected at this stage: kubectl has no cluster to talk to until kubeadm init has run. Tab completion confirms that all three tools are installed:

[root@k8s-master ~]# kube
kubeadm  kubectl  kubelet
[root@k8s-master ~]# kubeadm version
kubeadm version: &version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:33:30Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}
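Because kubeadm init below is run with --kubernetes-version=v1.13.2, it is worth checking that the installed binaries report the same version before continuing. A minimal sketch that relies only on the version.Info output format shown above; `git_version` is a hypothetical helper name:

```shell
# git_version: read `kubectl version` or `kubeadm version` output on stdin and
# print the first GitVersion value found (the client's, for kubectl).
git_version() {
    sed -n 's/.*GitVersion:"\([^"]*\)".*/\1/p' | head -n 1
}
# Usage:
#   want=v1.13.2
#   [ "$(kubeadm version | git_version)" = "$want" ] &&
#   [ "$(kubectl version --client | git_version)" = "$want" ] && echo "versions match"
```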

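II. Install the master node

1. Pull the base cluster component images. Because k8s.gcr.io and quay.io are often unreachable from mainland China, the script pulls the images from mirror repositories on Docker Hub, retags them with the names that kubeadm and the flannel manifest expect, and then removes the mirror tags. The master needs all of these images; the worker nodes need only kube-proxy, pause and flannel (see section III).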

[root@k8s-master yaml]# cat docker_pull.sh
docker pull mirrorgooglecontainers/kube-apiserver:v1.13.2
docker pull mirrorgooglecontainers/kube-controller-manager:v1.13.2
docker pull mirrorgooglecontainers/kube-scheduler:v1.13.2
docker pull mirrorgooglecontainers/kube-proxy:v1.13.2
docker pull mirrorgooglecontainers/pause:3.1
docker pull mirrorgooglecontainers/etcd:3.2.24
docker pull coredns/coredns:1.2.6
docker pull docker.io/dockerofwj/flannel

docker tag mirrorgooglecontainers/kube-apiserver:v1.13.2 k8s.gcr.io/kube-apiserver:v1.13.2
docker tag mirrorgooglecontainers/kube-controller-manager:v1.13.2 k8s.gcr.io/kube-controller-manager:v1.13.2
docker tag mirrorgooglecontainers/kube-scheduler:v1.13.2 k8s.gcr.io/kube-scheduler:v1.13.2
docker tag mirrorgooglecontainers/kube-proxy:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2
docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
docker tag mirrorgooglecontainers/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24
docker tag coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
docker tag docker.io/dockerofwj/flannel quay.io/coreos/flannel:v0.10.0-amd64

docker rmi mirrorgooglecontainers/kube-apiserver:v1.13.2
docker rmi mirrorgooglecontainers/kube-controller-manager:v1.13.2
docker rmi mirrorgooglecontainers/kube-scheduler:v1.13.2
docker rmi mirrorgooglecontainers/kube-proxy:v1.13.2
docker rmi mirrorgooglecontainers/pause:3.1
docker rmi mirrorgooglecontainers/etcd:3.2.24
docker rmi coredns/coredns:1.2.6
docker rmi docker.io/dockerofwj/flannel

[root@k8s-node-2 ~]# docker images
REPOSITORY                                        TAG             IMAGE ID       CREATED                  SIZE
k8s.gcr.io/kube-apiserver                         v1.13.2         177db4b8e93a   Less than a second ago   181 MB
k8s.gcr.io/kube-proxy                             v1.13.2         01cfa56edcfc   Less than a second ago   80.3 MB
docker.io/mirrorgooglecontainers/kube-scheduler   v1.13.2         3193be46e0b3   Less than a second ago   79.6 MB
k8s.gcr.io/kube-scheduler                         v1.13.2         3193be46e0b3   Less than a second ago   79.6 MB
k8s.gcr.io/coredns                                1.2.6           f59dcacceff4   2 months ago             40 MB
k8s.gcr.io/etcd                                   3.2.24          3cab8e1b9802   3 months ago             220 MB
quay.io/coreos/flannel                            v0.10.0-amd64   17ccf3fc30e3   4 months ago             44.6 MB
k8s.gcr.io/pause                                  3.1             da86e6ba6ca1   12 months ago            742 kB
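The pull, tag and rmi commands in docker_pull.sh repeat one pattern per image, so the script can be sketched as a loop over image pairs. The image lists are copied from the scripts in this article; `pull_and_retag` and the `DOCKER` override are assumptions added for illustration (`DOCKER=echo` prints the commands instead of running them):

```shell
# Each line: "<mirror image> <name expected by kubeadm / the flannel manifest>".
MASTER_IMAGES='
mirrorgooglecontainers/kube-apiserver:v1.13.2 k8s.gcr.io/kube-apiserver:v1.13.2
mirrorgooglecontainers/kube-controller-manager:v1.13.2 k8s.gcr.io/kube-controller-manager:v1.13.2
mirrorgooglecontainers/kube-scheduler:v1.13.2 k8s.gcr.io/kube-scheduler:v1.13.2
mirrorgooglecontainers/kube-proxy:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2
mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
mirrorgooglecontainers/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24
coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
docker.io/dockerofwj/flannel quay.io/coreos/flannel:v0.10.0-amd64
'

# Worker nodes only need these three (section III).
NODE_IMAGES='
mirrorgooglecontainers/kube-proxy:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2
mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
docker.io/dockerofwj/flannel quay.io/coreos/flannel:v0.10.0-amd64
'

# pull_and_retag LIST: pull each mirror image, give it the expected name,
# then remove the mirror tag. Stops at the first failing docker command.
pull_and_retag() {
    local docker="${DOCKER:-docker}" src dst
    while read -r src dst; do
        [ -n "$src" ] || continue   # skip the blank lines around the list
        "$docker" pull "$src" || return 1
        "$docker" tag "$src" "$dst" || return 1
        "$docker" rmi "$src" || return 1
    done <<EOF
$1
EOF
}
# Usage: pull_and_retag "$MASTER_IMAGES"   (master)
#        pull_and_retag "$NODE_IMAGES"     (worker nodes)
```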

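2. Run the initialization command on the master. The --pod-network-cidr value must match the Network value in the flannel manifest used in step 4 (10.10.0.0/16 here):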

[root@k8s-master ~]# kubeadm init --kubernetes-version=v1.13.2 --apiserver-advertise-address 192.168.10.5 --pod-network-cidr=10.10.0.0/16
[init] Using Kubernetes version: v1.13.2
[preflight] Running pre-flight checks
    [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Activating the kubelet service
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.10.5]
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.10.5 127.0.0.1 ::1]
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.10.5 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 20.510036 seconds
[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master" as an annotation
[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: lwduhf.b20k2ahvgs9akc3o
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

  kubeadm join 192.168.10.5:6443 --token lwduhf.b20k2ahvgs9akc3o --discovery-token-ca-cert-hash sha256:19b470c59e24b46b86372e7b798f0c0fd058169a78b904d841fe8d54115c5e16

Run this command on each worker node to join it to the cluster (see section III). The worker nodes also need the kube-proxy, pause and flannel images:

kubeadm join 192.168.10.5:6443 --token lwduhf.b20k2ahvgs9akc3o --discovery-token-ca-cert-hash sha256:19b470c59e24b46b86372e7b798f0c0fd058169a78b904d841fe8d54115c5e16

3. Configure kubectl

On the master, run the following as root to configure kubectl:

[root@k8s-master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile
[root@k8s-master ~]# source /etc/profile
[root@k8s-master ~]# echo $KUBECONFIG
/etc/kubernetes/admin.conf

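4. Install the pod network

A pod network is required for pods to communicate with each other. Kubernetes supports many network solutions; here we stick with the classic flannel.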

4.1 First, set the kernel parameter (on every node):

[root@k8s-master ~]# sysctl net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-iptables = 1
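The sysctl command above changes only the running kernel; the setting is lost on reboot. A sketch that writes it to a file under /etc/sysctl.d so it persists; the file name k8s.conf and the helper name `write_k8s_sysctl` are assumptions. The net.bridge.* keys exist only once the br_netfilter module is loaded:

```shell
# write_k8s_sysctl FILE: persist the setting that makes bridged pod traffic
# pass through iptables, so it is reapplied at boot.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
EOF
}
# Usage on every node:
#   modprobe br_netfilter
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl --system
```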

4.2 Apply the flannel manifest:

[root@k8s-master script]# kubectl apply -f kube-flannel.yaml

[root@k8s-master script]# cat kube-flannel.yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.10.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: ppc64le
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
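The Network value in net-conf.json (10.10.0.0/16 above) has to equal the --pod-network-cidr given to kubeadm init, and the upstream flannel manifest defaults to 10.244.0.0/16, so a copied manifest is easy to get wrong. A sketch of a pre-apply check, assuming the `"Network": "<cidr>"` line layout shown above; `flannel_network` and `check_pod_cidr` are hypothetical helpers:

```shell
# flannel_network FILE: print the "Network" value from the embedded net-conf.json.
flannel_network() {
    sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_pod_cidr FILE CIDR: succeed only if flannel's Network equals CIDR.
check_pod_cidr() {
    local got
    got=$(flannel_network "$1")
    if [ "$got" != "$2" ]; then
        echo "flannel Network is '$got' but --pod-network-cidr is '$2'" >&2
        return 1
    fi
}
# Usage: check_pod_cidr kube-flannel.yaml 10.10.0.0/16 && kubectl apply -f kube-flannel.yaml
```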

4.3 List the running pods and check that the CoreDNS pods are now running (they stay Pending until a pod network is installed):

[root@k8s-master script]# kubectl get pods --all-namespaces -o wide
NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE     IP             NODE         NOMINATED NODE   READINESS GATES
kube-system   coredns-86c58d9df4-dwbnz             1/1     Running   0          28m     10.10.0.2      k8s-master   <none>           <none>
kube-system   coredns-86c58d9df4-xqjz2             1/1     Running   0          28m     10.10.0.3      k8s-master   <none>           <none>
kube-system   etcd-k8s-master                      1/1     Running   0          27m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-apiserver-k8s-master            1/1     Running   0          27m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-controller-manager-k8s-master   1/1     Running   0          27m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-flannel-ds-amd64-dvggv          1/1     Running   0          4m17s   192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-proxy-sj6sm                     1/1     Running   0          28m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-scheduler-k8s-master            1/1     Running   0          27m     192.168.10.5   k8s-master   <none>           <none>

Check the status of the master node:

[root@k8s-master script]# kubectl get node
NAME         STATUS   ROLES    AGE   VERSION
k8s-master   Ready    master   28m   v1.13.2
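Rather than scanning the pod list by eye, the check can be scripted. A minimal sketch assuming the column layout shown above for kubectl get pods --all-namespaces (NAMESPACE, NAME, READY, STATUS, ...); `not_running` is a hypothetical helper. kubectl wait --for=condition=Ready is an alternative when you would rather block until pods become ready:

```shell
# not_running: read `kubectl get pods --all-namespaces` output on stdin and print
# NAMESPACE/NAME of every pod that is not Running or has unready containers.
not_running() {
    awk 'NR > 1 {
        split($3, ready, "/")   # READY column, e.g. 1/1
        if ($4 != "Running" || ready[1] != ready[2])
            print $1 "/" $2
    }'
}
# Usage: kubectl get pods --all-namespaces | not_running
#        (no output means every pod is Running with all containers ready)
```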

III. Join the worker nodes to the cluster

1. Images required on the worker nodes

[root@k8s-node-1 ~]# cat docker_pull.sh
docker pull mirrorgooglecontainers/kube-proxy:v1.13.2
docker pull mirrorgooglecontainers/pause:3.1
docker pull docker.io/dockerofwj/flannel

docker tag mirrorgooglecontainers/kube-proxy:v1.13.2 k8s.gcr.io/kube-proxy:v1.13.2
docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
docker tag docker.io/dockerofwj/flannel quay.io/coreos/flannel:v0.10.0-amd64

docker rmi mirrorgooglecontainers/kube-proxy:v1.13.2
docker rmi mirrorgooglecontainers/pause:3.1
docker rmi docker.io/dockerofwj/flannel

2. On each of the two worker nodes, run the following command to join the cluster that is now ready on the master:

[root@k8s-node-2 ~]# kubeadm join 192.168.10.5:6443 --token lwduhf.b20k2ahvgs9akc3o --discovery-token-ca-cert-hash sha256:19b470c59e24b46b86372e7b798f0c0fd058169a78b904d841fe8d54115c5e16
[preflight] Running pre-flight checks
    [WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
    [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
[discovery] Trying to connect to API Server "192.168.10.5:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://192.168.10.5:6443"
[discovery] Requesting info from "https://192.168.10.5:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.10.5:6443"
[discovery] Successfully established connection with API Server "192.168.10.5:6443"
[join] Reading configuration from the cluster...
[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node-2" as an annotation

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

List the bootstrap tokens (on the master):

[root@k8s-master script]# kubeadm token list
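The bootstrap token printed by kubeadm init is valid for 24 hours by default, so nodes added later need a new one; on the master, kubeadm token create --print-join-command prints a fresh, complete join command. The --discovery-token-ca-cert-hash value is the SHA-256 of the cluster CA's DER-encoded public key. The sketch below wraps the openssl pipeline from the kubeadm documentation; `ca_cert_hash` is a hypothetical helper name:

```shell
# ca_cert_hash CERT: print the hex SHA-256 of CERT's public key in DER form,
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
# Usage on the master (should print the hash from the join command above):
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```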

Check the status of the newly joined nodes:

[root@k8s-master script]# kubectl get nodes
NAME         STATUS   ROLES    AGE    VERSION
k8s-master   Ready    master   40m    v1.13.2
k8s-node-1   Ready    <none>   15s    v1.13.2
k8s-node-2   Ready    <none>   116s   v1.13.2

Check the pod status:

[root@k8s-master script]# kubectl get pods --all-namespaces -o wide
NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE     IP             NODE         NOMINATED NODE   READINESS GATES
kube-system   coredns-86c58d9df4-dwbnz             1/1     Running   0          43m     10.10.0.2      k8s-master   <none>           <none>
kube-system   coredns-86c58d9df4-xqjz2             1/1     Running   0          43m     10.10.0.3      k8s-master   <none>           <none>
kube-system   etcd-k8s-master                      1/1     Running   0          42m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-apiserver-k8s-master            1/1     Running   0          42m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-controller-manager-k8s-master   1/1     Running   0          42m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-flannel-ds-amd64-dvggv          1/1     Running   0          19m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-flannel-ds-amd64-gxmnk          1/1     Running   0          3m18s   192.168.10.8   k8s-node-1   <none>           <none>
kube-system   kube-flannel-ds-amd64-rbpmq          1/1     Running   0          4m59s   192.168.10.9   k8s-node-2   <none>           <none>
kube-system   kube-proxy-rblt6                     1/1     Running   0          3m18s   192.168.10.8   k8s-node-1   <none>           <none>
kube-system   kube-proxy-sj6sm                     1/1     Running   0          43m     192.168.10.5   k8s-master   <none>           <none>
kube-system   kube-proxy-tx2p9                     1/1     Running   0          4m59s   192.168.10.9   k8s-node-2   <none>           <none>
kube-system   kube-scheduler-k8s-master            1/1     Running   0          42m     192.168.10.5   k8s-master   <none>           <none>

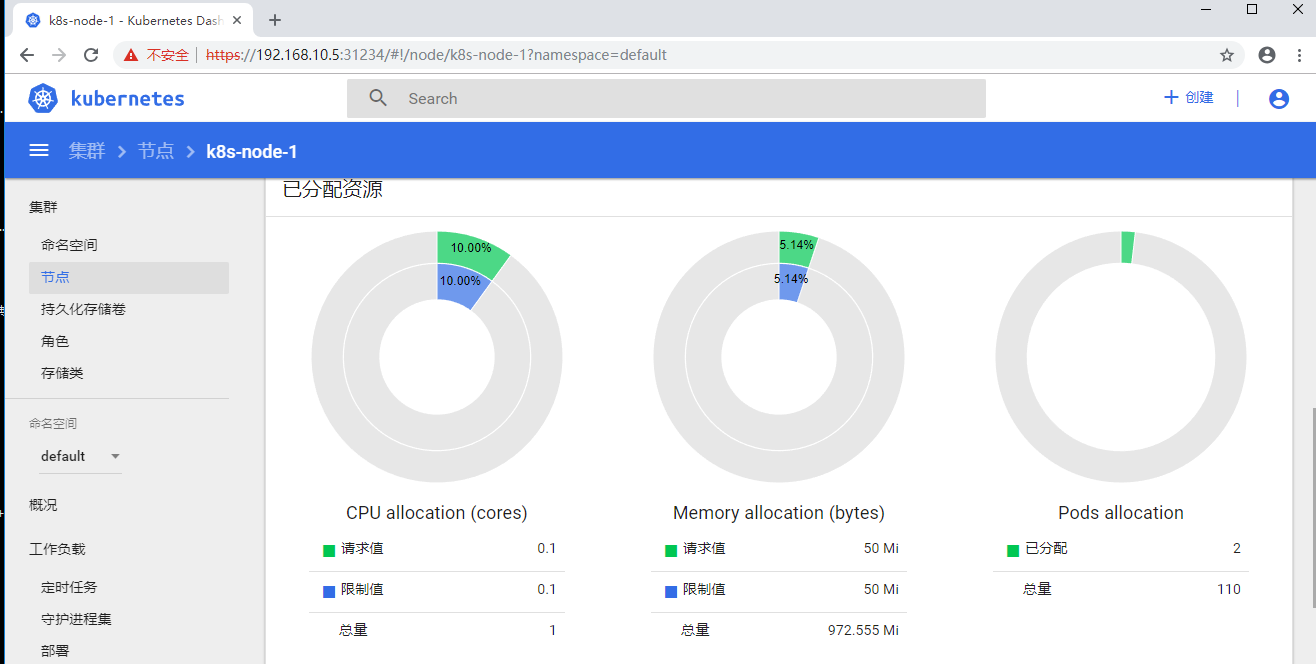

IV. Install the dashboard

Kubernetes Dashboard is a project that adds a general-purpose, web-based monitoring and operations UI to Kubernetes.

1. Pull the Kubernetes Dashboard image (on the worker nodes):

[root@k8s-node-2 ~]# docker pull docker.io/mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.8.3

[root@k8s-node-1 ~]# docker images
REPOSITORY                                                    TAG             IMAGE ID       CREATED                  SIZE
k8s.gcr.io/kube-proxy                                         v1.13.2         01cfa56edcfc   Less than a second ago   80.3 MB
quay.io/coreos/flannel                                        v0.10.0-amd64   17ccf3fc30e3   4 months ago             44.6 MB
docker.io/mirrorgooglecontainers/kubernetes-dashboard-amd64   v1.8.3          0c60bcf89900   11 months ago            102 MB
k8s.gcr.io/pause                                              3.1             da86e6ba6ca1   12 months ago            742 kB

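Create the certificate directory on every node. The dashboard manifest mounts it as a hostPath volume of type Directory, so the pod cannot start on a node where the directory is missing:

[root@k8s-node-1 ~]# mkdir -p /var/share/certs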

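2. Install the dashboard (apply it on the master). In this manifest the certificate volume is the hostPath /var/share/certs, login tokens expire after 5400 seconds (--token-ttl), and the service is exposed as NodePort 31234: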

[root@k8s-master script]# cat kubernetes-dashboard.yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: docker.io/mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.8.3
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          - --token-ttl=5400
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        hostPath:
          path: /var/share/certs
          type: Directory
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31234
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

[root@k8s-master script]# kubectl create -f kubernetes-dashboard.yaml
secret/kubernetes-dashboard-certs created
serviceaccount/kubernetes-dashboard created
role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created
deployment.apps/kubernetes-dashboard created
service/kubernetes-dashboard created

Check the pod status:

[root@k8s-master script]# kubectl get pods --namespace=kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE     IP             NODE         NOMINATED NODE   READINESS GATES
coredns-86c58d9df4-dwbnz                1/1     Running   0          89m     10.10.0.2      k8s-master   <none>           <none>
coredns-86c58d9df4-xqjz2                1/1     Running   0          89m     10.10.0.3      k8s-master   <none>           <none>
etcd-k8s-master                         1/1     Running   0          88m     192.168.10.5   k8s-master   <none>           <none>
kube-apiserver-k8s-master               1/1     Running   0          88m     192.168.10.5   k8s-master   <none>           <none>
kube-controller-manager-k8s-master      1/1     Running   0          88m     192.168.10.5   k8s-master   <none>           <none>
kube-flannel-ds-amd64-dvggv             1/1     Running   0          65m     192.168.10.5   k8s-master   <none>           <none>
kube-flannel-ds-amd64-gxmnk             1/1     Running   2          49m     192.168.10.8   k8s-node-1   <none>           <none>
kube-flannel-ds-amd64-rbpmq             1/1     Running   0          50m     192.168.10.9   k8s-node-2   <none>           <none>
kube-proxy-rblt6                        1/1     Running   2          49m     192.168.10.8   k8s-node-1   <none>           <none>
kube-proxy-sj6sm                        1/1     Running   0          89m     192.168.10.5   k8s-master   <none>           <none>
kube-proxy-tx2p9                        1/1     Running   0          50m     192.168.10.9   k8s-node-2   <none>           <none>
kube-scheduler-k8s-master               1/1     Running   0          88m     192.168.10.5   k8s-master   <none>           <none>
kubernetes-dashboard-7d9958fcfd-b6z9k   1/1     Running   0          2m43s   10.10.1.2      k8s-node-2   <none>           <none>

Check the NodePort assigned to kubernetes-dashboard:

[root@k8s-master script]# kubectl get service --namespace=kube-system -o wide
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)         AGE     SELECTOR
kube-dns               ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP   90m     k8s-app=kube-dns
kubernetes-dashboard   NodePort    10.105.177.107   <none>        443:31234/TCP   3m27s   k8s-app=kubernetes-dashboard

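3. Generate a private key and a certificate signing request:

openssl genrsa -des3 -passout pass:x -out dashboard.pass.key 2048
openssl rsa -passin pass:x -in dashboard.pass.key -out dashboard.key
rm dashboard.pass.key
openssl req -new -key dashboard.key -out dashboard.csr

When the last command prompts for certificate fields, press Enter at every prompt to accept the defaults.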

4. Generate the SSL certificate:

openssl x509 -req -sha256 -days 365 -in dashboard.csr -signkey dashboard.key -out dashboard.crt
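The key, CSR and self-signing steps above can also be done non-interactively in a single openssl req -x509 call, which avoids the prompts. A sketch; the helper name `make_dashboard_cert` and the default subject CN kubernetes-dashboard are arbitrary assumptions, and the key is written unencrypted, just like dashboard.key above:

```shell
# make_dashboard_cert DIR [CN]: write dashboard.key (unencrypted RSA 2048) and a
# self-signed SHA-256 dashboard.crt valid for 365 days into DIR, with no prompts.
make_dashboard_cert() {
    local dir="$1" cn="${2:-kubernetes-dashboard}"   # default CN is an assumption
    openssl req -x509 -nodes -newkey rsa:2048 -sha256 -days 365 \
        -subj "/CN=$cn" \
        -keyout "$dir/dashboard.key" -out "$dir/dashboard.crt"
}
# Usage: make_dashboard_cert .
```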

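Then copy dashboard.key and dashboard.crt into /var/share/certs on every node that may run the dashboard pod (this assumes root SSH access from the master to each node):

[root@k8s-master script]# for h in k8s-master k8s-node-1 k8s-node-2; do ssh $h mkdir -p /var/share/certs && scp dashboard.key dashboard.crt $h:/var/share/certs/; done

This directory is the hostPath volume that kubernetes-dashboard.yaml mounts into the dashboard container at /certs.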

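5. Apply the dashboard-user-role.yaml configuration. It creates an admin service account in kube-system and binds it to the cluster-admin ClusterRole, so its token has full control of the cluster: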

[root@k8s-master script]# cat dashboard-user-role.yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

[root@k8s-master script]# kubectl create -f dashboard-user-role.yaml
clusterrolebinding.rbac.authorization.k8s.io/admin created
serviceaccount/admin created

Retrieve the admin token:

[root@k8s-master script]# kubectl describe secret/$(kubectl get secret -nkube-system |grep admin|awk '{print $1}') -nkube-system
Name:         admin-token-kcz8s
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin
              kubernetes.io/service-account.uid: b72b94d9-0b9e-11e9-819f-000c2953a750

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi1rY3o4cyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImI3MmI5NGQ5LTBiOWUtMTFlOS04MTlmLTAwMGMyOTUzYTc1MCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbiJ9.LwNkLys00I3YLH2gXzvCerFN5C2nwnw-w1I6VHNDjByLucG6BXdiKTKGwIJGfznsEhp54SJ6_pgn5WGjhq-hL5Kp9fSQU4HT1DFS5VIjnwv3yBe4JfuBLEpF0AiSyefLgX5oRwiAogrXYMLNlYD4aaPThgfLjRuexUUQJKBaoVs5MikI24kgHv1wA0wFFqZUTxM6KGFhc7JmvyVyLLjDu8SQ2AXCOMefOoV-GKQ3ZVwNsVtricjnZBPX__5AbLBbdXS0KE1R32uSM_M-BgV5W4X0WNQ_vollVRRevX0i-JvXOJunHa1eB6uZXM_X_t5xv_DA8-QAt644wjHejj2LMQ
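Copying the long token out of the describe output by hand is error-prone, and grep admin can match more than one secret. A sketch that prints only the token and anchors the secret name on the admin-token- prefix seen above; `sa_token` is a hypothetical helper:

```shell
# sa_token: read `kubectl describe secret ...` output on stdin and print only
# the value of the "token:" field, ready to paste into the dashboard login page.
sa_token() {
    awk '$1 == "token:" { print $2 }'
}
# Usage on the master:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/^admin-token-/ {print $1}')" | sa_token
```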

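6. Log in to the web UI from a browser:

Copy the token value above. The dashboard service is exposed on NodePort 31234 of every node, so open https://192.168.10.5:31234 (or the IP of any other node), choose Token on the login page and paste it in.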