[zhangbin@hadoop102 hadoop-3.3.0]$ sbin/start-dfs.sh

Error message:

hadoop102: ERROR: Cannot set priority of namenode process 35346

Solution:

Change to the Hadoop log directory:

[zhangbin@hadoop102 hadoop-3.3.0]$ cd logs/

View the NameNode log:

[zhangbin@hadoop102 logs]$ cat hadoop-zhangbin-namenode-hadoop102.log
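The NameNode log can be long, so printing it in full with cat may bury the relevant lines. As a minimal sketch (the function name `recent_errors` and the default of 200 lines are illustrative assumptions, not part of Hadoop), the tail of the log can be filtered for exception and error lines:

```shell
# Hypothetical helper: show lines mentioning "Exception" or "ERROR"
# from the last N lines (default 200) of a log file.
recent_errors() {
  log_file=$1
  lines=${2:-200}
  tail -n "$lines" "$log_file" | grep -E 'Exception|ERROR'
}

# Usage, from the logs directory:
#   recent_errors hadoop-zhangbin-namenode-hadoop102.log
```

This only narrows the search. The full stack trace around a match is still worth reading in the log itself.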

The error looks roughly like this:

2021-04-05 10:21:36,656 INFO org.apache.hadoop.http.HttpServer2: HttpServer.start() threw a non Bind IOException

java.net.BindException: Port in use: hadoop102:9870

at org.apache.hadoop.http.HttpServer2.constructBindException(HttpServer2.java:1292)

at org.apache.hadoop.http.HttpServer2.bindForSinglePort(HttpServer2.java:1314)

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:1373)

at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:1223)

at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:170)

at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:946)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:757)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1014)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:987)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1756)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1821)

Caused by: java.io.IOException: Failed to bind to hadoop102/192.168.10.102:9870

at org.eclipse.jetty.server.ServerConnector.openAcceptChannel(ServerConnector.java:346)

at org.eclipse.jetty.server.ServerConnector.open(ServerConnector.java:307)

at org.apache.hadoop.http.HttpServer2.bindListener(HttpServer2.java:1279)

at org.apache.hadoop.http.HttpServer2.bindForSinglePort(HttpServer2.java:1310)

... 9 more

Caused by: java.net.BindException: 地址已在使用 (Address already in use)

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.Net.bind(Net.java:425)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at org.eclipse.jetty.server.ServerConnector.openAcceptChannel(ServerConnector.java:342)

... 12 more

Solution: port 9870 is already bound by another process. Find that process and kill it:

[zhangbin@hadoop102 logs]$ lsof -i:9870 -P

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

java 22003 zhangbin 289u IPv4 89125 0t0 TCP hadoop102:9870 (LISTEN)

[zhangbin@hadoop102 logs]$ kill -9 22003

Then start HDFS again.
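The lookup-and-kill steps above can be sketched as two small shell functions. The names `listening_pids` and `stop_pid` are hypothetical helpers, not Hadoop or lsof features. Unlike the `kill -9` used above, this sketch sends SIGTERM first and only falls back to SIGKILL if the process is still alive after a timeout (10 seconds by default, an arbitrary choice).

```shell
# Hypothetical helper: read `lsof -i:PORT -P` output on stdin and print
# the PID of every process that is in the LISTEN state.
listening_pids() {
  awk 'NR > 1 && $NF == "(LISTEN)" { print $2 }' | sort -u
}

# Hypothetical helper: ask a process to exit with SIGTERM, then escalate
# to SIGKILL only if it is still running after the given number of seconds.
stop_pid() {
  pid=$1
  tries=${2:-10}
  # If the signal cannot be delivered, the process is already gone
  # (or not ours to kill); nothing more to do.
  kill "$pid" 2>/dev/null || return 0
  while [ "$tries" -gt 0 ]; do
    kill -0 "$pid" 2>/dev/null || return 0
    sleep 1
    tries=$((tries - 1))
  done
  kill -9 "$pid" 2>/dev/null || true
}

# Usage:
#   for pid in $(lsof -i:9870 -P | listening_pids); do stop_pid "$pid"; done
```

Filtering on `(LISTEN)` avoids killing processes that merely hold a client connection to the port.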

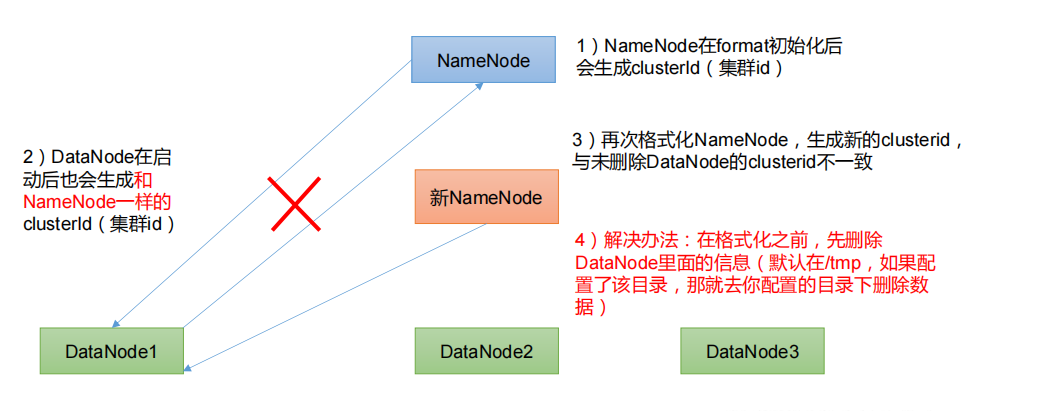

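Solution:

Stop all services on every Hadoop node.

Delete the DataNode data on every node (server). The default location is under /tmp; I configured mine in the data directory under the Hadoop root. Also clear the logs directory under the Hadoop root. Delete all files inside both directories.

Reformat Hadoop (the NameNode) with this command: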

hdfs namenode -format

Then start the Hadoop services again.
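The cleanup step above can be sketched as a shell function. This assumes the layout described here, with `data` and `logs` directly under the Hadoop root; the function name `clean_hadoop_dirs` is hypothetical, and the path passed to it must be adapted to your installation. It empties both directories but leaves the directories themselves, and everything else under the Hadoop root, in place.

```shell
# Hypothetical helper: delete everything inside the data and logs
# directories under the given Hadoop root, keeping the directories.
clean_hadoop_dirs() {
  hadoop_home=${1:?usage: clean_hadoop_dirs HADOOP_HOME}
  if [ ! -d "$hadoop_home" ]; then
    echo "not a directory: $hadoop_home" >&2
    return 1
  fi
  for sub in data logs; do
    dir="$hadoop_home/$sub"
    [ -d "$dir" ] || continue
    # -mindepth 1 spares the directory itself; -delete removes its contents.
    find "$dir" -mindepth 1 -delete
  done
}

# Usage, on every node after all services are stopped
# (the path is an assumption; use your own Hadoop root):
#   clean_hadoop_dirs "$HADOOP_HOME"
# Then, on the NameNode host only:
#   hdfs namenode -format
#   sbin/start-dfs.sh
```

Since this removes the stored HDFS blocks and metadata, it only suits a cluster whose data can be discarded.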