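Before You Start

- Learn to read and understand Python-based Mininet scripts.

- Core idea: use "ovs-vsctl" to create the bridge and attach its ports, and "ovs-ofctl" to install flow entries on the OvS switch.

Lab Overview

In an SDN environment, a controller determines a switch's forwarding behavior by installing flow entries on it. This lab uses a Python-based Mininet script that calls "ovs-vsctl" and "ovs-ofctl" to control Open vSwitch directly, without a controller. Task 1 starts from default flood rules and adds higher-priority rules that forward predefined IP packets. Task 2 uses several switches and sends packets with different TOS values along different paths to the destination, then verifies connectivity between the hosts and the time taken to reach the destination.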

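Lab Task 1

Start from the default flood rules on the switch, then add higher-priority rules that forward predefined IP packets directly to the right port.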
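To make the priority rule concrete, here is a minimal Python sketch of how a flow table picks an entry: among all entries whose match fields agree with the packet, the one with the highest priority wins. The `FLOWS` list, the dictionary-based packets, and the `lookup` helper are illustrative assumptions for this lab, not OvS code.

```python
# Toy model of priority-based flow lookup (illustrative only, not OvS code).
# Each flow is (priority, match dict, action string).
FLOWS = [
    (1,  {"in_port": 1}, "flood"),
    (1,  {"in_port": 2}, "flood"),
    (1,  {"in_port": 3}, "flood"),
    (10, {"eth_type": "ip", "nw_dst": "192.168.123.1"}, "output:1"),
    (10, {"eth_type": "ip", "nw_dst": "192.168.123.2"}, "output:2"),
    (10, {"eth_type": "ip", "nw_dst": "192.168.123.3"}, "output:3"),
]


def lookup(packet, flows=FLOWS):
    """Return the action of the highest-priority flow matching the packet."""
    candidates = [
        (priority, action)
        for priority, match, action in flows
        if all(packet.get(field) == value for field, value in match.items())
    ]
    if not candidates:
        return None  # table miss in this toy model
    return max(candidates, key=lambda c: c[0])[1]


# An ARP request from h0 only matches the low-priority flood rule.
arp = {"in_port": 1, "eth_type": "arp"}
# An ICMP echo from h0 to h1 matches a flood rule and an IP rule.
ping = {"in_port": 1, "eth_type": "ip", "nw_dst": "192.168.123.2"}

print(lookup(arp))   # flood
print(lookup(ping))  # output:2
```

The ARP request falls through to the flood rule, while the IP packet is caught by the priority-10 rule even though a flood rule also matches it.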

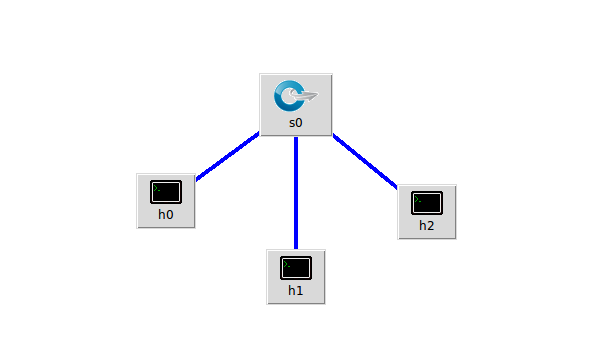

- Topology for Task 1

- Create a script and edit it to contain the following:

```python
#!/usr/bin/python

from mininet.net import Mininet
from mininet.node import Node
from mininet.link import Link
from mininet.log import setLogLevel, info


def myNet():
    "Create network from scratch using Open vSwitch."

    info( "*** Creating nodes\n" )  # create the switch and the hosts
    switch0 = Node( 's0', inNamespace=False )
    h0 = Node( 'h0' )
    h1 = Node( 'h1' )
    h2 = Node( 'h2' )

    info( "*** Creating links\n" )  # connect every host to the switch
    Link( h0, switch0)
    Link( h1, switch0)
    Link( h2, switch0)

    info( "*** Configuring hosts\n" )
    h0.setIP( '192.168.123.1/24' )
    h1.setIP( '192.168.123.2/24' )
    h2.setIP( '192.168.123.3/24' )

    info( "*** Starting network using Open vSwitch\n" )
    switch0.cmd( 'ovs-vsctl del-br dp0' )
    switch0.cmd( 'ovs-vsctl add-br dp0' )
    # Attach each link interface created above to the bridge as a port.
    for intf in switch0.intfs.values():
        print( intf )
        print( switch0.cmd( 'ovs-vsctl add-port dp0 %s' % intf ) )

    # Note: controller and switch are in root namespace, and we
    # can connect via loopback interface
    #switch0.cmd( 'ovs-vsctl set-controller dp0 tcp:127.0.0.1:6633' )

    print( switch0.cmd(r'ovs-vsctl show') )

    # Install the flow entries: low-priority flood rules first,
    # then higher-priority rules keyed on the destination IP.
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=1,in_port=1,actions=flood' ) )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=1,in_port=2,actions=flood' ) )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=1,in_port=3,actions=flood' ) )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.1,actions=output:1' ) )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.2,actions=output:2' ) )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.3,actions=output:3' ) )

    #switch0.cmd('tcpdump -i s0-eth0 -U -w aaa &')
    #h0.cmd('tcpdump -i h0-eth0 -U -w aaa &')

    info( "*** Running test\n" )
    h0.cmdPrint( 'ping -c 3 ' + h1.IP() )
    h0.cmdPrint( 'ping -c 3 ' + h2.IP() )

    #print( switch0.cmd( 'ovs-ofctl show dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-tables dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-ports dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-flows dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-aggregate dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl queue-stats dp0' ) )

    info( "*** Stopping network\n" )
    switch0.cmd( 'ovs-vsctl del-br dp0' )
    switch0.deleteIntfs()
    info( '\n' )


if __name__ == '__main__':
    setLogLevel( 'info' )
    info( '*** Scratch network demo (kernel datapath)\n' )
    Mininet.init()
    myNet()
```

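Flow entries on s0:

- Priority 10:

  - IP packets destined for 192.168.123.1 (h0) are sent out port 1.

  - IP packets destined for 192.168.123.2 (h1) are sent out port 2.

  - IP packets destined for 192.168.123.3 (h2) are sent out port 3.

- Priority 1:

  - Packets arriving on port 1 are flooded.

  - Packets arriving on port 2 are flooded.

  - Packets arriving on port 3 are flooded.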
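The six entries follow a regular pattern, so the "ovs-ofctl" command strings could also be generated instead of written out by hand. The sketch below is one way to do that. `build_flow_cmds` and the `host_ports` mapping are hypothetical names introduced here, and the port numbers assume that OvS numbers the ports 1 to 3 in the order the interfaces were added.

```python
def build_flow_cmds(bridge, host_ports):
    """Build ovs-ofctl add-flow commands for a single-switch topology.

    host_ports maps a host IP address to the switch port it is attached to.
    """
    cmds = []
    # Low-priority default: flood anything arriving on a known port.
    for port in sorted(host_ports.values()):
        cmds.append(
            "ovs-ofctl add-flow %s idle_timeout=0,priority=1,in_port=%d,actions=flood"
            % (bridge, port)
        )
    # Higher-priority rules: send IP packets straight to the destination port.
    for ip, port in sorted(host_ports.items(), key=lambda item: item[1]):
        cmds.append(
            "ovs-ofctl add-flow %s idle_timeout=0,priority=10,ip,nw_dst=%s,actions=output:%d"
            % (bridge, ip, port)
        )
    return cmds


# Assumed port assignment: h0 on port 1, h1 on port 2, h2 on port 3.
host_ports = {
    "192.168.123.1": 1,
    "192.168.123.2": 2,
    "192.168.123.3": 3,
}
for cmd in build_flow_cmds("dp0", host_ports):
    print(cmd)
```

Inside the lab script, each generated string could be passed to `switch0.cmd(...)` in place of the hand-written calls.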

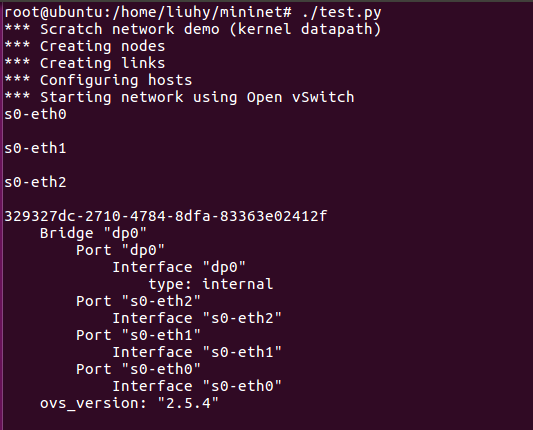

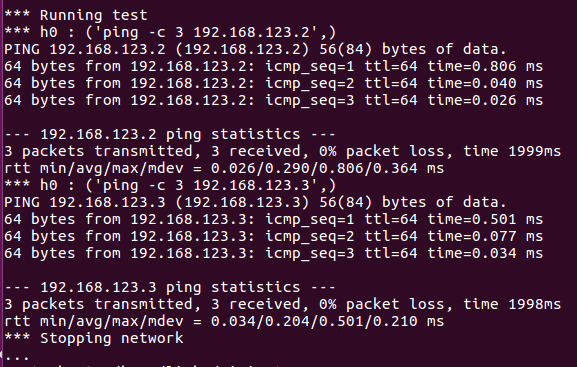

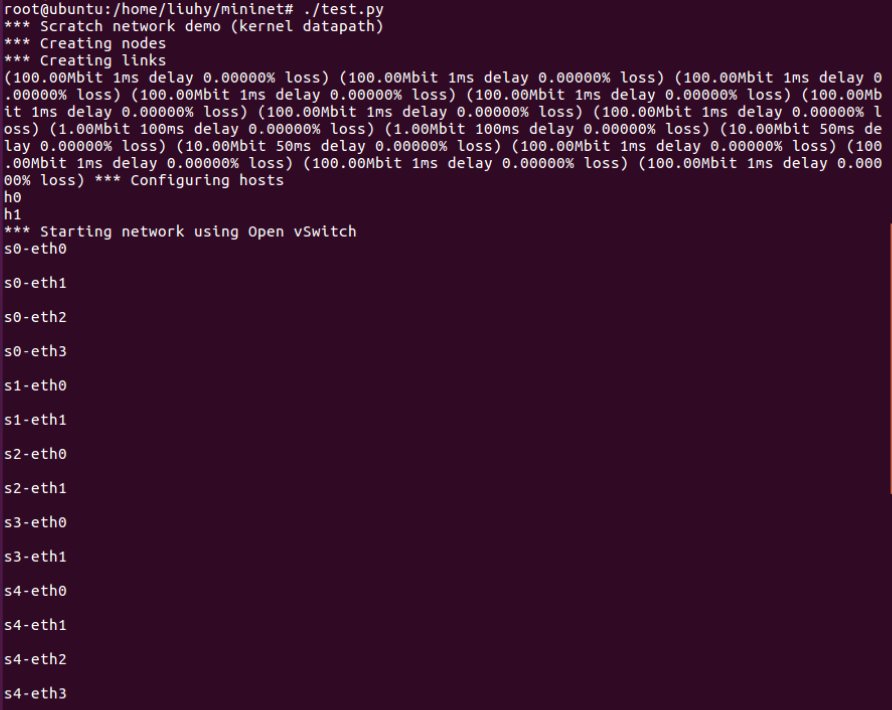

- Make the script executable, then run it.

- The result is as follows:

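Lab Task 2

With several switches in the network, packets carrying different TOS values take different paths to the destination. Verify connectivity between the hosts and the time taken to reach the destination.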

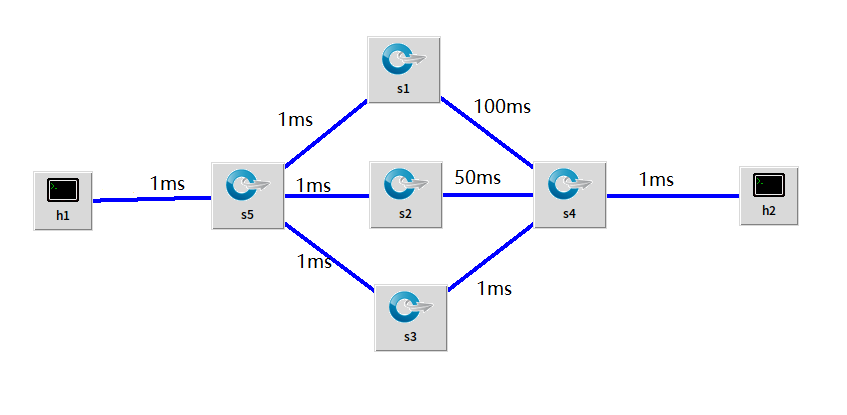

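- Topology for Task 2

TOS values:

RFC 791 defines the first three bits of the TOS byte as IP Precedence, which gives eight priority levels. The field can be used for traffic classification, and a larger value means a higher priority. Like CoS, IP Precedence can mark eight classes of service (0 to 7). The values are typically applied as follows:

- 7 Reserved

- 6 Reserved

- 5 Voice

- 4 Video Conference

- 3 Call Signaling

- 2 High-priority Data

- 1 Medium-priority Data

- 0 Best-effort Data

Eight priority levels are far too few for real deployments, so RFC 2474 redefined the TOS byte: the first six bits become the DSCP (Differentiated Services Code Point), and the last two bits are left reserved (RFC 3168 later assigned them to ECN).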
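As a quick check of the bit layout, the sketch below splits a TOS byte into the RFC 791 precedence (top three bits) and the RFC 2474 DSCP (top six bits). The function name `decode_tos` is made up for this example. The three sample values are the ones this lab passes to `ping -Q`.

```python
def decode_tos(tos):
    """Split an 8-bit TOS value into (precedence, dscp, low_two_bits)."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("TOS must fit in one byte")
    precedence = tos >> 5      # top 3 bits (RFC 791 IP Precedence)
    dscp = tos >> 2            # top 6 bits (RFC 2474 DSCP)
    low_two_bits = tos & 0x3   # the remaining 2 bits
    return precedence, dscp, low_two_bits


for tos in (0x10, 0x20, 0x30):
    precedence, dscp, low = decode_tos(tos)
    print("TOS 0x%02x -> precedence %d, DSCP %d, low bits %d"
          % (tos, precedence, dscp, low))
```

Note that 0x20 and 0x30 share precedence 1 but have different DSCP values (8 and 12), which is one reason the three-bit field alone is too coarse.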

- Create a script and edit it to contain the following:

```python
#!/usr/bin/python

from mininet.net import Mininet
from mininet.node import Node
from mininet.link import TCLink
from mininet.log import setLogLevel, info


def myNet():
    "Create network from scratch using Open vSwitch."

    info( "*** Creating nodes\n" )
    switch0 = Node( 's0', inNamespace=False )
    switch1 = Node( 's1', inNamespace=False )
    switch2 = Node( 's2', inNamespace=False )
    switch3 = Node( 's3', inNamespace=False )
    switch4 = Node( 's4', inNamespace=False )
    h0 = Node( 'h0' )
    h1 = Node( 'h1' )

    info( "*** Creating links\n" )
    # Per-link bandwidth (Mbit/s), delay and loss; the three s1/s2/s3 -> s4
    # links differ so that each path has a distinct latency.
    linkopts0=dict(bw=100, delay='1ms', loss=0)
    linkopts1=dict(bw=1, delay='100ms', loss=0)
    linkopts2=dict(bw=10, delay='50ms', loss=0)
    linkopts3=dict(bw=100, delay='1ms', loss=0)
    TCLink( h0, switch0, **linkopts0)
    TCLink( switch0, switch1, **linkopts0)
    TCLink( switch0, switch2, **linkopts0)
    TCLink( switch0, switch3, **linkopts0)
    TCLink( switch1, switch4,**linkopts1)
    TCLink( switch2, switch4,**linkopts2)
    TCLink( switch3, switch4,**linkopts3)
    TCLink( h1, switch4, **linkopts0)

    info( "*** Configuring hosts\n" )
    h0.setIP( '192.168.123.1/24' )
    h1.setIP( '192.168.123.2/24' )
    info( str( h0 ) + '\n' )
    info( str( h1 ) + '\n' )

    info( "*** Starting network using Open vSwitch\n" )
    switch0.cmd( 'ovs-vsctl del-br dp0' )
    switch0.cmd( 'ovs-vsctl add-br dp0' )
    switch1.cmd( 'ovs-vsctl del-br dp1' )
    switch1.cmd( 'ovs-vsctl add-br dp1' )
    switch2.cmd( 'ovs-vsctl del-br dp2' )
    switch2.cmd( 'ovs-vsctl add-br dp2' )
    switch3.cmd( 'ovs-vsctl del-br dp3' )
    switch3.cmd( 'ovs-vsctl add-br dp3' )
    switch4.cmd( 'ovs-vsctl del-br dp4' )
    switch4.cmd( 'ovs-vsctl add-br dp4' )

    # Attach every interface of each switch to its bridge.
    for intf in switch0.intfs.values():
        print( intf )
        print( switch0.cmd( 'ovs-vsctl add-port dp0 %s' % intf ) )
    for intf in switch1.intfs.values():
        print( intf )
        print( switch1.cmd( 'ovs-vsctl add-port dp1 %s' % intf ) )
    for intf in switch2.intfs.values():
        print( intf )
        print( switch2.cmd( 'ovs-vsctl add-port dp2 %s' % intf ) )
    for intf in switch3.intfs.values():
        print( intf )
        print( switch3.cmd( 'ovs-vsctl add-port dp3 %s' % intf ) )
    for intf in switch4.intfs.values():
        print( intf )
        print( switch4.cmd( 'ovs-vsctl add-port dp4 %s' % intf ) )

    # s1..s3 simply relay between their two ports.
    # Note: the flood entry below has the same match and priority as the
    # entry that follows it, so the second add-flow replaces it.
    print( switch1.cmd(r'ovs-ofctl add-flow dp1 idle_timeout=0,priority=1,in_port=1,actions=flood' ) )
    print( switch1.cmd(r'ovs-ofctl add-flow dp1 idle_timeout=0,priority=1,in_port=1,actions=output:2' ) )
    print( switch1.cmd(r'ovs-ofctl add-flow dp1 idle_timeout=0,priority=1,in_port=2,actions=output:1' ) )
    print( switch2.cmd(r'ovs-ofctl add-flow dp2 idle_timeout=0,priority=1,in_port=1,actions=output:2' ) )
    print( switch2.cmd(r'ovs-ofctl add-flow dp2 idle_timeout=0,priority=1,in_port=2,actions=output:1' ) )
    print( switch3.cmd(r'ovs-ofctl add-flow dp3 idle_timeout=0,priority=1,in_port=1,actions=output:2' ) )
    print( switch3.cmd(r'ovs-ofctl add-flow dp3 idle_timeout=0,priority=1,in_port=2,actions=output:1' ) )

    # s4 delivers everything from s1/s2/s3 to h1 (port 4) and sends
    # traffic from h1 back out port 3.
    print( switch4.cmd(r'ovs-ofctl add-flow dp4 idle_timeout=0,priority=1,in_port=1,actions=output:4' ) )
    print( switch4.cmd(r'ovs-ofctl add-flow dp4 idle_timeout=0,priority=1,in_port=2,actions=output:4' ) )
    print( switch4.cmd(r'ovs-ofctl add-flow dp4 idle_timeout=0,priority=1,in_port=3,actions=output:4' ) )
    print( switch4.cmd(r'ovs-ofctl add-flow dp4 idle_timeout=0,priority=1,in_port=4,actions=output:3' ) )

    # s0 picks the output port from the TOS value of packets destined for h1.
    #print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.2,actions=output:4') )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.2,nw_tos=0x10,actions=output:2') )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.2,nw_tos=0x20,actions=output:3') )
    print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.2,nw_tos=0x30,actions=output:4') )
    #print( switch0.cmd(r'ovs-ofctl add-flow dp0 idle_timeout=0,priority=10,ip,nw_dst=192.168.123.1,actions=output:1') )

    #switch0.cmd('tcpdump -i s0-eth0 -U -w aaa &')
    #h0.cmd('tcpdump -i h0-eth0 -U -w aaa &')

    info( "*** Running test\n" )
    h0.cmdPrint( 'ping -Q 0x10 -c 3 ' + h1.IP() )
    h0.cmdPrint( 'ping -Q 0x20 -c 3 ' + h1.IP() )
    h0.cmdPrint( 'ping -Q 0x30 -c 3 ' + h1.IP() )

    #h1.cmdPrint('iperf -s -p 12345 -u &')
    #h0.cmdPrint('iperf -c ' + h1.IP() +' -u -b 10m -p 12345 -t 10 -i 1')

    #print( switch0.cmd( 'ovs-ofctl show dp0' ) )
    #print( switch1.cmd( 'ovs-ofctl show dp1' ) )
    #print( switch2.cmd( 'ovs-ofctl show dp2' ) )
    #print( switch3.cmd( 'ovs-ofctl show dp3' ) )
    #print( switch4.cmd( 'ovs-ofctl show dp4' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-tables dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-ports dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-flows dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl dump-aggregate dp0' ) )
    #print( switch0.cmd( 'ovs-ofctl queue-stats dp0' ) )

    #print( "Testing video transmission between h1 and h2" )
    #h1.cmd('./myrtg_svc -u > myrd &')
    #h0.cmd('./mystg_svc -trace st 192.168.123.2')

    info( "*** Stopping network\n" )
    switch0.cmd( 'ovs-vsctl del-br dp0' )
    switch0.deleteIntfs()
    switch1.cmd( 'ovs-vsctl del-br dp1' )
    switch1.deleteIntfs()
    switch2.cmd( 'ovs-vsctl del-br dp2' )
    switch2.deleteIntfs()
    switch3.cmd( 'ovs-vsctl del-br dp3' )
    switch3.deleteIntfs()
    switch4.cmd( 'ovs-vsctl del-br dp4' )
    switch4.deleteIntfs()
    info( '\n' )


if __name__ == '__main__':
    setLogLevel( 'info' )
    info( '*** Scratch network demo (kernel datapath)\n' )
    Mininet.init()
    myNet()
```

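Flow entries on s0:

- Priority 10:

  - IP packets destined for 192.168.123.2 (h1) with TOS 0x10 are sent out port 2.

  - IP packets destined for 192.168.123.2 (h1) with TOS 0x20 are sent out port 3.

  - IP packets destined for 192.168.123.2 (h1) with TOS 0x30 are sent out port 4.

Flow entries on s1:

- Priority 1:

  - Packets arriving on port 1 are flooded. (This entry has the same match and priority as the next one, so the later add-flow command replaces it.)

  - Packets arriving on port 1 are sent out port 2.

  - Packets arriving on port 2 are sent out port 1.

Flow entries on s2:

- Priority 1:

  - Packets arriving on port 1 are sent out port 2.

  - Packets arriving on port 2 are sent out port 1.

Flow entries on s3:

- Priority 1:

  - Packets arriving on port 1 are sent out port 2.

  - Packets arriving on port 2 are sent out port 1.

Flow entries on s4:

- Priority 1:

  - Packets arriving on port 1 are sent out port 4.

  - Packets arriving on port 2 are sent out port 4.

  - Packets arriving on port 3 are sent out port 4.

  - Packets arriving on port 4 are sent out port 3.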
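To see which path each TOS value ends up on, the flow entries can be followed hop by hop. The sketch below does this in plain Python. The `WIRES` table is an assumption: it presumes that OvS numbers each switch's ports in the order the links were created in the script (for example, s0 port 1 is h0 and ports 2 to 4 lead to s1, s2, and s3). `trace` and the table names are hypothetical helpers, and only the h0 to h1 direction is modeled.

```python
# Port wiring, assuming OvS numbers ports in the order the links were created.
# (switch, out_port) -> (next node, in_port on that node)
WIRES = {
    ("s0", 2): ("s1", 1), ("s0", 3): ("s2", 1), ("s0", 4): ("s3", 1),
    ("s1", 2): ("s4", 1), ("s2", 2): ("s4", 2), ("s3", 2): ("s4", 3),
    ("s4", 4): ("h1", None),
}

# s0 chooses the output port from the TOS value of packets destined for h1.
S0_TOS_TO_PORT = {0x10: 2, 0x20: 3, 0x30: 4}

# s1..s4 forward purely on the input port (h0 -> h1 direction only).
IN_TO_OUT = {
    "s1": {1: 2}, "s2": {1: 2}, "s3": {1: 2},
    "s4": {1: 4, 2: 4, 3: 4},
}


def trace(tos):
    """Return the nodes a packet from h0 to h1 visits for a given TOS value."""
    path = ["h0", "s0"]
    node, in_port = WIRES[("s0", S0_TOS_TO_PORT[tos])]
    while node != "h1":
        path.append(node)
        out_port = IN_TO_OUT[node][in_port]
        node, in_port = WIRES[(node, out_port)]
    path.append("h1")
    return path


for tos in (0x10, 0x20, 0x30):
    print("TOS 0x%02x: %s" % (tos, " -> ".join(trace(tos))))
```

Under these assumptions, TOS 0x10 goes through s1, 0x20 through s2, and 0x30 through s3.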

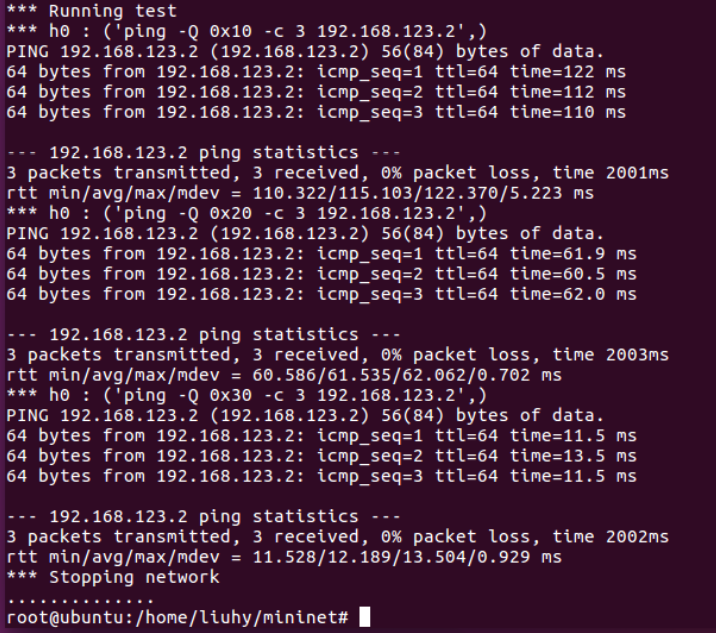

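Because the links in this lab are configured with different bandwidths and delays, the ping round-trip time reveals which path a packet was forwarded along.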
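A rough estimate of the expected round-trip times can be obtained by adding up the configured link delays. The sketch below rests on several assumptions that are not stated in the lab: each link adds its configured delay once per direction, queuing, bandwidth, and processing time are ignored, and every reply returns via s3 (s4 sends traffic from h1 out port 3) and is then delivered to h0 by s0. `estimated_rtt_ms` is a hypothetical helper, and measured values will differ.

```python
# One-way link delays in milliseconds, taken from the linkopts in the script.
FAST, SLOW, MEDIUM = 1, 100, 50

# Forward path per TOS value: per-link delays from h0 to h1.
FORWARD = {
    0x10: [FAST, FAST, SLOW, FAST],    # h0-s0, s0-s1, s1-s4, s4-h1
    0x20: [FAST, FAST, MEDIUM, FAST],  # h0-s0, s0-s2, s2-s4, s4-h1
    0x30: [FAST, FAST, FAST, FAST],    # h0-s0, s0-s3, s3-s4, s4-h1
}

# Assumed return path for every reply: h1-s4, s4-s3, s3-s0, s0-h0.
RETURN = [FAST, FAST, FAST, FAST]


def estimated_rtt_ms(tos):
    """Sum the configured link delays along the forward and return paths."""
    return sum(FORWARD[tos]) + sum(RETURN)


for tos in (0x10, 0x20, 0x30):
    print("TOS 0x%02x: about %d ms" % (tos, estimated_rtt_ms(tos)))
```

The absolute numbers matter less than the ordering: the path through s1 should be clearly slowest and the path through s3 clearly fastest.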

- Screenshot of the result:

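Conclusion

This lab does not connect a controller. Static flow entries installed by the script on one or several switches are enough for the hosts to communicate. In the multi-switch case, ping verifies connectivity between the hosts, and its -Q option sets different TOS values so that both connectivity and the time to reach the destination can be checked. In this topology, a larger TOS value resulted in a shorter time. This is not a property of the TOS value itself: s0 maps each TOS value to a different path, and the paths are configured with different delays and bandwidths, so the times differ.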
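To compare the three runs numerically rather than by eye, the average round-trip time can be pulled out of ping's summary line. The sketch below assumes the summary format printed by the common Linux ping ("rtt min/avg/max/mdev = ... ms"). `parse_avg_rtt` is a hypothetical helper, and `SAMPLE` is a hand-written string in that format, not a measurement from this lab.

```python
import re

# Matches ping's summary line, e.g.
# "rtt min/avg/max/mdev = 1.000/2.500/4.000/1.225 ms"
RTT_LINE = re.compile(
    r"(?:rtt|round-trip) min/avg/max/(?:mdev|stddev) = "
    r"([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def parse_avg_rtt(ping_output):
    """Return the average RTT in milliseconds, or None if no summary is found."""
    match = RTT_LINE.search(ping_output)
    return float(match.group(2)) if match else None


# Hand-written example text in ping's format (not real lab output).
SAMPLE = (
    "3 packets transmitted, 3 received, 0% packet loss, time 2003ms\n"
    "rtt min/avg/max/mdev = 1.000/2.500/4.000/1.225 ms\n"
)

print(parse_avg_rtt(SAMPLE))
```

In the lab script, the string returned by `h0.cmd( 'ping -Q 0x10 -c 3 ' + h1.IP() )` could be passed to this function, once per TOS value.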