https://www.youtube.com/watch?v=fevMOp5TDQs

http://www.denizyuret.com/2015/03/alec-radfords-animations-for.html

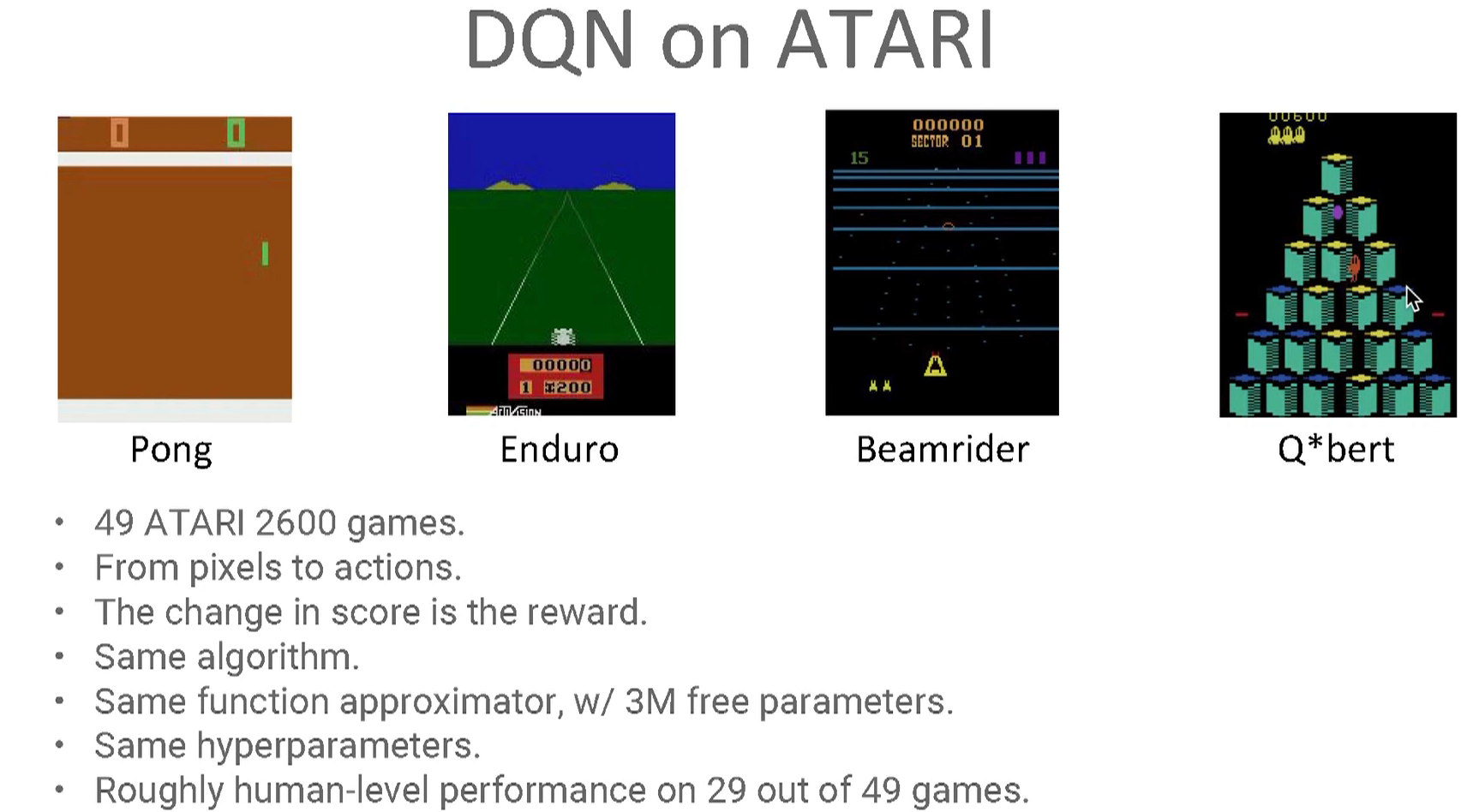

Atari is not strictly an MDP, but MDP methods work well anyway. Alternatively, use an RNN.

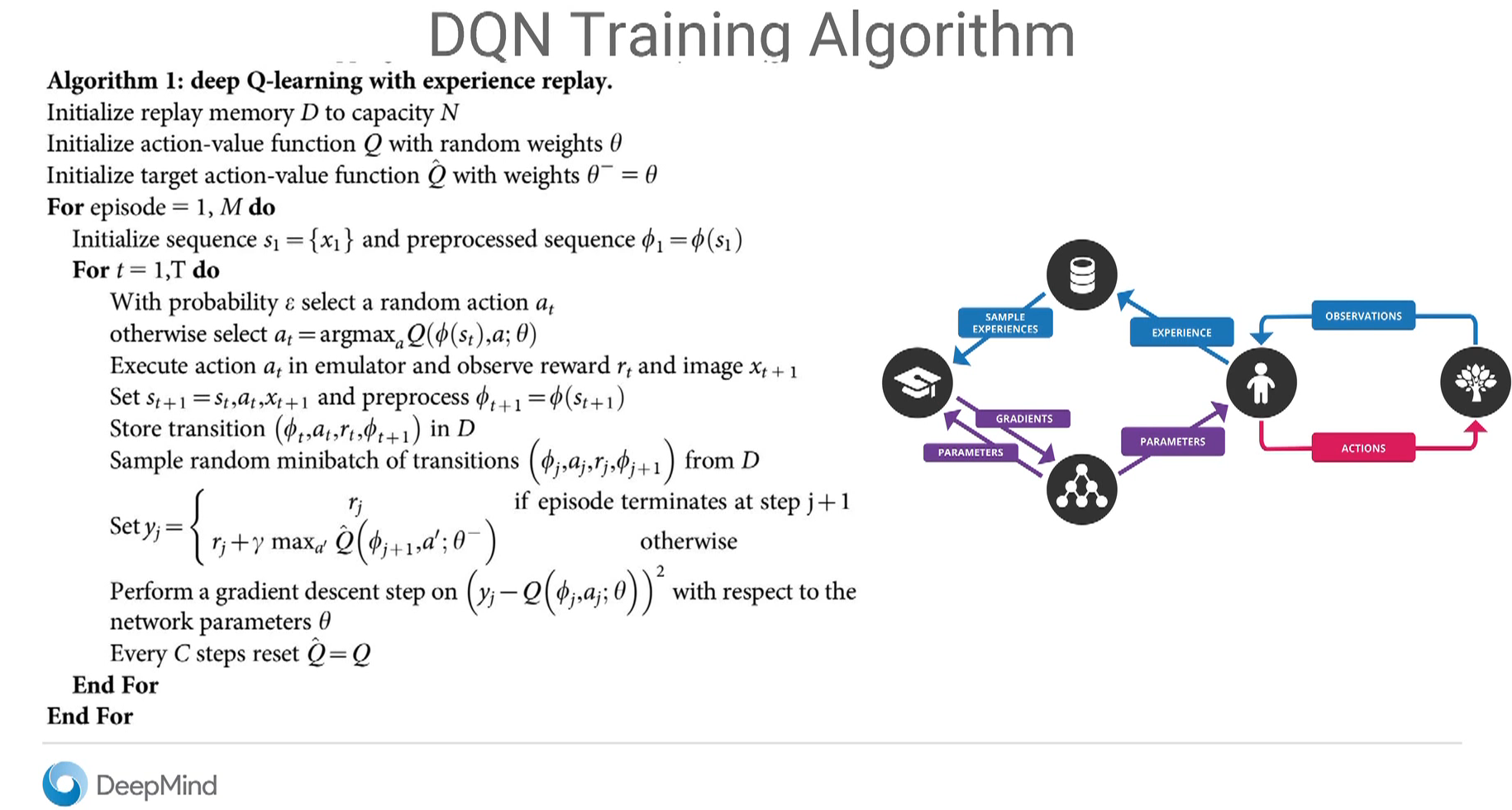

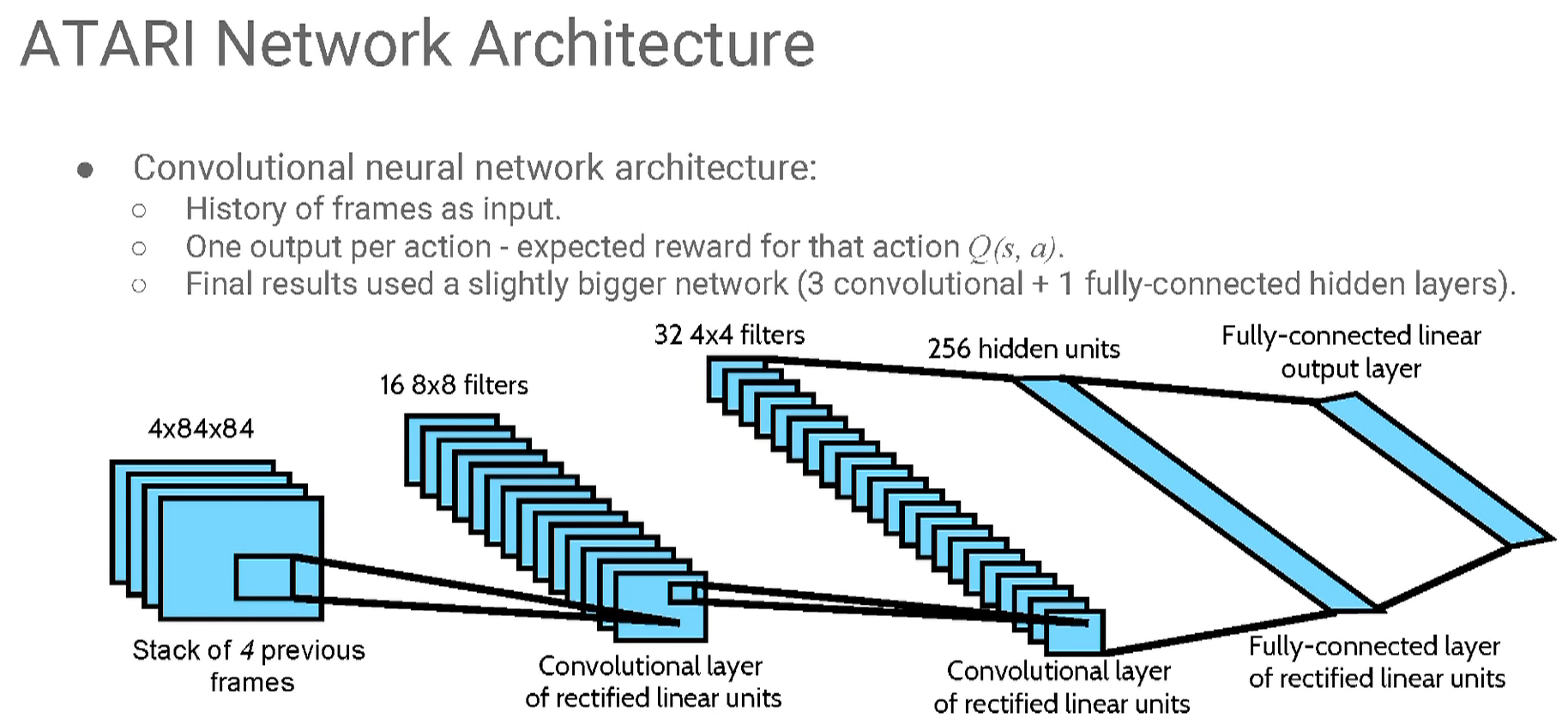

In many domains, people end up using an RNN to represent the Q-function.
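A rough sketch of what "an RNN as the Q-function" can mean: the network keeps a hidden state that summarizes past observations, and the Q-values are read off that hidden state instead of off the current observation alone. Everything here (the class name `RecurrentQ`, the Elman-style update, the sizes, the random untrained weights) is an assumption for illustration, not something taken from the lecture.

```python
import math
import random


class RecurrentQ:
    """Hypothetical Elman-style recurrent Q-function (untrained, illustration only).

    h_t = tanh(W_x @ o_t + W_h @ h_{t-1}),  Q(h_t, a) = (W_q @ h_t)[a]
    """

    def __init__(self, obs_dim, hidden_dim, n_actions, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            # Small random weights; a real agent would learn these.
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.W_x = mat(hidden_dim, obs_dim)
        self.W_h = mat(hidden_dim, hidden_dim)
        self.W_q = mat(n_actions, hidden_dim)
        self.hidden_dim = hidden_dim
        self.reset()

    def reset(self):
        # Clear the memory at the start of each episode.
        self.h = [0.0] * self.hidden_dim

    def step(self, obs):
        # Update the hidden state from the new observation and the old state.
        pre = [
            sum(w * o for w, o in zip(self.W_x[i], obs))
            + sum(w * h for w, h in zip(self.W_h[i], self.h))
            for i in range(self.hidden_dim)
        ]
        self.h = [math.tanh(p) for p in pre]
        # One Q-value per action, computed from the hidden state.
        return [sum(w * h for w, h in zip(row, self.h)) for row in self.W_q]


q_net = RecurrentQ(obs_dim=2, hidden_dim=4, n_actions=3)
q_first = q_net.step([1.0, 0.0])   # Q-values with no history
q_net.step([0.0, 1.0])
q_later = q_net.step([1.0, 0.0])   # same observation, different history
greedy_action = max(range(3), key=lambda a: q_later[a])
```

The same observation gives different Q-values depending on what came before, which is the point of using a recurrent network when a single observation does not determine the state.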

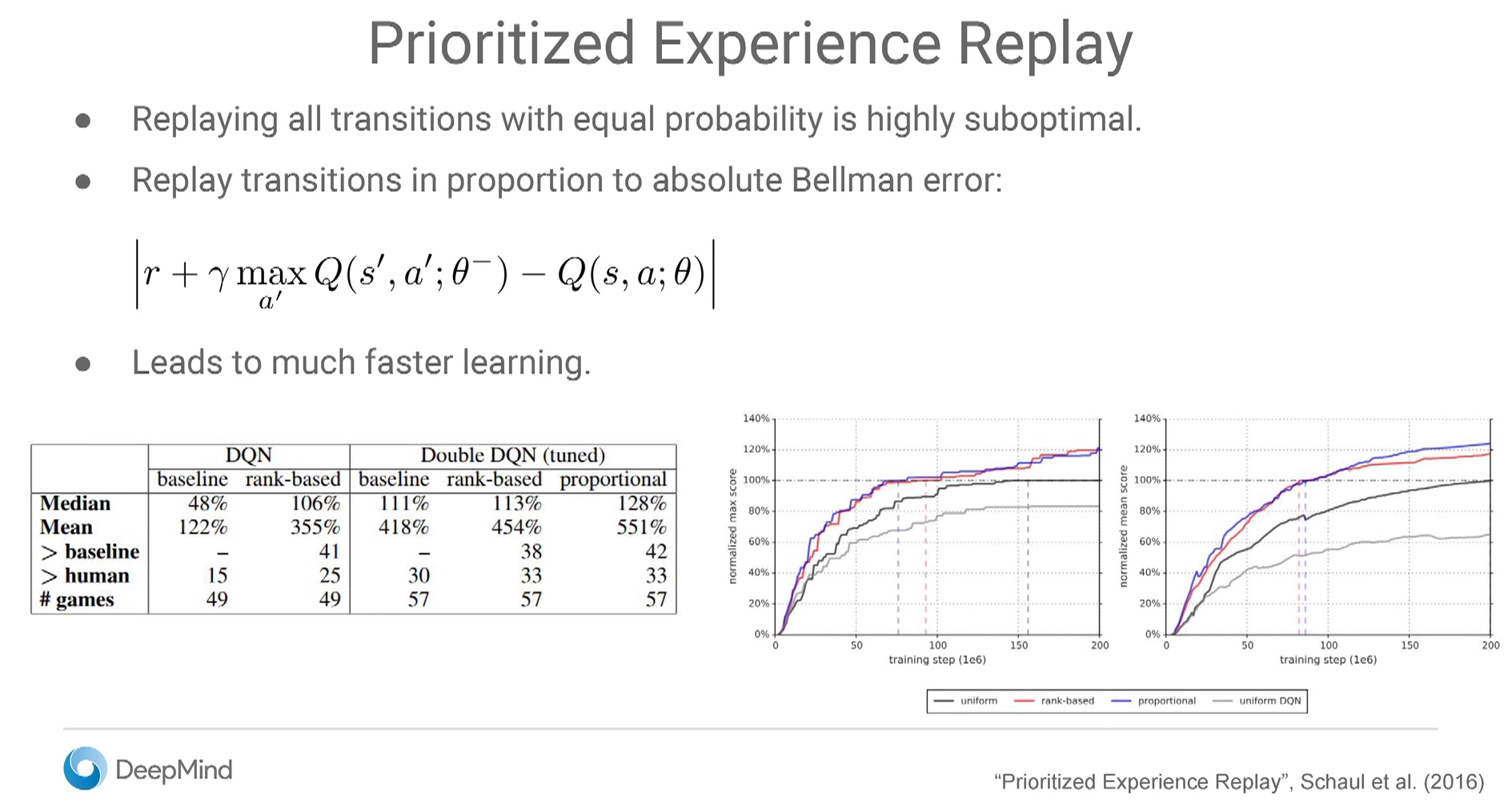

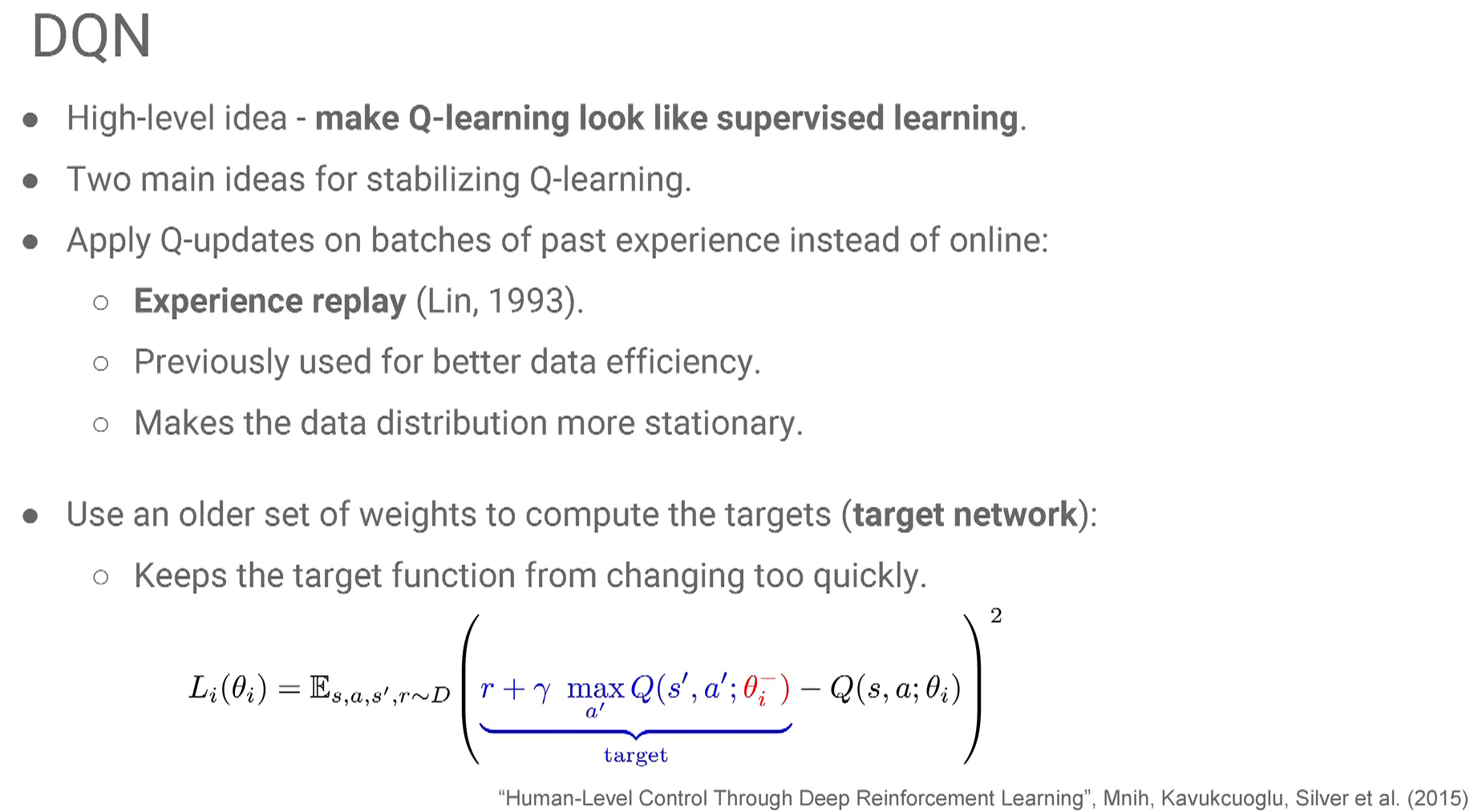

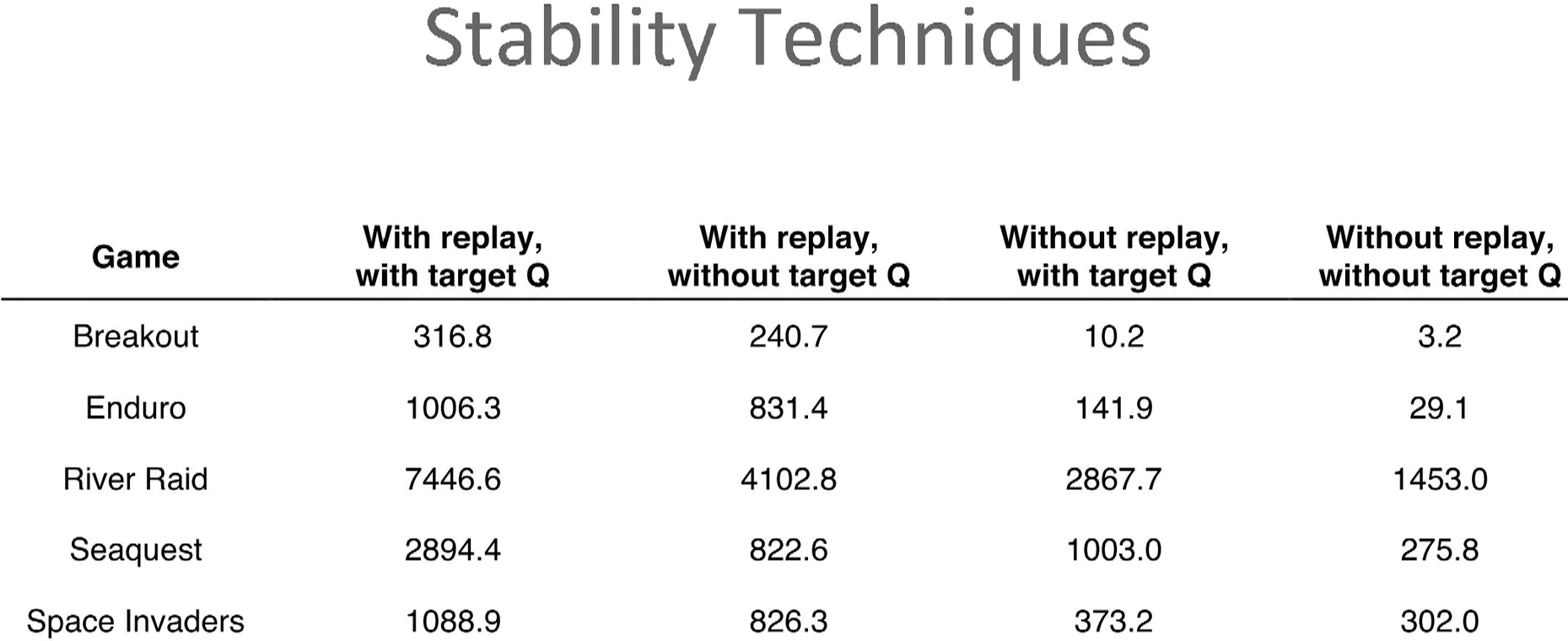

Experience replay really makes a difference!
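A minimal sketch of a replay buffer, assuming the usual setup where transitions are stored as `(state, action, reward, next_state, done)` tuples and minibatches are sampled uniformly at random. The class name `ReplayBuffer` and its methods are hypothetical, chosen here for illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions with uniform random sampling."""

    def __init__(self, capacity, seed=None):
        # deque with maxlen drops the oldest transition once the buffer is full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Sample without replacement, so consecutive (correlated) steps
        # are unlikely to dominate a single minibatch.
        batch = self.rng.sample(list(self.buffer), batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    # Toy transitions: state t, action 0, reward 1.0, next state t + 1.
    buffer.push(t, 0, 1.0, t + 1, False)

states, actions, rewards, next_states, dones = buffer.sample(batch_size=2)
```

Sampling from the buffer instead of training on the most recent transition breaks up the correlation between consecutive steps and lets each transition be reused several times.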

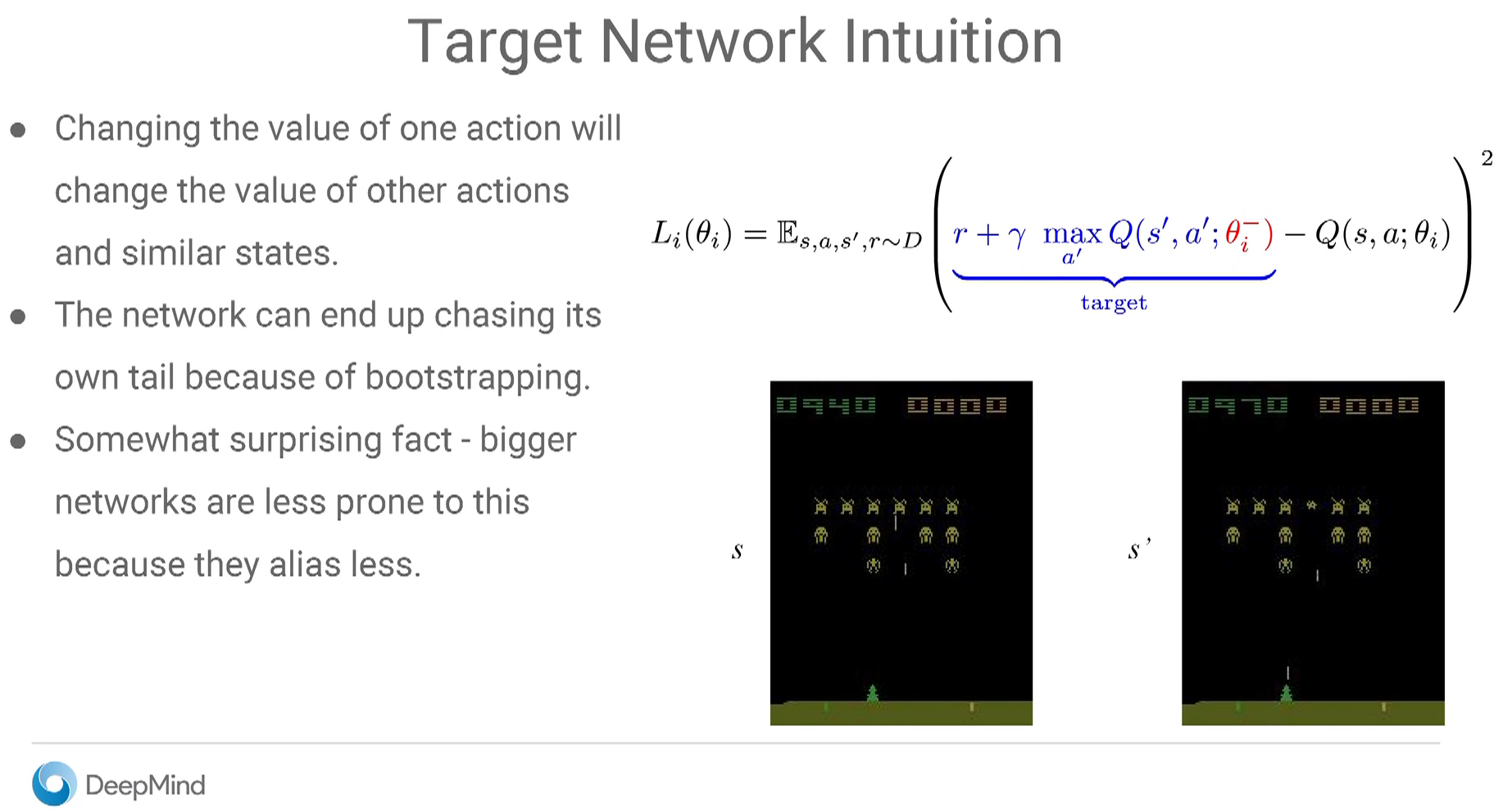

Should the two networks have different sets of hyperparameters, like a group of workers with different personalities? Would that kind of collaboration help?